Introduction

In today's fast-paced digital ecosystem, the performance of your web applications can make the difference between a seamless user experience and a frustrating one. This guide aims to equip you with the knowledge and tools necessary to optimize your Strapi applications for maximum performance, ensuring they can handle increasing loads without compromising on speed or reliability. By the end of this guide, you will have a thorough understanding of key performance factors specific to Strapi, as well as practical steps to optimize and monitor your application's performance through load testing with LoadForge.

Understanding Strapi and Its Importance

Strapi is an open-source, Node.js-based headless CMS that lets developers create and manage content for their applications. Its flexible, customizable architecture makes it a popular choice for building APIs that back web and mobile applications. Those features do not guarantee good performance on their own: high API response times, inefficient database interactions, and poor use of server resources can all create bottlenecks that hurt user satisfaction and limit scalability.

Why Performance Optimization is Crucial

Performance optimization is not just about making your application faster; it's about improving the overall user experience and ensuring your application can handle its intended load. Poor performance can lead to:

- High bounce rates, as users are less likely to stick around if pages load slowly.

- Lower search engine rankings, as page speed is a crucial factor in SEO.

- Increased operational costs due to inefficient server resource usage.

Optimizing your Strapi application ensures a smoother user experience, better search engine rankings, and cost-efficiency.

The Role of Load Testing in Performance Optimization

Load testing is an essential step in the performance optimization process. It involves simulating a high number of users interacting with your application simultaneously to identify how it performs under stress. This helps in:

- Identifying performance bottlenecks, such as slow API response times and database queries.

- Understanding how your application scales under increased load.

- Validating that recent changes or updates do not adversely affect performance.

In this guide, we will employ LoadForge, a powerful load testing tool designed to help developers and DevOps teams assess the resilience of their applications. LoadForge provides comprehensive test scenarios, real-time monitoring, and detailed analysis to ensure your Strapi application can withstand and excel under pressure.

What This Guide Will Cover

This guide is structured to provide a holistic approach to optimizing and load testing your Strapi application. Here's what we will cover in each section:

- Understanding Strapi Performance: Key performance factors specific to Strapi.

- Setting Up Your Strapi Environment: Best practices for server and database configuration.

- Optimizing Strapi with Caching: Implementing effective caching strategies.

- Enhancing Database Performance: Techniques for optimizing database queries and structures.

- Load Testing Basics: Introduction to the fundamentals of load testing.

- Getting Started with LoadForge: Guide to setting up LoadForge for your Strapi application.

- Creating Load Tests for Strapi: Instructions for developing effective load tests.

- Running Your Load Tests: Execution and monitoring of load tests.

- Analyzing Load Test Results: Interpreting results and identifying key performance issues.

- Strapi Performance Tuning Based on Test Results: Actionable fixes and optimizations.

- Continuous Performance Monitoring: Strategies for ongoing monitoring and testing.

- Conclusion: Recap of the key points and the importance of regular performance testing.

By following the steps outlined in this guide, you will be well-equipped to ensure that your Strapi application remains robust, responsive, and scalable, providing a superior user experience consistently. Let’s get started!

Understanding Strapi Performance

When building applications with Strapi, it's crucial to understand the key performance factors that can influence the overall efficiency and responsiveness of your application. In this section, we will delve into the primary aspects that you need to focus on to ensure that your Strapi application performs optimally. These factors include API response times, database interactions, and server resource utilization.

API Response Times

API response time is one of the most critical performance metrics for any web application. It measures the time elapsed between a client's request and the server's response. For Strapi applications, swift API responses are essential to provide a seamless user experience. Several factors contribute to API response times, including:

- Endpoint Complexity: The logic and operations performed in each endpoint can affect the response time. Simplified endpoints usually respond faster.

- Middleware: Middleware that processes requests and responses can add latency. Ensure that only essential middleware is active.

- Data Volume: Large payloads increase the time taken to transmit and process data. Opt for pagination or data filtering to manage large datasets effectively.
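As a rough illustration of the pagination advice above, the helper below builds the URL for one page of results. It assumes the Strapi v3 REST query parameters `_start` and `_limit` (Strapi v4 uses a `pagination[...]` syntax instead); the function name and the `/articles` path are placeholders for this sketch.

```javascript
// Build the URL for one page of results (page numbers start at 1).
// Assumes Strapi v3-style `_start` / `_limit` query parameters.
function buildPageUrl(baseUrl, path, page, pageSize) {
  const url = new URL(path, baseUrl);
  url.searchParams.set('_start', String((page - 1) * pageSize));
  url.searchParams.set('_limit', String(pageSize));
  return url.toString();
}

console.log(buildPageUrl('https://your-strapi-app.com', '/articles', 3, 25));
// https://your-strapi-app.com/articles?_start=50&_limit=25
```

Requesting 25 entries at a time keeps each payload small and predictable, regardless of how large the collection grows.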

Database Interactions

Strapi is often used in conjunction with databases like PostgreSQL or MySQL (and, in Strapi v3, MongoDB). The performance of the database directly affects the performance of your Strapi application. Key considerations for database performance include:

- Query Optimization: Efficient database queries are crucial. Avoid fetching more data than necessary and use indexes to speed up searches.

- Connection Pooling: This technique manages database connections efficiently, reducing the overhead of establishing new connections.

- Data Modeling: Properly design your data models to minimize redundant data and optimize relationships between different entities.

Server Resource Utilization

Ensuring that your server resources are effectively utilized is essential for maintaining good application performance under load. Important factors to monitor include:

- CPU and Memory Usage: High CPU or memory usage can indicate bottlenecks or inefficient code. Regularly monitor these metrics and optimize resource-intensive operations.

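- Concurrency and Processes: Strapi runs on Node.js, which serves concurrent requests from a single-threaded event loop. A single process therefore uses only one CPU core, and long synchronous work blocks every other request. Running several processes (for example with cluster mode or PM2) lets the application use all available cores.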

- Scaling: Depending on the expected load, consider horizontal scaling (adding more servers) or vertical scaling (upgrading server specifications) to maintain performance.
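To make the CPU and memory advice concrete, here is a minimal sketch that takes a resource snapshot using only Node.js built-ins. The function name and the choice of fields are assumptions for illustration; a production setup would normally send these values to a monitoring system rather than print them.

```javascript
const os = require('os');

// Return a snapshot of process and host resource usage.
function resourceSnapshot() {
  const mem = process.memoryUsage();
  return {
    rssMb: Math.round(mem.rss / 1024 / 1024),           // resident memory of this process
    heapUsedMb: Math.round(mem.heapUsed / 1024 / 1024), // JavaScript heap currently in use
    loadAvg1m: os.loadavg()[0],                         // 1-minute load average (always 0 on Windows)
    cpuCount: os.cpus().length,                         // logical CPU cores on the host
  };
}

console.log(resourceSnapshot());
```

Comparing `loadAvg1m` with `cpuCount` gives a quick sense of whether the host is saturated: a load average persistently above the core count suggests CPU contention.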

Example: Monitoring API Response Times

To keep track of API response times in Strapi, you can use middleware to log performance metrics:

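    // Strapi v3-style middleware: logs the duration of every request.
    module.exports = (strapi) => {
      return {
        initialize() {
          strapi.app.use(async (ctx, next) => {
            const start = Date.now();
            await next(); // run the rest of the middleware stack
            const delta = Date.now() - start; // elapsed time in milliseconds
            console.log(`${ctx.method} ${ctx.url} - ${delta}ms`);
          });
        },
      };
    };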

Including this middleware logs the duration of each request, allowing you to identify and troubleshoot slow endpoints.

Conclusion

Understanding these key performance factors is the first step towards optimizing your Strapi application. By focusing on API response times, optimizing database interactions, and effectively managing server resources, you can ensure that your Strapi application remains robust and responsive. In the following sections, we will cover how to set up and optimize your Strapi environment, implement caching, and perform load testing using LoadForge to tackle performance bottlenecks proactively.

Setting Up Your Strapi Environment

Optimizing your Strapi setup is crucial for achieving high performance and reliability. This section will guide you through the best practices for server configuration, middleware usage, and database connections to ensure your Strapi environment is primed for performance.

Server Configuration

Proper server configuration is the foundation of a performant Strapi application. Below are some key guidelines:

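- Node.js Version: Use a stable, up-to-date Node.js release that your Strapi version supports. Strapi is officially supported on Node.js versions 14.x and 16.x for the releases this guide targets; supported versions change between Strapi releases, so confirm them for the release you run.

- Environment Variables: Use environment variables for configuration settings to keep your environments consistent and to keep secrets out of the code base.

- Cluster Mode: Use Node.js cluster mode to run one worker process per CPU core so the application can handle more concurrent requests.

    const cluster = require('cluster');
    const os = require('os');

    // cluster.isPrimary replaces the deprecated cluster.isMaster on Node.js 16+.
    const isPrimary = cluster.isPrimary !== undefined ? cluster.isPrimary : cluster.isMaster;

    if (isPrimary) {
      // Fork one worker per CPU core.
      const numCPUs = os.cpus().length;
      for (let i = 0; i < numCPUs; i++) {
        cluster.fork();
      }
    } else {
      // Each worker starts its own Strapi server.
      require('./server');
    }

- Process Managers: Use a process manager like PM2 to manage your application's lifecycle, monitor resource usage, and automatically restart the application after a failure. PM2's cluster mode (-i max) already starts one process per core, so use it instead of the manual cluster script rather than together with it.

    pm2 start server.js -i max

    # Log rotation comes from the pm2-logrotate module, which must be installed first.
    pm2 install pm2-logrotate
    pm2 set pm2-logrotate:retain 30
    pm2 set pm2-logrotate:compress true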
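The same PM2 settings can be kept in version control as an ecosystem file instead of command-line flags. The sketch below is one possible layout; the process name and the memory limit are placeholder values, not recommendations.

```javascript
// ecosystem.config.js - start with: pm2 start ecosystem.config.js
const ecosystem = {
  apps: [
    {
      name: 'strapi-app',          // placeholder process name
      script: 'server.js',         // same entry point as in the command above
      instances: 'max',            // one process per CPU core
      exec_mode: 'cluster',        // let PM2 load-balance between processes
      max_memory_restart: '512M',  // restart a process that exceeds this memory (assumed limit)
      env: { NODE_ENV: 'production' },
    },
  ],
};

module.exports = ecosystem;
```

Keeping the configuration in a file makes the process count and restart policy reproducible across servers.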

Middleware Usage

Middleware in Strapi can significantly affect performance. Settings in config/middleware.js are keyed by middleware name, so each example below assumes a middleware registered under the name shown (compression, rateLimit, security). Here's how to optimize their use:

- Compression Middleware: Use middleware like koa-compress to reduce the size of your responses, decreasing load times.

    module.exports = {
      settings: {
        compression: {
          enabled: true,
          options: {
            threshold: 2048, // only compress responses larger than 2 KB
          },
        },
      },
    };

- Rate Limiting: Implement rate limiting to protect your API from abuse and keep it responsive for legitimate users.

    module.exports = {
      settings: {
        rateLimit: {
          enabled: true,
          options: {
            interval: 1 * 60 * 1000, // 1 minute
            max: 100, // limit each IP to 100 requests per interval
          },
        },
      },
    };

- Security Middleware: Use security packages like helmet to set HTTP headers for improved security.

    module.exports = {
      settings: {
        security: {
          enabled: true,
          options: {
            helmet: {
              // Disables the Content-Security-Policy header; prefer enabling
              // and configuring it in production where possible.
              contentSecurityPolicy: false,
            },
          },
        },
      },
    };
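To show what the rate-limiting settings above (an interval and a maximum per client) mean in practice, here is a minimal fixed-window limiter. It is a sketch of the general technique, not the implementation of any particular Strapi or Koa package, and `createRateLimiter` is a name invented for this example.

```javascript
// Fixed-window rate limiter: at most `max` requests per `intervalMs` per key.
function createRateLimiter({ intervalMs, max }) {
  const windows = new Map(); // key -> { start, count }

  return function isAllowed(key, now = Date.now()) {
    const current = windows.get(key);
    if (!current || now - current.start >= intervalMs) {
      windows.set(key, { start: now, count: 1 }); // start a new window
      return true;
    }
    current.count += 1;
    return current.count <= max;
  };
}

// Same limits as the configuration above: 100 requests per minute per IP.
const isAllowed = createRateLimiter({ intervalMs: 60 * 1000, max: 100 });
console.log(isAllowed('203.0.113.7')); // true for the first request from this IP
```

This version keeps its counters in process memory, so each process in a cluster counts separately and the map grows with the number of distinct clients. A shared store such as Redis avoids both problems.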

Database Connections

Your choice and configuration of the database can significantly affect the performance of your Strapi application.

- Connection Pooling: Enable connection pooling to reuse database connections, reducing the overhead of establishing new connections.

    pool: {
      min: 2,
      max: 10,
    },

- Optimized Queries: Use the database query builder to write optimized queries and avoid fetching unnecessary data. In Strapi v3 with the Bookshelf connector, strapi.connections.default is the knex instance itself.

    const knex = strapi.connections.default;

    // Select only the columns that are needed, and filter in the database.
    const users = await knex('users')
      .select('name', 'email')
      .where('status', 'active');

- Indexes: Ensure that your database tables have appropriate indexes to speed up query execution. For example, adding an index to the email column in a users table:

    CREATE INDEX idx_users_email ON users(email);

- Database Configuration: Choose the right database and ensure it's configured for performance. For instance, when using PostgreSQL, tune memory and connection settings in postgresql.conf (such as shared_buffers, work_mem, and max_connections) for performance, and restrict client access in pg_hba.conf for security.

By adhering to these guidelines, you'll lay a robust foundation for a high-performing Strapi application, ready to be further optimized and load-tested using LoadForge. These configurations ensure that your Strapi setup is not only performant but also scalable and secure.

Optimizing Strapi with Caching

In a Strapi application, effective caching can drastically reduce database load and improve API response times. By storing frequently accessed data in a cache, you can serve repeated queries directly from the in-memory cache instead of querying the database each time. This section covers tips and strategies for implementing caching within your Strapi application to enhance performance.

1. Understanding Caching Strategies

Before diving into implementation, it's essential to understand the different types of caching strategies and when to use them:

- In-Memory Caching: Stores data in the memory (RAM) for extremely fast access. Ideal for session data or frequently queried results.

- Database Query Caching: Caches the results of database queries to avoid re-execution of the same queries.

- Content Delivery Network (CDN) Caching: Caches static assets and API responses at the network edge to reduce server load and improve response times globally.

2. Implementing In-Memory Caching with Redis

Redis is a popular choice for in-memory caching and can be easily integrated with Strapi. Follow these steps to add Redis caching:

- Install the Redis Client: This installs the Node.js client only; a Redis server must be running separately. The code below uses the callback-based API of version 3 of the redis package.

    npm install redis@3

- Configure the Redis Client: Create a separate module such as lib/redis.js (a path chosen for this example) and set up the Redis client there. Do not use config/database.js for this, because that file holds Strapi's database configuration.

    const redis = require('redis');

    const client = redis.createClient({
      host: '127.0.0.1',
      port: 6379,
    });

    client.on('error', (err) => {
      console.error('Redis error:', err);
    });

    module.exports = client;

- Create the Caching Middleware: Create a custom middleware in middlewares/cache/index.js:

    const { promisify } = require('util');
    const client = require('../../lib/redis');

    // redis@3 is callback-based, so wrap get() for use with await.
    const getAsync = promisify(client.get).bind(client);

    module.exports = (strapi) => ({
      initialize() {
        strapi.app.use(async (ctx, next) => {
          // Cache GET requests only. The key ignores the Authorization
          // header, so apply this to public content only.
          if (ctx.method !== 'GET') return next();

          const cacheKey = ctx.request.url;
          const cachedData = await getAsync(cacheKey);
          if (cachedData) {
            ctx.body = JSON.parse(cachedData);
            return;
          }

          await next();

          if (ctx.response.status === 200) {
            client.setex(cacheKey, 3600, JSON.stringify(ctx.body)); // Cache for 1 hour
          }
        });
      },
    });

- Apply the Middleware: Enable the middleware in config/middleware.js and load it before the router so that cached responses can short-circuit the request:

    module.exports = {
      load: {
        before: ['responseTime', 'logger', 'cache'],
        after: ['parser', 'router'],
      },
      settings: {
        cache: {
          enabled: true,
        },
      },
    };

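3. Using a Ready-Made Response Cache

Strapi core does not ship a response cache of its own. Community packages such as strapi-middleware-cache add one, using an in-memory LRU cache by default with Redis as an option. A package like this takes the place of the hand-written middleware from the previous step rather than running alongside it.

- Enable Response Caching: After installing the package, edit config/middleware.js to enable it. The option names below are illustrative; check the package's README for the exact names and units your version expects.

    module.exports = {
      settings: {
        cache: {
          enabled: true,
          type: 'memory', // You can choose 'redis' to use Redis
          max: 1000,
          ttl: 3600,
        },
      },
    };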

4. Using CDN for Static Asset Caching

For static assets like images, CSS, and JavaScript files, using a CDN can offload the delivery from your server and significantly improve load times. Popular CDNs include Cloudflare, AWS CloudFront, and Fastly.

Best Practices for Effective Caching

- Cache Invalidation: Ensure you have a strategy for cache invalidation to avoid stale data. Use expiration times (TTL) judiciously.

- Granular Caching: Cache at appropriate granularities—avoid over-caching dynamic content.

- Cache Monitoring: Regularly monitor your cache hit/miss ratio to ensure caching is effective and make adjustments as necessary.

- Security: Ensure sensitive data isn't cached inadvertently, leading to potential data breaches.
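The practices above (TTL-based expiry, explicit invalidation, and hit/miss monitoring) can be seen together in a small in-memory cache. This is a teaching sketch under the assumption of a single process; the class name `TtlCache` and its methods are invented for this example and are not part of Strapi or Redis.

```javascript
// Minimal in-memory cache with per-entry TTL and hit/miss counters.
class TtlCache {
  constructor(ttlMs) {
    this.ttlMs = ttlMs;
    this.entries = new Map(); // key -> { value, expiresAt }
    this.hits = 0;
    this.misses = 0;
  }

  get(key, now = Date.now()) {
    const entry = this.entries.get(key);
    if (entry && entry.expiresAt > now) {
      this.hits += 1;
      return entry.value;
    }
    this.entries.delete(key); // drop an expired entry (no-op if missing)
    this.misses += 1;
    return undefined;
  }

  set(key, value, now = Date.now()) {
    this.entries.set(key, { value, expiresAt: now + this.ttlMs });
  }

  // Explicit invalidation, e.g. after the underlying content is updated.
  invalidate(key) {
    this.entries.delete(key);
  }

  hitRatio() {
    const total = this.hits + this.misses;
    return total === 0 ? 0 : this.hits / total;
  }
}

const cache = new TtlCache(60 * 1000);
cache.set('/articles', [{ id: 1 }]);
cache.get('/articles'); // hit
cache.get('/users');    // miss
console.log(cache.hitRatio()); // 0.5
```

A persistently low hit ratio usually means the TTL is too short or the cache keys are too specific for the traffic pattern.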

By applying these caching strategies, you can significantly enhance the performance of your Strapi application, leading to faster response times and a better user experience. In the next sections, we will focus on more advanced performance enhancements and how to measure their effectiveness using LoadForge.

Enhancing Database Performance

When it comes to optimizing Strapi applications, database performance plays a crucial role. Efficient interactions with the database can significantly improve response times, reduce server load, and provide a smoother experience for end-users. In this section, we'll explore best practices for database optimization in the context of Strapi, focusing on indexing, query optimization, and connection pooling.

Indexing

Indexes are essential for improving the speed of data retrieval operations on your database. By creating indexes on columns that are frequently queried, you can drastically reduce the time it takes to execute those queries. Here's how you can effectively use indexing in Strapi:

- Identify Frequently Queried Fields: Determine which fields are most commonly used in queries, especially those used in filter conditions or sorting.

- Create Indexes: Use your database management tools or SQL statements to create indexes on these fields. For example, in a PostgreSQL database, you can create an index with the following command:

    CREATE INDEX idx_name ON table_name (column_name);

- Compound Indexes: Where applicable, create compound indexes on multiple columns that are often queried together.

- Monitor Index Usage: Use performance monitoring tools to ensure that your indexes are being used effectively and adjust them as needed.

Query Optimization

Optimizing your queries is another critical aspect of database performance. Inefficient queries can lead to slow response times and increased server load:

- Analyze Queries: Use the EXPLAIN statement in PostgreSQL or MySQL to analyze your queries and understand their execution plans.

    EXPLAIN SELECT * FROM table_name WHERE column_name = 'value';

- Optimize Query Structure:

  - Avoid SELECT *; instead, specify only the columns you need.

  - Use joins wisely and ensure that joined columns are indexed.

  - Minimize the use of subqueries, replacing them with joins or other techniques where possible.

- Caching Queries: Implement caching mechanisms for frequently executed queries to reduce database load. A response cache such as the one described in the caching section can store results in memory or in an external store such as Redis.

Connection Pooling

Efficient management of database connections can prevent resource exhaustion and ensure that your Strapi application performs well under load:

- Configure Connection Pooling: Use connection pooling to manage and reuse database connections efficiently. This reduces the overhead of establishing connections repeatedly.

- Adjust Pool Size: Configure the pool size according to your application's needs and the capacity of your database server. Strapi uses the knex.js library for database connections, and you can configure the pool settings in your database.js configuration file.

    // config/database.js
    module.exports = ({ env }) => ({
      defaultConnection: 'default',
      connections: {
        default: {
          connector: 'bookshelf',
          settings: {
            client: 'postgres',
            host: env('DATABASE_HOST', 'localhost'),
            port: env.int('DATABASE_PORT', 5432),
            database: env('DATABASE_NAME', 'strapi'),
            username: env('DATABASE_USERNAME', 'strapi'),
            password: env('DATABASE_PASSWORD', 'strapi'),
            ssl: env.bool('DATABASE_SSL', false),
          },
          options: {
            // Keep at least 2 connections open and never open more than 10.
            pool: {
              min: 2,
              max: 10,
            },
          },
        },
      },
    });

- Monitor Connection Usage: Use database monitoring tools to keep an eye on connection usage and adjust pool settings as necessary for optimal performance.
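When several Strapi processes share one database, the pool size multiplies: every process opens up to its own pool maximum. The sketch below shows the arithmetic for choosing a safe per-process maximum. The function name and the default of 5 reserved connections are assumptions for illustration.

```javascript
// Largest pool.max each app process can use without exceeding the
// database's connection limit. `reserved` leaves room for admin tools,
// migrations, and monitoring connections.
function maxPoolPerProcess(dbMaxConnections, processCount, reserved = 5) {
  const available = dbMaxConnections - reserved;
  return Math.max(1, Math.floor(available / processCount));
}

// Example: a database limit of 100 connections shared by 8 Strapi processes.
console.log(maxPoolPerProcess(100, 8)); // 11
```

With a pool maximum of 10 per process, as in the configuration above, eight processes would use at most 80 connections and stay under a limit of 100.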

By focusing on these best practices—indexing, query optimization, and connection pooling—you can significantly enhance the database performance of your Strapi application. This not only results in faster response times and improved user experience but also ensures that your application can handle increased load more effectively.

Load Testing Basics

Introduction to Load Testing

Load testing is the process of subjecting a system to a simulated load, or demand, to measure its performance and behavior under various conditions. This practice is essential for understanding the capability of your Strapi application, ensuring it can handle expected user loads without compromising on performance or reliability. It helps developers and system administrators identify bottlenecks, optimize resource utilization, and maintain a seamless user experience.

Why Load Testing is Important

In the context of a Strapi application, load testing plays a vital role for several reasons:

- Performance Verification: It validates that your application can meet performance expectations under peak usage.

- Scalability Assessment: It helps determine how well your application scales with increasing user loads.

- Bottleneck Identification: It exposes weak points in your setup, whether they are in your Strapi instance, database layer, or server configuration.

- User Experience Assurance: It ensures your application delivers a smooth and fast user experience, even during high traffic periods.

Identifying Performance Bottlenecks in Strapi

When load testing your Strapi application, the primary focus is to identify and address performance bottlenecks. These bottlenecks can manifest in various forms, including:

- API Response Times: Slow responses can frustrate users and degrade the overall experience. Load testing helps pinpoint slow endpoints.

- Database Interactions: Inefficient queries or unoptimized schemas can slow down the application. Monitoring database performance during load tests can highlight such issues.

- Server Resource Utilization: CPU, memory, and network bandwidth usage under load can indicate resource constraints. Load testing allows you to monitor resource consumption and identify potential scaling needs.

By running comprehensive load tests, you can gather valuable data to understand where your Strapi application stands in terms of performance and reliability. This data serves as the foundation for further optimization efforts.

Example Load Test Scenario

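Here's an illustrative scenario definition for load testing a Strapi application. It is a generic description of the test parameters rather than LoadForge's own file format (LoadForge test scripts are based on Locust):

    const scenarios = [
      {
        name: "API Load Test",
        description: "Simulating user interaction with public API",
        method: "GET",
        endpoint: "/products",
        headers: {
          "Content-Type": "application/json"
        },
        expectedStatus: 200,
        users: 100,      // number of simulated users
        rampUpTime: 60   // seconds taken to reach the full user count
      }
    ];

    module.exports = scenarios;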

In this example, we simulate 100 users making GET requests to the /products endpoint over a ramp-up period of 60 seconds. This test helps identify how the /products API endpoint performs under concurrent access.
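To see what a 60-second ramp-up to 100 users implies at each point in the test, the sketch below computes the number of active users over time. It assumes a linear ramp, which is a simplification; real tools may add users in steps.

```javascript
// Number of simulated users active `elapsedSeconds` into a linear ramp-up.
function activeUsers(elapsedSeconds, totalUsers, rampUpSeconds) {
  if (elapsedSeconds <= 0) return 0;
  if (elapsedSeconds >= rampUpSeconds) return totalUsers;
  return Math.floor((elapsedSeconds / rampUpSeconds) * totalUsers);
}

for (const t of [0, 15, 30, 60]) {
  console.log(`${t}s: ${activeUsers(t, 100, 60)} users`);
}
// 0s: 0 users
// 15s: 25 users
// 30s: 50 users
// 60s: 100 users
```

Because the load grows gradually, response times observed early in the test reflect a lightly loaded system; only measurements taken after the ramp-up describe behavior at the full user count.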

Conclusion

Load testing is a critical aspect of ensuring your Strapi application can meet user demands and provide a robust experience. By understanding the concept of load testing, recognizing its importance, and knowing how it can uncover performance bottlenecks, you can take proactive steps to optimize and scale your application effectively. The following sections will delve into specific strategies and tools, including how to set up and execute load tests with LoadForge, to help you make your Strapi application resilient and responsive.

Getting Started with LoadForge

In this section, we'll walk you through the essential steps to get started with LoadForge for load testing your Strapi application. We'll cover everything from creating an account to setting up the necessary tools, and introduce you to the key elements of the LoadForge interface.

Step 1: Create Your LoadForge Account

The first step in leveraging LoadForge for load testing is to create an account. Follow these steps:

- Visit the LoadForge Website: Navigate to the LoadForge website and click on the "Sign Up" button.

- Register for an Account: Fill in the required details such as your name, email address, and password.

- Verify Your Email: Check your email inbox for a verification link from LoadForge. Click the link to activate your account.

Step 2: Install Necessary Tools

After setting up your account, you need to install the necessary tools to interact with LoadForge. This typically involves setting up the LoadForge CLI and any required dependencies:

- Install Node.js: Ensure you have Node.js installed on your machine as LoadForge tools are Node.js-based. Verify installation by running:

    node -v

  You should see the version number if Node.js is installed.

- Install LoadForge CLI: Use npm (Node Package Manager) to install the LoadForge CLI:

    npm install -g loadforge-cli

- Verify Installation: After installation, verify the LoadForge CLI installation:

    loadforge -V

  This should return the version of the LoadForge CLI you've installed.

Step 3: LoadForge Interface Overview

It's essential to get familiar with the LoadForge interface for an efficient load testing experience. The LoadForge interface consists of the following main sections:

- Dashboard: Provides a summary of your recent activity, active tests, and quick links to important features.

- Projects: Organize your load tests into projects for better management. Create new projects to categorize tests based on different Strapi applications or environments.

- Tests: This section lists all the created load tests. You can create, configure, and execute your load tests from here.

- Reports: Detailed reports and analytics of executed load tests are available here. This is crucial for analyzing test results and identifying performance bottlenecks.

Step 4: Set Up Your First Project

- Create a New Project:

  - Navigate to the "Projects" section and click on the "Create New Project" button.

  - Enter a name and description for your project and click "Save".

- Add Load Tests to Your Project:

  - Within your project, click on "Add New Test".

  - Follow the prompts to configure the basic settings for your test, such as URL, number of users, duration, and ramp-up time.

Step 5: Configure LoadForge CLI for Project Management

LoadForge CLI enables you to manage and run load tests directly from your terminal. Here’s a quick setup guide:

- Authenticate CLI with Your Account:

    loadforge login

  Enter your LoadForge account credentials to authenticate.

- Initialize a Project:

    loadforge init

  This command initializes a new project configuration in the current directory.

- Configure the Load Test: Create a file named loadtest.json to define your load test parameters:

    {
      "url": "https://your-strapi-app.com/api/endpoint",
      "users": 100,
      "duration": 300,
      "rampUp": 60
    }

Step 6: Running Your First Load Test

Run your configured test using the LoadForge CLI:

loadforge run loadtest.json

Monitor the progress and results of your test in real-time through the LoadForge dashboard.

By following these steps, you will have successfully set up LoadForge and configured your first load test for your Strapi application. This foundation prepares you for identifying and addressing performance issues, ensuring a smooth and robust user experience.

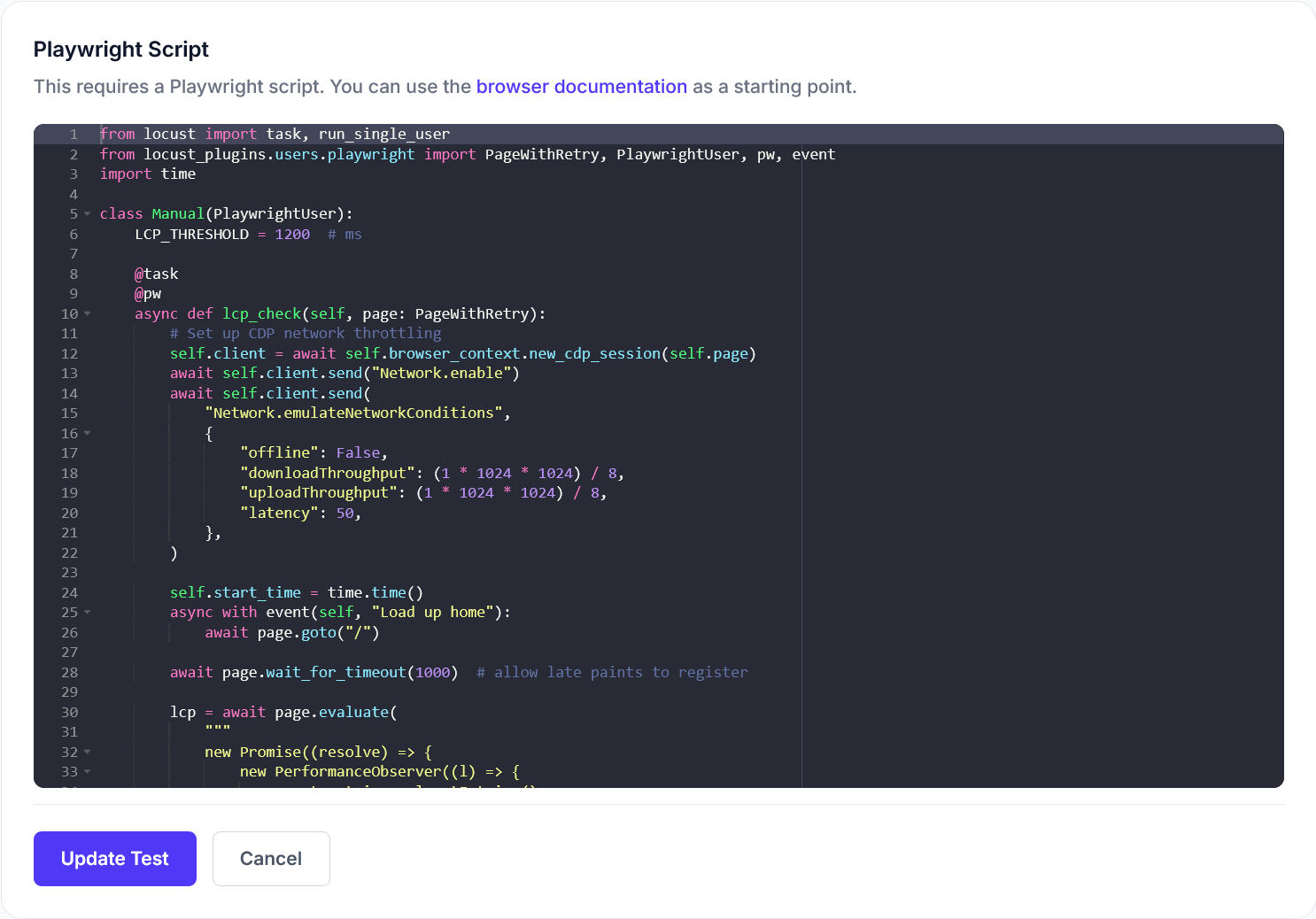

Creating Load Tests for Strapi

Creating effective load tests for your Strapi application using LoadForge involves setting up scenarios that accurately simulate the expected usage patterns. This section provides detailed instructions on how to tailor your load tests specifically for Strapi, ensuring comprehensive performance evaluation. Below are the steps to create, configure, and optimize your load tests.

Step 1: Define Your Test Scenarios

First, outline the key scenarios you want to test. These scenarios should represent typical interactions within your Strapi application, such as:

- Fetching a list of content entries.

- Creating a new content entry.

- Updating an existing content entry.

- Deleting a content entry.

- Performing user authentication.

Step 2: Choose Appropriate Test Parameters

Determine the parameters for each test scenario, including:

- Number of Concurrent Users: Decide how many users will be simulated concurrently.

- Test Duration: Set the duration for how long each test scenario will run.

- Request Frequency: Define how frequently requests will be made during the test.

For example:

- Concurrent Users: 100

- Test Duration: 30 minutes

- Request Frequency: 5 requests per second
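It helps to work out what these example parameters add up to before running anything. The sketch below treats "5 requests per second" as the aggregate rate across all users, which is an assumption; if it were meant per user, 100 users would produce 500 requests per second instead.

```javascript
// Total requests sent during a test, for an aggregate request rate.
function totalRequests(requestsPerSecond, durationMinutes) {
  return requestsPerSecond * durationMinutes * 60;
}

// Average interval between requests for each user needed to reach an
// aggregate rate, ignoring response time: users / rate.
function secondsBetweenRequestsPerUser(users, requestsPerSecond) {
  return users / requestsPerSecond;
}

console.log(totalRequests(5, 30));                  // 9000
console.log(secondsBetweenRequestsPerUser(100, 5)); // 20
```

Under this reading, the example test sends 9,000 requests in total and each simulated user issues one request roughly every 20 seconds.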

Step 3: Configure LoadForge for Strapi

Log into your LoadForge account and follow these steps to set up your load tests:

- Create a New Test: Click on "Create Test" and provide a name and description for your test.

- Add Scenarios: Define the scenarios outlined in Step 1.

- Set Parameters: Enter the parameters decided in Step 2, such as the number of concurrent users and the test duration.

Step 4: Define API Endpoints and Payloads

Specify the API endpoints for each scenario and the corresponding request payloads. This ensures the load test accurately simulates real interactions with your Strapi application. Below is an example of a POST request for creating a new content entry:

    POST /articles

    {
      "title": "Load Testing Strapi Applications",
      "content": "This is a test article created during load testing.",
      "published": true
    }
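Sending the identical payload on every request can give misleading results, for example through duplicate-title validation or caching. A hedged sketch of a generator that varies the payload per request is shown below; the field names follow the example payload above, while the function name and the numbering scheme are assumptions.

```javascript
// Build a distinct article payload for each simulated request.
function makeArticlePayload(index) {
  return {
    title: `Load Testing Strapi Applications #${index}`,
    content: `This is test article ${index} created during load testing.`,
    published: index % 2 === 0, // alternate between published and draft entries
  };
}

console.log(JSON.stringify(makeArticlePayload(1)));
```

Remember to clean up the entries created this way after the test, or run write-heavy scenarios against a staging environment.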

Step 5: Implement Authentication (If Required)

If your Strapi application uses authentication, ensure your load tests include appropriate headers and tokens. Here’s an example of how to add an authorization header in LoadForge:

    GET /users/me

    Headers:

    {
      "Authorization": "Bearer YOUR_ACCESS_TOKEN"
    }
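The access token itself has to come from somewhere. One possible way to obtain it, sketched below, is to log in through Strapi's local authentication endpoint before the test starts. The sketch assumes the Strapi v3 path `/auth/local` (Strapi v4 prefixes it with `/api`) and the global `fetch` available in Node.js 18 and later; the function names are invented for this example.

```javascript
// Log in against Strapi's local auth endpoint and return the JWT.
// `fetchImpl` defaults to the global fetch available in Node.js 18+.
async function getAccessToken(baseUrl, identifier, password, fetchImpl = fetch) {
  const response = await fetchImpl(`${baseUrl}/auth/local`, {
    method: 'POST',
    headers: { 'Content-Type': 'application/json' },
    body: JSON.stringify({ identifier, password }),
  });
  if (!response.ok) {
    throw new Error(`Login failed with status ${response.status}`);
  }
  const data = await response.json();
  return data.jwt;
}

// Build the header object used by authenticated requests.
function authHeaders(token) {
  return { Authorization: `Bearer ${token}` };
}
```

Use a dedicated test account for this, and fetch the token once per simulated user rather than once per request so that the login endpoint does not dominate the test.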

Step 6: Incorporate Think Times

In a real-world scenario, users take some time to read and interact with the content before making another request. To replicate this, introduce "think times" within your test scenarios. Think times are pauses between requests that make the load test more realistic.
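As a minimal sketch of the idea, the helpers below pause for a random interval between consecutive requests. The function names and the uniform random distribution are assumptions; load testing tools usually offer their own wait-time settings that serve the same purpose.

```javascript
// Resolve after `ms` milliseconds.
const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

// Pick a random think time, uniformly distributed between minMs and maxMs.
function randomThinkTime(minMs, maxMs) {
  return minMs + Math.random() * (maxMs - minMs);
}

// Run a list of async request functions in order, pausing after each one.
async function runWithThinkTime(requests, minMs, maxMs) {
  const results = [];
  for (const request of requests) {
    results.push(await request());
    await sleep(randomThinkTime(minMs, maxMs));
  }
  return results;
}
```

Randomizing the pause avoids all simulated users sending requests in lockstep, which would create artificial traffic spikes.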

Step 7: Validate Your Load Test Configurations

Before running your load tests, validate your configurations to ensure everything is set up correctly. Verify the API endpoints, payloads, headers, and parameters. This prevents errors and ensures the load tests provide meaningful results.
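Part of this validation can be automated. The sketch below checks a configuration object for common mistakes before a test is launched. The field names follow the loadtest.json example used earlier in this guide; the function itself and its rules are assumptions for illustration.

```javascript
// Return a list of problems found in a load test configuration object.
function validateLoadTestConfig(config) {
  const problems = [];
  try {
    const url = new URL(config.url);
    if (!['http:', 'https:'].includes(url.protocol)) {
      problems.push('url must use http or https');
    }
  } catch (err) {
    problems.push('url is missing or not a valid URL');
  }
  for (const field of ['users', 'duration', 'rampUp']) {
    if (!Number.isInteger(config[field]) || config[field] <= 0) {
      problems.push(`${field} must be a positive integer`);
    }
  }
  if (config.rampUp > config.duration) {
    problems.push('rampUp should not exceed duration');
  }
  return problems;
}

console.log(validateLoadTestConfig({
  url: 'https://your-strapi-app.com/api/endpoint',
  users: 100,
  duration: 300,
  rampUp: 60,
})); // []
```

An empty list means the basic checks passed; it does not confirm that the endpoint exists or that the credentials are valid, so a single manual request is still worthwhile.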

Summary

By carefully defining test scenarios, choosing appropriate parameters, and accurately replicating user interactions with your Strapi application's API, you can create effective load tests using LoadForge. These tests will help identify performance bottlenecks and areas for optimization in your Strapi application.


Running Your Load Tests

In this section, we'll walk through the steps to execute load tests using LoadForge and monitor the results in real-time. We'll also provide tips to ensure your tests reflect realistic usage patterns, ensuring meaningful and actionable insights.

Executing Load Tests with LoadForge

- Login to LoadForge: Begin by logging into your LoadForge account. If you haven't created an account yet or set up the necessary tools, refer to the "Getting Started with LoadForge" section of this guide.

- Create a New Load Test: Navigate to the Load Tests section and click on the "Create Load Test" button. You'll be prompted to fill in details such as:

  - Test Name: Give your test a meaningful name.

  - Target URL: Enter the base URL of your Strapi application.

  - Method: Select the HTTP method (e.g., GET, POST).

  - Headers: Add any necessary headers, such as authorization tokens or custom headers.

- Configure Test Scenarios:

  - Users and Ramping: Define the number of virtual users and how the load should ramp up. For example, you might start with 10 users and increase up to 1000 users over a defined period.

  - Duration: Set the total duration of the test. A common practice is to run a test for at least 30 minutes to an hour to capture meaningful data.

  - Endpoints and Payload: Specify the endpoints to hit and include any necessary request payloads for POST/PUT requests.

  Example configuration:

    {
      "test_name": "Strapi API Stress Test",
      "target_url": "https://your-strapi-app.com/api",
      "method": "GET",
      "headers": {
        "Authorization": "Bearer your-token",
        "Content-Type": "application/json"
      },
      "users": 100,
      "ramp_up": "5m",
      "duration": "1h",
      "endpoints": [
        "/articles",
        "/users",
        "...other endpoints..."
      ]
    }

- Advanced Settings (Optional): LoadForge provides advanced settings for more granular control over your tests, such as defining custom scripts for more complex scenarios. Refer to the LoadForge documentation for additional options.

Monitoring Real-time Results

Once your test is running, LoadForge offers a real-time dashboard to monitor various metrics. Here’s what you should keep an eye on:

- Response Times: Average, median, P90, and P99 response times give insights into the performance under load.

- Request Rates: Monitor the requests per second (RPS) to ensure your application handles the intended load.

- Error Rates: Track the percentage of HTTP errors to identify failure points.

- Server Metrics: CPU, memory, and other server metrics can help diagnose resource bottlenecks.

Ensuring Realistic Load Patterns

To simulate realistic usage patterns and get the most accurate results, consider the following tips:

- Mixed Traffic Patterns: Include a diverse set of endpoints in your test scenario to mimic real-world usage.

- Dynamic Data: Use dynamic data where applicable to avoid caching anomalies. For example, vary the payload data in POST requests.

- Think Times: Incorporate think times between requests to simulate human interaction, preventing the test from hitting your server with constant requests.

Example with think time:

{
  "test_name": "Strapi API Usage Pattern",
  "target_url": "https://your-strapi-app.com/api",
  "method": "GET",
  "users": 100,
  "ramp_up": "5m",
  "duration": "1h",
  "think_time": "2s",
  "endpoints": [
    "/articles",
    "/users",
    "...other endpoints..."
  ]
}
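
Mixed traffic and think times can also be expressed in code. The sketch below is one possible approach, assuming a weighted endpoint mix and a uniformly distributed pause; the weights, `pickEndpoint`, and `thinkTimeMs` are hypothetical names chosen for illustration.

```javascript
// Weighted endpoint mix: weights are illustrative, not measured traffic shares.
const trafficMix = [
  { path: '/articles', weight: 7 },
  { path: '/users', weight: 3 },
];

// Pick an endpoint given a random number in [0, 1).
function pickEndpoint(mix, random = Math.random()) {
  const total = mix.reduce((sum, entry) => sum + entry.weight, 0);
  let threshold = random * total;
  for (const entry of mix) {
    threshold -= entry.weight;
    if (threshold < 0) return entry.path;
  }
  return mix[mix.length - 1].path; // guard against rounding at the upper edge
}

// Think time in milliseconds, spread evenly between minMs and maxMs.
function thinkTimeMs(minMs, maxMs, random = Math.random()) {
  return Math.round(minMs + (maxMs - minMs) * random);
}

console.log(pickEndpoint(trafficMix), thinkTimeMs(1000, 3000));
```

Passing the random number as an argument keeps both helpers deterministic when you want to replay a specific traffic pattern.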

Conclusion

Executing load tests using LoadForge is a critical step in ensuring your Strapi application can handle expected traffic and perform optimally. By closely monitoring real-time metrics and simulating realistic usage patterns, you can uncover and address performance bottlenecks effectively.

In the next section, we’ll dive into analyzing the test results and using the insights to fine-tune your Strapi application for peak performance.

Analyzing Load Test Results

When it comes to understanding the performance of your Strapi application, the ability to interpret the results from LoadForge load tests is critical. Effective analysis will help you identify key performance issues and understand essential metrics such as response times and error rates. This section will guide you through the process of analyzing your load test results, turning raw data into actionable insights.

Key Metrics to Monitor

The primary metrics to focus on when analyzing load test results are:

- Response Times: This includes average, median, and percentile-based measures (e.g., 95th percentile) of the time it takes for your server to respond to requests.

- Throughput: The number of requests your application can handle per second.

- Error Rates: The percentage of requests that failed due to server issues or other factors.

- Resource Utilization: CPU, memory, and other server resource usage during the test.

Interpreting Response Times

Response times are a critical indicator of your Strapi application's performance. They provide insight into how quickly your API can serve requests under different loads. When reviewing response times, consider:

- Average Response Time: This gives a general sense of performance but can be skewed by outliers.

- Median Response Time: A better measure of typical performance, particularly useful in understanding the user experience.

- 95th Percentile: 95% of requests completed within this time, so it captures the slowest experience that all but the worst 5% of requests see.

Example interpretation:

Average Response Time: 150ms

Median Response Time: 140ms

95th Percentile: 220ms

If your 95th percentile is significantly higher than your median, this might indicate occasional spikes in response time, possibly due to database delays or server resource contention.
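
To see why the average and the percentiles can disagree, it helps to compute them from raw samples. This is a minimal sketch using the nearest-rank method on a made-up set of response times; LoadForge may calculate its percentiles differently.

```javascript
// Nearest-rank percentile over a list of response times in milliseconds.
function percentile(samples, p) {
  if (samples.length === 0) return NaN;
  const sorted = [...samples].sort((a, b) => a - b);
  const rank = Math.ceil((p / 100) * sorted.length);
  return sorted[Math.max(rank, 1) - 1];
}

function mean(samples) {
  return samples.reduce((sum, value) => sum + value, 0) / samples.length;
}

// Hypothetical sample: mostly fast responses with one slow outlier.
const responseTimesMs = [120, 130, 135, 140, 140, 145, 150, 155, 160, 900];

const latencySummary = {
  average: mean(responseTimesMs),
  median: percentile(responseTimesMs, 50),
  p95: percentile(responseTimesMs, 95),
};
console.log(latencySummary);
```

In this sample the single 900 ms outlier pulls the average to 217.5 ms while the median stays at 140 ms, which is the kind of gap the paragraph above describes.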

Evaluating Throughput

Throughput measures the capacity of your Strapi application to handle incoming requests. High throughput with low error rates typically indicates good performance and scalability.

Requests per Second: 120

Check how throughput changes as you increase the load. A plateau or decline in throughput suggests that your application has reached its capacity limits.
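
A plateau can be spotted programmatically by comparing throughput across load steps. The sketch below assumes you have recorded requests per second at several user counts; the figures and the 10% gain threshold are illustrative.

```javascript
// Each step pairs a user count with the measured requests per second.
// The numbers are made up for illustration.
const loadSteps = [
  { users: 50, rps: 60 },
  { users: 100, rps: 118 },
  { users: 200, rps: 205 },
  { users: 400, rps: 212 },
  { users: 800, rps: 198 },
];

// Return the first step where throughput grew by less than minGain
// (as a fraction) over the previous step, or null if it kept scaling.
function findPlateau(steps, minGain = 0.1) {
  for (let i = 1; i < steps.length; i += 1) {
    const gain = (steps[i].rps - steps[i - 1].rps) / steps[i - 1].rps;
    if (gain < minGain) return steps[i];
  }
  return null;
}

console.log(findPlateau(loadSteps));
```

Here doubling the load from 200 to 400 users adds only a few requests per second, so the 400-user step is reported as the point where capacity levels off.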

Assessing Error Rates

Error rates reveal the stability of your Strapi application under load. A high error rate indicates that your server is unable to handle the current load effectively.

Error Rate: 2%

Common sources of errors may include:

- Backend errors: Caused by database disconnections, timeouts, or exhausted resources.

- HTTP errors: Such as 5xx server errors, often indicative of server-side issues.
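
Breaking the error rate down by status class makes it easier to tell client-side problems from server-side ones. This sketch assumes you can export the raw status codes from a test run; the sample data is invented, and counting 4xx responses as errors is a choice you may want to adjust.

```javascript
// Summarize HTTP status codes collected during a test run.
function summarizeErrors(statusCodes) {
  const counts = { '2xx': 0, '3xx': 0, '4xx': 0, '5xx': 0, other: 0 };
  for (const code of statusCodes) {
    const bucket = `${Math.floor(code / 100)}xx`;
    if (bucket in counts) counts[bucket] += 1;
    else counts.other += 1; // e.g. 0 for a dropped connection
  }
  const failed = counts['4xx'] + counts['5xx'] + counts.other;
  const errorRate = statusCodes.length === 0 ? 0 : failed / statusCodes.length;
  return { counts, errorRate };
}

// Hypothetical run: 96 successes, one 404, and three server errors.
const sampleCodes = [...Array(96).fill(200), 404, 500, 500, 503];
const errorSummary = summarizeErrors(sampleCodes);
console.log(errorSummary);
```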

Monitoring Resource Utilization

Resource utilization metrics give you a view of how your Strapi application is using server resources during load testing. High CPU or memory usage might suggest the need for resource optimization or scaling adjustments.

Example:

CPU Usage: 70%

Memory Usage: 60%

Identifying Bottlenecks

Once you've gathered performance metrics, identify potential bottlenecks:

- Slow API endpoints: Identify specific routes with high response times.

- Resource-intensive operations: Look for database queries or other operations consuming excessive time.

- Scalability limits: Check for signs that your application struggles as load increases, such as rising error rates or degraded response times.
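
The checks above can be turned into a simple filter over per-endpoint results. The following is a sketch under assumed budgets (500 ms at the 95th percentile, 2% errors); the endpoint figures and the `/comments` route are hypothetical.

```javascript
// Per-endpoint results; values are hypothetical.
const endpointStats = [
  { path: '/articles', p95Ms: 220, errorRate: 0.01 },
  { path: '/users', p95Ms: 640, errorRate: 0.0 },
  { path: '/comments', p95Ms: 180, errorRate: 0.06 },
];

// Flag endpoints that exceed either budget, slowest first.
function findBottlenecks(stats, { maxP95Ms = 500, maxErrorRate = 0.02 } = {}) {
  return stats
    .filter((s) => s.p95Ms > maxP95Ms || s.errorRate > maxErrorRate)
    .sort((a, b) => b.p95Ms - a.p95Ms)
    .map((s) => s.path);
}

console.log(findBottlenecks(endpointStats));
```

Sorting by latency puts the slowest route first, but an endpoint that is fast yet error-prone still makes the list.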

Example Analysis Steps

- Collect Data: Run a LoadForge test and gather detailed metrics.

- Review Response Times: Check average, median, and 95th percentile response times.

- Analyze Throughput: Assess how request handling capacity changes with load.

- Evaluate Error Rates: Note any increase in errors and their potential causes.

- Monitor Resources: Observe CPU, memory usage, and other server metrics.

- Identify Issues: Determine specific areas needing improvement based on the collected data.

Visualizing Data

LoadForge enables visualization of your test metrics, making it easier to spot trends and issues. Utilize graphs and charts to quickly comprehend performance data and share insights with your team.

Conclusion

Effective analysis of LoadForge load test results provides a clear picture of your Strapi application's performance under varying loads. By focusing on key metrics such as response times, throughput, error rates, and resource utilization, you can identify performance bottlenecks and areas for improvement. This crucial step ensures that your Strapi application remains robust, efficient, and scalable.

Strapi Performance Tuning Based on Test Results

After running load tests on your Strapi application using LoadForge, you'll have valuable insights into how your application performs under various conditions. This section focuses on actionable recommendations to fine-tune your Strapi setup, based on the results of these load tests. Effective tuning involves adjusting server configurations, optimizing application code, and making the best use of caching and database strategies.

Analyzing Load Test Results

Before diving into optimizations, it's crucial to carefully analyze your load test results. Look for key metrics such as:

- API Response Times: Average, median, and max response times.

- Error Rates: Frequency and types of errors returned.

- Throughput: Number of requests handled per second.

- Resource Utilization: CPU and memory usage statistics.

These metrics will help you identify bottlenecks and determine where optimization efforts should be concentrated.

Server Configuration Adjustments

Based on your load test results, you may need to adjust your server configurations to handle higher loads more effectively. Some common tweaks include:

- Scaling Vertically: Increase the server's CPU and RAM to handle more concurrent requests.

- Scaling Horizontally: Add more instances of your Strapi application behind a load balancer to distribute the load.

- Adjusting Timeouts: Raise the timeout if legitimate long-running requests are being cut off under load. The setting name and location depend on your Strapi version, so treat the following as illustrative:

// config/middleware.js
module.exports = {
  settings: {
    timeout: 120 * 1000, // 2 minutes timeout
  },
};

- Optimizing Middleware: Enable only the middleware your application needs and disable the rest to minimize per-request processing time.

Code Optimizations

To bolster your Strapi application's efficiency, consider the following code-level adjustments:

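- Efficient Querying:

- Use projections to return only the fields you need. The call depends on your Strapi version: in v4 the Entity Service API accepts a fields option, whereas in v3 the second argument to find() is a populate list rather than a projection.

// Strapi v4; assumes an "article" collection type
const entries = await strapi.entityService.findMany('api::article.article', {
  fields: ['title', 'summary'],
});

- Batch multiple resource requests to reduce the number of round trips to the database.

- Asynchronous Operations:

- Run independent I/O operations concurrently instead of awaiting them one after another.

// Both lookups start immediately and resolve together
const data = await Promise.all([
  strapi.query('article').findOne({ id: 1 }),
  strapi.query('user').findOne({ id: 5 }),
]);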
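
Batching, mentioned under Efficient Querying, usually starts with grouping the IDs you need so that each group becomes a single query. The sketch below covers only that grouping step; how a batch is passed to the database depends on your Strapi version, and `toBatches` is a hypothetical helper.

```javascript
// Deduplicate IDs and split them into fixed-size batches, so that each
// batch can be fetched with a single "id in (...)" style query.
function toBatches(ids, batchSize) {
  const unique = [...new Set(ids)];
  const batches = [];
  for (let i = 0; i < unique.length; i += batchSize) {
    batches.push(unique.slice(i, i + batchSize));
  }
  return batches;
}

console.log(toBatches([1, 2, 2, 3, 4, 5, 5], 2));
```

Seven requested IDs collapse into three queries here, instead of one round trip per ID.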

Database Optimization

Database performance can significantly impact your Strapi application's overall performance. Here are a few practices to optimize your database layer:

- Indexing: Properly index fields that are frequently queried to speed up read operations.

CREATE INDEX idx_articles_title ON articles(title);

- Query Optimization: Review and optimize complex queries to ensure they execute efficiently, using joins and subqueries judiciously.

- Connection Pooling: Ensure your database connection pooling settings are configured correctly to handle high traffic.

// config/database.js
module.exports = {
  connections: {
    default: {
      // connector and settings omitted for brevity
      options: {
        pool: {
          min: 2,
          max: 10,
        },
      },
    },
  },
};
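
When you scale horizontally, every Strapi instance opens its own pool, so the pool size has to be chosen with the database's connection limit in mind. This is a rough sizing sketch, not a tuning rule; the function names and the reserved-connection figure are assumptions.

```javascript
// Total connections the database may see if every pool fills up.
function totalConnections(instances, poolMax) {
  return instances * poolMax;
}

// Largest per-instance pool that leaves `reserved` connections free
// for migrations, admin tools, and other clients.
function maxPoolPerInstance(dbMaxConnections, instances, reserved = 0) {
  return Math.max(Math.floor((dbMaxConnections - reserved) / instances), 0);
}

// Four instances with the pool above (max: 10) against a database that
// allows 100 connections (PostgreSQL's default max_connections).
console.log(totalConnections(4, 10));
console.log(maxPoolPerInstance(100, 4, 10));
```

If the first number approaches the database limit, either lower the pool maximum or raise the limit before adding more instances.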

Enhancing Caching

Caching is paramount for reducing load times and minimizing database stress. Implement caching strategies based on your load test insights:

- HTTP Caching: Use HTTP headers to control caching behaviors for static content and APIs.

// config/middleware.js
module.exports = {
  settings: {
    cache: {
      maxAge: 300000, // 5 minutes
    },
  },
};

- Redis Caching: Employ Redis for caching database query results or frequently accessed data.

const redis = require('redis');

const client = redis.createClient();
// node-redis v4+: connect once before issuing commands
const ready = client.connect();

async function getCachedData(key) {
  await ready;
  const cachedData = await client.get(key);
  if (cachedData) return JSON.parse(cachedData);

  const data = await fetchDataFromDB(); // placeholder for your own database query
  await client.set(key, JSON.stringify(data), { EX: 300 }); // Cache for 5 minutes
  return data;
}
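
For the HTTP caching item above, the header itself can be set from a small piece of middleware. Strapi is built on Koa, so the sketch below uses Koa's `ctx.method`, `ctx.status`, and `ctx.set`; how you register the middleware differs between Strapi versions, and the `cacheControlFor` and `cacheHeaders` names are hypothetical.

```javascript
// Decide which Cache-Control value, if any, a response should carry.
// Only successful GET requests are cached in this sketch.
function cacheControlFor(method, status, maxAgeSeconds = 300) {
  if (method !== 'GET' || status !== 200) return null;
  return `public, max-age=${maxAgeSeconds}`;
}

// Koa-style middleware that applies the header after the downstream
// handlers have produced the response.
async function cacheHeaders(ctx, next) {
  await next();
  const value = cacheControlFor(ctx.method, ctx.status);
  if (value) ctx.set('Cache-Control', value);
}

console.log(cacheControlFor('GET', 200));
```

A `public` directive suits only content that is identical for every visitor; responses that depend on the Authorization header should not be cached this way.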

By integrating these performance tuning recommendations, your Strapi application can handle higher loads with enhanced efficiency and stability. Continue monitoring your application’s performance, testing for new bottlenecks, and applying iterative optimizations to ensure a robust, scalable application.

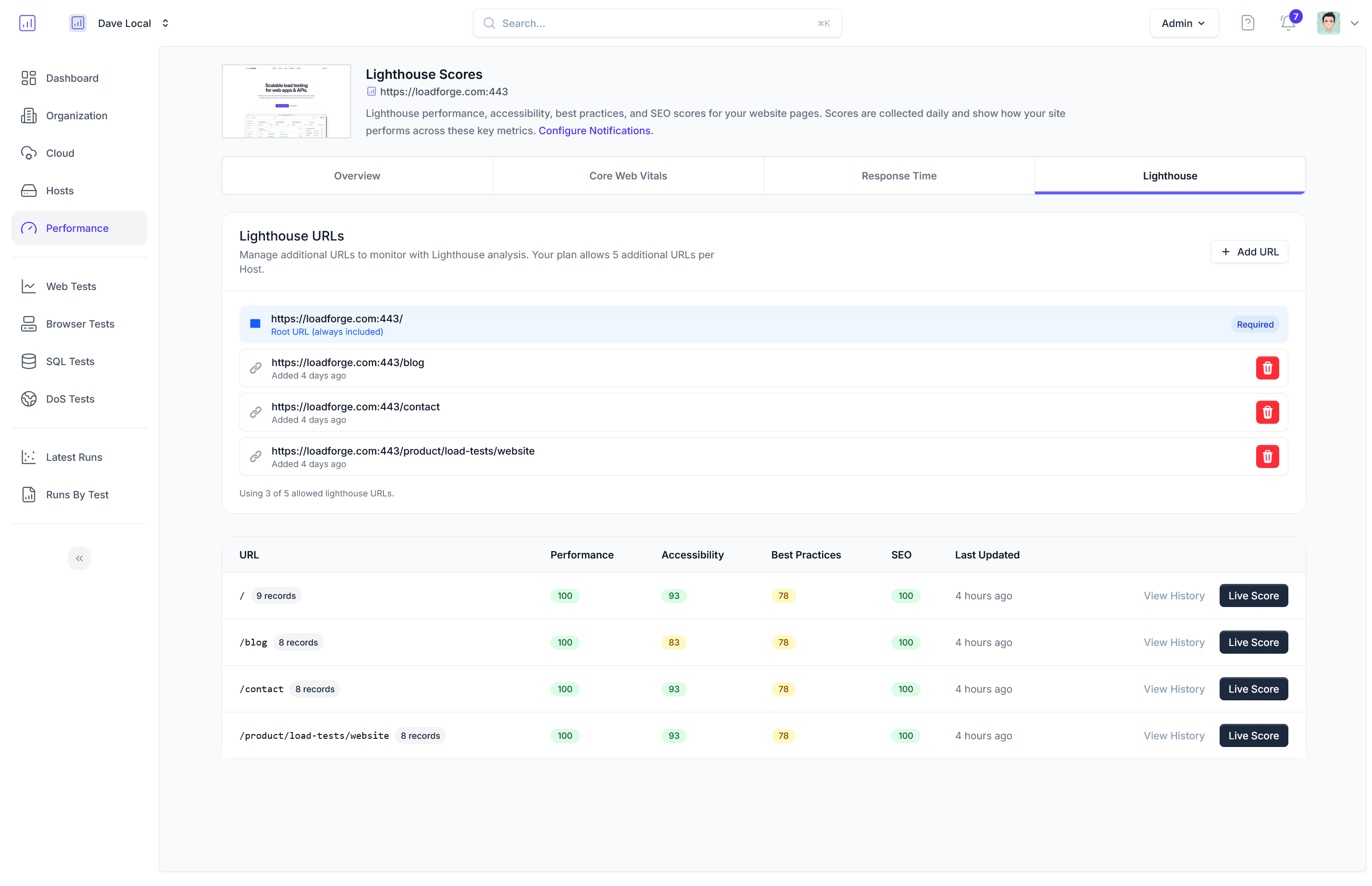

Continuous Performance Monitoring

Ensuring that your Strapi application remains optimized and performs efficiently as it scales and evolves is crucial for delivering a seamless user experience. Continuous performance monitoring allows you to proactively identify issues and tune your application before they become major problems. In this section, we'll discuss strategies for ongoing performance monitoring and testing of your Strapi application.

Implementing Continual Monitoring Tools

To keep an eye on your application's performance, it's essential to use robust monitoring tools. Here are a few tools and services that can help you monitor Strapi:

- New Relic, Datadog, or Prometheus: New Relic and Datadog are APM (Application Performance Monitoring) services, and Prometheus is an open-source metrics and alerting toolkit. All three can surface response times, throughput, and error rates.

- Grafana: Use Grafana in conjunction with Prometheus for visualizing performance metrics in real-time.

Example Prometheus scrape configuration, which Grafana can then use as a data source (this assumes a metrics endpoint has been added to your Strapi instance, for example through a plugin):

# prometheus.yml
scrape_configs:
  - job_name: 'strapi'
    static_configs:
      - targets: ['localhost:1337']

Tracking Key Performance Metrics

To effectively monitor your Strapi application, focus on these key performance metrics:

- API Response Times: Monitor the time taken to process requests in both Strapi and the database.

- Error Rates: Keep track of the frequency and types of errors occurring.

- CPU and Memory Usage: Ensure that the server has sufficient resources to handle the load.

- Throughput: Measure the number of requests handled per second to gauge traffic handling capacity.

- Database Performance: Monitor query execution times, slow queries, and index usage.

Setting Up Alerts

Configure automated alerts to notify you when performance metrics exceed predefined thresholds. This allows you to quickly respond to potential issues. For example, using Prometheus and Alertmanager:

# alert_rules.yml
groups:
  - name: strapi_alerts
    rules:
      - alert: HighResponseTime
        expr: sum(rate(http_request_duration_seconds_sum[5m])) by (job) / sum(rate(http_request_duration_seconds_count[5m])) by (job) > 1
        for: 5m
        labels:
          severity: warning
        annotations:
          summary: "High response time detected"
          description: "The response time for {{ $labels.job }} is above 1 second."

Load Testing as Part of CI/CD

Integrate LoadForge into your CI/CD pipeline so that performance testing becomes a routine part of your deployment process. With this approach, code changes are tested under load before they are released to production.

Example using a CI/CD tool like GitLab CI/CD:

# .gitlab-ci.yml
stages:
  - test
  - deploy

load_test:
  stage: test
  script:
    - loadforge run your_test_id
  only:
    - master

deploy_production:
  stage: deploy
  script:
    - ./deploy.sh
  only:
    - master
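
A load test only protects a release if the pipeline fails when results are poor. The sketch below shows one way to gate a job on performance budgets, assuming you have already fetched a summary of the run; the field names, thresholds, and `checkBudgets` helper are hypothetical and do not reflect a specific LoadForge response format.

```javascript
// Compare a test summary against performance budgets and list violations.
// Field names and thresholds are assumptions for this sketch.
function checkBudgets(summary, budgets) {
  const violations = [];
  if (summary.p95Ms > budgets.maxP95Ms) {
    violations.push(`p95 ${summary.p95Ms}ms exceeds ${budgets.maxP95Ms}ms`);
  }
  if (summary.errorRate > budgets.maxErrorRate) {
    violations.push(`error rate ${summary.errorRate} exceeds ${budgets.maxErrorRate}`);
  }
  if (summary.rps < budgets.minRps) {
    violations.push(`throughput ${summary.rps} rps is below ${budgets.minRps} rps`);
  }
  return violations;
}

const budgetViolations = checkBudgets(
  { p95Ms: 220, errorRate: 0.02, rps: 120 },
  { maxP95Ms: 500, maxErrorRate: 0.05, minRps: 100 },
);
if (budgetViolations.length > 0) {
  console.error(budgetViolations.join('\n'));
  process.exitCode = 1; // a non-zero exit code fails the CI job
}
```

Run as a step after the load test, a script like this lets the deploy stage proceed only when every budget is met.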

Regular Audits and Optimizations

Schedule regular audits of your Strapi application to review and optimize performance. This periodic review should involve:

- Analyzing Monitoring Data: Review data collected from monitoring tools to identify patterns and potential performance degradation.

- Code Profiling: Use profiling tools to identify inefficient code paths or heavy database queries.

- Caching Strategy Review: Regularly review and update your caching strategy to ensure effective use of cache.

- Database Maintenance: Perform routine database maintenance tasks such as indexing and archiving old data.

Leveraging Feedback Loops

Create a feedback loop by regularly discussing performance metrics and issues with your development team. This ongoing dialogue helps in improving coding practices and understanding the performance impacts of different code changes.

Conclusion

By implementing continuous performance monitoring strategies, you can ensure that your Strapi application remains efficient and high-performing. Regular monitoring, alerting, load testing, and optimization practices will help you maintain a robust application capable of scaling with your user base. Integrating these practices into your development workflow is essential for proactive performance management and delivering a superior user experience.

Conclusion

In this guide, we've journeyed through the critical aspects of optimizing and load testing Strapi applications, underlining the importance of maintaining high performance and robustness as your application scales. Here, we'll summarize the key points we've covered and reiterate the importance of incorporating regular performance reviews into your development practices.

Recap of Key Points

- Introduction: We began the guide by highlighting the significance of performance optimization and load testing in ensuring the scalability and reliability of your Strapi applications.

- Understanding Strapi Performance: We delved into Strapi-specific performance factors such as API response times, database interactions, and efficient server resource utilization.

- Setting Up Your Strapi Environment: We provided guidelines on optimizing your Strapi setup, including server configurations, middleware usage, and effective management of database connections.

- Optimizing Strapi with Caching: We discussed various caching strategies to reduce database load and improve API response times, essential for a high-performing Strapi application.

- Enhancing Database Performance: We covered best practices for database optimization, such as indexing, query optimization, and connection pooling, to ensure that your database can handle increasing loads efficiently.

- Load Testing Basics: We introduced the concept of load testing, stressing its importance in identifying performance bottlenecks and ensuring your Strapi application can withstand real-world usage.

- Getting Started with LoadForge: We provided a step-by-step guide on setting up LoadForge for load testing, making it easy to integrate into your workflow.

- Creating Load Tests for Strapi: We walked through the process of creating effective load tests with LoadForge, tailored specifically for Strapi applications, including setting up realistic test scenarios and parameters.

- Running Your Load Tests: We detailed the execution of load tests using LoadForge, including tips for monitoring the results in real-time and ensuring simulations reflect actual usage patterns.

- Analyzing Load Test Results: We guided you through interpreting load test results from LoadForge, focusing on key metrics such as response times and error rates to pinpoint performance issues.

- Strapi Performance Tuning Based on Test Results: We provided actionable recommendations for tuning your Strapi application based on load test insights, including server configuration adjustments and code optimizations.

- Continuous Performance Monitoring: Finally, we outlined strategies for ongoing performance monitoring and testing to ensure your Strapi application remains optimized as it evolves.

Final Thoughts

Load testing is not a one-time activity but a continuous process that should be integrated into your development workflow. Regular performance testing ensures that your Strapi application can handle the demands of your users, providing a seamless and efficient experience even under peak loads. By leveraging tools like LoadForge, you can systematically identify and rectify performance bottlenecks, leading to a more resilient and responsive application.

Encouragement for Continuous Improvement

The digital landscape is ever-evolving, and so are user expectations. Incorporating regular performance reviews and load testing into your development cycle will help you stay ahead of potential issues and keep your Strapi application performing at its best. Utilize the insights gained from LoadForge tests to make informed decisions and iterative improvements, ensuring a robust, high-performing application for all your users.

Make performance optimization and load testing a cornerstone of your development process, and your Strapi applications will be better prepared for the traffic they need to serve.