Introduction to Django Rest Framework (DRF)

Django Rest Framework (DRF) is a powerful and flexible toolkit for building Web APIs in Python. Leveraging the strengths of both Django and Python, DRF facilitates the quick development of robust APIs, which are an essential component of modern web services and applications. Its ability to cater to both beginners and experienced developers through comprehensive documentation and customizable functionalities makes it a popular choice among developers worldwide.

The Importance of DRF in API Development

APIs (Application Programming Interfaces) are the backbone of many web services, allowing different software systems to communicate with each other. DRF simplifies the process of creating these APIs by providing:

- Serialization that supports both ORM and non-ORM data sources.

- Authentication policies including packages for OAuth1 and OAuth2.

- Customizable all the way down - from the way that data is accessed to the way it is rendered.

- Browsable API features which make it easy for developers to visually navigate the APIs they create through a web browser.

Utilizing Django's models and powerful ORM, DRF allows for the creation of clean and pragmatic APIs adhering to the RESTful principles.

Why Performance Optimization is Critical

In today's fast-paced digital landscape, the performance of web applications is crucial. Performance issues can lead to slow response times, which not only frustrate users but can also affect a company's SEO and revenue. Here are some critical reasons why performance optimization is essential in modern web applications:

- User Experience (UX): Fast-loading APIs improve overall user satisfaction and help maintain an active user base.

- Scalability: Efficiently optimized APIs handle increases in traffic more smoothly, making it easier to scale applications.

- Cost-efficiency: Optimizing API performance can reduce server load and, hence, the cost of infrastructure required to serve users.

- Competitive Edge: High-performing web applications contribute to a better service perception, giving businesses a competitive advantage.

For APIs, especially those created with frameworks like DRF, performance gains can be achieved through various strategies such as optimizing database queries, employing caching mechanisms, and correctly utilizing asynchronous programming paradigms. Each of these aspects not only enhances the response times but also ensures that the APIs can handle large volumes of requests, which is typical in enterprise situations.

All these factors underscore why selecting a framework like Django Rest Framework and focusing on performance optimization is not merely a good practice — it’s a necessity in today’s digital economy. The subsequent sections of this guide will delve deeper into the specific techniques and strategies that help achieve these performance benefits in DRF-based applications.

Database Optimization Techniques

Optimizing database interactions is a pivotal aspect of enhancing the performance of Django REST Framework (DRF) applications. Efficient database management not only improves response times but also helps in managing larger volumes of data without a significant increase in load times. This section will explore several strategies for optimizing your database interactions in DRF.

Efficient Query Design

The way you design and execute your queries can greatly impact the performance of your DRF application. Here are a few tips for optimizing your queries:

- Use select_related and prefetch_related: These Django ORM tools are essential for reducing the number of database queries. select_related is used for single-valued relationships (ForeignKey and OneToOneField). It performs a SQL join and includes the fields of the related object in the SELECT statement, so accessing book.author later costs no extra query.

```python
from django.db import models


class Author(models.Model):
    name = models.CharField(max_length=100)


class Book(models.Model):
    author = models.ForeignKey(Author, on_delete=models.CASCADE)
    title = models.CharField(max_length=100)


# Using select_related: one query with a JOIN instead of one extra query per book
books = Book.objects.select_related('author').all()
```

- prefetch_related, on the other hand, is used for multi-valued relationships (ManyToManyField and reverse ForeignKey). It runs one additional query per relationship and joins the results in Python, which can dramatically cut down on database hits.

```python
# Using prefetch_related: two queries in total, one for authors and one for all their books
authors = Author.objects.prefetch_related('book_set').all()
```

- Filter early and use annotations wisely: Apply filters as early as possible so the database returns a smaller result set. Annotations compute values such as counts in SQL, so you can filter on them without loading rows and calculating them in Python.

```python
from django.db.models import Count

# The count is computed and filtered inside the database
authors = Author.objects.annotate(num_books=Count('book')).filter(num_books__gt=1)
```
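The following stand-alone sketch uses the standard library's sqlite3 module to count the queries behind three ways of loading books and authors. It does not use Django. The SQL only approximates the query shapes Django issues: a per-row lookup (the N+1 pattern), a single JOIN (what select_related does), and one extra IN query grouped in Python (what prefetch_related does). CountingDB and the function names are hypothetical.

```python
import sqlite3
from collections import defaultdict


class CountingDB:
    """Wraps an in-memory sqlite3 connection and counts the queries we run."""

    def __init__(self, n_authors=5):
        self.conn = sqlite3.connect(":memory:")
        self.conn.executescript(
            "CREATE TABLE author (id INTEGER PRIMARY KEY, name TEXT);"
            "CREATE TABLE book (id INTEGER PRIMARY KEY, title TEXT, author_id INTEGER);"
        )
        for i in range(n_authors):
            self.conn.execute("INSERT INTO author VALUES (?, ?)", (i, f"author {i}"))
            self.conn.execute(
                "INSERT INTO book (title, author_id) VALUES (?, ?)", (f"book {i}", i)
            )
        self.queries = 0  # setup statements are not counted

    def query(self, sql, params=()):
        self.queries += 1
        return self.conn.execute(sql, params).fetchall()


def books_naive(db):
    # N+1: one query for the books, then one query per book for its author
    books = db.query("SELECT title, author_id FROM book ORDER BY id")
    return [
        (title, db.query("SELECT name FROM author WHERE id = ?", (author_id,))[0][0])
        for title, author_id in books
    ]


def books_joined(db):
    # Shape of select_related('author'): a single JOIN
    return db.query(
        "SELECT book.title, author.name FROM book "
        "JOIN author ON author.id = book.author_id ORDER BY book.id"
    )


def authors_prefetched(db):
    # Shape of prefetch_related('book_set'): one query for authors, one IN query for books
    authors = db.query("SELECT id, name FROM author ORDER BY id")
    ids = [author_id for author_id, _ in authors]
    placeholders = ",".join("?" * len(ids))
    books_by_author = defaultdict(list)
    for title, author_id in db.query(
        f"SELECT title, author_id FROM book WHERE author_id IN ({placeholders}) ORDER BY id",
        ids,
    ):
        books_by_author[author_id].append(title)  # grouped in Python
    return {name: books_by_author[author_id] for author_id, name in authors}
```

With five authors, the naive version issues six queries, the JOIN issues one, and the prefetch version issues two no matter how many authors there are.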

Indexing

Proper indexing is crucial for database performance. An index can drastically improve query performance by allowing the database to find rows faster.

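- Add indexes to frequently queried fields: Consider adding database indexes to fields that are frequently used in WHERE clauses (filter() and exclude() calls) or in ORDER BY (order_by()). In Django, set db_index=True on the field or list a models.Index in the model's Meta.indexes. ForeignKey fields are indexed automatically. Every index also slows down writes and takes up space, so add them only where queries need them.

- Use composite indexes if working with multiple fields: If you often filter by several fields together, consider a composite index such as models.Index(fields=['author', 'title']). Column order matters, because the database can use the index for queries that filter on its leading column(s).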
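To see what an index changes, this stand-alone sketch runs SQLite's EXPLAIN QUERY PLAN through the standard library's sqlite3 module. The same query is planned as a full table scan before a composite index exists, and as an index search afterwards. The table, columns and index name are invented for the example. In a Django model, the equivalent index would be declared as models.Index(fields=['author', 'published']) in Meta.indexes, assuming a published field exists.

```python
import sqlite3


def query_plan(conn, sql, params=()):
    """Return the 'detail' column of SQLite's EXPLAIN QUERY PLAN output as one string."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    return " | ".join(str(row[-1]) for row in rows)


conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE book (id INTEGER PRIMARY KEY, author_id INTEGER, title TEXT, published TEXT)"
)
sql = "SELECT title FROM book WHERE author_id = ? AND published >= ?"
params = (1, "2020-01-01")

before = query_plan(conn, sql, params)

# Composite index: the equality column first, the range column second
conn.execute("CREATE INDEX book_author_published_idx ON book (author_id, published)")
after = query_plan(conn, sql, params)

print("before:", before)  # a full table scan
print("after: ", after)   # a search that uses book_author_published_idx
```

The exact wording of the plan varies between SQLite versions. For PostgreSQL or MySQL, the equivalent check is the database's own EXPLAIN output.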

Using Django’s ORM Capabilities

Django's ORM comes with several capabilities that can help reduce database load:

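- Transaction Management: Use transaction.atomic() to wrap operations that must succeed or fail together. If any of them raises, all of them are rolled back. Django runs in autocommit mode by default and commits after every query, so grouping many writes into one transaction also avoids paying the commit overhead for each write.

```python
from django.db import transaction

# Both rows are committed together, or neither is
with transaction.atomic():
    author = Author(name='John Doe')
    author.save()
    book = Book(title='Django for Professionals', author=author)
    book.save()
```

- Connection Pooling: Opening a new database connection for every request adds latency. Django can reuse connections across requests with the CONN_MAX_AGE database setting. Django 5.1 also added built-in connection pooling for PostgreSQL, using psycopg 3. On older versions, or when many application processes share one database, an external pooler such as PgBouncer in front of PostgreSQL is a common way to cut per-request connection overhead.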
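transaction.atomic() needs a configured Django project. This stand-alone sketch uses the standard library's sqlite3 module instead, to show the same all-or-nothing behaviour. The tables mirror the Author/Book models above, and create_author_with_books is a hypothetical helper.

```python
import sqlite3


def create_author_with_books(conn, name, titles):
    """All-or-nothing insert, analogous to wrapping ORM saves in transaction.atomic()."""
    with conn:  # sqlite3 connection as context manager: commit on success, roll back on error
        cur = conn.execute("INSERT INTO author (name) VALUES (?)", (name,))
        conn.executemany(
            "INSERT INTO book (title, author_id) VALUES (?, ?)",
            [(title, cur.lastrowid) for title in titles],
        )


conn = sqlite3.connect(":memory:")
conn.executescript(
    "CREATE TABLE author (id INTEGER PRIMARY KEY, name TEXT NOT NULL);"
    "CREATE TABLE book (id INTEGER PRIMARY KEY, title TEXT NOT NULL, author_id INTEGER);"
)

create_author_with_books(conn, "John Doe", ["Django for Professionals", "Second Book"])

try:
    # The None title violates NOT NULL, so the author row inserted just before is rolled back too
    create_author_with_books(conn, "Jane Roe", ["Fine Title", None])
except sqlite3.IntegrityError:
    pass
```

After the failed call, the database still holds only John Doe and his two books.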

Conclusion

By optimizing your database queries, properly using indexes, and efficiently utilizing Django's ORM capabilities, you can significantly enhance the performance of your Django REST Framework applications. Always keep your database interactions in mind when designing your API and consider these optimization techniques to ensure smooth and efficient database operations.

Effective Use of Caching

In the realm of web development, particularly for APIs, caching is a critical element that can drastically improve response times and decrease server load. This holds especially true for Django Rest Framework (DRF), where effective caching strategies ensure that your API performs efficiently under varying loads.

Overview of Caching in Django

Django comes equipped with a robust caching framework, which can be configured to use various backends such as Memcached, Redis, or even a simple local memory cache. Caching in Django can be applied at various levels: per-view, per-site, or using low-level cache APIs for fine-grained control over what is cached.

Caching Techniques for DRF

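- Per-view Caching:

Caching at the view level is straightforward with Django's cache_page decorator, which caches the output of a view for a specified time. DRF views are class-based, so apply the decorator to a method with method_decorator. This example caches GET responses for 10 minutes:

```python
from django.utils.decorators import method_decorator
from django.views.decorators.cache import cache_page
from rest_framework.response import Response
from rest_framework.views import APIView


class ProductListView(APIView):
    @method_decorator(cache_page(60 * 10))  # Cache for 10 minutes
    def get(self, request):
        # view implementation (placeholder payload)
        return Response({"products": []})
```

cache_page builds its key from the URL and any headers listed in Vary, so take care when responses differ per user.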

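- Caching Querysets:

For APIs driven by database interactions, caching query results can dramatically reduce response times. Django's low-level cache API handles this. Here's a basic snippet:

```python
from django.core.cache import cache

from .models import MyModel  # hypothetical model


def get_data():
    data = cache.get('my_data')  # a single round trip; returns None on a miss
    if data is None:
        # list() evaluates the QuerySet, so the rows are cached rather than a lazy query
        data = list(MyModel.objects.all())
        cache.set('my_data', data, timeout=300)  # cache for 5 minutes
    return data
```

Django's cache.get_or_set() performs the same check-then-store logic in a single call.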

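- Using DRF Extensions:

The drf-extensions package provides additional caching tools. One example is CacheResponseMixin, which you can add to a viewset to cache the responses of its list and retrieve actions:

```python
from rest_framework import viewsets
from rest_framework_extensions.cache.mixins import CacheResponseMixin

from .models import MyModel  # hypothetical model
from .serializers import MyModelSerializer  # hypothetical serializer


class MyViewSet(CacheResponseMixin, viewsets.ReadOnlyModelViewSet):
    # The mixin wraps the inherited list() and retrieve() with caching;
    # overriding those methods here would bypass it
    queryset = MyModel.objects.all()
    serializer_class = MyModelSerializer
```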
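This minimal in-process sketch shows the cache-aside pattern and its timeout behaviour without a Django project. TTLCache and cached_call are hypothetical names, not Django APIs. A real deployment would use the configured cache backend shared by all processes. The injectable clock exists only so that expiry can be demonstrated deterministically.

```python
import time


class TTLCache:
    """Tiny in-process stand-in for a cache backend (not Django's implementation)."""

    def __init__(self, clock=time.monotonic):
        self._store = {}
        self._clock = clock

    def get(self, key, default=None):
        entry = self._store.get(key)
        if entry is None:
            return default
        value, expires_at = entry
        if self._clock() >= expires_at:
            del self._store[key]  # expired entries behave like a miss
            return default
        return value

    def set(self, key, value, timeout):
        self._store[key] = (value, self._clock() + timeout)


def cached_call(cache, key, loader, timeout):
    """Cache-aside: return the cached value, or call loader() and store its result."""
    value = cache.get(key)
    if value is None:
        value = loader()
        cache.set(key, value, timeout)
    return value


# Usage with a fake clock, so expiry can be shown without waiting
now = [0.0]
cache = TTLCache(clock=lambda: now[0])
loads = []


def load_products():
    loads.append(now[0])  # record each simulated database hit
    return ["widget", "gadget"]


cached_call(cache, "products", load_products, timeout=300)  # miss: loads
cached_call(cache, "products", load_products, timeout=300)  # hit: served from cache
now[0] = 301.0                                              # past the 300 s timeout
cached_call(cache, "products", load_products, timeout=300)  # expired: loads again
```

Only the first and third calls reach the loader. This is the same trade-off as the timeout argument to cache.set(): a longer timeout means fewer database hits but potentially staler data.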

Integrating Third-Party Caching Tools

While Django’s built-in caching mechanisms provide significant improvements, incorporating third-party tools like Redis can further enhance your caching strategy.

- Redis as a Cache Backend:

Redis is an in-memory data structure store, known for its fast performance. You can configure it as your cache backend in settings.py. The example below uses the third-party django-redis package. Since Django 4.0, Django also ships a built-in backend, django.core.cache.backends.redis.RedisCache.

```python
# settings.py
CACHES = {
    "default": {
        "BACKEND": "django_redis.cache.RedisCache",
        "LOCATION": "redis://127.0.0.1:6379/1",
        "OPTIONS": {
            "CLIENT_CLASS": "django_redis.client.DefaultClient",
        },
    }
}
```

- Advanced Caching Patterns:

Beyond simple key-value caching, use Redis for advanced patterns like sorted sets for leaderboards, pub/sub for real-time updates, and more complex data types to suit your application's needs.

Best Practices

- Cache Invalidation: Always have a strategy for invalidating cache when the underlying data changes. Over-reliance on stale data can lead to inconsistencies.

- Selective Caching: Not all data needs to be cached. Identify high-impact areas based on your application's access patterns.

- Monitor Usage: Employ monitoring tools to analyze cache effectiveness and adjust strategies accordingly.
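One common invalidation technique puts a version number in the cache key and bumps it whenever the underlying data changes, so stale entries are never read again. Django's cache API supports the same idea through its version argument and cache.incr_version(). The VersionedCache class below is a hypothetical, dictionary-backed sketch of the pattern. In a Django app, the invalidate call would typically live in a post_save signal handler or in the view that performs the write.

```python
class VersionedCache:
    """Group-level invalidation by version number (hypothetical; a dict stands in for the backend)."""

    def __init__(self):
        self._store = {}
        self._versions = {}  # group name -> current version

    def _key(self, group, key):
        return f"{group}:v{self._versions.get(group, 1)}:{key}"

    def get(self, group, key):
        return self._store.get(self._key(group, key))

    def set(self, group, key, value):
        self._store[self._key(group, key)] = value

    def invalidate(self, group):
        # Old keys become unreachable; a real backend would let them expire via their timeout
        self._versions[group] = self._versions.get(group, 1) + 1


cache = VersionedCache()
cache.set("products", "list:page=1", ["widget", "gadget"])
cache.set("products", "detail:42", {"id": 42, "name": "widget"})

# A product changed: one call invalidates every cached product response
cache.invalidate("products")
```

The design choice here is to avoid tracking every individual key: a single counter per group makes all of the group's entries stale at once.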

By implementing these caching strategies in your DRF application, you can achieve substantial improvements in API response times while simultaneously reducing the load on your servers. Remember, an effective cache strategy is crucial for scaling applications and providing a smooth user experience.

Data Serialization Best Practices

Data serialization in Django Rest Framework (DRF) is a critical component that can notably influence the overall performance of your APIs. Serialization in DRF converts complex data types, such as querysets and model instances, into native Python datatypes that can then be rendered into JSON, XML, or other content types. The efficiency of this process directly affects the response time of the API and the load on your server, especially as the size and complexity of the data grow.

Impact of Data Serialization on Performance

Serialization processes can become a bottleneck in your application if not handled efficiently. This impact is pronounced when dealing with large datasets or complex nested relationships. Inefficient serialization contributes to:

- Increased response times as the server spends more time processing the data.

- Higher memory usage which can affect the overall performance of the server.

- Increased load on the database if serialization logic requires multiple or complex queries.

Best Practices for Optimizing Serialization Tasks

To mitigate the performance issues associated with data serialization in DRF, consider the following best practices:

1. Selective Field Serialization

Limit the fields that are serialized and sent in a response based on what the client actually needs. This not only reduces the amount of data that needs to be serialized but also minimizes the bandwidth usage.

```python
from rest_framework import serializers

from .models import User


class UserSerializer(serializers.ModelSerializer):
    class Meta:
        model = User
        fields = ['id', 'username', 'email']  # Only serialize these fields
```
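As a rough, framework-free illustration of why trimming fields matters, this sketch builds hypothetical user rows shaped like the output of a serializer that includes every field. It then compares the JSON size of all fields against only the three listed in Meta.fields above. The field names and the 200-character bio are invented for the example, and real savings depend on your data.

```python
import json

# Hypothetical rows as a serializer with every field might render them
users = [
    {
        "id": i,
        "username": f"user{i}",
        "email": f"user{i}@example.com",
        "first_name": "Ada",
        "last_name": "Lovelace",
        "bio": "x" * 200,  # a large field the client never displays
        "last_login": "2024-01-01T00:00:00Z",
    }
    for i in range(100)
]

requested_fields = ("id", "username", "email")  # mirrors Meta.fields above
slim = [{field: user[field] for field in requested_fields} for user in users]

full_bytes = len(json.dumps(users).encode())
slim_bytes = len(json.dumps(slim).encode())
print(f"all fields: {full_bytes} bytes, selected fields: {slim_bytes} bytes")
```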

2. Use depth Wisely

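The depth option in a ModelSerializer's Meta (for example, depth = 1) automatically renders related objects as nested representations, up to the given depth. Each nested level includes all fields of the related model, and it needs its own select_related or prefetch_related to avoid extra queries. Excessive depth can therefore degrade performance significantly, especially with large datasets.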

3. Optimize Querysets

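Before serialization, optimize how data is fetched from the database. Use select_related for forward ForeignKey and one-to-one relationships. Use prefetch_related for many-to-many and reverse ForeignKey relationships, such as an author's books.

```python
from .models import Author, Book

# Forward ForeignKey (book -> author): a single JOIN
books = Book.objects.select_related('author')

# Reverse ForeignKey (author -> books): one extra query for all books
authors = Author.objects.prefetch_related('book_set')
```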

4. Implement Custom Serializer Methods

When the built-in field types do not meet your application’s needs, consider custom serializer methods. A SerializerMethodField gives you finer control over how a value is produced. Keep such methods cheap: DRF calls them once per object, so a database query inside one turns a list response into an N+1 problem.

```python
from rest_framework import serializers

from .models import User


class OptimizedUserSerializer(serializers.ModelSerializer):
    full_name = serializers.SerializerMethodField()

    class Meta:
        model = User
        fields = ['id', 'full_name', 'email']

    def get_full_name(self, obj):
        # Uses fields already loaded on obj, so no extra query per row
        return f"{obj.first_name} {obj.last_name}"
```

5. Avoid N+1 Query Problems

An N+1 query problem occurs when the serializer makes new database queries for each item in a list of items, typically seen with nested serialization. Utilizing prefetch_related and select_related judiciously can help reduce additional database hits.

Conclusion

Effective data serialization is key to improving the responsiveness and efficiency of your DRF-based applications. By focusing on optimizing both the quantity of data serialized and the process of serialization itself, you can significantly enhance the performance of your APIs. Remember, the ultimate goal is not just to speed up data serialization, but to ensure it complements the overall user experience and scalability of your application.

Concurrency and Asynchronous Techniques

In modern web applications, efficiently handling multiple user requests simultaneously is crucial for maintaining performance and responsiveness. Django, the foundation DRF builds on, provides several capabilities for managing concurrency and using asynchronous programming, and its ecosystem adds tools such as Django Channels and Celery. This section explains these techniques and how they can be applied to DRF applications.

Understanding Concurrency in Django

Concurrency means making progress on several tasks during overlapping periods of time. A classic Django deployment runs under WSGI. Each worker process or thread handles one request at a time from start to finish, and the server achieves concurrency by running several workers (for example, multiple worker processes of a WSGI server such as Gunicorn). This model is simple and reliable. However, a worker that is waiting on a slow database query or an external API sits idle, so under high load with I/O-heavy requests you need many workers to stay responsive.

Asynchronous Support in Django

Django 3.1 introduced support for asynchronous views and middleware, which lets you write views with async def. When served by an ASGI server, an async view that awaits I/O (such as an external HTTP call) frees the event loop to serve other requests in the meantime. Under a WSGI server, each async view runs in its own one-off event loop, so that concurrency benefit does not apply.

Asynchronous Views in Django

To handle concurrent users more effectively, you can convert your traditional Django views to asynchronous ones. Here’s a basic example:

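```python
from asgiref.sync import sync_to_async
from django.http import JsonResponse


def get_data_from_db():
    # Hypothetical synchronous helper, e.g. one that runs ORM queries
    return {"status": "ok"}


async def async_view(request):
    # Run the blocking helper in a thread and await its result
    data = await sync_to_async(get_data_from_db)()
    return JsonResponse(data)  # data must be a dict unless safe=False is passed
```

In this snippet, sync_to_async wraps the synchronous get_data_from_db function so that it runs in a thread and can be awaited from the async view. This is needed because synchronous ORM calls must not run directly inside async code. Since Django 4.1, QuerySets also offer async methods such as aget() and acount(), as well as async for iteration, which you can await directly.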
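The benefit of async comes from overlapping waits. In this stand-alone asyncio sketch, the hypothetical fake_io coroutine uses asyncio.sleep as a stand-in for non-blocking I/O. Awaiting three 0.2-second waits one after another takes about 0.6 seconds, while asyncio.gather() overlaps them into roughly 0.2 seconds. Blocking calls such as time.sleep or synchronous ORM queries would not overlap this way.

```python
import asyncio
import time


async def fake_io(name, seconds):
    # Stand-in for a non-blocking I/O call (HTTP request, async DB query, ...)
    await asyncio.sleep(seconds)
    return name


async def sequential():
    # Each wait starts only after the previous one finishes
    return [await fake_io(name, 0.2) for name in ("users", "orders", "stock")]


async def concurrent():
    # gather() runs the three waits at the same time on one event loop
    return await asyncio.gather(*(fake_io(name, 0.2) for name in ("users", "orders", "stock")))


for runner in (sequential, concurrent):
    start = time.perf_counter()
    result = asyncio.run(runner())
    print(runner.__name__, result, f"{time.perf_counter() - start:.2f}s")
```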

Using Django Channels for WebSocket

WebSockets keep a persistent, two-way connection open between the user's browser and the server. Django Channels extends Django to handle not just HTTP but also protocols that require long-lived connections, such as WebSockets, which are crucial for real-time features.

```python
import json

from channels.generic.websocket import AsyncWebsocketConsumer


class NotificationsConsumer(AsyncWebsocketConsumer):
    async def connect(self):
        await self.accept()

    async def disconnect(self, close_code):
        pass

    async def receive(self, text_data=None, bytes_data=None):
        # Echo the "message" field of an incoming JSON text frame back to the client
        text_data_json = json.loads(text_data)
        message = text_data_json['message']
        await self.send(text_data=json.dumps({
            'message': message
        }))
```

Task Queues for Background Jobs

For operations that are resource-intensive or time-consuming, consider using a task queue such as Celery with Django. This approach offloads tasks to a separate worker process, enabling the web server to remain responsive.

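Example of a Celery task:

```python
from celery import shared_task


@shared_task
def process_data(data_id):
    # Runs in a Celery worker process, not in the web request (placeholder for the slow work)
    pass


# In a view: enqueue the job and return immediately
# process_data.delay(data_id)
```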
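The essence of a task queue is that the request handler only enqueues work and returns, while a separate worker processes it. The sketch below shows that split in a single process, using the standard library's queue and threading modules. It is not Celery, which adds a message broker, separate worker processes, retries, and result storage. handle_request and worker are hypothetical names.

```python
import queue
import threading
import time

jobs = queue.Queue()
results = {}


def worker():
    # Background worker: takes jobs off the queue until it sees the None sentinel
    while True:
        data_id = jobs.get()
        if data_id is None:
            jobs.task_done()
            break
        time.sleep(0.05)  # stands in for slow processing
        results[data_id] = f"processed {data_id}"
        jobs.task_done()


def handle_request(data_id):
    # The "view": enqueue and respond at once instead of doing the work inline
    jobs.put(data_id)
    return {"status": "queued", "id": data_id}


thread = threading.Thread(target=worker, daemon=True)
thread.start()
responses = [handle_request(i) for i in range(3)]

jobs.put(None)  # tell the worker to stop after the queued jobs
jobs.join()     # wait until every job (and the sentinel) has been processed
thread.join(timeout=1)
```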

Profiling and Tuning

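It’s vital to profile your asynchronous code to understand where bottlenecks lie. asyncio's debug mode, enabled with asyncio.run(main(), debug=True) or the PYTHONASYNCIODEBUG environment variable, logs any callback or task step that blocks the event loop for longer than loop.slow_callback_duration (0.1 seconds by default). General-purpose profilers such as cProfile still work for CPU-heavy code.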
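This sketch assumes a coroutine that mistakenly calls time.sleep and shows debug mode flagging the blocking step in the asyncio logger. ListHandler and blocking_handler are illustrative names.

```python
import asyncio
import logging
import time


class ListHandler(logging.Handler):
    """Collects log records in memory so they can be inspected."""

    def __init__(self):
        super().__init__()
        self.records = []

    def emit(self, record):
        self.records.append(record)


async def blocking_handler():
    # Deliberate bug: time.sleep blocks the event loop (await asyncio.sleep would not)
    time.sleep(0.2)


handler = ListHandler()
logging.getLogger("asyncio").addHandler(handler)

# In debug mode the loop warns about any step that runs longer than
# loop.slow_callback_duration (0.1 s by default)
asyncio.run(blocking_handler(), debug=True)

slow_warnings = [r.getMessage() for r in handler.records if "took" in r.getMessage()]
print(slow_warnings)
```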

Conclusion

Implementing asynchronous techniques and handling concurrency properly can significantly improve the responsiveness and scalability of your Django Rest Framework applications. By judiciously applying these methods, you can ensure that your applications can handle high loads and provide a smoother user experience. Whether you are using async views, Django Channels, or integrating with a task queue like Celery, each approach comes with its own set of benefits tailored to different scenarios within your web applications.

Profiling and Monitoring DRF Applications

Profiling and monitoring are critical components of maintaining and improving the performance of web applications. In Django Rest Framework (DRF), effective profiling helps developers identify performance bottlenecks, while monitoring ensures that applications run smoothly under various conditions. This section discusses techniques and tools useful for profiling and monitoring DRF applications.

Profiling Tools for Django

Profiling measures the resources your application uses (time, queries, memory) so that you can pinpoint which parts of your code cause slowdowns. Here are some tools and techniques to consider:

Django Debug Toolbar

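Django Debug Toolbar is an essential tool for development. It adds a debugging panel to HTML responses that shows SQL queries, request data, headers, and much more. Because the panel renders inside HTML pages, with DRF you see it when browsing endpoints through the Browsable API rather than on raw JSON responses.

To install it:

```shell
pip install django-debug-toolbar
```

Configuration is straightforward:

```python
# settings.py (development only)
if DEBUG:
    INSTALLED_APPS += ['debug_toolbar']
    # Simplest form; the toolbar docs recommend moving this as early in MIDDLEWARE as possible,
    # after any middleware that encodes the response (such as GZipMiddleware)
    MIDDLEWARE += ['debug_toolbar.middleware.DebugToolbarMiddleware']
    INTERNAL_IPS = ['127.0.0.1']
```

You also need to include the toolbar's URLs in your root URLconf, for example path('__debug__/', include('debug_toolbar.urls')).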

Django Silk

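Django Silk is a profiling tool that intercepts and stores HTTP requests and database queries, and it provides a rich interface for inspecting their performance. Because Silk records every request in your database, it adds overhead of its own, which makes it best suited to development and staging.

To get started, add it to your installed apps and middleware:

```python
# settings.py
INSTALLED_APPS += ['silk']
MIDDLEWARE += ['silk.middleware.SilkyMiddleware']
```

Then add its URLs, for example path('silk/', include('silk.urls', namespace='silk')), and run python manage.py migrate silk to set up its data tables.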

Django Performance and Profiling

You can also use lower-level tools such as cProfile to profile your Django application. cProfile is a built-in Python module that can profile any Python code, including Django views.

Example usage:

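```python
import cProfile
import io
import pstats

from django.http import HttpResponse


def profile_view(request):
    pr = cProfile.Profile()
    pr.enable()
    # ... your view logic here ...
    pr.disable()

    s = io.StringIO()
    ps = pstats.Stats(pr, stream=s).sort_stats('cumulative')
    ps.print_stats(20)  # top 20 entries by cumulative time
    # Debugging aid only: never expose profiler output in production
    return HttpResponse(s.getvalue(), content_type='text/plain')
```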
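To try cProfile outside Django, this sketch profiles a deliberately wasteful, hypothetical function with Profile.runcall() and prints the top entries sorted by cumulative time. The same pstats calls produce the report in the view above.

```python
import cProfile
import io
import pstats


def slow_sum(n):
    # Deliberately wasteful: builds a throwaway list on every iteration
    total = 0
    for i in range(n):
        total += sum(list(range(i % 100)))
    return total


def profile(func, *args):
    """Run func under cProfile and return (result, report text)."""
    profiler = cProfile.Profile()
    result = profiler.runcall(func, *args)
    out = io.StringIO()
    pstats.Stats(profiler, stream=out).sort_stats("cumulative").print_stats(10)
    return result, out.getvalue()


result, report = profile(slow_sum, 20_000)
print(report)
```

In the report, the list and sum built-ins show up as the hot spots called from slow_sum. This is the kind of detail that points at the line worth refactoring.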

Monitoring Techniques

Keeping track of your application's health in production is vital. Here’s how you can monitor your DRF applications:

Logging

Proper logging can provide insights into what your application is doing and help identify issues in real-time. Configure Django's logging to capture warnings and errors, and consider sending logs to a centralized logging service for better analysis.

Example configuration:

```python
# settings.py
LOGGING = {
    'version': 1,
    'disable_existing_loggers': False,
    'handlers': {
        'file': {
            'level': 'WARNING',
            'class': 'logging.FileHandler',
            'filename': '/path/to/django/debug.log',
        },
    },
    'loggers': {
        'django': {
            'handlers': ['file'],
            # WARNING keeps production logs focused on problems; DEBUG is very verbose
            'level': 'WARNING',
            'propagate': True,
        },
    },
}
```
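Logs become more useful for performance work when they record slow requests. Below is a minimal sketch of a hypothetical Django-style middleware: a class that takes get_response and implements __call__, which is the pattern Django's middleware uses. It logs any request slower than an assumed 0.5-second threshold. To use it, you would add its dotted path to MIDDLEWARE and route the myapp.performance logger (also a hypothetical name) to a handler in LOGGING.

```python
import logging
import time

logger = logging.getLogger("myapp.performance")  # hypothetical logger name


class SlowRequestLoggingMiddleware:
    """Logs requests that take longer than threshold_seconds (hypothetical example)."""

    threshold_seconds = 0.5  # assumed latency budget; tune for your API

    def __init__(self, get_response):
        self.get_response = get_response  # the next middleware or the view

    def __call__(self, request):
        start = time.perf_counter()
        response = self.get_response(request)
        elapsed = time.perf_counter() - start
        if elapsed >= self.threshold_seconds:
            logger.warning(
                "Slow request %s %s took %.3fs", request.method, request.path, elapsed
            )
        return response
```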

Performance Monitoring Tools

Integrate Django with an application performance monitoring (APM) tool like New Relic, Datadog, or Sentry. These tools help in visualizing requests per second, response times, error rates, and more in real-time.

When setting up an APM tool, make sure it's configured to report detailed information specific to Django. This often involves adding a middleware and setting up necessary environment variables.

Health Checks

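Implement health check endpoints to continuously validate the operational status of your application and its external dependencies. The django-health-check package can help set this up. Add "health_check" and the checks you need (for example, "health_check.db" and "health_check.cache") to INSTALLED_APPS, then route its URLs:

```python
# urls.py
from django.urls import include, path

from health_check import urls as health_urls

urlpatterns = [
    path('health/', include(health_urls)),
]
```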

Monitoring and profiling are integral to the success of any production application. By effectively using these tools and strategies, you can ensure that your DRF applications not only perform well but are also maintainable and scalable in the long run.

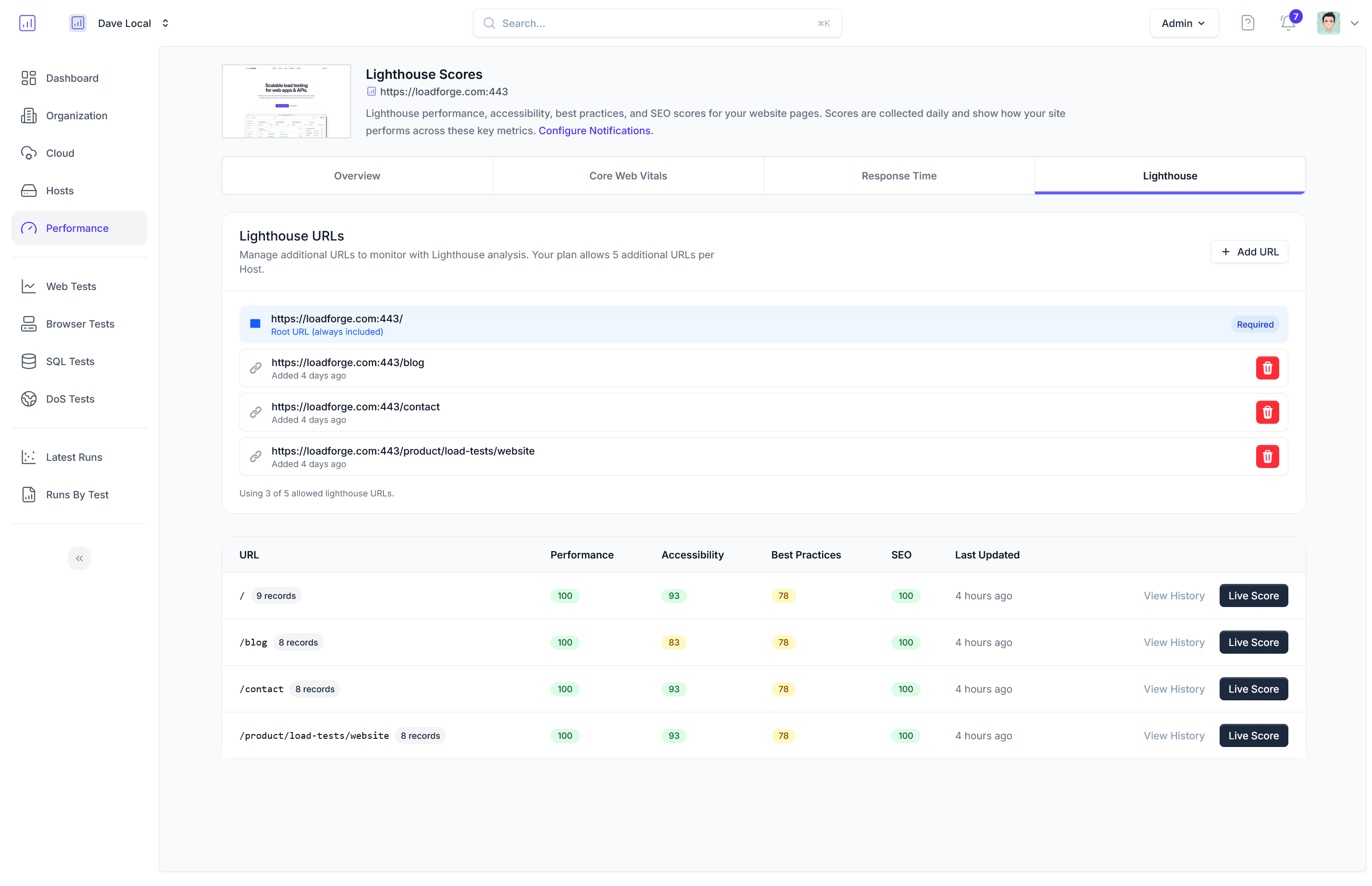

Load Testing with LoadForge

Load testing is a crucial component of performance tuning for any web application, and Django REST Framework (DRF) APIs are no exception. By simulating traffic and performing load tests, developers can identify potential scalability issues and performance limitations that might not be evident during development or in lower-traffic conditions. In this section, we introduce LoadForge, a versatile load testing solution, and describe how to implement it to test the performance of Django APIs.

Why Use LoadForge for Django API Testing?

LoadForge provides a robust and user-friendly platform for creating and conducting load tests. It allows teams to simulate thousands of users interacting with your API, offering insights into how the system behaves under stress. This can be critical for ensuring your application can handle high traffic events without degrading performance.

Steps to Load Test Your DRF Application with LoadForge

1. Set Up Your LoadForge Account

- Sign up or log in to your LoadForge account.

- Prepare your testing environment by ensuring your Django API is accessible from LoadForge.

2. Define Test Scripts

LoadForge uses custom scripts to simulate user behavior. Here’s a basic example of what a LoadForge test script might look like for a DRF application:

```python
from locust import HttpUser, task, between


class QuickstartUser(HttpUser):
    wait_time = between(1, 5)  # each simulated user waits 1 to 5 seconds between tasks

    @task
    def index_page(self):
        self.client.get("/api/v1/resource", auth=('user', 'pass'))
```

- This script defines a single user class that makes GET requests to /api/v1/resource.

- Adjust the endpoint, add other HTTP methods, and modify authentication details as needed for your specific API.

3. Configure Test Parameters

- On your LoadForge dashboard, specify the number of users and spawn rate to simulate different levels of traffic.

- Set the duration of the test to get a comprehensive view over time.

4. Run Your Tests

- Execute the test script.

- Monitor the performance while the test runs to catch any immediate issues.

5. Analyze the Results

After the test completes, LoadForge provides detailed results that help identify bottlenecks. Key metrics to watch include:

- Response Times: Average and maximum response times help determine API responsiveness.

- Request Rate: Insights into throughput capabilities of your API.

- Error Rates: High error rates might hint at stability issues under load.
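LoadForge computes these figures for you, but it can help to see how they derive from raw request data. The summarize function below is a hypothetical helper. It takes (latency in milliseconds, HTTP status) pairs collected over a known duration, uses the nearest-rank method for the 95th percentile, and counts any status of 400 or above as an error. Both of those choices are assumptions you may want to change.

```python
import math
from statistics import mean


def summarize(samples, duration_seconds):
    """Summarize (latency_ms, status_code) samples from a load test (hypothetical helper)."""
    latencies = sorted(latency for latency, _ in samples)
    errors = sum(1 for _, status in samples if status >= 400)
    p95_index = math.ceil(len(latencies) * 95 / 100) - 1  # nearest-rank percentile
    return {
        "avg_ms": mean(latencies),
        "p95_ms": latencies[p95_index],
        "max_ms": latencies[-1],
        "requests_per_second": len(samples) / duration_seconds,
        "error_rate": errors / len(samples),
    }


# 20 requests in 10 seconds: mostly fast, one slow success, one very slow server error
samples = [(100, 200)] * 18 + [(250, 200), (900, 500)]
print(summarize(samples, duration_seconds=10))
```

The average (147.5 ms) hides the 900 ms outlier that the maximum exposes. This is why percentiles and maxima are worth watching alongside the mean.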

Tips for Effective Load Testing with LoadForge

- Gradual Increase in Load: Start with a low number of virtual users and gradually increase to see how your API handles transitions from low to high traffic.

- Realistic User Behavior: Simulate realistic user interactions with your API by replicating actual request patterns and behaviors observed in production.

- Regular Testing: Incorporate load testing into regular performance review cycles, especially before major releases or updates.

Conclusion

Load testing your Django REST Framework API with LoadForge can significantly aid in preparing your application for real-world usage conditions, helping ensure performance is maintained at scale. By following these steps and tips, developers can effectively use LoadForge to reveal important insights about their API's scalability and robustness, leading to a more resilient application.

Implementing Code Optimization and Refactoring

In the realm of Django Rest Framework (DRF), writing cleaner, more efficient code isn't just about keeping your codebase manageable; it's fundamental to enhancing application performance and maintainability. As your API scales, the importance of code optimization and systematic refactoring cannot be overstated. Below, we delve into practical tips and methodologies to refine your DRF applications.

1. Embrace Pythonic Code Principles

The Python community encourages writing code that is not only functional but also readable and elegant. Pythonic principles advocate for simplicity and directness in code, which often translates into performance improvements. Here are some Pythonic practices to consider:

- Use list comprehensions and generator expressions: These provide a concise way to build lists or iterate over elements. They are more readable than traditional loops, list comprehensions are usually somewhat faster than an equivalent append loop, and generator expressions are more memory efficient because they produce items lazily.

```python
# Loop-and-append version (meets_condition is a placeholder predicate)
filtered_data = []
for item in data:
    if meets_condition(item):
        filtered_data.append(item)

# Pythonic way using a list comprehension
filtered_data = [item for item in data if meets_condition(item)]
```

- Leverage built-in functions and libraries: Python and DRF come with a multitude of optimized built-in functions and libraries. Using these reduces the need for custom, potentially less efficient implementations.
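A quick way to see the memory difference between a list comprehension and a generator expression is sys.getsizeof(). It reports the size of the container object itself, not of the integers inside it. The list grows with the number of items, while the generator stays small because it produces values on demand. Both give the same sum when consumed once.

```python
import sys

n = 100_000
as_list = [i * i for i in range(n)]       # every element is built up front
as_generator = (i * i for i in range(n))  # elements are produced one at a time

print("list:", sys.getsizeof(as_list), "bytes")
print("generator:", sys.getsizeof(as_generator), "bytes")

# A generator can only be consumed once; sum() exhausts it
squares_total = sum(as_generator)
```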

2. Refactor Using DRY Principles

Don’t Repeat Yourself (DRY) is a core tenet in software development. Repeated code can lead to larger codebases, which are harder to maintain and more prone to bugs. In DRF, this might mean:

- Abstracting common functionality into base classes or utility functions.

- Using mixins to share behavior across multiple DRF views or serializers.

3. Optimize Database Queries

Inefficient database queries can severely degrade your API's performance. Django's ORM is powerful, but misusing it can lead to costly operations:

- Use select_related and prefetch_related wisely: These queryset methods help prevent N+1 query problems by fetching related objects in fewer database queries.

```python
# Using select_related to optimize foreign key access
queryset = Book.objects.select_related('author').all()
```

- Use values() and values_list() when full model instances aren't needed: They return dictionaries or tuples and select only the columns you ask for, for example Book.objects.values_list('id', 'title'). This saves memory and the cost of constructing model objects.

4. Lean on Effective Debugging and Profiling Tools

Identifying areas in your code that need optimization is pivotal. Use Django's profiling tools or Python modules like cProfile to analyze your API’s performance. This helps in pinpointing heavy function calls or slow execution blocks that are ripe for refactoring.

5. Regular Code Reviews

Encourage regular code reviews as part of your development cycle. Peer reviews can uncover inefficient code practices, logical errors, or areas where refactoring could introduce performance benefits. Also, leveraging collective knowledge within your team often leads to discovering new, more efficient coding approaches.

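6. Unit Testing and Continuous Integration (CI)

Refactoring without a proper testing framework is risky, because it can introduce bugs into previously stable code. Maintain a robust suite of unit tests, and use CI tools to ensure that changes pass all tests before they are merged into the main codebase. For performance-oriented refactors, Django's assertNumQueries() lets a test pin the number of queries a code path may run, so a regression back to N+1 behavior fails the build:

```python
from django.test import TestCase

from myapp.models import Author, Book  # assumes the Author/Book models shown earlier


class AuthorQueryTestCase(TestCase):
    def setUp(self):
        author = Author.objects.create(name="John Doe")
        Book.objects.create(title="Django for Professionals", author=author)

    def test_prefetch_uses_two_queries(self):
        with self.assertNumQueries(2):  # one query for authors, one for all their books
            titles = []
            for author in Author.objects.prefetch_related("book_set"):
                titles.extend(book.title for book in author.book_set.all())
        self.assertEqual(titles, ["Django for Professionals"])
```

7. Follow Up-to-Date DRF and Django Releases

Keep your DRF and Django versions up to date. New releases often come with optimizations and features that can improve performance. Staying current also ensures better security and support.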

By refining code quality and continually adapting refactoring practices, Django developers can substantially uplift both the performance and maintainability of DRF applications. This commitment to code quality not only yields faster response times but also makes the API more robust and easier to manage as it scales.

Security Considerations and Performance

In the world of web APIs, security cannot be an afterthought; it needs to be integrated into the application architecture without detracting from performance. In DRF (Django Rest Framework), marrying security measures with performance optimization requires a strategic approach to ensure that your applications are both secure and efficient.

Balancing Security and Performance

Security features often introduce additional processing, which can potentially slow down your API responses. For instance, encrypting data, validating tokens, and checking permissions are vital tasks that add overhead to your API requests.

Strategies for Optimizing Security

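- Efficient Authentication Mechanisms:

  - Use token-based authentication such as JWT. A signed JWT can be verified cryptographically without looking the token up in the database, so the server does not need to store session or token state.

  - Cache token lookups to reduce database hits each time a token is checked, for example when using DRF's database-backed TokenAuthentication:

```python
import hashlib

from django.core.cache import cache
from rest_framework.authentication import TokenAuthentication


class CachedTokenAuthentication(TokenAuthentication):
    cache_timeout = 300  # seconds; shorter means revoked tokens stop working sooner

    def authenticate_credentials(self, key):
        # Hash the raw token so secrets never appear in cache keys
        cache_key = "drf-token:" + hashlib.sha256(key.encode()).hexdigest()
        cached = cache.get(cache_key)
        if cached is not None:
            return cached  # (user, token) from an earlier successful lookup
        # Raises AuthenticationFailed for unknown tokens or inactive users
        user, token = super().authenticate_credentials(key)
        cache.set(cache_key, (user, token), timeout=self.cache_timeout)
        return (user, token)
```

  Trade-off: until a cache entry expires, a deleted token or a deactivated user is still accepted, so keep the timeout short or delete the entry when a token is revoked.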

- Selective Field Encryption:

  - Encrypt only sensitive data fields instead of the entire payload, which reduces encryption and decryption time.

- Reducing Permissions Overhead:

  - Use an efficient permission-checking scheme, or cache frequently accessed permissions to avoid repeated database queries.

- Using Advanced Security Tools:

  - Prefer well-maintained, Django-specific security packages and DRF's built-in features (such as its throttling classes) over ad hoc code, and measure their overhead under load like any other dependency.
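Django's ModelBackend already caches a user's permissions on the user object, and that cache lasts for one request. To skip the lookup on later requests as well, you can cache results across requests. The sketch below uses functools.lru_cache with a hypothetical load_permissions_from_db function that only records its calls. lru_cache is per process and never expires entries, so in a multi-process deployment you would more likely use the shared Django cache with a timeout and clear entries when roles change.

```python
from functools import lru_cache

db_lookups = []  # records simulated database hits


def load_permissions_from_db(user_id):
    # Hypothetical stand-in for a permissions query joining users, groups and permissions
    db_lookups.append(user_id)
    if user_id == 1:
        return frozenset({"orders.view", "orders.change"})
    return frozenset({"orders.view"})


@lru_cache(maxsize=1024)
def get_permissions(user_id):
    # Cached per process: repeated checks for the same user skip the lookup
    return load_permissions_from_db(user_id)


def has_permission(user_id, perm):
    return perm in get_permissions(user_id)


# Call get_permissions.cache_clear() whenever roles or group memberships change
```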

Performance-friendly Security Practices

- Asynchronous Security Tasks: Where possible, handle time-consuming security tasks (like log analysis and threat detection) asynchronously.

- Database Security Indices: Just as with your application data, ensure that security-related queries leverage database indices to speed up lookups.

Testing Security with Performance

Before deploying enhancements to live environments, security features should be thoroughly load tested. This helps in identifying potential performance bottlenecks introduced by the security measures.

Load Testing with LoadForge:

You can utilize LoadForge to simulate various authenticated and unauthenticated API requests to measure the impact of security enhancements on API performance. In your load test scripts, you can imitate different security scenarios to ensure that these features work seamlessly under stress and at scale:

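```python
from locust import HttpUser, between, task


class WebsiteUser(HttpUser):
    host = "https://api.example.com"
    wait_time = between(5, 15)  # seconds; replaces the old min_wait/max_wait (ms) attributes

    def on_start(self):
        # Log in once per simulated user; the token endpoint and "access" field are assumptions
        response = self.client.post("/api/token/", json={"username": "user", "password": "pass"})
        self.token = response.json().get("access", "")

    @task(3)
    def secure_data(self):
        self.client.get("/api/secure-data/", headers={"Authorization": f"Bearer {self.token}"})

    @task(1)
    def public_data(self):
        # Unauthenticated baseline for comparing authentication overhead (hypothetical endpoint)
        self.client.get("/api/public-data/")
```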

Conclusion

Optimal DRF application security requires meticulous planning to balance security measures and performance impacts. Effective implementation and robust testing ensure that your API remains secure and responsive, safeguarding both your data and your user experience.

Conclusion and Best Practices

Throughout this guide, we have explored various strategies aimed at boosting the performance of Django Rest Framework (DRF) applications. Given the growing demand for efficient, scalable web applications, understanding and applying these performance tips is vital for any developer working with Django APIs.

Key Points Recap

- Database Optimization: Utilizing efficient querying techniques, proper indexing, and optimizing ORM usage ensures minimized database load and quicker response times.

- Caching Mechanisms: We discussed implementing Django’s cache framework to store precomputed responses, reducing the number of expensive database hits and speeding up common requests.

- Data Serialization: Choosing the right serialization methods can drastically reduce overhead. We emphasized model serialization optimizations and using lighter custom serializers for enhanced performance.

- Concurrency and Asynchronous Techniques: Leveraging Django’s async views and other concurrency methods helps in handling more requests simultaneously, significantly boosting application responsiveness.

- Profiling and Monitoring: Regular use of profiling tools to identify bottlenecks and continual monitoring of performance metrics is essential for maintaining a healthy DRF application.

- Load Testing with LoadForge: We illustrated how LoadForge can be used to simulate different traffic scenarios, which helps in identifying scalability challenges and ensuring your API can handle real-world usage.

- Code Optimization and Refactoring: Continuously refactoring and adopting cleaner, more efficient code practices not only improves performance but also enhances the maintainability of the application.

- Security and Performance: Implementing security without compromising performance requires a balanced approach, ensuring that security measures do not negatively impact the user experience.

Best Practices for Maintaining and Improving Performance

To keep your Django APIs performing at their best, adhere to the following best practices:

- Regularly Update Dependencies: Keep Django and DRF updated along with any third-party packages to benefit from the latest performance optimizations and features.

- Employ Performance Testing Routines: Integrate regular load testing sessions using LoadForge to anticipate and plan for potential scalability issues.

- Monitor and Analyze: Use monitoring tools to keep a close eye on your application’s performance in real-time. Analyze logs and metrics frequently to preemptively address issues.

- Database Maintenance: Periodically review and optimize your database schema. Use database profiling tools to find and optimize slow queries.

- Refine Caching Strategies: Evaluate and update your caching strategies based on usage patterns and data mutability.

- Optimize Data Structures and Algorithms: Always choose the most efficient data structures and algorithms for your application’s needs. Complex operations should be streamlined or offloaded.

- Use Asynchronous Features Where They Help: For I/O-bound work, such as calls to external services, Django’s async views can increase throughput and reduce latency. CPU-bound work gains nothing from async and is better offloaded to a task queue.

- Security Audits: Regularly perform security audits to ensure that no performance optimization undermines security protocols.

Security Audits: Regularly perform security audits to ensure that no performance optimization undermines security protocols.

By implementing these strategies and continuously monitoring their impact, you can ensure that your Django APIs remain robust, responsive, and scalable. This proactive approach to performance and maintenance will set your applications apart in today’s dynamic and demanding tech landscape.