Introduction

In the rapidly evolving landscape of web technologies, ensuring your website can efficiently handle high traffic is crucial. One of the key strategies to achieve this is through load balancing, a technique that distributes incoming network traffic across multiple servers. This not only enhances the reliability and availability of your website but also optimizes resource utilization and improves user experience. One of the most powerful and flexible tools for implementing load balancing is NGINX.

Why Choose NGINX for Load Balancing?

NGINX, initially released as a web server, has rapidly gained a reputation for its high performance, stability, rich feature set, and simple configuration. Here are some core reasons why NGINX stands out as an excellent choice for load balancing:

- High Performance and Low Resource Usage: NGINX's architecture, based on asynchronous, event-driven handling, allows it to serve a vast number of simultaneous connections with minimal resource consumption.

- Scalability: NGINX can effortlessly handle thousands of concurrent connections, making it suitable for scaling both vertically (on more powerful hardware) and horizontally (adding more servers).

- Flexibility: With support for various load balancing algorithms including Round Robin, Least Connections, and IP Hash, NGINX provides the flexibility to tailor the load balancing strategy to your specific needs.

- Advanced Features: NGINX offers SSL/TLS termination, passive health checks, IP-based session persistence, and more. Active health checks and cookie-based session persistence are available in the commercial NGINX Plus edition.

- Ease of Use: Despite its powerful capabilities, NGINX is known for its user-friendly configuration syntax, which simplifies the setup and maintenance processes.

Key Load Balancing Concepts

Before diving into the configuration, it’s essential to understand some fundamental load balancing concepts that will help you make informed decisions about your setup.

Load Balancing Algorithms

- Round Robin: Distributes client requests in a circular order, ensuring each server in the pool gets an equal share of traffic.

- Least Connections: Directs traffic to the server with the least number of active connections, helping to balance workloads more evenly across servers with varying capabilities.

- IP Hash: Uses the client’s IP address as a hashing key to consistently route requests from the same client to the same server, facilitating session persistence.
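To make the three algorithms concrete, here is a minimal Python sketch of the selection logic. It is an illustration only, not NGINX's implementation: the server names are hypothetical, and the `ip_hash` function hashes the full address with MD5 purely for simplicity.

```python
import hashlib
from itertools import cycle

SERVERS = ["backend1", "backend2", "backend3"]  # hypothetical pool


def round_robin(servers):
    """Return an iterator that yields servers in a repeating circular order."""
    return cycle(servers)


def least_connections(active):
    """Pick the server with the fewest active connections.

    `active` maps server name -> current connection count.
    Ties go to the server listed first.
    """
    return min(active, key=active.get)


def ip_hash(client_ip, servers):
    """Map a client IP to a server with a stable hash (illustrative only)."""
    digest = hashlib.md5(client_ip.encode()).digest()
    return servers[int.from_bytes(digest[:4], "big") % len(servers)]


if __name__ == "__main__":
    rr = round_robin(SERVERS)
    print([next(rr) for _ in range(5)])
    print(least_connections({"backend1": 12, "backend2": 3, "backend3": 7}))
    print(ip_hash("203.0.113.10", SERVERS))
```

Round robin needs no state beyond its position in the list, least connections needs live connection counts, and IP hash needs only the client address. That difference is why IP hash gives session persistence without any shared bookkeeping.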

Upstream Servers

These are the backend servers that handle client requests. A load balancer can distribute requests to multiple upstream servers to ensure high availability and fault tolerance.

Health Checks

Health checks are used to monitor the operational status of upstream servers. If a server is detected to be unhealthy, it is temporarily removed from the pool, preventing traffic from being directed to a potentially downed server.

Session Persistence

Also known as sticky sessions, session persistence ensures that clients are consistently routed to the same backend server for the duration of their session. This is crucial for applications that rely on session-based data storage.

NGINX combines all of these concepts into a single, cohesive solution, making it a compelling choice for anyone looking to implement a robust load balancing strategy for their website.

In the following sections, we will delve deeper into the practical aspects of setting up and configuring NGINX for load balancing, starting with the installation process, moving through basic and advanced configurations, and culminating in performance tuning and security best practices. By the end of this guide, you’ll be well-equipped to leverage NGINX's full potential to maintain a highly available and efficiently balanced web infrastructure.

Installing NGINX

Before we dive into configuring NGINX for load balancing, we first need to install NGINX on your system. This section provides a step-by-step guide on how to install NGINX on various operating systems including Ubuntu, CentOS, and Windows.

Installing NGINX on Ubuntu

- Update Package List:

First, update your package list to ensure you have the latest information about available packages.

sudo apt update

- Install NGINX:

Next, install NGINX using the apt package manager.

sudo apt install nginx

- Start and Enable NGINX:

Finally, start NGINX and ensure it runs on system startup.

sudo systemctl start nginx

sudo systemctl enable nginx

Installing NGINX on CentOS

- Install EPEL Repository:

First, install the EPEL (Extra Packages for Enterprise Linux) repository as NGINX is part of this repository.

sudo yum install epel-release

- Install NGINX:

Next, install NGINX using the yum package manager.

sudo yum install nginx

- Start and Enable NGINX:

Finally, start NGINX and ensure it runs on system startup.

sudo systemctl start nginx

sudo systemctl enable nginx

Installing NGINX on Windows

While NGINX is primarily designed for Unix-based systems, you can still run it on Windows for test environments.

- Download NGINX:

Download the latest stable release of NGINX for Windows from the official NGINX downloads page.

- Unpack the Archive:

Once the download is complete, extract the contents of the zip file to a directory of your choice (e.g., C:\nginx).

- Run NGINX:

Open the Command Prompt and navigate to the NGINX directory. Then, start NGINX by running the following command:

cd C:\nginx

start nginx

Verifying NGINX Installation

After installation, you can verify that NGINX is running by navigating to http://your_server_ip_address/ in your web browser. You should see the default NGINX welcome page if the installation was successful.

Useful Commands

Here are a few useful commands for managing your NGINX installation:

| Command | Description |
| --- | --- |
| sudo systemctl start nginx | Start the NGINX service |
| sudo systemctl stop nginx | Stop the NGINX service |
| sudo systemctl restart nginx | Restart the NGINX service |
| sudo systemctl enable nginx | Enable NGINX to start at boot |
| sudo systemctl status nginx | Check the current status of NGINX |
| nginx -s reload | Reload NGINX configuration without downtime |

With NGINX successfully installed, you're now ready to proceed with the basic configuration. In the next section, we'll guide you through the initial setup and essential configuration options to get NGINX up and running as a web server.

Basic Configuration

In this section, we will cover the initial setup and essential configuration options to get NGINX up and running as a web server. Whether you're a seasoned pro or a novice, these steps will help ensure that your NGINX installation is configured correctly to serve your website efficiently.

Prerequisites

Before we start configuring NGINX, ensure you have:

- Installed NGINX on your system. If not, refer to the "Installing NGINX" section of this guide.

- Basic knowledge of accessing and editing configuration files, typically using a terminal or SSH.

Understanding NGINX Configuration Files

NGINX configurations are generally stored in /etc/nginx on Linux systems and in the directory where NGINX is installed on Windows. The main configuration file is usually named nginx.conf.

The Structure of nginx.conf

Let's start by examining the structure of nginx.conf. The file comprises several key sections:

- Main Context: This includes directives that affect the general operation of the NGINX server.

- HTTP Context: Contains HTTP-specific configurations.

- Server Blocks: Define individual virtual hosts to handle different domains.

- Location Blocks: Specify how to process requests for certain URIs.

Below is a basic example of an nginx.conf file:

user www-data;

worker_processes auto;

events {

worker_connections 1024;

}

http {

include /etc/nginx/mime.types;

default_type application/octet-stream;

sendfile on;

keepalive_timeout 65;

server {

listen 80;

server_name example.com;

location / {

root /var/www/html;

index index.html index.htm;

}

error_page 404 /404.html;

location = /404.html {

root /var/www/html;

}

error_page 500 502 503 504 /50x.html;

location = /50x.html {

root /var/www/html;

}

}

}

Key Configuration Directives

Main Context

- user: Defines which Unix user account will run the worker processes.

- worker_processes: Determines the number of worker processes to handle requests. Set to auto to match the number of CPU cores.

Events Context

- worker_connections: Sets the maximum number of simultaneous connections that can be handled by a worker process.

HTTP Context

- include: Loads additional configuration files.

- default_type: Specifies the MIME type for files for which the MIME type is not determined.

- sendfile: Enables or disables the use of the sendfile(2) system call to transfer files.

- keepalive_timeout: Sets the timeout for keep-alive connections with clients.

Setting Up a Basic Server Block

A server block handles incoming HTTP requests for a specified domain. Here's a breakdown of the essential directives:

- listen: Specifies the port that the server will listen on, typically port 80 for HTTP.

- server_name: Defines the domain name the server block is responsible for.

- location: The location context is used to handle requests for specific URIs.

- root: Sets the root directory for the requests.

- index: Specifies the default index file to serve when a directory is requested.

server {

listen 80;

server_name example.com;

location / {

root /var/www/html;

index index.html index.htm;

}

error_page 404 /404.html;

location = /404.html {

root /var/www/html;

}

error_page 500 502 503 504 /50x.html;

location = /50x.html {

root /var/www/html;

}

}

Testing Your Configuration

Before restarting NGINX, it is essential to test your configuration for any syntax errors. Use the following command:

sudo nginx -t

If the test is successful, reload NGINX to apply the changes:

sudo systemctl reload nginx

Verifying the Setup

To verify that NGINX is serving your web content, open a web browser and navigate to the domain, e.g., http://example.com. You should see the content from /var/www/html.

Conclusion

You've now completed the basic configuration of NGINX. This setup will allow you to serve static web content while providing a foundation for further customization. In subsequent sections, we will delve into more advanced configurations, such as setting up load balancing, SSL termination, and performance tuning.

Configuring NGINX for Load Balancing

Setting up NGINX as a load balancer equips your web infrastructure with enhanced scalability, reliability, and performance. In this section, we'll explore how to configure NGINX to distribute client requests across multiple backend servers, utilizing various load balancing methods including Round Robin, Least Connections, and IP Hash.

Load Balancing Methods

- Round Robin: This is the simplest and default load balancing method. It distributes requests sequentially from the list of servers.

- Least Connections: This method directs traffic to the server with the least number of active connections, which is useful when multiple servers have varying performance capacities.

- IP Hash: This method assigns a client IP address to a specific server. It ensures that clients with the same IP address are always directed to the same server, making it useful for session persistence.

Setting Up NGINX as a Load Balancer

Step 1: Define Upstream Servers

First, define the backend servers in your NGINX configuration file. This is done using the upstream directive.

upstream backend {

server backend1.example.com;

server backend2.example.com;

server backend3.example.com;

}

Step 2: Configure the Load Balancing Method

Simply specifying the servers will default to Round Robin. To use different load balancing methods, add the corresponding directive:

- Round Robin (default, no additional configuration required):

upstream backend {

server backend1.example.com;

server backend2.example.com;

server backend3.example.com;

}

- Least Connections (add the least_conn directive):

upstream backend {

least_conn;

server backend1.example.com;

server backend2.example.com;

server backend3.example.com;

}

- IP Hash (add the ip_hash directive):

upstream backend {

ip_hash;

server backend1.example.com;

server backend2.example.com;

server backend3.example.com;

}

Step 3: Direct Traffic to Upstream Servers

Once the upstream servers are defined and the load balancing method is set, direct traffic to the upstream group in your server block using the proxy_pass directive.

server {

listen 80;

location / {

proxy_pass http://backend;

proxy_set_header Host $host;

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

proxy_set_header X-Forwarded-Proto $scheme;

}

}
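The X-Forwarded-For line above relies on $proxy_add_x_forwarded_for, which appends the connecting peer's address to any X-Forwarded-For header that arrived with the request. The Python sketch below mimics that behaviour so you can see what the backend receives. The function names are hypothetical helpers, not part of NGINX.

```python
def add_x_forwarded_for(incoming_header, remote_addr):
    """Mimic $proxy_add_x_forwarded_for.

    If the request already carries X-Forwarded-For, append the peer
    address after a comma; otherwise the value is just the peer address.
    """
    if incoming_header:
        return f"{incoming_header}, {remote_addr}"
    return remote_addr


def original_client(xff_header):
    """Return the left-most address in the header.

    This is the original client only if every proxy in the chain is
    trusted; a client can send a forged header of its own.
    """
    return xff_header.split(",")[0].strip()


if __name__ == "__main__":
    header = add_x_forwarded_for("203.0.113.5", "198.51.100.7")
    print(header)
    print(original_client(header))
```

Backends that log or rate-limit by client address should read this header rather than the TCP peer address, which will always be the load balancer.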

Additional Configuration Options

To further fine-tune your load balancing setup, consider these additional configurations:

- Failover Options: Specify backup servers that take over if the primary servers are unavailable.

upstream backend {

server backend1.example.com;

server backend2.example.com;

server backend3.example.com backup;

}

- Weight Assignment: Assign weights to servers to influence the load distribution.

upstream backend {

server backend1.example.com weight=3;

server backend2.example.com weight=2;

server backend3.example.com weight=1;

}
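To see how weights of 3, 2, and 1 translate into an actual request order, the sketch below implements a smooth weighted round-robin scheme in Python. This is an assumption about the general technique, offered for intuition only; it is not taken from the NGINX source, and the server names are placeholders.

```python
from collections import Counter


def smooth_weighted_rr(weights, n):
    """Return n picks using smooth weighted round-robin.

    weights: dict of server name -> positive integer weight.
    On every pick each server's running score grows by its weight, the
    highest score wins, and the winner's score drops by the total weight.
    """
    current = {name: 0 for name in weights}
    total = sum(weights.values())
    picks = []
    for _ in range(n):
        for name, weight in weights.items():
            current[name] += weight
        best = max(current, key=current.get)  # first maximum wins ties
        current[best] -= total
        picks.append(best)
    return picks


if __name__ == "__main__":
    order = smooth_weighted_rr({"backend1": 3, "backend2": 2, "backend3": 1}, 6)
    print(order)
    print(Counter(order))
```

Over any six consecutive requests the servers receive 3, 2, and 1 requests respectively, and the heavier server's turns are interleaved rather than sent back to back.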

Summary

NGINX offers a versatile and robust solution for load balancing, capable of implementing various methods to optimize the distribution of client requests across multiple backend servers. By carefully choosing and configuring the appropriate load balancing method, you can enhance the availability, performance, and reliability of your web applications.

In the next sections, we will delve into setting up upstream servers, implementing health checks and monitoring, and many other vital configurations to ensure your load balancer is efficient and robust. Continue reading to get the most out of your NGINX load balancing setup.

Setting Up Upstream Servers

When configuring NGINX as a load balancer, defining your upstream servers—i.e., the backend servers that will handle client requests—is a fundamental step. This section will guide you through configuring multiple backend servers in your NGINX configuration file to effectively distribute the load.

Step 1: Create the Upstream Block

The upstream directive is used to define a cluster of backend servers. This block is placed within the http context of your NGINX configuration file, typically found at /etc/nginx/nginx.conf.

Here is a basic example of an upstream block:

http {

upstream backend_servers {

server backend1.example.com;

server backend2.example.com;

server backend3.example.com;

}

server {

listen 80;

location / {

proxy_pass http://backend_servers;

}

}

}

In this example, backend1.example.com, backend2.example.com, and backend3.example.com are the backend servers. Requests coming to the NGINX server are forwarded to these backend servers.

Step 2: Configuring Load Balancing Methods

NGINX supports different load balancing methods. By default, it uses the Round Robin method. Here’s how you can specify different methods:

- Round Robin (Default):

The default method distributes requests sequentially across all servers in the upstream block.

- Least Connections:

Distributes requests to the server with the least number of active connections.

upstream backend_servers {

least_conn;

server backend1.example.com;

server backend2.example.com;

server backend3.example.com;

}

- IP Hash:

Ensures that requests from a single client always go to the same server, which helps with session persistence.

upstream backend_servers {

ip_hash;

server backend1.example.com;

server backend2.example.com;

server backend3.example.com;

}

Step 3: Define Upstream Servers with Parameters

You can also define additional parameters for your backend servers, such as weights, timeouts, and maximum number of connections.

- Weighted Load Balancing:

Assign different weights to servers which influence the load distribution.

upstream backend_servers {

server backend1.example.com weight=3;

server backend2.example.com weight=1;

server backend3.example.com weight=2;

}

- Failover and Backup Servers:

Define a server as a backup that will only be used when the primary servers are unavailable.

upstream backend_servers {

server backend1.example.com;

server backend2.example.com;

server backend3.example.com backup;

}

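- Timeouts and Max Connections:

The max_fails and fail_timeout parameters control passive failure detection: after max_fails failed attempts within the fail_timeout window, NGINX considers the server unavailable for the next fail_timeout period. The max_conns parameter caps the number of simultaneous connections to a server.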

upstream backend_servers {

server backend1.example.com max_fails=3 fail_timeout=30s;

server backend2.example.com max_conns=100;

server backend3.example.com;

}
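The interaction between max_fails and fail_timeout is easier to follow as a small state machine. The Python sketch below is a simplified model of that behaviour, assuming failures are counted in a sliding window. The class name and bookkeeping are hypothetical and do not mirror NGINX internals.

```python
class PassiveHealth:
    """Simplified model of max_fails / fail_timeout for one server."""

    def __init__(self, max_fails=3, fail_timeout=30.0):
        self.max_fails = max_fails
        self.fail_timeout = fail_timeout
        self.failures = []      # timestamps of recent failed attempts
        self.down_until = 0.0   # server is skipped until this time

    def record_failure(self, now):
        """Register a failed attempt at time `now` (in seconds)."""
        # Forget failures that fell out of the fail_timeout window.
        self.failures = [t for t in self.failures if now - t < self.fail_timeout]
        self.failures.append(now)
        if len(self.failures) >= self.max_fails:
            # Too many failures in the window: take the server out of rotation.
            self.down_until = now + self.fail_timeout
            self.failures.clear()

    def is_available(self, now):
        """Return True if the server may receive requests at time `now`."""
        return now >= self.down_until


if __name__ == "__main__":
    health = PassiveHealth(max_fails=3, fail_timeout=30.0)
    for t in (0.0, 1.0, 2.0):
        health.record_failure(t)
    print(health.is_available(10.0))  # inside the penalty period
    print(health.is_available(32.0))  # penalty period is over
```

Failures that are spread further apart than fail_timeout never accumulate, so an occasional timeout does not remove an otherwise healthy server.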

Step 4: Apply and Test Configuration

After adding your upstream configuration and adjusting it according to your needs, you should test the configuration to ensure it's syntactically correct:

sudo nginx -t

If the test is successful, reload NGINX to apply the changes:

sudo systemctl reload nginx

Conclusion

Configuring upstream servers in NGINX is essential for effective load balancing. You can define servers, specify load balancing methods, and fine-tune server parameters to best meet your requirements. By distributing incoming traffic across multiple backend servers, you enhance the availability, reliability, and scalability of your web application.

## Health Checks and Monitoring

Ensuring high availability is crucial when setting up NGINX as a load balancer. By implementing health checks, you can continuously monitor the status of your backend servers and seamlessly reroute traffic in case of server failures. This section will guide you through setting up health checks in NGINX and how to monitor your backend servers efficiently.

### Configuring Health Checks

Open source NGINX performs passive health checks: it watches real client traffic and, using the max_fails and fail_timeout parameters of the server directive, temporarily stops sending requests to a server that keeps failing. Active health checks, where NGINX sends its own periodic probe requests, use the `health_check` directive, which is available only in NGINX Plus. The directive belongs in the `location` that proxies to the upstream group (not in the `upstream` block), and the group needs a shared memory `zone`. Below is a basic example for your `nginx.conf` file:

```nginx
http {
    upstream backend {
        zone backend 64k;  # shared memory zone, required for active health checks
        server backend1.example.com;
        server backend2.example.com;
        server backend3.example.com;
    }

    server {
        listen 80;

        location / {
            proxy_pass http://backend;
            proxy_set_header Host $host;
            proxy_set_header X-Real-IP $remote_addr;
            proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

            # Enable active health checks (NGINX Plus only)
            health_check interval=5s fails=3 passes=2;
        }
    }
}
```

Explaining the Parameters:

- interval: Specifies the interval between consecutive health checks. Here, it's set to 5 seconds.
- fails: The number of consecutive failed health checks before a server is considered down. Set to 3 in this example.
- passes: The number of consecutive successful health checks before a server is considered up again. Set to 2 in this example.
- Timeouts: health_check has no timeout parameter of its own. Probes follow the proxy timeouts of the enclosing location, such as proxy_connect_timeout and proxy_read_timeout.

Advanced Health Check Options

For more complex scenarios, such as custom health check request URIs or status codes, you can tweak additional parameters:

http {
    upstream backend {
        zone backend 64k;  # shared memory zone, required for active health checks
        server backend1.example.com;
        server backend2.example.com;
    }

    # Define custom health check response match
    match service_ok {
        status 200-299;
    }

    server {
        listen 80;

        location / {
            proxy_pass http://backend;
            proxy_set_header Host $host;
            proxy_set_header X-Real-IP $remote_addr;
            proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

            # Custom health check settings (NGINX Plus only; belongs in the location)
            health_check uri=/health_check.jsp match=service_ok;
        }
    }
}

Monitoring with NGINX Status Module

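To monitor the NGINX load balancer itself, you can enable the stub_status module (ngx_http_stub_status_module). It reports basic connection and request counters for the NGINX instance; it does not report per-backend status. The module must be compiled in, which is the case for most prebuilt packages. Here’s how to set it up: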

http {

server {

listen 80;

location /nginx_status {

stub_status on;

allow 127.0.0.1; # Restrict access to localhost

deny all;

}

}

}

Once configured, requesting http://127.0.0.1/nginx_status from the server itself (the allow rule above blocks all other clients) returns output similar to:

Active connections: 291

server accepts handled requests

144391 144391 310704

Reading: 0 Writing: 12 Waiting: 279
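If you want to feed these counters into a monitoring script, the text is simple to parse. The following Python sketch assumes the four-line layout shown above. The function name and dictionary keys are illustrative choices, not an official client.

```python
import re

SAMPLE = """Active connections: 291
server accepts handled requests
 144391 144391 310704
Reading: 0 Writing: 12 Waiting: 279
"""


def parse_stub_status(text):
    """Parse stub_status output into a dict of integer counters."""
    active = re.search(r"Active connections:\s+(\d+)", text)
    totals = re.search(
        r"^[ \t]*(\d+)[ \t]+(\d+)[ \t]+(\d+)[ \t]*$", text, re.MULTILINE
    )
    states = re.search(
        r"Reading:\s+(\d+)\s+Writing:\s+(\d+)\s+Waiting:\s+(\d+)", text
    )
    if not (active and totals and states):
        raise ValueError("unrecognized stub_status output")
    accepts, handled, requests = (int(v) for v in totals.groups())
    reading, writing, waiting = (int(v) for v in states.groups())
    return {
        "active": int(active.group(1)),
        "accepts": accepts,
        "handled": handled,
        "requests": requests,
        "reading": reading,
        "writing": writing,
        "waiting": waiting,
    }


if __name__ == "__main__":
    print(parse_stub_status(SAMPLE))
```

Two quick checks are worth building on top of this: accepts should equal handled (a gap means connections were dropped, for example because worker_connections was exhausted), and the active count is the sum of the reading, writing, and waiting states.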

Third-Party Monitoring Tools

For more advanced monitoring and analytics, consider integrating third-party tools like Prometheus, Grafana, or ELK (Elasticsearch, Logstash, Kibana) stack. These tools can offer in-depth insights and help you set up alerting mechanisms for anomalies.

Implementing Notifications

Lastly, tying your health checks and monitoring setup with a notification system helps in proactive management. For instance, using tools like PagerDuty, Slack, or custom scripts can trigger alerts if backend server health deteriorates.

By configuring health checks and robust monitoring, you ensure that your NGINX load balancer responds promptly to any backend server issues, maintaining high availability and optimal performance for your website.

Session Persistence

When using NGINX as a load balancer, session persistence (also known as sticky sessions) is crucial for maintaining consistent user experience across multiple backend servers. Session persistence ensures that a user's requests are always directed to the same server, which is particularly important for applications that rely on session data. Without sticky sessions, users might experience inconsistencies or disruption in their interactions with your website.

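NGINX provides several options for implementing sticky sessions. IP Hash is available in open source NGINX; the sticky directive used by the cookie and route methods requires NGINX Plus: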

1. IP Hash

The IP Hash method uses the client's IP address to determine which server will handle the request. This method ensures that requests from the same IP address are always directed to the same server.

To configure IP Hash in your nginx.conf file:

http {

upstream backend {

ip_hash;

server backend1.example.com;

server backend2.example.com;

}

server {

location / {

proxy_pass http://backend;

}

}

}
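One detail worth knowing is that, for IPv4 clients, ip_hash uses only the first three octets of the address as the hashing key (the whole address is used for IPv6). The Python sketch below illustrates the consequence. The CRC32 hash is a stand-in chosen for this example, not the function NGINX uses.

```python
import ipaddress
import zlib

SERVERS = ["backend1.example.com", "backend2.example.com"]


def ip_hash_key(client_ip):
    """Return the bytes used as the hashing key for a client address.

    IPv4: first three octets only; IPv6: the whole address.
    """
    addr = ipaddress.ip_address(client_ip)
    return addr.packed[:3] if addr.version == 4 else addr.packed


def pick_server(client_ip, servers=SERVERS):
    """Choose a server from the key (CRC32 is a stand-in hash, not NGINX's)."""
    return servers[zlib.crc32(ip_hash_key(client_ip)) % len(servers)]


if __name__ == "__main__":
    # Both clients sit in 192.0.2.0/24, so they share a key and a server.
    print(pick_server("192.0.2.10"), pick_server("192.0.2.200"))
```

Because every client in the same /24 network shares a key, a large office or carrier network behind one address block lands on a single backend, which is the uneven-distribution risk to keep in mind with this method.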

2. Sticky Cookie

The Sticky Cookie method (NGINX Plus only) assigns a cookie to each client that records which backend server to use. Because it does not depend on the client's IP address, it keeps working when many clients share one address behind a NAT or proxy, or when a client's address changes mid-session.

To configure Sticky Cookie in your nginx.conf file:

http {

upstream backend {

sticky cookie srv_id expires=1h domain=.example.com path=/;

server backend1.example.com;

server backend2.example.com;

}

server {

location / {

proxy_pass http://backend;

}

}

}

3. Sticky Route

The Sticky Route method (NGINX Plus only) gives each server a route identifier through the route parameter. NGINX then reads a route value from a cookie or the request URI and sends the request to the server with the matching route. This helps maintain the session even if the client's IP address changes.

To configure Sticky Route in your nginx.conf file (this example assumes the backend appends its route to the session ID, for example JSESSIONID=abc123.a):

http {
    # Extract the route suffix from the session cookie
    map $cookie_jsessionid $route_cookie {
        ~.+\.(?P<route>\w+)$ $route;
    }

    # Fall back to a session ID carried in the URI
    map $request_uri $route_uri {
        ~jsessionid=.+\.(?P<route>\w+)$ $route;
    }

    upstream backend {
        server backend1.example.com route=a;
        server backend2.example.com route=b;

        # The first non-empty variable selects the route
        sticky route $route_cookie $route_uri;
    }

    server {
        location / {
            proxy_pass http://backend;
        }
    }
}

Advantages and Considerations

Each method of implementing session persistence has its advantages and potential drawbacks:

- IP Hash: Simple to configure and available in open source NGINX, but might cause uneven distribution if many users are behind the same proxy.

- Sticky Cookie: Independent of the client's IP address and provides consistent stickiness, but requires NGINX Plus and clients that accept cookies.

- Sticky Route: Offers flexibility and robustness, but requires NGINX Plus and a backend that embeds its route in the session identifier.

Best Practices

When using session persistence, consider the following best practices to ensure optimal performance and reliability:

- Monitor Session Distribution: Regularly monitor and ensure that the session distribution among backend servers is balanced to avoid server overload.

- Configure Proper Timeout: Ensure that session stickiness is configured with appropriate expiration settings to handle long-lived sessions efficiently.

- Plan for Failover: Implement strategies to handle server failures gracefully, redirecting sessions to other healthy backend servers without significant user disruption.

By implementing these options, you can maintain session persistence across multiple backend servers, providing a seamless and consistent user experience.

Explore the next section to understand how to set up SSL termination with NGINX.

### SSL Termination with NGINX

When it comes to enhancing the security and performance of your web application, SSL termination with NGINX is a crucial technique. By offloading SSL processing from your backend servers to NGINX, you reduce the computational overhead on your application servers and centralize SSL certificate management, thereby simplifying your infrastructure. In this section, we'll guide you through setting up SSL termination with NGINX.

#### What is SSL Termination?

SSL termination is the process of decrypting SSL/TLS-encrypted traffic at the load balancer before passing unencrypted traffic to the backend servers. This centralizes certificate management and removes the cryptographic workload from the backend servers. Because traffic between NGINX and the backends is then unencrypted, that network segment should be a trusted one.

#### Prerequisites

Before you begin, ensure that you have obtained a valid SSL/TLS certificate and private key from a trusted Certificate Authority (CA). You'll need these files to configure SSL termination.

#### Step-by-Step Guide

- Install OpenSSL (if not already installed):

Depending on your operating system, you might need to install OpenSSL to manage your SSL certificates.

On Ubuntu:

```sh
sudo apt-get update
sudo apt-get install openssl
```

On CentOS:

sudo yum install openssl

- Generate SSL Certificates:

If you haven't obtained an SSL certificate, you can generate a self-signed certificate for testing purposes:

sudo openssl req -x509 -nodes -days 365 -newkey rsa:2048 -keyout /etc/ssl/private/nginx-selfsigned.key -out /etc/ssl/certs/nginx-selfsigned.crt

- Modify NGINX Configuration:

Open your NGINX configuration file (usually located at /etc/nginx/nginx.conf or within the /etc/nginx/sites-available/ directory) and add the following server block:

server {

listen 443 ssl;

server_name yourdomain.com;

ssl_certificate /etc/ssl/certs/nginx-selfsigned.crt;

ssl_certificate_key /etc/ssl/private/nginx-selfsigned.key;

ssl_protocols TLSv1.2 TLSv1.3;

ssl_prefer_server_ciphers on;

ssl_ciphers "ECDHE-ECDSA-AES256-GCM-SHA384:ECDHE-RSA-AES256-GCM-SHA384:DHE-RSA-AES256-GCM-SHA384";

location / {

proxy_pass http://backend_servers;

proxy_set_header Host $host;

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

proxy_set_header X-Forwarded-Proto $scheme;

}

}

Here's a breakdown of the critical directives:

- listen 443 ssl: Instructs NGINX to listen on port 443 for HTTPS traffic.
- server_name: Defines the domain name for the server.
- ssl_certificate and ssl_certificate_key: Specify the paths to your SSL certificate and private key.
- ssl_protocols: Specifies the SSL/TLS protocols to be allowed.
- ssl_ciphers: Defines the ciphers to be used for encryption.
- proxy_pass: Forwards the traffic to the backend servers defined in the upstream block.

- Set Up Upstream Servers:

Define your upstream servers in the configuration file:

upstream backend_servers {

server backend1.example.com;

server backend2.example.com;

}

- Enable HTTP to HTTPS Redirection (Optional):

To ensure all traffic is redirected to HTTPS, add the following server block:

server {

listen 80;

server_name yourdomain.com;

location / {

return 301 https://$host$request_uri;

}

}

- Restart NGINX:

After making these changes, restart NGINX to apply the new configuration:

sudo systemctl restart nginx

Conclusion

Setting up SSL termination with NGINX not only enhances security but also optimizes the performance of your web application by offloading SSL processing from your backend servers. By following the steps outlined above, you can centralize SSL termination, thereby simplifying your infrastructure and improving the overall user experience.

Error Handling and Redirection

When setting up NGINX as a load balancer, managing how errors and redirections are handled is crucial for improving user experience and ensuring fault tolerance. This section will guide you through configuring custom error pages and setting up redirection rules within your NGINX configuration.

Custom Error Pages

Custom error pages help in presenting a more user-friendly interface when clients encounter errors such as 404 (Not Found) or 500 (Internal Server Error). Here's how to set up custom error pages in your NGINX configuration:

- Create Custom Error Pages:

First, design and save your custom error pages (e.g., 404.html, 500.html) in your web root directory or a custom location.

- Configure NGINX to Use Custom Error Pages:

Edit your NGINX configuration file, typically found at /etc/nginx/nginx.conf or within your site's configuration file within /etc/nginx/sites-available/. Add error_page directives under the relevant server block:

server {

listen 80;

server_name yourwebsite.com;

location / {

proxy_pass http://your_backend_servers;

# Other proxy settings

}

error_page 404 /custom_404.html;

error_page 500 502 503 504 /custom_50x.html;

location = /custom_404.html {

root /usr/share/nginx/html;

internal;

}

location = /custom_50x.html {

root /usr/share/nginx/html;

internal;

}

}

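Here, internal ensures that these error pages are only accessible internally and cannot be directly accessed by clients from the internet. Note that error_page applies to error responses returned by the backend only when proxy_intercept_errors on; is set in the proxied location; otherwise NGINX passes backend error responses through unchanged and uses these pages only for errors it generates itself, such as a 502 when no backend is reachable.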

Setting Up Redirection Rules

Redirection is often necessary for migrating content, managing traffic for SEO purposes, or handling application changes. Here are common redirection scenarios and their configurations in NGINX:

- Permanent Redirect (301):

Use a 301 redirect when a URL has been permanently moved. This is useful for SEO as search engines will update their indexes with the new URL.

server {

listen 80;

server_name oldwebsite.com;

location / {

return 301 http://newwebsite.com$request_uri;

}

}

- Temporary Redirect (302):

Use a 302 redirect when the move is temporary and you expect to use the original URL again in the future.

server {

listen 80;

server_name temporarypage.com;

location / {

return 302 http://anotherpage.com$request_uri;

}

}

- Redirect Specific URL Patterns:

You might need to redirect specific pages or paths. Here’s an example of redirecting all /blog traffic to a new domain:

server {

listen 80;

server_name yourwebsite.com;

location /blog {

return 301 http://newblogwebsite.com$request_uri;

}

}

Catch-All Redirection

In some cases, you might want to ensure all traffic to a server is redirected. This might be part of a domain migration:

server {

listen 80 default_server;

server_name _;

location / {

return 301 http://newdomain.com$request_uri;

}

}

Conclusion

Effectively managing error handling and redirections in NGINX not only improves user experience but also aids in maintaining SEO value and ensuring seamless transitions during migrations or restructures. Having custom error pages and well-thought-out redirection rules can significantly mitigate disruptions and enhance your overall website reliability.

With these configurations, you can ensure your NGINX load balancer handles errors gracefully and directs your users precisely where you want them to go.

## Performance Tuning

Optimizing NGINX for performance is crucial to ensure it handles increased traffic seamlessly and efficiently. This section provides tips and techniques for tweaking critical parameters such as buffer sizes, connection limits, and worker processes for maximum performance.

### 1. Adjusting Worker Processes and Connections

NGINX uses worker processes to handle client requests, and each worker can handle multiple connections. The number of worker processes should ideally match the number of CPU cores:

```nginx
worker_processes auto;
```

Additionally, you should configure the maximum number of simultaneous connections each worker can handle. This can be adjusted in the nginx.conf file:

events {

worker_connections 1024; # Adjust based on your system capability

}
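As a rough sizing aid, the upper bound on simultaneous connections is worker_processes multiplied by worker_connections. That limit counts every connection a worker holds, including those to upstream servers, so a load balancer typically spends two connections per proxied client. The sketch below works through that arithmetic; the function name and the halving rule of thumb are illustrative assumptions, not figures NGINX reports.

```python
import os


def estimate_capacity(worker_processes: int, worker_connections: int, proxying: bool = True) -> int:
    """Rough upper bound on concurrent clients (a rule of thumb, not a guarantee)."""
    total = worker_processes * worker_connections
    # A proxied request holds one client-side and one upstream-side connection.
    return total // 2 if proxying else total


# `worker_processes auto;` starts one worker per CPU core.
cores = os.cpu_count() or 1
print(f"{cores} workers x 1024 connections -> ~{estimate_capacity(cores, 1024)} proxied clients")
```

The operating system's open-file limit also caps this number, so treat the result as a starting point for testing rather than a promise.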

### 2. Tuning Buffer Sizes

Buffers play a crucial role in maintaining effective data flow. Adjusting buffer sizes can significantly enhance performance, especially when dealing with large requests or responses.

Client Body Buffer Size

This directive sets the size of the in-memory buffer used to read the client request body. If the body is larger than the buffer, all or part of it is written to a temporary file on disk:

http {

client_body_buffer_size 16K;

}

Client Header Buffer Size

This directive sets the size of the buffer used to read the client request header. Most requests fit in the default 1k, but long cookies or URLs may need more:

http {

client_header_buffer_size 4k;

}

Large Client Header Buffers

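Set the maximum number and size of buffers used for request headers that do not fit in the regular header buffer. A request line that exceeds one buffer is rejected with 414 (Request-URI Too Large), and an oversized header field with 400 (Bad Request):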

http {

large_client_header_buffers 4 8k;

}

### 3. Optimizing Timeouts

Configuring appropriate timeouts can help avoid hanging connections, freeing up resources more efficiently.

Keepalive Timeout

The keepalive_timeout directive sets the timeout for persistent client connections:

http {

keepalive_timeout 65;

}

Client Body and Header Timeouts

Set timeouts (in seconds) for reading the client request body and header. A client that exceeds either one receives a 408 (Request Time-out) response:

http {

client_body_timeout 12;

client_header_timeout 12;

}

### 4. Enabling Gzip Compression

Enabling Gzip compression reduces the size of transferred data, enhancing load times and reducing bandwidth usage:

http {

gzip on;

gzip_disable "msie6";

gzip_vary on;

gzip_proxied any;

gzip_comp_level 6;

gzip_buffers 16 8k;

gzip_http_version 1.1;

gzip_types text/plain text/css application/json application/javascript text/xml application/xml application/xml+rss text/javascript;

}
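To get a feel for what gzip_comp_level trades off, the following sketch compresses a sample payload with Python's gzip module at a few levels. The payload is made up for illustration, and real ratios depend on your content. NGINX uses the same DEFLATE algorithm, but this is not a measurement of NGINX itself.

```python
import gzip

# Hypothetical, repetitive JSON-like payload standing in for a text response.
payload = b'{"id": 1, "name": "example", "tags": ["a", "b", "c"]}\n' * 500


def compressed_size(data: bytes, level: int) -> int:
    """Return the gzip-compressed size of data at the given level (1-9)."""
    return len(gzip.compress(data, compresslevel=level))


for level in (1, 6, 9):
    size = compressed_size(payload, level)
    print(f"level {level}: {len(payload)} -> {size} bytes ({size / len(payload):.1%})")
```

Higher levels cost more CPU per response for progressively smaller gains, which is why a mid-range value such as 6 is a common compromise.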

### 5. Caching Optimization

Caching upstream responses can dramatically reduce the load on your backend servers and improve response times. The following configuration enables a simple on-disk proxy cache. Note that proxy_cache only takes effect in a location that proxies requests with proxy_pass:

    http {
        proxy_cache_path /data/nginx/cache levels=1:2 keys_zone=my_cache:10m max_size=10g inactive=60m use_temp_path=off;

        server {
            location / {
                proxy_pass http://backend;   # upstream group defined elsewhere
                proxy_cache my_cache;
                proxy_cache_valid 200 302 10m;
                proxy_cache_valid 404 1m;
            }
        }
    }
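With levels=1:2, NGINX stores each cached response in a file named after the MD5 hash of the cache key, nested in directories taken from the end of that hash. The sketch below reproduces that layout so you can locate an entry on disk. It assumes the default proxy_cache_key ($scheme$proxy_host$request_uri); the host and URI are hypothetical placeholders.

```python
import hashlib
from pathlib import PurePosixPath


def cache_file_path(cache_root: str, cache_key: str, levels=(1, 2)) -> str:
    """Map a cache key to its on-disk path for a given `levels` setting."""
    digest = hashlib.md5(cache_key.encode()).hexdigest()
    parts, end = [], len(digest)
    # Each level takes the next N hex characters, working backwards from the end.
    for width in levels:
        parts.append(digest[end - width:end])
        end -= width
    return str(PurePosixPath(cache_root, *parts, digest))


# Default key is $scheme$proxy_host$request_uri; these values are placeholders.
key = "http" + "backend" + "/index.html"
print(cache_file_path("/data/nginx/cache", key))
```

If you set a custom proxy_cache_key, pass the expanded value of that key instead.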

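### 6. Fine-Tuning Logging

Verbose logging can consume substantial disk I/O and CPU resources. Buffer access log writes and keep the error log at the warn level or above. The main format referenced here must be defined with log_format, which some packaged nginx.conf files already do: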

http {

access_log /var/log/nginx/access.log main buffer=16k flush=5m;

error_log /var/log/nginx/error.log warn;

}

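### 7. Upstream Keepalive Connections

Keepalive connections to upstream servers avoid a new TCP handshake for every proxied request, which reduces latency. They require HTTP/1.1 and an empty Connection header on the proxied request: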

http {

upstream backend {

server backend1.example.com;

server backend2.example.com;

keepalive 32; # Adjust based on your needs

}

server {

location / {

proxy_pass http://backend;

proxy_http_version 1.1;

proxy_set_header Connection "";

}

}

}

By implementing these performance tuning techniques, you can significantly enhance the efficiency and response times of your NGINX load balancer. Remember to monitor the changes and adjust settings based on your specific requirements and workload.

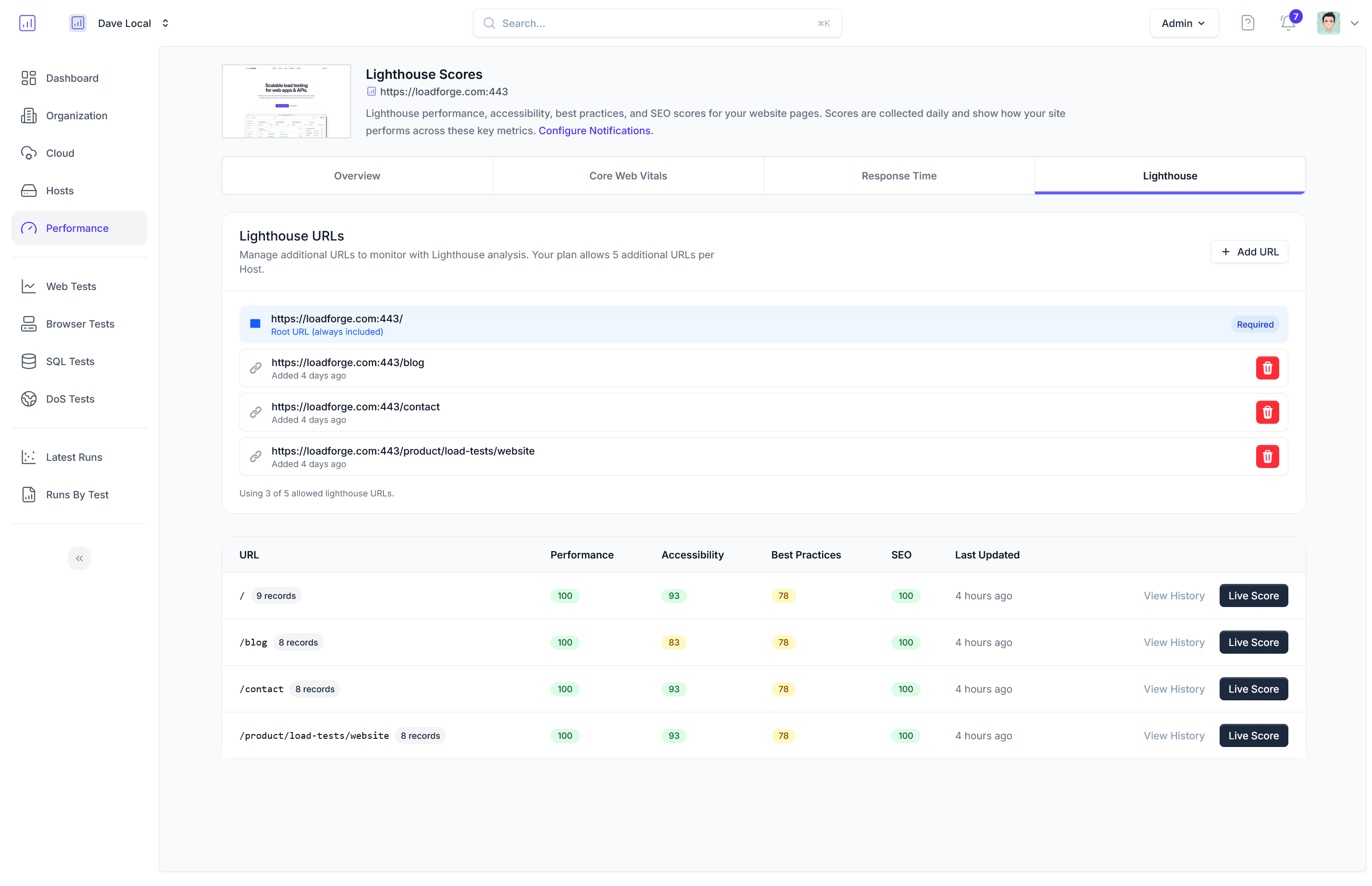

Load Testing with LoadForge

Load testing is an essential part of ensuring your NGINX load balancer is ready to handle high traffic and unexpected spikes in demand. In this section, we introduce LoadForge—a powerful tool designed to help you conduct thorough load testing. We will also provide step-by-step instructions on setting up and interpreting test results to gain valuable insights into your system's performance and stability.

Introduction to LoadForge

LoadForge offers a robust, easy-to-use platform for load testing your website's infrastructure. By simulating large volumes of traffic, LoadForge helps you understand how your NGINX load balancer and backend servers perform under stress. This empowers you to identify and rectify potential bottlenecks before they affect your users.

Setting Up LoadForge

Start by creating a LoadForge account if you haven't already. Once logged in, follow these steps to set up your first load test:

- Create a New Test
  - Navigate to the dashboard and click on "Create New Test."
  - Enter a descriptive name for your test and specify the URL of your NGINX server.

- Configure Test Parameters
  - Define the number of virtual users (VU) to simulate. By default, you might start with 100 VUs.
  - Set the duration of the test using the test duration slider or input field, typically starting with 10-15 minutes.
  - Configure ramp settings to gradually increase the load over the test duration, e.g., starting with 10 users and ramping up to 100 over 10 minutes.

- Specify Load Distribution
  - Choose whether to distribute the load evenly or apply a custom distribution pattern. This helps mimic real-world traffic scenarios more accurately.

- Advanced Settings (Optional)
  - Customize headers, cookies, and authentication settings if needed.
  - Configure scripts for complex user interactions and custom workflows.

- Run the Test
  - Review your settings and click "Run Test" to start the load testing process. LoadForge will begin simulating user traffic and monitor your NGINX load balancer.

Interpreting Test Results

Once your load test completes, LoadForge provides comprehensive analytics to help you interpret the results:

- Overview Dashboard
  - View high-level metrics such as total requests, request rate, and errors. This gives you a quick snapshot of your system's performance under load.

- Response Times
  - Examine detailed response time metrics, including average, median, and percentile response times. Identify patterns that indicate latency and potential bottlenecks.

- Error Analysis
  - Review error rates and types. Understanding specific error codes can help you pinpoint issues in your configuration or backend servers.

- Resource Utilization
  - Monitor CPU, memory, and network usage on your NGINX load balancers and backend servers. High resource consumption may indicate a need for scaling or optimization.

- Visualizing Trends
  - Use graphs and charts to visualize performance trends throughout the duration of the test. Look for spikes or dips in performance to diagnose transient issues.
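Averages can hide slow outliers, which is why percentile response times matter. As an illustration of how those figures relate, this sketch computes the mean, median, and nearest-rank p95 and p99 from a list of response times. The sample values are invented; in practice you would use the numbers exported from your load test.

```python
import math
import statistics


def percentile(samples, pct):
    """Nearest-rank percentile: smallest value with at least pct% of samples at or below it."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


# Hypothetical response times in milliseconds, including two slow outliers.
times_ms = [120, 95, 110, 105, 130, 98, 102, 115, 900, 1500]

print("mean  :", statistics.mean(times_ms))
print("median:", statistics.median(times_ms))
print("p95   :", percentile(times_ms, 95))
print("p99   :", percentile(times_ms, 99))
```

In this sample the mean is pulled far above the median by two slow requests, which is the kind of pattern the percentile view is meant to expose.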

Code Example

For a basic load test, you can script the request and the check you care about. The example below is written in k6 syntax (JavaScript). LoadForge's own test scripts are Locust-based Python, so treat it as an illustration of the request-and-check logic rather than a script to paste into LoadForge unchanged:

    import http from 'k6/http';
    import { check } from 'k6';

    // Each virtual user runs this function repeatedly for the duration of the test.
    export default function () {
      const res = http.get('https://your-nginx-load-balancer.com');
      check(res, {
        'status is 200': (r) => r.status === 200,
      });
    }

This script performs an HTTP GET request to your NGINX load balancer URL and checks that the response status is 200 (OK).

Conclusion

LoadForge is an invaluable tool for load testing your NGINX load balancer. By rigorously testing and understanding your system's performance, you can ensure it meets the demands of your users and maintains high availability. Follow the steps outlined above to get started with LoadForge and interpret your test results effectively, paving the way for a robust, resilient web infrastructure.

Advanced Load Balancing Techniques

In this section, we'll delve into some advanced techniques for load balancing with NGINX. Specifically, we’ll cover weighted load balancing, dynamic reconfiguration of backend servers, and utilizing NGINX Plus features to enhance your load balancing strategy.

Weighted Load Balancing

Weighted load balancing allows you to distribute traffic unevenly across your backend servers. This is particularly useful if certain servers have more resources or better performance characteristics. By assigning weights, NGINX can direct a proportionate amount of traffic to each server based on their capacity.

Configuration Example

To set up weighted load balancing, you need to specify the weight parameter for each server in your upstream configuration:

http {

upstream myapp {

server backend1.example.com weight=3;

server backend2.example.com weight=2;

server backend3.example.com weight=1;

}

server {

location / {

proxy_pass http://myapp;

}

}

}

In this example, out of every six requests, backend1 receives three (50%), backend2 two (about 33%), and backend3 one (about 17%).
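The split follows directly from the weights: each server's share is its weight divided by the total weight (3/6, 2/6, and 1/6). The sketch below simulates a smooth weighted round-robin selection to show that distribution over a batch of requests. It is a simplified model for illustration, not NGINX's source code, and it ignores server failures and connection counts.

```python
from collections import Counter


def smooth_weighted_round_robin(weights, requests):
    """Yield one server name per request using smooth weighted round-robin."""
    current = {name: 0 for name in weights}
    total = sum(weights.values())
    for _ in range(requests):
        # Every server gains its weight; the highest running score is picked...
        for name, weight in weights.items():
            current[name] += weight
        chosen = max(current, key=current.get)
        # ...and then pays back the total, which spreads its picks over time.
        current[chosen] -= total
        yield chosen


weights = {"backend1": 3, "backend2": 2, "backend3": 1}
picks = list(smooth_weighted_round_robin(weights, 600))
for name, count in Counter(picks).most_common():
    print(f"{name}: {count} requests ({count / len(picks):.0%})")
```

The "smooth" variant interleaves servers rather than sending three requests in a row to the heaviest one, which avoids short bursts against a single backend.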

Dynamic Reconfiguration

Dynamic reconfiguration allows you to add or remove backend servers without editing the configuration file and reloading NGINX. This can be particularly useful for managing server pools in a highly dynamic environment, such as an auto-scaling cloud setup.

In open-source NGINX, changes to an upstream block take effect only when the configuration is reloaded (for example with nginx -s reload, which does not drop active connections). To change the server pool at runtime, you can use third-party modules such as nginx-upstream-dynamic-servers or NGINX Plus.

Using NGINX Plus

NGINX Plus simplifies dynamic reconfiguration with its REST API and built-in health monitoring. The examples below assume that the upstream group myapp defines a shared memory zone and that the API is enabled with write access (api write=on) on port 8080:

Adding a Server

    curl -X POST -d '{"server": "backend4.example.com"}' http://localhost:8080/api/4/http/upstreams/myapp/servers

Removing a Server

Servers are removed by their numeric ID, which is returned by a GET request to the same servers endpoint. Here, 2 is an example ID:

    curl -X DELETE 'http://localhost:8080/api/4/http/upstreams/myapp/servers/2'

Utilizing NGINX Plus Features

NGINX Plus offers proprietary features that can significantly enhance your load balancing capabilities:

Active Health Checks

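Active health checks allow NGINX Plus to probe backend servers periodically and remove unhealthy ones from the load balancing rotation automatically. The health_check directive belongs in the location that proxies to the upstream group, and the group needs a shared memory zone:

    http {
        upstream myapp {
            zone myapp 64k;
            server backend1.example.com;
            server backend2.example.com;
            server backend3.example.com;
        }

        server {
            location / {
                proxy_pass http://myapp;
                # Enable active health checks (NGINX Plus only)
                health_check interval=5s fails=3 passes=2;
            }
        }
    }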

Session Persistence (Sticky Sessions)

Sticky sessions, or session persistence, can be crucial for stateful applications. NGINX Plus provides robust mechanisms for maintaining session persistence.

http {

upstream myapp {

server backend1.example.com;

server backend2.example.com;

# Enable session persistence

sticky cookie srv_id expires=1h domain=.example.com path=/;

}

server {

location / {

proxy_pass http://myapp;

}

}

}

Summary

Exploring these advanced load balancing techniques can greatly enhance the efficiency, reliability, and scalability of your web applications. Weighted load balancing allows for effective resource utilization, dynamic reconfiguration ensures seamless scalability, and NGINX Plus features provide robust health checks and session persistence options. Incorporating these advanced techniques will prepare your website for substantial traffic loads and complex deployment scenarios.

Security Best Practices

Implementing robust security measures for your NGINX load balancer is essential to protect your web infrastructure from common vulnerabilities and attacks. This section provides comprehensive guidance on securing your NGINX deployment, ensuring the integrity and availability of your web services.

1. Limit Access with IP Whitelisting

Restricting access to your NGINX server can prevent unauthorized users from reaching your backend servers. Use IP whitelisting to allow only trusted IP addresses.

http {

allow 192.168.1.0/24; # Allow local network

deny all; # Deny everything else

}
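NGINX evaluates allow and deny rules in order and stops at the first match. To illustrate that ordering, the sketch below applies the same two rules to a few client addresses using Python's ipaddress module. The client addresses are hypothetical, and the model covers IPv4 only.

```python
import ipaddress

# Rules mirror the config above; "all" is expressed as 0.0.0.0/0 (IPv4 only in this sketch).
RULES = [
    ("allow", ipaddress.ip_network("192.168.1.0/24")),
    ("deny", ipaddress.ip_network("0.0.0.0/0")),
]


def is_allowed(client_ip: str) -> bool:
    """Return the verdict of the first rule whose network contains the client."""
    address = ipaddress.ip_address(client_ip)
    for action, network in RULES:
        if address in network:
            return action == "allow"
    return True  # No rule matched: access is not restricted.


for ip in ("192.168.1.42", "192.168.2.7", "203.0.113.10"):
    print(ip, "->", "allowed" if is_allowed(ip) else "denied (403)")
```

Because evaluation stops at the first match, placing deny all before the allow line would block every client, including the local network.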

2. Use SSL/TLS Encryption

Encrypt data between clients and the load balancer to protect sensitive information. Implement SSL/TLS using a certificate from a trusted CA.

server {

listen 443 ssl;

server_name yourdomain.com;

ssl_certificate /etc/nginx/ssl/your_certificate.crt;

ssl_certificate_key /etc/nginx/ssl/your_certificate.key;

ssl_protocols TLSv1.2 TLSv1.3;

ssl_ciphers HIGH:!aNULL:!MD5;

# Load balancing configuration

location / {

proxy_pass http://backend;

}

}

3. Implement HTTP Security Headers

Use HTTP security headers to reduce the risk of common vulnerabilities such as XSS, clickjacking, and MIME-type sniffing.

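    server {
        # "always" adds the header to error responses as well as successful ones
        add_header X-Content-Type-Options nosniff always;
        add_header X-Frame-Options DENY always;
        # Legacy header that most current browsers ignore; use a Content-Security-Policy for XSS defense
        add_header X-XSS-Protection "1; mode=block" always;

        # Load balancing configuration
        location / {
            proxy_pass http://backend;
        }
    }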

4. Enable Rate Limiting

To mitigate DDoS attacks and abuse, configure rate limiting to control the number of requests allowed from a single IP address.

http {

limit_req_zone $binary_remote_addr zone=one:10m rate=10r/s;

server {

location / {

limit_req zone=one burst=5;

proxy_pass http://backend;

}

}

}
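To see how rate and burst interact, here is a simplified leaky-bucket model of the settings above (10 requests per second with a burst of 5). It tracks how far a client is ahead of the allowed rate and rejects a request once that excess would exceed the burst. This is an illustrative approximation under those assumptions, not NGINX's implementation, and it does not model the delay applied to queued requests.

```python
class LeakyBucket:
    """Simplified per-client limiter: `rate` requests/second with a `burst` allowance."""

    def __init__(self, rate: int, burst: int):
        self.rate = rate            # requests per second
        self.burst = burst * 1000   # tracked in thousandths of a request
        self.excess = 0
        self.last_ms = None

    def allow(self, now_ms: int) -> bool:
        if self.last_ms is None:    # first request from this client
            self.last_ms = now_ms
            return True
        elapsed = max(0, now_ms - self.last_ms)
        # The bucket drains at `rate` per second; each request adds one unit.
        excess = max(0, self.excess - self.rate * elapsed + 1000)
        if excess > self.burst:
            return False            # would be rejected (503 by default)
        self.excess, self.last_ms = excess, now_ms
        return True


bucket = LeakyBucket(rate=10, burst=5)
accepted = sum(bucket.allow(0) for _ in range(10))
print(f"{accepted} of 10 simultaneous requests accepted")
print("request 100 ms later accepted:", bucket.allow(100))
```

In this model a sudden spike is absorbed up to the burst size, after which one further request is admitted for every 100 ms that passes at 10r/s.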

5. Protect Admin Interfaces

Restrict access to admin interfaces and management endpoints using authentication mechanisms. Basic authentication can add a layer of security.

server {

location /admin {

auth_basic "Administrator's Area";

auth_basic_user_file /etc/nginx/.htpasswd;

proxy_pass http://backend;

}

}

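Generate a .htpasswd file with the htpasswd utility (provided by the apache2-utils package on Ubuntu and httpd-tools on CentOS). The -c flag creates the file and overwrites any existing one, so omit it when adding further users:

    sudo htpasswd -c /etc/nginx/.htpasswd username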

6. Regularly Update NGINX and Modules

Ensure NGINX and its modules are up-to-date with the latest security patches. Regular updates help protect against newly discovered vulnerabilities.

    # For Ubuntu
    sudo apt-get update
    sudo apt-get install --only-upgrade nginx

    # For CentOS
    sudo yum update nginx

7. Disable Unused Modules

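Minimize the attack surface by leaving out NGINX modules you do not use. Statically compiled modules can only be excluded at build time (for example with the --without-http_autoindex_module configure option), while dynamic modules are disabled by removing their load_module lines from the configuration.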

8. Log and Monitor Activity

Continuous monitoring and logging of server activity can help detect and respond to security incidents quickly. Use NGINX logging features to track requests and errors.

http {

log_format main '$remote_addr - $remote_user [$time_local] "$request" '

'$status $body_bytes_sent "$http_referer" '

'"$http_user_agent" "$http_x_forwarded_for"';

access_log /var/log/nginx/access.log main;

error_log /var/log/nginx/error.log warn;

}
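Once requests are logged in this format, a short script can summarize them. The sketch below parses lines in the main format defined above and counts responses by status code. The sample lines are fabricated for illustration, and the regular expression assumes the quoted fields contain no embedded double quotes.

```python
import re
from collections import Counter

# Matches the `main` log_format: addr - user [time] "request" status bytes "referer" "agent" "xff"
LINE = re.compile(
    r'(?P<addr>\S+) - (?P<user>\S+) \[(?P<time>[^\]]+)\] "(?P<request>[^"]*)" '
    r'(?P<status>\d{3}) (?P<bytes>\d+) "(?P<referer>[^"]*)" '
    r'"(?P<agent>[^"]*)" "(?P<xff>[^"]*)"'
)


def count_statuses(lines):
    """Count HTTP status codes across log lines, skipping lines that do not match."""
    counts = Counter()
    for line in lines:
        match = LINE.match(line)
        if match:
            counts[match.group("status")] += 1
    return counts


sample = [
    '203.0.113.5 - - [10/Oct/2024:13:55:36 +0000] "GET / HTTP/1.1" 200 612 "-" "curl/8.0" "-"',
    '203.0.113.9 - - [10/Oct/2024:13:55:37 +0000] "GET /missing HTTP/1.1" 404 153 "-" "curl/8.0" "-"',
]
print(count_statuses(sample))
```

To run it against a real log, pass an open file object instead of the sample list, since the function accepts any iterable of lines. A sudden rise in 4xx or 5xx counts is a useful early signal of probing or backend trouble.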

Conclusion

By following these security best practices, you can significantly enhance the security posture of your NGINX load balancer. Implementing measures such as IP whitelisting, SSL/TLS encryption, HTTP security headers, and rate limiting will protect against a variety of threats while ensuring the reliability and integrity of your web services.

Troubleshooting Common Issues

While NGINX is a robust and reliable load balancer, you may encounter occasional issues that require troubleshooting. This section aims to provide a comprehensive guide to diagnosing and resolving common problems you may face while using NGINX as a load balancer.

1. NGINX Won't Start or Restart

Symptom: NGINX fails to start or restart after configuration changes.

Diagnosis:

- Test the configuration for syntax errors:

    sudo nginx -t

Resolution:

- If the command outputs any errors, correct them in your configuration file.

- Ensure there are no permission issues with the NGINX log files or PID files.

2. Load Balancing Not Distributing Traffic

Symptom: Traffic isn't being evenly distributed among your backend servers.

Diagnosis:

- Verify your load balancing method (Round Robin, Least Connections, IP Hash) is correctly configured in the nginx.conf file.

Example for Round Robin:

upstream backend_servers {

server backend1.example.com;

server backend2.example.com;

}

server {

listen 80;

location / {

proxy_pass http://backend_servers;

}

}

Resolution:

- Ensure all backend servers are operational and reachable from the NGINX server.

- Confirm that the DNS names or IP addresses for backend servers are correct.
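A quick way to confirm reachability from the load balancer host is to attempt a TCP connection to each backend. The sketch below does that with Python's standard library. The hostnames and port 80 in the commented usage line are placeholders taken from the example configuration, so substitute your own.

```python
import socket


def is_reachable(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers DNS failures, refused connections, and timeouts.
        return False


def check_backends(backends):
    """Map each (host, port) pair to its reachability."""
    return {f"{host}:{port}": is_reachable(host, port) for host, port in backends}


# Placeholder backends; replace with the servers from your upstream block, e.g.:
# print(check_backends([("backend1.example.com", 80), ("backend2.example.com", 80)]))
```

A successful TCP connection only shows that the port is open. It does not prove the application behind it is returning healthy responses.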

3. Unresponsive Backend Servers

Symptom: Requests fail or time out because NGINX forwards them to unresponsive backend servers.

Diagnosis:

- Check the health status of your backend servers.

- Look for timeout or connection errors in your NGINX error logs.

View error logs:

sudo tail -f /var/log/nginx/error.log

Resolution:

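- Implement health checks to automatically take unresponsive or unhealthy backend servers out of rotation. Open-source NGINX supports passive checks through the max_fails and fail_timeout server parameters. Active checks with health_check require NGINX Plus and are configured in the proxying location rather than in the upstream block.

Example passive health check configuration (the thresholds are example values):

    upstream backend_servers {
        server backend1.example.com max_fails=3 fail_timeout=30s;
        server backend2.example.com max_fails=3 fail_timeout=30s;
    }

- Ensure backend servers have sufficient resources and are not overloaded.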

4. SSL Termination Issues

Symptom: HTTPS requests are failing or generating security warnings.

Diagnosis:

- Verify the SSL certificates and private keys are correctly referenced in your NGINX configuration.

Resolution:

- Check the paths to your SSL certificate and key:

server {

listen 443 ssl;

server_name yourdomain.com;

ssl_certificate /path/to/ssl_certificate.crt;

ssl_certificate_key /path/to/private_key.key;

location / {

proxy_pass http://backend_servers;

}

}

- Ensure the SSL certificate is not expired and is signed by a trusted Certificate Authority (CA).
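Certificate expiry is easy to check programmatically. The sketch below converts a certificate's notAfter timestamp into days remaining using ssl.cert_time_to_seconds, and includes a helper that fetches the timestamp from a live server. The helper names are illustrative, and the hostname in the commented usage line is the placeholder from the configuration above.

```python
import socket
import ssl
import time
from typing import Optional


def days_until_expiry(not_after: str, now: Optional[float] = None) -> float:
    """Days left before a certificate's notAfter time (negative if already expired)."""
    expires = ssl.cert_time_to_seconds(not_after)
    current = time.time() if now is None else now
    return (expires - current) / 86400


def fetch_not_after(hostname: str, port: int = 443) -> str:
    """Fetch the notAfter field of the certificate presented by hostname:port.

    Raises ssl.SSLCertVerificationError if the certificate is expired or untrusted.
    """
    context = ssl.create_default_context()
    with socket.create_connection((hostname, port), timeout=5) as sock:
        with context.wrap_socket(sock, server_hostname=hostname) as tls:
            return tls.getpeercert()["notAfter"]


# Offline example with fixed timestamps in the format certificates use.
reference = ssl.cert_time_to_seconds("Dec 31 00:00:00 2029 GMT")
print(days_until_expiry("Jan  1 00:00:00 2030 GMT", now=reference))

# Live check (requires network access); "yourdomain.com" is a placeholder:
# print(days_until_expiry(fetch_not_after("yourdomain.com")))
```

Running a check like this on a schedule and alerting when fewer than, say, 30 days remain helps avoid expiry-related outages.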

5. High Latency and Slow Performance

Symptom: High latency and slow response times from your load balancer.

Diagnosis:

- Evaluate NGINX performance metrics such as worker processes, worker connections, and proxy buffering.

Resolution:

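- Adjust worker processes based on the number of CPU cores.

Example:

    worker_processes auto;

- Optimize buffer sizes:

    http {
        proxy_buffer_size 128k;
        proxy_buffers 4 256k;
        proxy_busy_buffers_size 256k;
    }

- Raise the worker connection limit if the error log reports that worker_connections are not enough. The directive belongs in the events block, and 1024 is shown here as an example value:

    events {
        worker_connections 1024;
    }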

6. Session Persistence Not Working

Symptom: Users are experiencing disrupted sessions while accessing your website.

Diagnosis:

- Check your session persistence (sticky) configuration.

Resolution:

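- Configure session persistence in your upstream block. The sticky directive is an NGINX Plus feature and needs a method such as cookie. With open-source NGINX, ip_hash provides a similar effect.

Example for sticky sessions:

    upstream backend_servers {
        server backend1.example.com;
        server backend2.example.com;
        sticky cookie srv_id expires=1h;
    }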

Summary

Effective troubleshooting ensures your NGINX load balancer runs smoothly and efficiently. By following the steps outlined, you can quickly diagnose and resolve common issues, ensuring high availability and optimal performance for your website. Always remember to test changes in a controlled environment before applying them to your production servers.

## Conclusion

In this comprehensive guide, we have walked through the essential steps and considerations for setting up NGINX as a reliable and efficient load balancer for your website. Here's a recap of the key points we covered:

1. **Introduction:**

- NGINX is a robust, flexible, and high-performing solution for load balancing web traffic.

- Key load balancing concepts include distribution methods, health monitoring, and session persistence.

2. **Installing NGINX:**

- Step-by-step installation instructions were provided for Ubuntu, CentOS, and Windows platforms.

3. **Basic Configuration:**

- Initial setup and essential configuration options to run NGINX as a web server.

- Configuration file location and structure.

4. **Configuring NGINX for Load Balancing:**

- Detailed setup for different load balancing methods like Round Robin, Least Connections, and IP Hash.

5. **Setting Up Upstream Servers:**

- Defining multiple backend servers in the NGINX configuration file.

- Example configuration:

<pre><code>
upstream myapp {
    server backend1.example.com;
    server backend2.example.com;
}

server {
    location / {
        proxy_pass http://myapp;
    }
}
</code></pre>

6. **Health Checks and Monitoring:**

- Importance of health checks to ensure high availability.

- Tools and techniques for monitoring backend server status.

7. **Session Persistence:**

- Implementing sticky sessions to ensure users are consistently directed to the same backend server.

- Example using IP Hash:

<pre><code>
upstream myapp {
    ip_hash;
    server backend1.example.com;
    server backend2.example.com;
}
</code></pre>

8. **SSL Termination with NGINX:**

- Offloading SSL processing from backend servers to NGINX.

- Configuring SSL certificates and keys.

9. **Error Handling and Redirection:**

- Setting up custom error pages and redirection rules to enhance user experience and manage errors effectively.

10. **Performance Tuning:**

- Techniques for optimizing NGINX performance.

- Adjusting buffer sizes, connection limits, and worker processes.

11. **Load Testing with LoadForge:**

- Introduction to LoadForge for thorough load testing.

- Step-by-step instructions for setting up and interpreting test results.

12. **Advanced Load Balancing Techniques:**

- Exploring weighted load balancing, dynamic reconfiguration, and NGINX Plus features.

13. **Security Best Practices:**

- Implementing security measures to protect your NGINX load balancer.

- Defense against common vulnerabilities and attacks.

14. **Troubleshooting Common Issues:**

- Diagnosing and resolving common problems with NGINX load balancer configurations.

By following this guide, you should now be equipped with the knowledge and practical skills needed to implement and maintain a high-performance, secure, and reliable load balancing setup using NGINX.

### Additional Resources

For further learning and support, consider the following resources:

- [NGINX Official Documentation](https://nginx.org/en/docs/)

- [LoadForge Documentation](https://loadforge.com/docs)

- [NGINX Community Forums](https://forum.nginx.org/)

- [NGINX Plus Advanced Features](https://www.nginx.com/products/nginx/)

- [Security Guidelines for NGINX](https://www.nginx.com/resources/library/web-server-security-nginx/)

Always keep your NGINX server updated to the latest stable version to take advantage of new features and security patches. Happy load balancing!