Introduction to FastAPI and Load Testing

FastAPI is a modern, high-performance web framework for building APIs with Python 3.7 and newer, using standard Python type hints. Its headline feature is speed. FastAPI builds on Starlette for the web layer (routing, requests, WebSockets, and async support) and on Pydantic for data validation, and this combination places it among the fastest Python web frameworks. That speed, combined with automatic interactive API documentation and a robust design, has quickly made FastAPI a favorite among developers who build scalable, efficient web applications.

However, no matter how efficiently an API is designed and implemented, it might face issues under high load, which can adversely affect the user experience or lead to downtime. That’s where load testing comes into play. Load testing is a type of performance testing that simulates real-world load on any software, application, or website. This allows developers to analyze performance, identify bottlenecks, and ensure stability before the software is deployed to production.

Why Load Test FastAPI?

Here are several key reasons why performing load testing on a FastAPI application is crucial:

- Performance Validation: Ensuring the API performs well under intense traffic conditions.

- Scalability Insights: Understanding how the system will scale as the number of requests increases, and determining the infrastructure needed to support such scaling.

- Reliability Check: Verifying that the application will not crash under heavy load conditions and can handle unexpected spikes in traffic.

- Endurance Testing: Determining if the application can sustain a high level of performance for a prolonged period.

FastAPI's Suitability for Load Testing

FastAPI's design also makes it a convenient subject for load testing:

- Asynchronous Support: FastAPI can serve many simultaneous requests from async endpoints. A load test that simulates concurrent users therefore exercises the same concurrency model the application relies on in production.

- Type Hinting: FastAPI uses type hints to validate requests and to improve editor support and code completion. This helps prevent bugs, and it makes it easy to see exactly what each endpoint expects when you write test scripts.

- Automatic Interactive Documentation: FastAPI generates an OpenAPI schema and serves interactive Swagger UI documentation (at /docs by default). Testers can use it to understand the API's capabilities without digging through the code or requiring extensive documentation from the development team.

These features make FastAPI a convenient target for load testing. Load testing, in turn, shows whether an API built with FastAPI can handle real-world conditions and maintain its performance. In the next sections, we will set up a testing environment, create a simple FastAPI application, and perform a load test using Locust and LoadForge, detailing our processes and findings.

Setting Up Your Environment

To ensure that your load testing scenario runs smoothly, it's crucial to set up your environment correctly. This involves installing all the necessary software components, including FastAPI, Uvicorn (an ASGI server), and Locust, which will be used to simulate user traffic and conduct the test. Let's delve into the step-by-step setup process:

1. Install Python

Load testing with FastAPI and Locust requires Python 3.7 or later. Ensure you have Python installed by checking its version. You can install Python from the official Python website.

python --version

# or

python3 --version

2. Install FastAPI

FastAPI is the web framework the sample application will be built with. Install FastAPI using pip:

pip install fastapi

3. Install Uvicorn

Uvicorn is the ASGI server that runs your FastAPI application. It is fast and lightweight, which matters because the server is part of what you are load testing. Install Uvicorn using pip:

pip install uvicorn

4. Install Locust

Locust is an open-source load testing tool that will simulate users hitting your FastAPI application. It is crucial for defining how each user behaves and generating the test load:

pip install locust

5. Verify Installation

After installing the necessary packages, it's good practice to verify that everything is installed correctly. You can do this by checking the version of each package:

python -c "import fastapi; print(fastapi.__version__)"

uvicorn --version

locust --version
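You may prefer a single check that works regardless of which command-line entry points a package ships. In that case, a short script can query the installed distributions with the standard library's importlib.metadata module, which requires Python 3.8 or later. This is a sketch: the package list simply mirrors the tools installed above, and the file name is up to you (for example, a hypothetical check_env.py).

```python
import sys
from importlib.metadata import PackageNotFoundError, version
from typing import Dict, Iterable, Optional

# Distributions installed in the steps above.
REQUIRED_PACKAGES = ("fastapi", "uvicorn", "locust")


def installed_versions(packages: Iterable[str] = REQUIRED_PACKAGES) -> Dict[str, Optional[str]]:
    """Map each distribution name to its installed version, or None if it is missing."""
    result = {}
    for name in packages:
        try:
            result[name] = version(name)
        except PackageNotFoundError:
            result[name] = None
    return result


if __name__ == "__main__":
    print(f"Python {sys.version.split()[0]}")
    for name, ver in installed_versions().items():
        print(f"{name}: {ver or 'NOT INSTALLED'}")
```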

6. Setting Up Your Project Directory

Organize your workspace by creating a project directory. This directory will house your FastAPI application and the Locustfile:

mkdir fastapi_load_test

cd fastapi_load_test

In this directory, add two main files:

- app.py: This will contain your FastAPI application code.

- locustfile.py: This will define the user behavior for the load test.

Conclusion

With all the components installed and your project directory set up, your environment is now prepared for building the FastAPI application and conducting load tests with Locust. The next steps involve crafting a simple FastAPI application and creating a locustfile to define how users will interact with your API. By establishing a properly configured environment, you set a strong foundation for robust load testing, ensuring everything operates seamlessly once the heavier loads are simulated.

Creating a Simple FastAPI Application

In this section, we will go through the steps to create a basic FastAPI application. This application will include a few routes that perform various functions, making it an ideal candidate for demonstrating different aspects of load testing with LoadForge.

Prerequisites

Before we begin, ensure that you have Python 3.7+ installed on your system. Additionally, you’ll need to install FastAPI and Uvicorn, which will serve as our ASGI server. You can install these packages using pip:

pip install fastapi uvicorn

Step 1: Create Your FastAPI Application

Start by creating a new Python file for your application. You can name this file app.py. In this file, you will define your FastAPI application and the routes it will handle.

- Import FastAPI: Begin by importing FastAPI from the fastapi module.

- Create an App Instance: Initialize your FastAPI application.

Initial Setup Code

from fastapi import FastAPI

app = FastAPI()

Step 2: Define Routes

A typical API might have various endpoints to handle different operations. For our simple application, we’ll define three routes:

- A root route that returns a welcome message.

- A route that performs a computation and returns the result.

- A route that accepts a parameter and returns a modified response.

Adding Routes to Your Application

@app.get("/")
async def read_root():
    return {"message": "Welcome to our FastAPI application!"}


@app.get("/compute")
async def compute_value():
    result = sum(range(10000))  # Simulate a simple computation
    return {"result": result}


@app.get("/items/{item_id}")
async def read_item(item_id: int):
    return {"item_id": item_id, "name": f"Item {item_id}"}

Step 3: Run Your Application

After defining your application and routes, it’s time to run the application using Uvicorn. Use the following command to start your server:

uvicorn app:app --reload

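This command tells Uvicorn to run the application instance named app in the file app.py (the argument has the form module:variable). The --reload option restarts the server automatically when the code changes, which is useful during development. Leave it off when you run the actual load test, because you want to measure a configuration closer to production (for example, uvicorn app:app --workers 4).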

Step 4: Verify Functionality

To ensure your application is working as expected, open a web browser or use a tool like curl to make requests to your API:

- Access the root route:

curl http://127.0.0.1:8000/

- Trigger the computation:

curl http://127.0.0.1:8000/compute

- Retrieve an item:

curl http://127.0.0.1:8000/items/5

This simple FastAPI application is now ready and can be used as a target for our LoadForge load tests. The varying routes we've set up will allow us to explore different load scenarios effectively, demonstrating how FastAPI handles different types of requests under pressure.

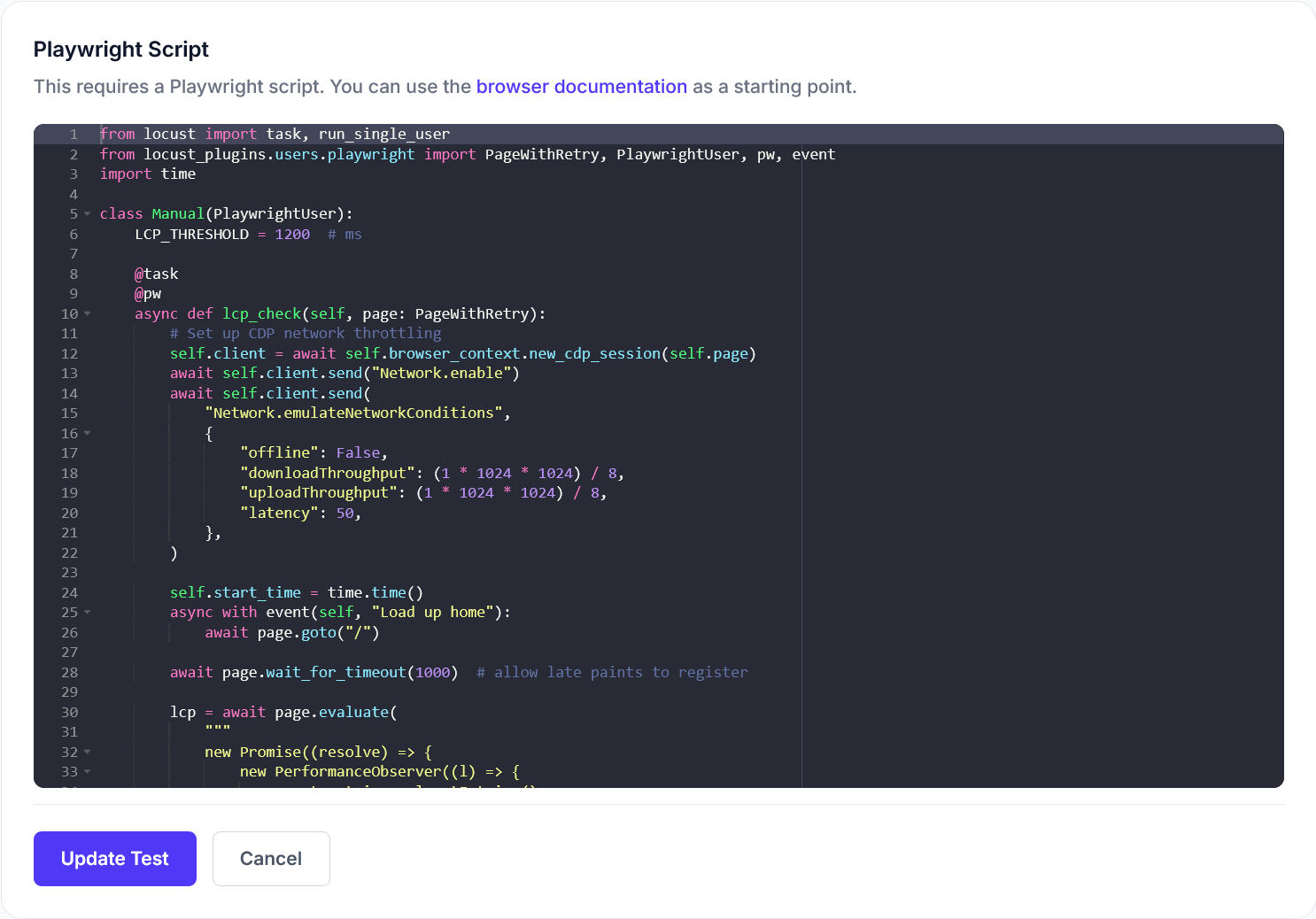

Writing the Locustfile

Creating an effective locustfile is essential for simulating real-world load scenarios on your FastAPI application. This section guides you through the essential steps to craft a locustfile specifically tailored for testing a FastAPI application. We'll explore how to define user behavior, make HTTP requests, and simulate various user tasks. Here's a breakdown of each component within the locustfile and how they interact with your FastAPI endpoints.

Setting Up Your Locustfile

First, ensure you have Locust installed in your environment. You can install it using pip:

pip install locust

Next, start by creating a new Python file named locustfile.py. This file will contain the code that defines the behavior of your simulated users.

Importing Necessary Modules

At the top of your locustfile.py, you’ll need to import the required classes and functions from the Locust library:

from locust import HttpUser, between, task

- HttpUser: This class represents a simulated user who will make HTTP requests to your API.

- between: This function is used to specify the wait time between each task that the user will execute.

- task: A decorator that marks the methods (tasks) the simulated users will perform.

Defining User Behavior

Each user will perform a sequence of tasks, which are methods decorated with @task. Here’s an example of a simple user behavior class that targets a FastAPI application:

class ApiUser(HttpUser):
    wait_time = between(1, 5)

    @task
    def get_items(self):
        self.client.get("/items")

    @task(3)
    def create_item(self):
        headers = {'Content-Type': 'application/json'}
        self.client.post("/items", json={"name": "NewItem", "description": "A new item"}, headers=headers)

- wait_time: After each task, every simulated user pauses for a random interval between 1 and 5 seconds, approximating a real user's think time.

- get_items: A task where the user sends a GET request to the /items endpoint.

- create_item: A task with a higher weight (set by @task(3)), so it is picked roughly three times as often as get_items. It simulates a user creating a new item via a POST request. Passing json= already serializes the body and sets the Content-Type: application/json header, so the explicit headers argument is redundant but harmless.

Simulating Complex User Behaviors

To make the simulation more realistic, consider adding more tasks that reflect typical user interactions with your API, such as updating or deleting items. Below is an extension of the previous example:

    # Additional tasks inside the ApiUser class
    @task
    def update_item(self):
        self.client.put("/items/1", json={"name": "UpdatedItem", "description": "Updated description"})

    @task
    def delete_item(self):
        self.client.delete("/items/1")

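Each method defines a different API interaction, enhancing the richness of the simulated load scenario. Note that these tasks assume a CRUD-style /items API. The sample application from the previous section only defines GET /, GET /compute and GET /items/{item_id}, so against that app these requests would return 404 Not Found (for /items) or 405 Method Not Allowed (for PUT and DELETE on /items/1). Either add matching endpoints to app.py or point the tasks at the routes your application actually exposes.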

Running Your Locust Test Locally

Before deploying your locustfile on LoadForge, you may want to test it locally to ensure it behaves as expected. Run Locust using the following command:

locust -f locustfile.py

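Visit http://localhost:8089 in your web browser to access the Locust web interface. ApiUser does not set a host attribute, so enter your application's address (for example, http://127.0.0.1:8000) in the Host field, or pass it on the command line with --host. Then start the test and view real-time statistics.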

Final Thoughts

The locustfile script is a powerful tool to simulate diverse user behaviors and interactions with your FastAPI application. By carefully designing each task, you can ensure that your load tests accurately mimic expected traffic patterns and help you uncover potential bottlenecks and performance issues in your application.

Setting Up Your LoadForge Test

After crafting a tailored locustfile for your FastAPI application, the next crucial step is to configure and deploy a load test using LoadForge. This phase involves defining the test's scale, duration, and the geographical distribution of the simulated users. Proper setup is vital to mimic real-world conditions accurately and to ensure the reliability of your test results.

Step 1: Defining the Test Parameters

First, log into your LoadForge account and navigate to the Create Test option. Here, you'll begin by uploading the locustfile you've prepared:

- Upload your locustfile: Click on 'Choose File' and select the locustfile from your local system.

- Name your test: Provide a descriptive name that helps you identify the test purpose easily.

Here is where you'll define key parameters of your load test:

- Number of Users (Clients): This represents the total number of concurrent users that will be simulating traffic to your FastAPI application. Start with a baseline that you expect your application might handle and plan to scale up in subsequent tests.

  Total Number of Users: 500

- Spawn Rate: This is the speed at which new users are added to the test until the total number of specified users is reached.

  Spawn Rate: 50 users/second

- Test Duration: Specify how long the test should run. It is crucial to allow sufficient time for the API to exhibit its behavior under load.

  Test Duration: 10 minutes
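These parameters interact, and a quick calculation helps check that they make sense together. With the example values, 500 users spawned at 50 users/second take 10 seconds to ramp up, leaving the rest of the 10-minute run at full load. The sketch below also estimates request throughput with a simple closed-loop model, where each user sends one request, waits for the response, then pauses. The mean wait of 3 seconds assumes the between(1, 5) wait time from the locustfile and one request per task. ASSUMED_RESPONSE_S is a placeholder to replace with a measured value.

```python
def ramp_up_seconds(total_users: int, spawn_rate: float) -> float:
    """Time until every simulated user has been started."""
    return total_users / spawn_rate


def expected_rps(users: int, mean_wait_s: float, mean_response_s: float) -> float:
    """Closed-loop estimate: each user completes one request per (wait + response) cycle."""
    return users / (mean_wait_s + mean_response_s)


TOTAL_USERS = 500
SPAWN_RATE = 50             # users per second
DURATION_S = 10 * 60        # 10-minute test
MEAN_WAIT_S = (1 + 5) / 2   # mean of between(1, 5)
ASSUMED_RESPONSE_S = 0.05   # placeholder; use your measured average

ramp = ramp_up_seconds(TOTAL_USERS, SPAWN_RATE)
print(f"ramp-up: {ramp:.0f} s, full load for {DURATION_S - ramp:.0f} s")
rps = expected_rps(TOTAL_USERS, MEAN_WAIT_S, ASSUMED_RESPONSE_S)
print(f"expected throughput: ~{rps:.0f} requests/s")
```

As response times grow, this estimate falls. If measured throughput is well below the estimate, the missing capacity is going into latency, which is exactly what the test is meant to expose.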

Step 2: Geographic Distribution

To ensure your test results reflect real-world scenarios, LoadForge allows you to simulate traffic from different geographical locations. Select the locations that best represent your user base. This feature is particularly useful for APIs expected to serve users globally or from specific regions.

Step 3: Advanced Configuration (Optional)

LoadForge provides advanced options to tailor your test even further:

- HTTP Headers: Configure custom headers to simulate more authentic requests.

  Authorization: Token abc123
  Content-Type: application/json

- Environment Variables: Set environment-specific variables if your test needs to switch between different configurations or stages.

Step 4: Launching Your Test

Once all parameters are set, review your settings:

- Go to the Review section to ensure all configurations are correct.

- Click Start Test to initiate the load testing.

Upon starting the test, LoadForge will begin to execute your locustfile against the designated FastAPI application. The platform's interface will display real-time graphs and metrics, allowing you to monitor the test as it happens.

Conclusion

Setting up your load test in LoadForge using a well-prepared locustfile is straightforward yet powerful. By carefully defining your test parameters and taking advantage of LoadForge's scalable infrastructure, you ensure that the deployed tests provide valuable insights into how well your FastAPI application performs under varied realistic conditions. Be sure to adjust your settings based on initial outcomes to progressively refine your API's performance and resilience.

Analyzing Test Results

Once you have executed your LoadForge load tests against your FastAPI application, the next critical step is to analyze the results. Proper interpretation of these results will provide insights into how your API handles increased traffic and help identify potential bottlenecks or performance issues. This section will guide you through understanding key metrics such as response times, request failure rates, and system resource usage.

Understanding the Key Metrics

Response Times

Response times are a primary indicator of your API's performance. They tell you how long it takes for your FastAPI application to respond to a request under various load conditions. In LoadForge reports, you will find metrics like:

- Average Response Time: The average time taken for requests to be served during the entire test.

- Median Response Time: Middle value of response times, providing a better sense of typical user experience as it's less affected by outliers.

- 95th Percentile: 95% of requests completed at or below this time. It therefore captures the tail latency experienced by the slowest 5% of requests, which the average and median can hide.

These response times should be analyzed to see if they meet your application's performance targets. Significant increases in response time as load increases can indicate that your application may need optimization or scaling.
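The three figures can tell different stories. The small sketch below computes them from a list of response times in milliseconds using the nearest-rank definition of a percentile. Tools differ slightly in how they interpolate percentiles, so values reported by LoadForge may not match this exactly. The sample data are made up for illustration.

```python
import math
import statistics


def percentile(samples, pct: int) -> float:
    """Nearest-rank percentile: smallest value with at least pct% of samples at or below it."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def summarize(samples) -> dict:
    return {
        "average": statistics.mean(samples),
        "median": statistics.median(samples),
        "p95": percentile(samples, 95),
        "p99": percentile(samples, 99),
    }


# Illustrative data: 19 fast requests and one very slow outlier (ms).
response_times_ms = [100] * 19 + [2000]
print(summarize(response_times_ms))
```

Here a single 2-second request pulls the average up to 195 ms while the median stays at 100 ms. With only 20 samples, p95 is still 100 ms, and only p99 reveals the outlier.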

Request Failure Rates

The request failure rate is crucial in understanding the reliability of your API under stress. A high rate of failures can indicate issues such as:

- Resource limits being reached (database connections, bandwidth limits, etc.)

- Bugs in the application that only surface under high loads

- Infrastructure or configuration issues

LoadForge provides a clear breakdown of HTTP status codes returned during the test. Analyzing these codes can help pinpoint the areas needing attention.
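If you export raw results or log status codes yourself, the failure rate and status-code breakdown are easy to compute. This sketch takes a list of HTTP status codes. It treats any status of 400 or above as a failure, along with 0, which it uses to stand for a request that never received a response. Adjust that rule if your API returns some 4xx codes deliberately.

```python
from collections import Counter


def is_failure(status: int) -> bool:
    """Assumption: 4xx/5xx responses and 0 (no response received) count as failures."""
    return status == 0 or status >= 400


def failure_report(statuses) -> dict:
    counts = Counter(statuses)
    failures = sum(n for code, n in counts.items() if is_failure(code))
    total = sum(counts.values())
    return {
        "total": total,
        "failure_rate": failures / total if total else 0.0,
        "by_status": dict(sorted(counts.items())),
    }


# Illustrative sample: mostly 200s, a few server errors and dropped connections.
sample = [200] * 95 + [500] * 3 + [0] * 2
report = failure_report(sample)
print(f"{report['failure_rate']:.1%} failed; breakdown: {report['by_status']}")
```

A cluster of 5xx codes usually points at the application or its dependencies. Dropped connections and timeouts more often point at connection limits or the network.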

System Resource Usage

Understanding how your system resources are utilized during the test can reveal capacity limits and misconfigurations. Key metrics to monitor include:

- CPU utilization

- Memory usage

- Disk I/O

- Network throughput

If any of these metrics are maxed out during the test, it could be a signal that your infrastructure needs to be scaled up or optimized for better performance.

Analyzing Load Test Results

Here is a step-by-step approach to analyzing your LoadForge test results:

1. Start with Summary Metrics: Review the overall statistics provided in the summary report. Pay close attention to the average, median, and 95th percentile response times.

2. Drill into Errors: Look into the error rates and types of errors encountered. Check the logs for exceptions or errors that occurred during the test.

3. Resource Review: Examine the system resource graphs provided by LoadForge. Identify any resources that are consistently high and could be contributing to bottlenecks.

4. Correlate Metrics: Try to correlate high response times and error rates with spikes in resource usage. This can help identify what causes performance degradation.

5. Compare with Baselines: If you have baseline tests (previous tests under a lower load), compare the results to understand how additional load impacts performance.
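The correlation and baseline comparisons described above can be made concrete with a couple of numbers. This sketch assumes you have per-interval samples of p95 latency and server CPU from the same run; the values below are invented. The pearson helper computes the Pearson correlation coefficient between them (on Python 3.10+ statistics.correlation does the same). pct_change shows how much a metric moved relative to a baseline run. A high correlation only suggests a relationship; it does not prove that CPU is the cause.

```python
import math
import statistics


def pearson(xs, ys) -> float:
    """Pearson correlation coefficient between two equally long sequences."""
    mean_x, mean_y = statistics.fmean(xs), statistics.fmean(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    return cov / (spread_x * spread_y)


def pct_change(baseline: float, current: float) -> float:
    """Relative change from a baseline run, in percent."""
    return (current - baseline) / baseline * 100


# Invented per-minute samples from a single run.
cpu_percent = [35, 48, 62, 78, 91, 97]
p95_ms = [120, 135, 170, 240, 410, 690]
print(f"CPU vs p95 correlation: {pearson(cpu_percent, p95_ms):.2f}")

# Invented p95 from an earlier, lower-load baseline run.
baseline_p95_ms = 130
print(f"p95 change vs baseline: {pct_change(baseline_p95_ms, p95_ms[-1]):+.0f}%")
```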

Final Thoughts on Data Interpretation

Remember, the goal of load testing is not merely to determine if your system can handle the expected load but also to understand how it behaves under that load. Peaks in response times or failure rates during a test can guide further optimization and scaling efforts. Through thorough analysis of LoadForge test results, potential issues in your FastAPI application can be addressed before they impact your users.

Best Practices and Advanced Tips

When undertaking load testing for your FastAPI applications, following a structured approach can make a significant difference in the output and usefulness of your tests. This section highlights some best practices to adopt and offers advanced tips to leverage the capabilities of LoadForge more effectively.

Gradual Load Increases

It's crucial to avoid overwhelming your application with an unrealistic burst of traffic from the outset. Instead:

- Start with a low number of users and gradually increase the load.

- Use a step load pattern where the number of users or requests increases incrementally over specific intervals.

This approach helps identify at what load level the application begins to degrade or fail, providing actionable insights into its performance thresholds.

Monitoring and Logging

Effective monitoring and logging are essential for diagnosing issues and understanding the behavior of your application under stress:

- Implement comprehensive logging within your FastAPI application. Ensure that you log key actions, response times, and errors.

- Monitor application performance metrics such as CPU usage, memory consumption, and request latency in real-time.

- Use LoadForge's integration capabilities to connect with monitoring tools like Prometheus or Grafana for a deeper analysis.

Example configuration for logging in FastAPI could look like this:

import logging

from fastapi import FastAPI

app = FastAPI()
logging.basicConfig(level=logging.INFO)


@app.get("/")
async def read_root():
    logging.info("Root endpoint accessed")
    return {"Hello": "World"}

Performance Optimization Tips

Based on your test results, consider the following strategies for optimizing your FastAPI application:

- Database optimization: Add indexes, optimize slow queries, and consider read replicas if the database is a bottleneck.

- Asynchronous programming: FastAPI supports asynchronous request handlers. These can improve throughput under load, but only when the handlers await I/O rather than making blocking calls.

- Memory management: Profile your application to identify and fix any memory leaks or inefficient memory usage.

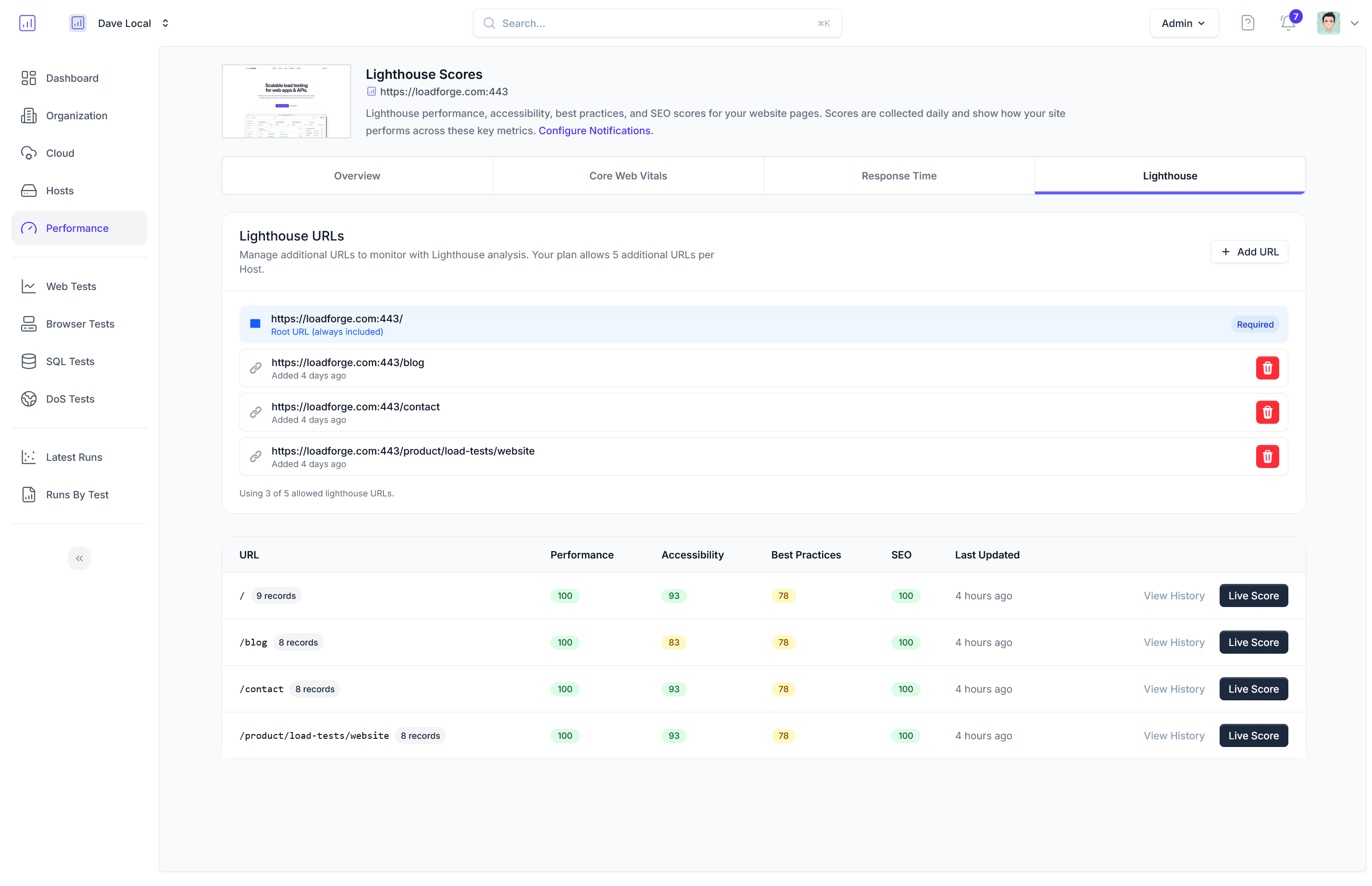

Advanced Features of LoadForge

Leverage LoadForge’s advanced features to gain deeper insights and effectively simulate more complex scenarios:

- Custom Load Scenarios: Use LoadForge’s capabilities to create detailed user scenarios that mimic real-world usage patterns. This could include varying think times, different user paths through the application, and randomizing input data.

- Distributed Testing: Run tests from multiple geographic locations to understand how location impacts performance and to simulate more realistic network conditions.

- Result Analysis: Utilize LoadForge's comprehensive analysis tools to dive deep into test results. Look for patterns or recurrent issues and correlate them with code or infrastructure changes.

By integrating these best practices and utilizing the advanced features of LoadForge, you can ensure a more effective load testing process that positions your FastAPI application to handle real-world use with reliability and stability.