Introduction to Load Testing Node.js Express Apps

Node.js, powered by the V8 JavaScript engine, has become a popular choice for developing scalable and fast network applications. Express, a minimal and flexible Node.js web application framework, provides a robust set of features to build single-page, multi-page, and hybrid web applications. However, like any web application, Node.js Express applications must undergo rigorous testing to ensure they can handle high traffic and deliver optimal user experiences.

Why Load Testing is Crucial

Load testing is a type of performance testing used to determine a system’s behavior under both normal and peak load conditions. Node.js Express applications often handle a large number of simultaneous connections, so load testing matters for several reasons:

- Scalability Testing: Determine if your application can handle the expected number of user requests without adverse effects on performance.

- Stability Testing: Identify application stability under varying loads to ensure reliable performance over extended periods.

- Bottleneck Identification: Pinpoint the specific parts of your application that limit the ability to scale and may cause performance degradation.

Common Performance Bottlenecks in Node.js Express Apps

Performance bottlenecks can drastically affect the usability and satisfaction of your application. Common issues include:

- Memory Leaks: Inefficient code can lead to increased memory usage over time, which can slow down the application or even crash the server due to out-of-memory errors.

- I/O Bottlenecks: Backends perform many I/O operations, so Node.js applications are often I/O-bound. Node.js I/O is asynchronous by default, but synchronous calls (such as fs.readFileSync) or CPU-heavy work inside a request handler block the event loop and delay every other response.

- Database Interaction: Inefficient queries or high database loads can introduce significant latency, impacting user experience and potentially causing timeouts.

- Concurrency Management: Node.js runs your JavaScript on a single thread and relies on non-blocking I/O for concurrency. Mishandled asynchronous operations, such as unawaited promises or too many operations started in parallel, often lead to poor performance under load.

How Load Testing Helps

By simulating user interactions and measuring response times and resource utilization, load testing provides a clear picture of how your application behaves when subjected to typical and peak conditions. Here's what it helps achieve:

- Performance Insights: Discover the maximum capacity your application can handle, and how it behaves under stress.

- Optimization Opportunities: Identify inefficient parts of your application to optimize, whether they're in your code, your database layer, or your server configuration.

- Confidence in Scalability: Provide assurances to stakeholders that the application can handle the expected load with room for growth.

Through effective load testing, you can ensure that your Node.js Express application is prepared to deliver the performance users expect, regardless of traffic spikes or heavy usage. This not only boosts customer satisfaction but also guarantees the stability and scalability crucial for successful modern web applications.

Understanding Locust for Load Testing

Locust is an open-source load testing tool for web applications. It is written in Python, and test scenarios are ordinary Python code, which makes them flexible and easy to program. Locust focuses on modeling realistic user behavior to simulate traffic and analyze how your application performs under stress.

How Locust Integrates with LoadForge

LoadForge leverages the power of Locust to facilitate large-scale load tests across various organizational infrastructures, including Node.js Express applications. Here’s how Locust seamlessly integrates with LoadForge:

- Scalability: Locust can simulate up to millions of concurrent users when a test is distributed across enough machines, making it effective for high-load scenarios. LoadForge uses this capability to distribute a load test across multiple cloud servers, simulating internet-scale traffic.

- User Simulation: Locust allows you to define user behavior in your tests. These user scenarios are written in Python and can be configured to mimic typical user interactions with your application.

- Resource Efficiency: Locust can run tests in headless mode (without its web UI), and its event-based design keeps the overhead per simulated user low. This efficiency is critical when deploying multiple instances at scale, as facilitated by LoadForge.

Basics of Writing Locustfiles

A locustfile.py is a Python script where you define the behavior of your simulated users and the tasks they perform. Here’s a simple example of what a locustfile might look like for a Node.js Express application:

    from locust import HttpUser, task, between

    class WebsiteUser(HttpUser):
        # Each simulated user pauses 1 to 5 seconds between tasks
        wait_time = between(1, 5)

        @task
        def index(self):
            self.client.get("/")

        @task(2)
        def profile(self):
            self.client.get("/profile")

In this example:

- HttpUser: Represents a simulated user. This class defines how each user interacts with the application.

- task: A method decorator that marks a method as a task simulating a specific type of user interaction, such as fetching a web page.

- wait_time: Defines the pause between tasks (here, a random 1 to 5 seconds), which makes the simulated behavior more realistic.

Key Features to Know When Writing locustfiles for LoadForge

- Task Weighting: As seen in the @task(2) decorator, tasks can be assigned weights to simulate more frequent actions. A task with weight 2 is picked twice as often as a task with the default weight of 1. For instance, visiting the profile page might be more common than visiting the home page.

- HTTP client: self.client is used to make HTTP requests. Locust has built-in support for sessions, cookies, headers, and other necessary HTTP functionality.

- Performance Metrics: Every time a task runs, Locust records the response time, number of requests, the number of failures, etc., which are essential metrics for load testing.
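To make the effect of task weights concrete, the following sketch converts weights into the expected share of task executions. It is plain arithmetic and does not use Locust itself; `task_shares` is a hypothetical helper, and the weights are the ones from the `@task` and `@task(2)` decorators in the example locustfile.

```python
from fractions import Fraction


def task_shares(weights):
    """Return each task's expected share of task executions for the given weights."""
    total = sum(weights.values())
    return {name: Fraction(weight, total) for name, weight in weights.items()}


# Weights from the example locustfile: @task means 1, @task(2) means 2
shares = task_shares({"index": 1, "profile": 2})
for name, share in shares.items():
    print(f"{name}: {share} of task executions (~{float(share):.0%})")
```

With these weights, roughly one third of the simulated requests go to the home page and two thirds to the profile page.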

By integrating Locust with LoadForge, you can leverage advanced distributed load testing capabilities while maintaining the granularity and precision that Locust affords through its scripting capabilities. This combination is particularly potent for dynamic sites built with frameworks like Node.js Express, where user behavior can be diverse and unpredictable.

Setting Up Your Testing Environment

Properly setting up your testing environment is a crucial step in ensuring that your Node.js Express application can be effectively tested using LoadForge. This involves configuring both your application and the testing platform to capture and process data efficiently. Below, we'll walk you through essential preparations that include monitoring and logging for valuable real-time performance insights.

Configure Node.js Express for Monitoring and Logging

- Enable Detailed Logging: Ensure that your application's logging level is set to capture detailed informational logs. You can use middleware like morgan for HTTP request logging, which can help you understand traffic patterns and potential bottlenecks during the load test.

    const express = require('express');
    const morgan = require('morgan');
    const app = express();
    app.use(morgan('combined')); // Apache "combined" log format

- Integrate Performance Monitoring Tools: Tools like New Relic or Datadog can be integrated into your Express application to monitor performance metrics. These tools provide real-time insights into your app's operations, which can be invaluable during load testing.

    // Must be the first require in your app's main module
    require('newrelic');

  Be sure to configure these monitoring tools according to their setup documentation so that they start tracking performance metrics.

Prepare Your Database

- Optimize Database Connections: Verify that your database connection settings are optimized for high loads. Utilize connection pooling and appropriately configure timeouts to ensure stability during peak testing scenarios.

- Monitor Slow Queries: Enable logging of slow queries in your database. This will help you identify database operations that could become bottlenecks under load.

Set Up Load Testing Scripts in LoadForge

- Create or Import Locustfile: You should have a locustfile.py that defines the behavior of your simulated users. Ensure that this script accurately represents typical user behavior on your Node.js Express app. A minimal starting point:

    from locust import HttpUser, task, between

    class QuickstartUser(HttpUser):
        # Each simulated user pauses 1 to 5 seconds between tasks
        wait_time = between(1, 5)

        @task
        def index_page(self):
            self.client.get("/")

- Configure Load Testing Parameters: On LoadForge, configure your testing parameters including the number of users, spawn rate, and test duration. These should mimic your expected real-world scenario as closely as possible.

Set Up Logging and Result Tracking on LoadForge

- Monitoring on LoadForge: LoadForge provides powerful tools for monitoring your load tests in real time. Configure dashboard widgets to display critical metrics such as response times, request rate, and failure rates.

- Collecting and Storing Logs: Set up mechanisms to collect and store logs and test results for post-test analysis. Ensure these are centrally located and easy to access for your team.

Test Connection and Initial Setup

- Run a Smaller Scale Test: Before going full-scale, conduct a smaller test to ensure everything operates as intended. This test run helps validate the setup of your Node.js application, LoadForge settings, and the overall testing environment.

- Check Real-Time Monitoring Tools: During the initial test, monitor the output of your integrated performance tools. Watch for unexpected behavior or poor performance and make adjustments as needed.

Conclusion

Setting up your testing environment correctly is fundamental to achieving meaningful and reliable results from your load tests. A thorough setup, from detailed logging in your Node.js app to a correctly configured LoadForge test, gives you a strong foundation for load testing your Express application and helps you identify and mitigate issues early.

Writing Your First Locustfile

In this section, we'll walk you through creating your first Locustfile, the core component for load testing your Node.js Express applications with LoadForge. The instructions and example snippets below show how to define user behaviors, outline tasks, and simulate traffic patterns relevant to your Node.js environment.

Understanding the Structure of a Locustfile

A Locustfile is a Python script used by Locust to define user behaviors and simulate traffic against your web application. At its core, a Locustfile should import the necessary modules from Locust, define user behavior classes, and may also include tasks that these users will execute.

Basic Components of a Locustfile

Here are the essential components you need to familiarize yourself with before crafting your Locustfile:

- Import Statements: Loading necessary classes and functions from the Locust library.

- TaskSet Classes (optional): A collection of tasks representing one or more user behaviors. Tasks can also be defined directly on the user class, as the examples in this guide do.

- User Classes: Definitions of different user types that perform tasks.

- Tasks: Functions representing actions each user will execute.

Step-by-Step Guide to Writing a Locustfile for Node.js Express Apps

- Setting Up Your Environment: Ensure you have Python and Locust installed. You can install Locust using pip:

    pip install locust

- Create a New Locustfile: Start by creating a new Python file named locustfile.py.

- Import Necessary Modules: At the top of your file, write the necessary import statements:

    from locust import HttpUser, task, between

- Define User Behavior: Create a user class inheriting from HttpUser. This class will define how a user interacts with your application:

    class WebsiteUser(HttpUser):
        wait_time = between(1, 5)

        @task
        def view_homepage(self):
            self.client.get("/")

        @task(3)
        def view_blog(self):
            self.client.get("/blog")

In the above example:

- wait_time sets a random wait of 1 to 5 seconds between tasks to mimic real user behavior.

- The @task decorator declares methods as tasks that this user will execute. The number in the brackets (e.g., @task(3)) is the task's weight, which controls how often it runs relative to the other tasks.

- Simulate More Complex Workflows: You can model a multi-step workflow inside a single @task method. For instance, the following task creates, reads, updates, and deletes a record, and reports a failure if the create request does not succeed. The endpoints are placeholders; adjust the expected status code if your create endpoint returns 201 Created.

    # Add this method to your HttpUser subclass
    @task
    def create_and_manipulate_record(self):
        with self.client.post("/create", json={"name": "example"}, catch_response=True) as response:
            if response.status_code == 200:
                self.client.get("/read")
                self.client.put("/update", json={"name": "modified example"})
                self.client.delete("/delete")
            else:
                response.failure("Failed to create record")

- Configure and Run Your Test: With your locustfile.py ready, run Locust locally to see if your configuration works as expected:

    locust -f locustfile.py

  Finally, navigate to http://localhost:8089, where you can start your load test by specifying the number of users and spawn rate.

Conclusion

Creating a basic locustfile for Node.js Express applications involves defining user behaviors, tasks they perform, and simulating these tasks under load. This introduction should help you get started on customizing further to mirror the specific requirements of your applications. Remember, the key to effective load testing is not just creating realistic user simulations but also continually refining these scripts as your app evolves.

Executing Load Tests on LoadForge

Deploying your Locustfile and initiating a load test on LoadForge is a straightforward process designed to simulate user behavior under various scenarios and measure how your Node.js Express application responds under pressure. This section will guide you through uploading your Locust script, configuring your test parameters, and starting the test run.

Step 1: Upload Your Locustfile

Before executing any tests, you need to upload the Locustfile you've created. This file contains the definitions of the tasks and user behaviors that you intend to simulate:

- Sign in to your LoadForge account.

- Navigate to the Scripts section in the dashboard.

- Click on New Script and upload your Locustfile or directly copy and paste the code into the provided text editor.

Step 2: Configure Test Settings

Configuring the test settings properly is crucial for obtaining meaningful and realistic results. Here’s how to configure your test:

- Number of Users: This setting defines the total number of virtual users that will be simulated. Adjust this number based on the load you want to test against your application.

- Spawn Rate: This determines how fast the users are spawned. A slower spawn rate might be useful for gradually ramping up traffic.

- Test Duration: Specify how long the test should run. This could range from a few minutes to several hours depending on the test’s goal.

Here is an example of setting these parameters in LoadForge:

Users: 5000

Spawn Rate: 150 users per second

Test Duration: 1 hour
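As a quick sanity check on these example parameters, the sketch below estimates how long the ramp-up takes and how much of the run is left at full load. It assumes that the test duration includes the ramp-up period and that users are spawned at a constant rate; `ramp_up_seconds` is a hypothetical helper, not part of LoadForge or Locust.

```python
def ramp_up_seconds(users, spawn_rate):
    """Seconds needed to start all users at a constant spawn rate (users per second)."""
    if spawn_rate <= 0:
        raise ValueError("spawn_rate must be positive")
    return users / spawn_rate


# Values from the example configuration above
users = 5000
spawn_rate = 150          # users per second
duration_seconds = 3600   # 1 hour

ramp = ramp_up_seconds(users, spawn_rate)
steady = duration_seconds - ramp  # time spent at the full user count

print(f"ramp-up: {ramp:.1f} s, time at full load: {steady / 60:.1f} min")
```

A ramp-up this short reaches full load almost immediately; a lower spawn rate spreads the increase over a longer period if you want to observe how the application degrades gradually.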

Step 3: Select Region

LoadForge allows you to select from multiple regions across the globe to initiate your test. This feature is useful for testing how your app performs from different geographical locations. Select the region closest to your user base or test multiple regions to gauge global performance.

Step 4: Execute the Test

After configuring all settings:

- Review your configuration to ensure everything is set according to your testing goals.

- Click on Start Test.

- Monitor the test’s progress through the real-time updated charts and logs provided by LoadForge.

Step 5: Scaling Options

For more intensive testing scenarios, LoadForge provides options to scale your tests. This is essential for stress testing and understanding how your system behaves under extreme conditions:

- Auto-scaling: Automatically increases the number of users based on pre-defined thresholds.

- Manual Scaling: Adjust the number of users during the test based on real-time performance data.

Consult the LoadForge documentation for detailed instructions on setting up scaling policies.

Conclusion

Executing a load test with LoadForge is both efficient and effective. By carefully setting up your test parameters and leveraging the platform's scaling abilities, you can simulate a wide range of scenarios. This process will help you identify potential bottlenecks and ensure that your Node.js Express application can handle high traffic loads, thereby enhancing overall performance and reliability.

Analyzing Test Results

After conducting a load test on your Node.js Express application using LoadForge, the next crucial step is to analyze the test results. This analysis will help you understand your application's performance characteristics under various load conditions and identify areas where improvements are needed.

Key Metrics to Focus on

When reviewing the results from your LoadForge test, there are several critical metrics that you should pay attention to:

- Response Time: The time it takes for a request to be processed by your application and for the response to be returned to the client. Monitoring average, median, and 95th percentile response times gives a fuller view of your app's responsiveness than the average alone.

- Throughput: The number of requests your application handles per second during the test. It helps in understanding the load capacity of your server.

- Error Rate: The number and type of failed requests during the test. An increase in errors under heavy loads might indicate bottlenecks or stability issues in your application.

- Concurrent Users: The number of simulated users active at the same time. Compare it with response times to find the level at which performance starts to degrade noticeably.

- Resource Utilization: Metrics such as CPU usage, memory usage, and disk I/O are essential to identify which resources are the limiting factors.

These metrics are typically presented in a series of graphs and tables within the LoadForge interface. Here is an example of how you might find them represented:

-----------------------------------------
| Metric             | Value            |
-----------------------------------------
| Max Response       | 1150 ms          |
| Average Response   | 200 ms           |
| Error Rate         | 0.5%             |
| Throughput         | 300 req/s        |
| Concurrent Users   | 250              |
-----------------------------------------
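If you export raw response times for your own analysis, the average, median, and 95th percentile mentioned above can be computed with Python's standard library. This is a minimal sketch: `summarize` is a hypothetical helper, the sample values are made up for illustration, and the "inclusive" interpolation method is one of several common percentile definitions, so results may differ slightly from what a dashboard reports.

```python
import statistics


def summarize(response_times_ms):
    """Summarize a list of response times given in milliseconds."""
    ordered = sorted(response_times_ms)
    # quantiles(n=100) returns 99 cut points; index 94 is the 95th percentile
    p95 = statistics.quantiles(ordered, n=100, method="inclusive")[94]
    return {
        "avg": statistics.fmean(ordered),
        "median": statistics.median(ordered),
        "p95": p95,
        "max": ordered[-1],
    }


# Hypothetical sample: mostly fast responses plus one slow outlier
sample = [120, 130, 140, 150, 160, 170, 180, 190, 210, 1150]
summary = summarize(sample)
for name, value in summary.items():
    print(f"{name}: {value:.0f} ms")
```

In this sample the single slow request pulls the average well above the median, which is why percentiles are worth tracking alongside the average.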

Interpreting the Data

To effectively interpret this data, compare it against the performance goals and benchmarks established for your application. For instance, if your target is an average response time of under 300ms and your load test shows an average of 200ms, your application is performing well. However, if the max response time spikes to more than a second under high loads, this might indicate some performance bottlenecks during peak times.
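One way to cross-check the figures against each other is Little's law: in a closed-loop test where each user sends a request, waits for the response, and then pauses, throughput is roughly users / (response time + wait time). The sketch below applies it to the illustrative values from the table. The function names are hypothetical, and the formula is an approximation that assumes steady state and one request per task.

```python
def expected_throughput(users, avg_response_s, avg_wait_s):
    """Approximate requests per second for a closed-loop test at steady state."""
    return users / (avg_response_s + avg_wait_s)


def implied_wait(users, throughput_rps, avg_response_s):
    """Average pause per user implied by the observed throughput."""
    return users / throughput_rps - avg_response_s


# 250 users, 200 ms average response, wait_time = between(1, 5) averages about 3 s
print(f"expected: {expected_throughput(250, 0.2, 3.0):.1f} req/s")

# The example table reports 300 req/s for 250 users at 200 ms
print(f"implied wait: {implied_wait(250, 300, 0.2):.2f} s per request")
```

If the observed throughput is far from the estimate, check whether tasks send several requests each, whether the wait time in the script differs from what you assumed, or whether errors are returning unusually fast.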

Troubleshooting Common Issues

Identifying problematic areas through load testing is only the first step; troubleshooting them effectively is key to optimization. Here are common issues typical in Node.js Express applications and ways to approach them:

- High Response Time: This could be due to inefficient database queries, unoptimized code, or a lack of adequate resources (CPU, memory). Profiling the application to pinpoint slow functions and queries is an effective strategy to resolve these issues.

- Increasing Error Rates Under Load: This symptom often points to stability issues. Check for unhandled exceptions and ensure that your middleware and database drivers are scalable under concurrent access.

- Resource Saturation: If the CPU or memory usage is consistently high, consider scaling your resources vertically (more powerful server) or horizontally (more servers). Also, analyze your application for potential memory leaks.

Each of these areas can generally be diagnosed and resolved by detailed examination of your codebase and infrastructure, often with the help of additional profiling and monitoring tools.
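For the memory leak check mentioned above, a simple first signal is whether memory use keeps climbing while the load stays constant. The sketch below fits a least-squares line to memory samples and reports the growth rate. `growth_per_minute` is a hypothetical helper and the samples are invented; in practice you would feed it readings from your monitoring tool, taken after the ramp-up has finished.

```python
def growth_per_minute(samples):
    """Least-squares slope of (minute, megabytes) samples, in MB per minute."""
    n = len(samples)
    if n < 2:
        raise ValueError("need at least two samples")
    mean_t = sum(t for t, _ in samples) / n
    mean_m = sum(m for _, m in samples) / n
    numerator = sum((t - mean_t) * (m - mean_m) for t, m in samples)
    denominator = sum((t - mean_t) ** 2 for t, _ in samples)
    if denominator == 0:
        raise ValueError("samples must span more than one point in time")
    return numerator / denominator


# Hypothetical readings: (minutes since steady load began, resident memory in MB)
readings = [(0, 200), (10, 230), (20, 260), (30, 290)]
slope = growth_per_minute(readings)
print(f"memory growth: {slope:.1f} MB/min")
```

A slope near zero under steady load suggests stable memory use; a persistent positive slope is a reason to take heap snapshots and look for retained objects.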

Conclusion

Post-test analysis is critical as it transforms raw data into actionable insights. By continuing to monitor these metrics over time and following each load test, you can iteratively improve the performance and stability of your Node.js Express applications, ensuring that they can handle real-world usage scenarios efficiently.

Optimizing Your Node.js Express Application

With the load testing results from LoadForge at your fingertips, the next crucial step is to optimize your Node.js Express application based on these findings. Here, we'll explore strategies to enhance code efficiency, effective scaling of your application, and considerations for potential architectural changes. These enhancements are vital to ensure that your application can handle increasing loads and deliver a seamless user experience.

1. Enhancing Code Efficiency

Improving code efficiency directly influences your application's ability to handle more requests with fewer resources. Here are some actionable tips:

- Refactor Inefficient Code: Look for any bulky or repeatedly executed code and consider refactoring it. Aim to reduce complexity and avoid unnecessary computations by caching results whenever possible.

- Reduce Middleware Overhead: Express middleware can add unnecessary processing. Review your middleware usage and eliminate or optimize any that excessively impact performance.

    app.use((req, res, next) => {
      // Perform necessary actions or remove if not essential
      next();
    });

- Streamline Database Interactions: Optimize the way your application interacts with databases. This could include adding indexes on frequently queried columns for quicker searches and using connection pooling to manage database connections efficiently.

- Asynchronous Programming: Leverage Node.js's asynchronous capabilities to handle I/O operations. This prevents blocking of the main thread and allows the server to handle more requests. Use async/await or Promises where applicable.

    // `database` stands in for your database client
    async function fetchData() {
      const data = await database.query("SELECT * FROM users");
      return data;
    }

2. Scaling Your Solution

Scaling your application involves preparing it to handle loads beyond your current needs. Here are approaches to consider:

- Horizontal Scaling: Increase your application's capacity by adding more servers. This method is highly effective for handling more traffic and is typically done behind a load balancer.

- Vertical Scaling: Enhance the capabilities of your existing server with better hardware specifications. A single Node.js process runs JavaScript on one CPU core, so extra cores only help if you run several processes (for example with the built-in cluster module). Vertical scaling is often less cost-effective than horizontal scaling as load grows, but it might serve short-term needs.

- Implement Caching Solutions: Use caching mechanisms like Redis to store frequently accessed data. This can dramatically reduce response times and decrease the load on your servers.
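The caching approach above usually follows the cache-aside pattern: check the cache first, and only call the slow backend on a miss. The sketch below illustrates the pattern in Python with an in-process dictionary instead of Redis, purely to show the control flow. `TTLCache`, `get_or_load`, and `load_user_list` are hypothetical names, and a real deployment would also need to consider invalidation and memory limits.

```python
import time


class TTLCache:
    """Tiny in-memory cache-aside helper; entries expire after ttl_seconds."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self.ttl_seconds = ttl_seconds
        self.clock = clock
        self._entries = {}  # key -> (expires_at, value)

    def get_or_load(self, key, loader):
        now = self.clock()
        entry = self._entries.get(key)
        if entry is not None and entry[0] > now:
            return entry[1]  # cache hit: skip the expensive call
        value = loader()     # cache miss or expired entry: load once and store
        self._entries[key] = (now + self.ttl_seconds, value)
        return value


calls = []


def load_user_list():
    # Hypothetical stand-in for a slow database query
    calls.append(1)
    return ["alice", "bob"]


cache = TTLCache(ttl_seconds=30)
first = cache.get_or_load("users", load_user_list)
second = cache.get_or_load("users", load_user_list)
print(f"backend calls: {len(calls)}")
```

The second lookup is served from the cache, so the backend is queried only once within the 30-second window.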

3. Architectural Changes

Sometimes, load tests might reveal that minor optimizations aren’t enough, and more significant architectural changes are needed:

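- Microservices Architecture: Consider breaking your monolithic application into microservices. This approach allows specific components to scale independently and improves fault isolation.

- Offloading Tasks: Use message queues like RabbitMQ or Kafka to hand long-running or resource-intensive tasks to separate worker processes. This keeps your main application thread free and responsive. The snippet below only illustrates the idea of deferring work, using the in-process queue npm package; CPU-heavy jobs in such a queue still run on the same event loop.

    // Note: the `queue` npm package runs jobs in-process; it is not a message broker.
    // This sketch assumes its CommonJS API (queue v6 and earlier).
    const queue = require('queue');
    const myQueue = queue();

    myQueue.push((done) => {
      // Long running task here
      done(); // signal completion so the queue can continue
    });

    myQueue.start();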

Conclusion

After implementing these optimizations, it's vital to run another series of load tests to compare the before and after scenario. This iterative process helps you understand the impact of the changes and guides further optimizations. Always aim for a balance between cost and performance, adjusting your approach as your application and user base grow.
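The before and after comparison can be reduced to percentage changes per metric. The following sketch shows one way to do that; `percent_change` and `compare_runs` are hypothetical helpers, and the numbers are invented for illustration rather than taken from a real test run.

```python
def percent_change(before, after):
    """Relative change from before to after, in percent."""
    if before == 0:
        raise ValueError("cannot compute a relative change from zero")
    return (after - before) / before * 100.0


def compare_runs(before, after):
    """Percentage change for every metric present in both runs."""
    return {
        metric: percent_change(before[metric], after[metric])
        for metric in before
        if metric in after
    }


# Hypothetical results from two load test runs
baseline = {"avg_ms": 200, "p95_ms": 800, "rps": 300}
optimized = {"avg_ms": 150, "p95_ms": 600, "rps": 360}

changes = compare_runs(baseline, optimized)
for metric, change in changes.items():
    print(f"{metric}: {change:+.1f}%")
```

Lower is better for response times and higher is better for throughput, so read the sign of each change in the context of its metric.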

Best Practices for Continuous Load Testing

In modern software development practices, continuous integration and deployment play a vital role in ensuring the timely release of features and updates. Similarly, continuous load testing is essential to guarantee that your Node.js Express applications can handle real-world traffic and stressors effectively. Below, we explore strategic practices to seamlessly incorporate load testing into your development lifecycle, automate processes, and maintain test relevance and accuracy over time.

Integrate Load Testing into Continuous Integration Pipelines

Automate the execution of load tests by integrating them into your CI/CD pipeline. This ensures that performance tests are run automatically every time code is pushed to your repository, helping to catch performance regressions early in the development cycle. Tools like Jenkins, GitLab CI, and GitHub Actions can be configured to trigger LoadForge tests using simple scripts.

Example CI Pipeline Configuration with LoadForge:

    # Trigger Load Test using LoadForge API in a CI/CD pipeline step
    curl -X POST -H 'Authorization: Token YOUR_API_TOKEN' \
      -d 'test_id=YOUR_TEST_ID&notes=CI build $(Build.Id)' \
      https://app.loadforge.com/api/tests/run/
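If your pipeline step runs Python rather than a shell, the same request can be sketched with the standard library. The endpoint, the Authorization header format, and the form fields are copied from the curl example above and have not been verified against the LoadForge API; `build_run_request` is a hypothetical helper. The sketch only builds the request, so nothing is sent until you call `urlopen`.

```python
import urllib.parse
import urllib.request


def build_run_request(api_token, test_id, notes):
    """Build (but do not send) a POST request that mirrors the curl example."""
    body = urllib.parse.urlencode({"test_id": test_id, "notes": notes}).encode("ascii")
    return urllib.request.Request(
        "https://app.loadforge.com/api/tests/run/",
        data=body,
        headers={"Authorization": f"Token {api_token}"},
        method="POST",
    )


request = build_run_request("YOUR_API_TOKEN", "YOUR_TEST_ID", "CI build 123")
print(request.get_method(), request.full_url)

# To actually trigger the test from CI (requires network access and a valid token):
# with urllib.request.urlopen(request, timeout=30) as response:
#     print(response.status)
```

Read the token from a CI secret or environment variable rather than hard-coding it in the pipeline definition.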

Employ Canary Releases and Blue/Green Deployments

Use canary releases or blue/green deployments to slowly roll out changes to a small subset of users before a full deployment. This allows you to perform load tests in production-like environments under controlled conditions, reducing risks associated with new releases.

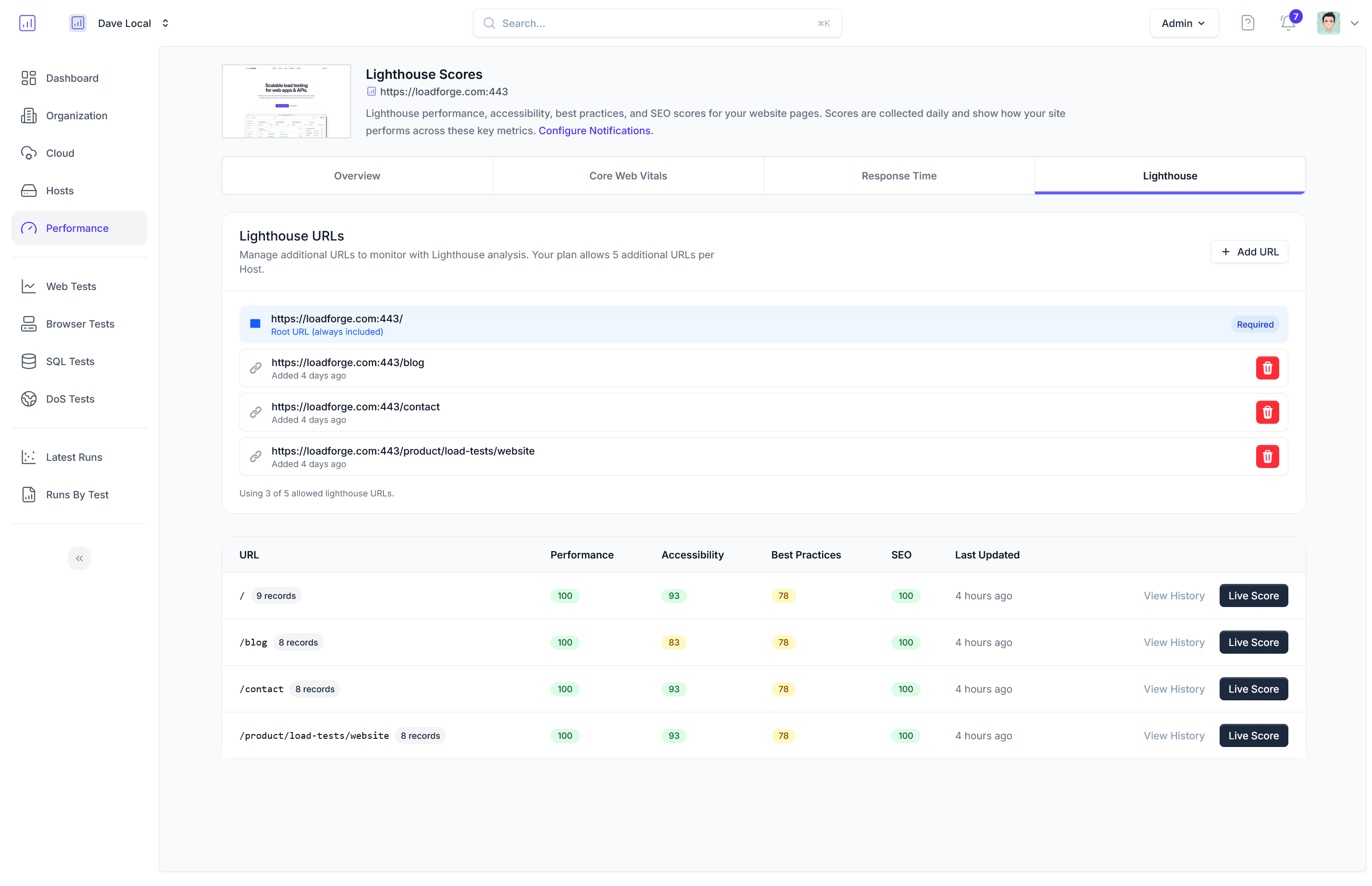

Utilize Automated Performance Monitoring

Automatically collect and analyze performance data after each deployment or test. Tools like New Relic, Datadog, or Prometheus can be useful to track your application's performance metrics continuously. Set alerts for anomalous behaviors to rapidly address issues that might not have been caught during pre-deployment testing.

Maintain Load Test Accuracy and Relevance

-

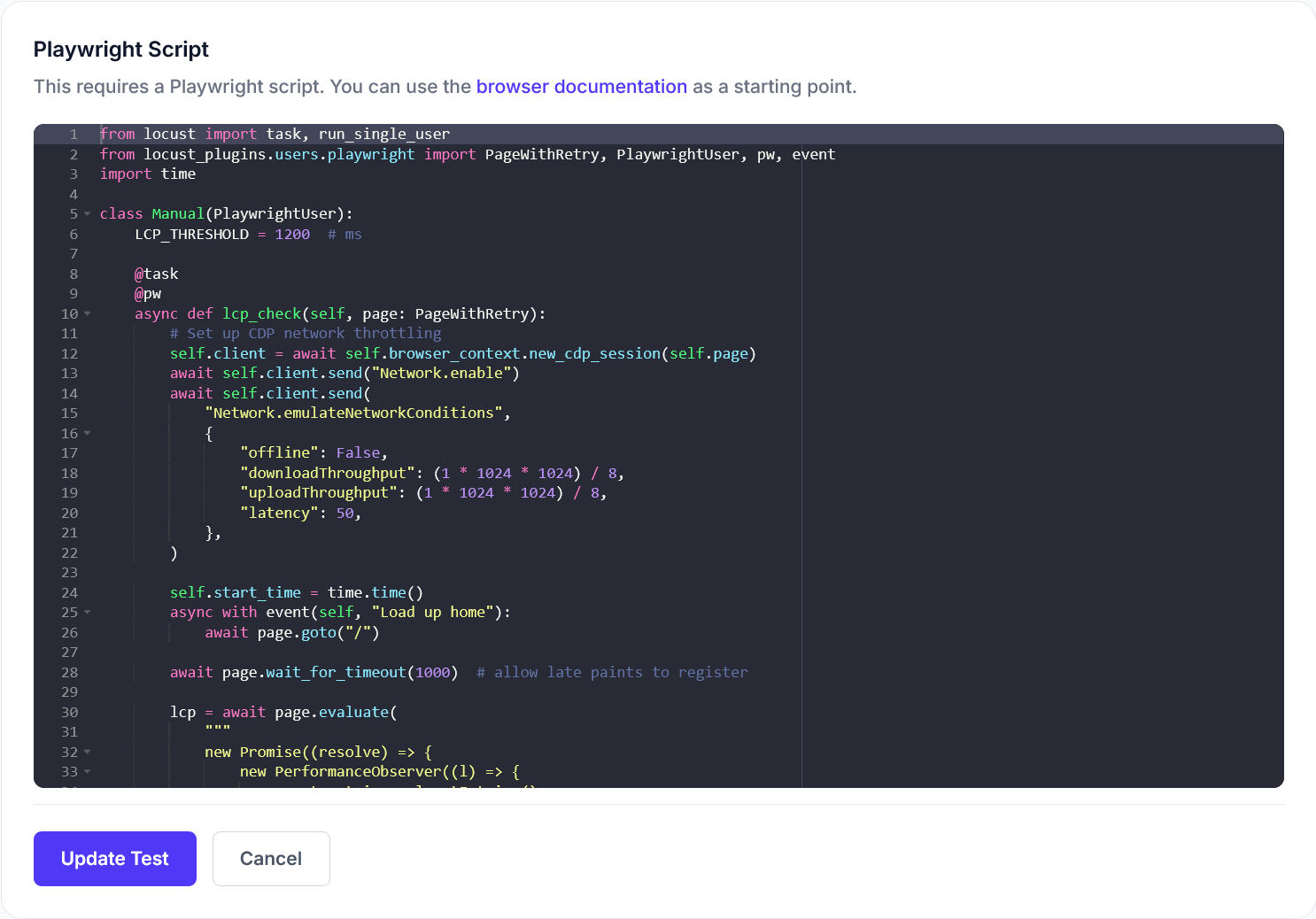

Update Your Locustfiles Regularly: As your application evolves, so should your tests. Regularly review and update your Locustfiles to reflect new features, endpoints, and user behaviors to ensure your testing scenarios remain realistic and cover all critical paths of your application.

-

Scale Tests Over Time: As usage patterns change and your application scales, your load tests should also scale to simulate accurate real-world usage. Increase the volume and complexity of tests progressively to match your growth.

-

Diverse Testing Scenarios: Include diverse testing scenarios to cover various user behaviours and peak traffic periods. This helps ensure your application can handle different load types and volumes.

Implement Performance Budgets

Set performance budgets for key metrics such as response time, throughput, and error rates. Enforce these budgets within your testing and deployment pipelines. If a new feature or release exceeds these budgets, it should automatically flag a performance degradation and warrant further optimization before deployment.
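A budget check of this kind can be a small script that compares test results with limits and fails the pipeline step on any violation. The sketch below is one possible shape. `budget_violations`, the metric names, and the limits are all hypothetical, and how you obtain the metrics from a test run depends on your tooling.

```python
def budget_violations(metrics, budgets):
    """Return a message for every metric that breaks its budget.

    budgets maps a metric name to ("max", limit) or ("min", limit).
    """
    violations = []
    for name, (kind, limit) in budgets.items():
        value = metrics[name]
        if kind == "max" and value > limit:
            violations.append(f"{name} = {value} exceeds the maximum of {limit}")
        elif kind == "min" and value < limit:
            violations.append(f"{name} = {value} is below the minimum of {limit}")
    return violations


# Hypothetical budgets and results
budgets = {
    "p95_ms": ("max", 500),
    "error_rate_pct": ("max", 1.0),
    "rps": ("min", 250),
}
results = {"p95_ms": 620, "error_rate_pct": 0.5, "rps": 300}

violations = budget_violations(results, budgets)
for message in violations:
    print("BUDGET VIOLATION:", message)

# In a CI step, pass this value to sys.exit() to fail the build on violations
exit_code = 1 if violations else 0
```

Here only the 95th percentile response time is over budget, so the step would fail with a single message explaining why.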

Continuous Learning and Adaptation

Regularly review the outcomes of load tests to identify trends and areas for improvement. Hold periodic retrospectives with your development, testing, and operations teams to discuss test results, performance changes, and necessary improvements in both application code and infrastructure.

Continuous load testing is not just about finding bottlenecks; it's about proactively improving application performance and user satisfaction. By following these best practices, your Node.js Express applications can remain robust, responsive, and scalable, regardless of user load or data volume.