Introduction to Load Testing Caddy

Load testing is an essential part of any robust web development and deployment strategy, including deployments built on efficient, high-performance web servers like Caddy. Caddy is known for its simplicity and speed: it enables HTTPS automatically and needs very little configuration. However, even an efficiently designed server can falter under unexpected load. This section explains why stress-testing your Caddy server matters and what effective load testing can improve.

Why Load Test Your Caddy Server?

Load testing your Caddy server assists in identifying how well your application will perform under significant strain. This encompasses a range of benefits:

- Performance Benchmarking: Establish how many requests per second your Caddy server can handle before performance begins to degrade.

- Scalability Insights: Determine whether your current infrastructure is adequate to handle expected traffic volumes, and identify scaling strategies for peak times.

- Reliability Assurance: Stress tests can reveal points of failure in your server's configuration that could cause downtime during critical periods.

- Resource Utilization Analysis: Understand how effectively your server uses resources like CPU, memory, and network bandwidth under different load conditions.

- Enhanced User Experience: By ensuring that your server responds quickly even under heavy loads, you contribute directly to a smoother, more responsive user experience.

How Load Testing Translates to Real-world Benefits

Implementing regular load testing sessions for your Caddy server not only helps in reinforcing your server's performance metrics but also aids in several strategic areas:

- Proactive Problem Identification: Before deploying updates to production, load testing can help predict how changes will affect performance. This proactive approach can prevent potential problems post-deployment.

- Informed Decision-making: Data from load tests can guide infrastructure investments, showing precisely where upgrades are needed (whether hardware or software).

- Customer Trust and Satisfaction: Servers that perform well even under heavy loads are less likely to go down unexpectedly, thus reducing negative user experiences and building trust over time.

Conclusion

Understanding the critical role load testing plays in preparing a Caddy server to handle real-world traffic and strain effectively is the first step towards sustainability and superior performance. Early identification of potential pitfalls and continuous performance enhancements ensures that your server can not only meet but exceed both your business needs and customer expectations, forming a foundational aspect of a high-quality web service deployment.

By integrating regular load testing into your development cycle, particularly using a comprehensive solution like LoadForge, you arm yourself with the knowledge and capability to optimize your Caddy deployment continually.

In the following sections, we will delve deeper into how to configure LoadForge, write appropriate locustfiles for Caddy, and leverage the resulting data to fine-tune your server's performance.

Understanding the Basics of Caddy

Caddy is an open-source web server designed for simplicity and usability, with minimal setup required. It is best known for enabling HTTPS by default, serving your sites over TLS without additional configuration. Caddy also supports HTTP/2 out of the box, making it an attractive choice for high-performance web applications.

Key Features of Caddy

- Automatic HTTPS: Caddy obtains and renews TLS certificates from a certificate authority such as Let's Encrypt and configures your site to use HTTPS automatically.

- HTTP/2 Support: It supports HTTP/2 by default, which allows a faster, more secure, and more efficient web browsing experience.

- Easy Configuration: Caddy is typically configured through a single Caddyfile designed to be intuitive, and configuration changes can be applied through graceful reloads with zero downtime.

- Extensible: Caddy can be extended with plugins that add functionality such as authentication, caching, rate limiting, and more.

Why Caddy is Popular

- Simplicity: One of Caddy's standout features is its simplicity in configuration and operation. It does not require extensive knowledge of web server administration to deploy a secure, high-performance web server.

- Built-in Security: With automatic HTTPS and updates, users can trust that their web server is secure by default.

- Scalability: Although easy for beginners, Caddy is robust enough for production environments and can sustain high request rates; the exact throughput depends on your hardware, content, and configuration, which is what load testing measures.

- Cross-Platform: Caddy runs equally well on Windows, macOS, Linux, and BSD systems which makes it versatile for various deployment scenarios.

Importance of Optimal Configuration Under Load

While Caddy is designed to handle loads efficiently out of the box, certain high-traffic situations require advanced configuration. These configurations can help Caddy handle more requests per second, manage new connections more smoothly, and utilize system resources more efficiently. For instance, adjusting timeouts, enabling rate limits, or optimizing cache settings can significantly impact the server's performance under load. Proper load testing ensures that these configurations are tailored perfectly to meet the specific demands of your application in production environments.

By understanding these fundamental aspects of Caddy, it's clear why it's crucial to conduct comprehensive load testing. Ensuring your Caddy server is optimally configured enhances not only performance but also the reliability and security of your web services. This sets the stage for a deeper exploration into preparing and executing effective load tests with LoadForge.

Setting Up Your LoadForge Test

Before diving into the specifics of creating and running a locustfile for your Caddy server, let's start by setting up a basic load test scenario using LoadForge. This will involve configuring several critical parameters such as the number of simulated users, the duration of the test, and the geographical distribution of load generators. Each parameter plays a crucial role in understanding how your server behaves under different stress conditions.

Selecting the Number of Users

The number of users you simulate in a load test critically impacts how your Caddy server responds during peak periods. To configure this:

- Log in to your LoadForge account.

- Navigate to the Create Test section.

- Under the Users field, specify the desired number of virtual users you want to simulate. This can range from a few hundred for smaller, internal applications to many thousands for robust, customer-facing sites.
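When choosing a user count, it can help to work backwards from a target request rate. The sketch below is a rough planning aid rather than anything LoadForge computes for you: it assumes each simulated user sends one request, waits for the response, then pauses for an average think time, so N users produce roughly N / (wait + response) requests per second. The function name and the sample figures are assumptions for illustration.

```python
import math


def users_for_target_rps(target_rps, avg_wait_s, avg_response_s):
    """Estimate the simulated users needed to sustain target_rps.

    Each user completes one request every (wait + response) seconds,
    so N users generate about N / (wait + response) requests per second.
    """
    cycle_s = avg_wait_s + avg_response_s
    if cycle_s <= 0:
        raise ValueError("wait time plus response time must be positive")
    return math.ceil(target_rps * cycle_s)


# Hypothetical inputs: 400 req/s target, 3 s average wait, 0.25 s responses.
print(users_for_target_rps(400, 3.0, 0.25))  # prints 1300
```

The estimate only holds while the server keeps up; once response times grow under load, the same number of users produces fewer requests per second.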

Setting the Test Duration

The duration of the test determines how long the simulated users will interact with your Caddy server. A longer duration can provide more comprehensive insights, especially for observing how the server handles prolonged stress.

- Within the same Create Test section, locate the Duration field.

- Enter the time in seconds or minutes, depending on your test requirements. Common durations include short bursts (1-5 minutes) to test server responsiveness, or extended periods (30-60 minutes) to check for issues like memory leaks or performance degradation over time.

Configuring Geographic Distribution

LoadForge allows you to distribute your simulated load across various geographical regions. This is particularly useful to understand how network latency affects user experience in different parts of the world.

- Scroll down to the Regions section in your test setup.

- Choose from available locations to simulate users from different areas. Common selections include regions where your primary user base is located, or areas known for slower internet speeds to test under adverse conditions.
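If you want the regional mix of simulated users to mirror your real traffic, the arithmetic can be sketched as follows. This is planning arithmetic only and says nothing about how LoadForge itself assigns users to regions; the function name and the 70/30 traffic split are assumptions, and the region names are borrowed from this guide's sample configuration.

```python
def split_users(total_users, traffic_shares):
    """Split total_users across regions in proportion to traffic_shares.

    Uses largest-remainder rounding so the parts always add up to total_users.
    """
    weight_sum = sum(traffic_shares.values())
    if weight_sum <= 0:
        raise ValueError("traffic shares must sum to a positive number")
    exact = {r: total_users * s / weight_sum for r, s in traffic_shares.items()}
    allocation = {r: int(v) for r, v in exact.items()}
    leftover = total_users - sum(allocation.values())
    # Give the remaining users to the regions with the largest fractional parts.
    by_remainder = sorted(exact, key=lambda r: exact[r] - allocation[r], reverse=True)
    for region in by_remainder[:leftover]:
        allocation[region] += 1
    return allocation


# Assumed split: 70% of real traffic from the US west coast, 30% from western Europe.
print(split_users(5000, {"USA-West": 70, "Europe-West": 30}))
```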

Finalizing Your Test Configuration

After setting your user count, duration, and geographic distribution, you’ll be ready to move forward with writing a locustfile that will define the specific actions these users will undertake. Here’s a quick checklist to ensure you’re set:

- Review the number of users and test duration: Make sure these figures realistically simulate your expected traffic.

- Confirm the geographic locations: Ensure they align with your user demographic or testing hypothesis.

- Save your test configuration: Use a descriptive name that allows you to easily identify the test in future.

Here's what your basic test setup might look like in LoadForge's interface:

    Number of Users: 5000
    Test Duration: 300 seconds
    Regions: USA-West, Europe-West

Now that your basic load test configuration is complete, you can move on to creating a tailored locustfile that will precisely define how these users interact with your Caddy server.

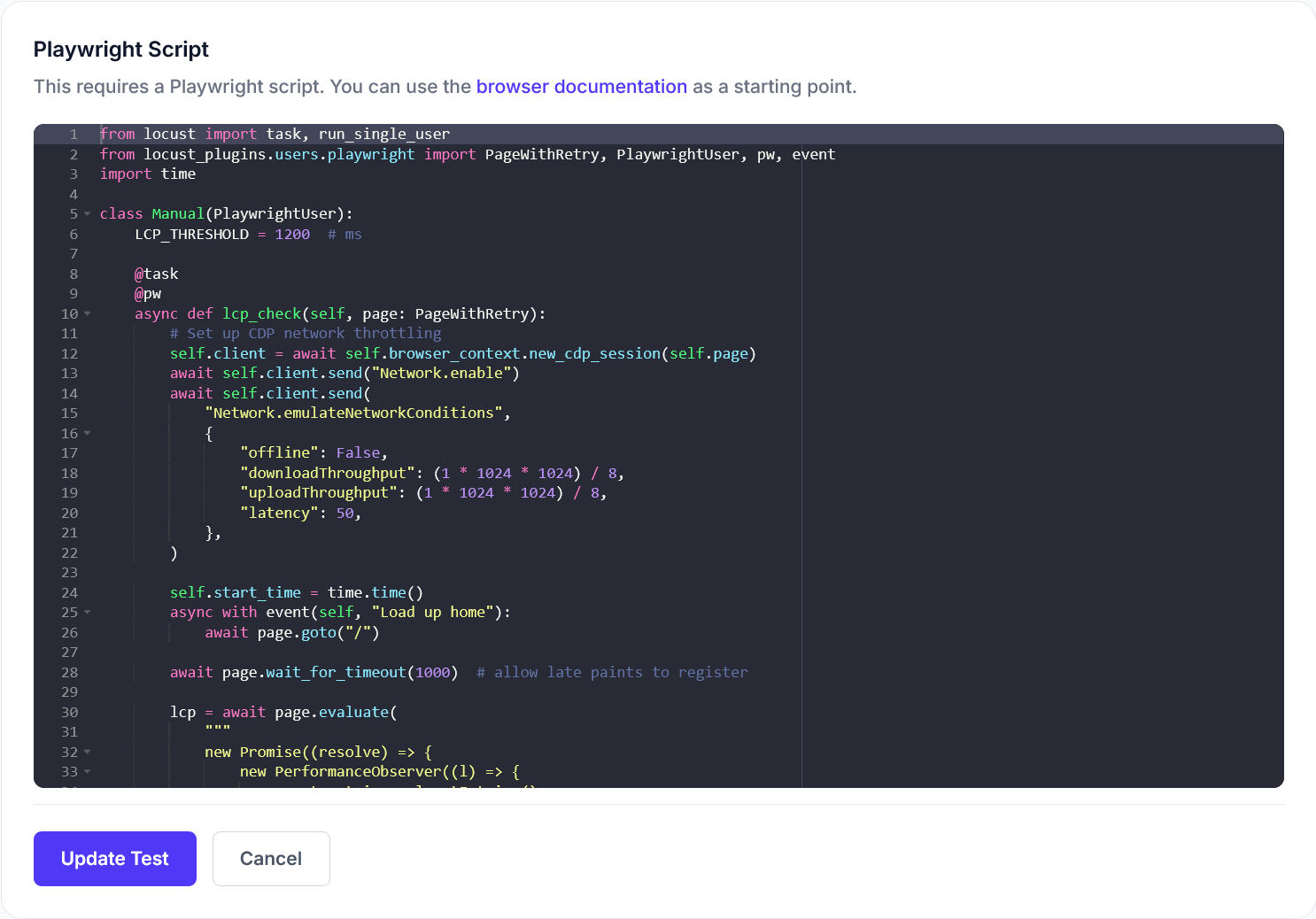

Writing Your Locustfile for Caddy

In this section, we'll walk you through the process of creating a Locustfile specifically designed for load testing a Caddy server. This file will contain Python scripts that simulate user behaviors, such as accessing multiple URLs that your Caddy server hosts. Whether you're testing the responsiveness of static pages, dynamic content, or a RESTful API, the principles covered here can be adapted to suit those needs.

Understanding the Structure of a Locustfile

A Locustfile is essentially a Python script that defines the behavior of simulated users (typically referred to as "Locusts") within your test environment. Each user type can perform tasks, which are functions that simulate specific actions a real user might take, such as requesting a webpage.

Setup

Before we get started, ensure you've installed Locust by running:

    pip install locust

Basic Locustfile Example

Here's a simple example that defines a single user type which accesses the homepage of a website served by Caddy.

    from locust import HttpUser, task, between


    class WebsiteUser(HttpUser):
        # Each simulated user pauses 1 to 5 seconds between tasks
        wait_time = between(1, 5)

        @task
        def view_homepage(self):
            self.client.get("/")

Adding Complexity to Test Multiple URLs

Let's enhance this script to include multiple pages. This is useful for mimicking a more realistic usage pattern where users visit different parts of your website.

    from locust import HttpUser, task, between


    class WebsiteUser(HttpUser):
        wait_time = between(1, 5)

        @task(3)
        def view_homepage(self):
            self.client.get("/")

        @task(2)
        def view_blog(self):
            self.client.get("/blog")

        @task(1)
        def view_contact_page(self):
            self.client.get("/contact")

In this example, the @task decorator can take a weight as an argument, which influences how often that task is picked relative to others. Here, view_homepage is three times as likely to be executed as view_contact_page.
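To see what those weights mean for the traffic mix, the following sketch converts them into expected shares. It is plain arithmetic rather than part of the locustfile, and the helper name is an assumption; the task names and weights are the ones from the example above.

```python
def task_shares(weights):
    """Convert Locust-style task weights into the expected fraction of executions."""
    total = sum(weights.values())
    if total <= 0:
        raise ValueError("weights must sum to a positive number")
    return {name: weight / total for name, weight in weights.items()}


shares = task_shares({"view_homepage": 3, "view_blog": 2, "view_contact_page": 1})
for name, share in shares.items():
    print(f"{name}: {share:.1%}")
```

With weights of 3, 2, and 1, roughly half of all task executions hit the homepage, a third hit the blog, and a sixth hit the contact page. Because tasks are picked at random, short runs will deviate from these proportions.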

Simulating Advanced User Interactions

Suppose you want to simulate a user who logs into a dashboard. This scenario involves multiple steps — accessing the login page, submitting credentials, and then interacting with the dashboard. Here's how you could script that:

    from locust import HttpUser, SequentialTaskSet, between, task


    class LoginUser(HttpUser):
        wait_time = between(1, 3)

        class LoginBehavior(SequentialTaskSet):
            # Tasks in a SequentialTaskSet run in the order they are declared
            @task
            def login(self):
                # Send the credentials as form data
                self.client.post("/login", data={"username": "user", "password": "password"})

            @task
            def access_dashboard(self):
                self.client.get("/dashboard")

        # Every LoginUser runs the sequence above
        tasks = [LoginBehavior]

This script uses a SequentialTaskSet from Locust, allowing us to define multiple tasks that execute in a specific order. This is particularly useful for simulating workflows where the order of actions matters.

Conclusion

Writing an effective Locustfile for testing a Caddy server involves understanding both the basic and more complex user behaviors that your application needs to support. By starting with simple tasks and progressively adding complexity, you can simulate realistic traffic patterns and interactions, providing valuable insights into how well your Caddy server performs under various conditions. Keep experimenting with different user scenarios to cover all aspects of your application’s functionality.

Advanced Configuration Options

When load testing a Caddy server using LoadForge and a custom locustfile, understanding how to utilize advanced configuration options can significantly enhance the accuracy and relevancy of your tests. This section dives into some of these advanced settings, including customization of HTTP headers, session data maintenance, and simulation of complex user interactions, which are crucial for mimicking realistic traffic scenarios on your Caddy server.

Customizing HTTP Headers

Custom HTTP headers can be crucial for tests where the server response might depend on specific header values. For example, if your Caddy server delivers different content based on user-agent or authentication tokens, you would need to mimic this in your locustfile. Here’s how you could add custom headers in your locustfile:

    from locust import HttpUser, task, between


    class MyUser(HttpUser):
        wait_time = between(1, 5)

        def on_start(self):
            # Headers sent with each request made by this user
            self.headers = {
                "User-Agent": "LoadForgeBot/1.0",
                "Authorization": "Bearer YOUR_ACCESS_TOKEN",
            }

        @task
        def load_main_page(self):
            self.client.get("/", headers=self.headers)

Maintaining Session Data

Maintaining session data across requests is important for simulating a user who performs a series of actions during their visit. Locust's HttpUser class handles this for you: each user's client keeps cookies between requests, so a session cookie set at login is sent with the requests that follow. An example simulation might look like this:

    from locust import HttpUser, task, between


    class AuthenticatedUser(HttpUser):
        wait_time = between(1, 5)

        def on_start(self):
            # Runs once per simulated user, before any tasks
            self.client.post("/login", data={"username": "user", "password": "password"})

        @task
        def view_secret_page(self):
            # The session cookie set during login is sent automatically
            self.client.get("/secret-page")

Simulating Complex User Interactions

For more complex scenarios where a user might interact with multiple parts of the application in a session, chaining tasks together can simulate this behavior. You can define tasks that perform multiple actions, and by using the @task decorator, you can assign different weights to different behaviors to control their frequency.

    from locust import HttpUser, task, between


    class UserBehavior(HttpUser):
        wait_time = between(1, 3)

        @task(3)
        def browse_products(self):
            self.client.get("/products")

        @task(1)
        def post_review(self):
            self.client.post("/submit-review", data={"product_id": 42, "review_text": "Great product!"})

In this example, the user browses products three times more frequently than they post reviews.

Advanced LoadForge Options

Beyond the locustfile, LoadForge provides additional configuration settings directly from its interface such as:

- Geographic Distribution: Setting up test origins from different locations to simulate global traffic.

- Custom Load Profiles: Adjust ramp-up times and peak user counts to model different load scenarios, like flash sales or product launches.

Make sure you leverage these advanced options to tailor your load testing efforts very closely to the real-world scenarios that your Caddy server will face. This will provide the insights needed to ensure optimal performance under diverse conditions.
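A custom load profile such as a flash sale can be described as a list of stages. The sketch below is a minimal illustration of that idea in plain Python; the stage values and names are hypothetical, and whether a given LoadForge plan accepts custom shapes is not something this guide confirms. In Locust itself, a function like this could back the tick() method of a LoadTestShape subclass, which returns a (user_count, spawn_rate) tuple or None to stop the test.

```python
def users_at(run_time_s, stages):
    """Return (user_count, spawn_rate) for the stage covering run_time_s.

    Returns None once run_time_s is past the last stage, which signals
    that the test should stop.
    """
    for stage in stages:
        if run_time_s < stage["until_s"]:
            return stage["users"], stage["spawn_rate"]
    return None


# Hypothetical flash-sale profile: warm up, spike, then settle.
FLASH_SALE = [
    {"until_s": 60, "users": 500, "spawn_rate": 10},
    {"until_s": 180, "users": 5000, "spawn_rate": 100},
    {"until_s": 300, "users": 1000, "spawn_rate": 50},
]

for second in (0, 90, 240, 300):
    print(second, users_at(second, FLASH_SALE))
```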

Executing the Load Test

Once you have your locustfile written and ready, the next step is to launch your load test using LoadForge. This section will guide you through the process of running the test, monitoring it in real-time, and understanding which metrics are crucial during the test phase.

Step 1: Launching the Test

To start the load test on your Caddy server:

- Log in to your LoadForge account: Navigate to the LoadForge dashboard.

- Select Your Test Options:
  - Choose the locustfile you have prepared.
  - Specify the number of simulated users.
  - Set the duration for the test.
  - Choose the geographic distribution of load generators if necessary.

- Start the Test: Click on the ‘Run Test’ button. LoadForge will distribute the load according to your specifications and begin the test.


Step 2: Monitoring Real-Time Results

As the test runs, you can watch the progress in real-time on the LoadForge dashboard. Key metrics to monitor include:

- User Load: The number of users at any given moment.

- Response Times: Average, median, and max response times.

- Request Rate: The number of requests per second.

- Failure Rate: The percentage of failed requests.

It is crucial to keep an eye on these metrics as they provide immediate feedback on how your Caddy server is handling the load.

Step 3: What to Watch For

Key Metrics:

- Response Time: Longer response times can indicate a bottleneck in server performance.

- Errors and Failures: High error rates may reveal issues with server configuration or capacity.

Performance Thresholds:

- Establish performance thresholds before the test. For instance, you may decide that any response time above 2 seconds requires investigation.
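Writing thresholds down as data before the run makes them easy to check afterwards. The following is a minimal sketch under assumed names: the metric keys and the 1% error-rate limit are illustrative, while the 2-second response-time limit is the example from the text.

```python
def check_thresholds(metrics, limits):
    """Return a list of violations; an empty list means every threshold was met."""
    violations = []
    for name, limit in limits.items():
        value = metrics.get(name)
        if value is None:
            violations.append(f"{name}: no measurement")
        elif value > limit:
            violations.append(f"{name}: {value} exceeds limit {limit}")
    return violations


# Limits agreed before the test (error-rate limit is an assumed example).
LIMITS = {"p95_response_s": 2.0, "error_rate": 0.01}

# Hypothetical measurements from one run.
run = {"p95_response_s": 2.4, "error_rate": 0.002}
print(check_thresholds(run, LIMITS))
```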

Server Resource Utilization:

- Monitor the CPU, memory, and network usage on the server where Caddy is running. High resource usage might indicate a need for server optimization or scaling.

Making Adjustments

In case you notice problematic metrics during the test, consider stopping the test to make necessary configuration adjustments to your Caddy server or the test itself. This flexibility helps in optimizing the test without waiting for its scheduled completion.

By closely watching these metrics and understanding their implications, you can gain valuable insights into how well your Caddy server stands up under pressure. Next, we will look at analyzing these results in detail in the following section.

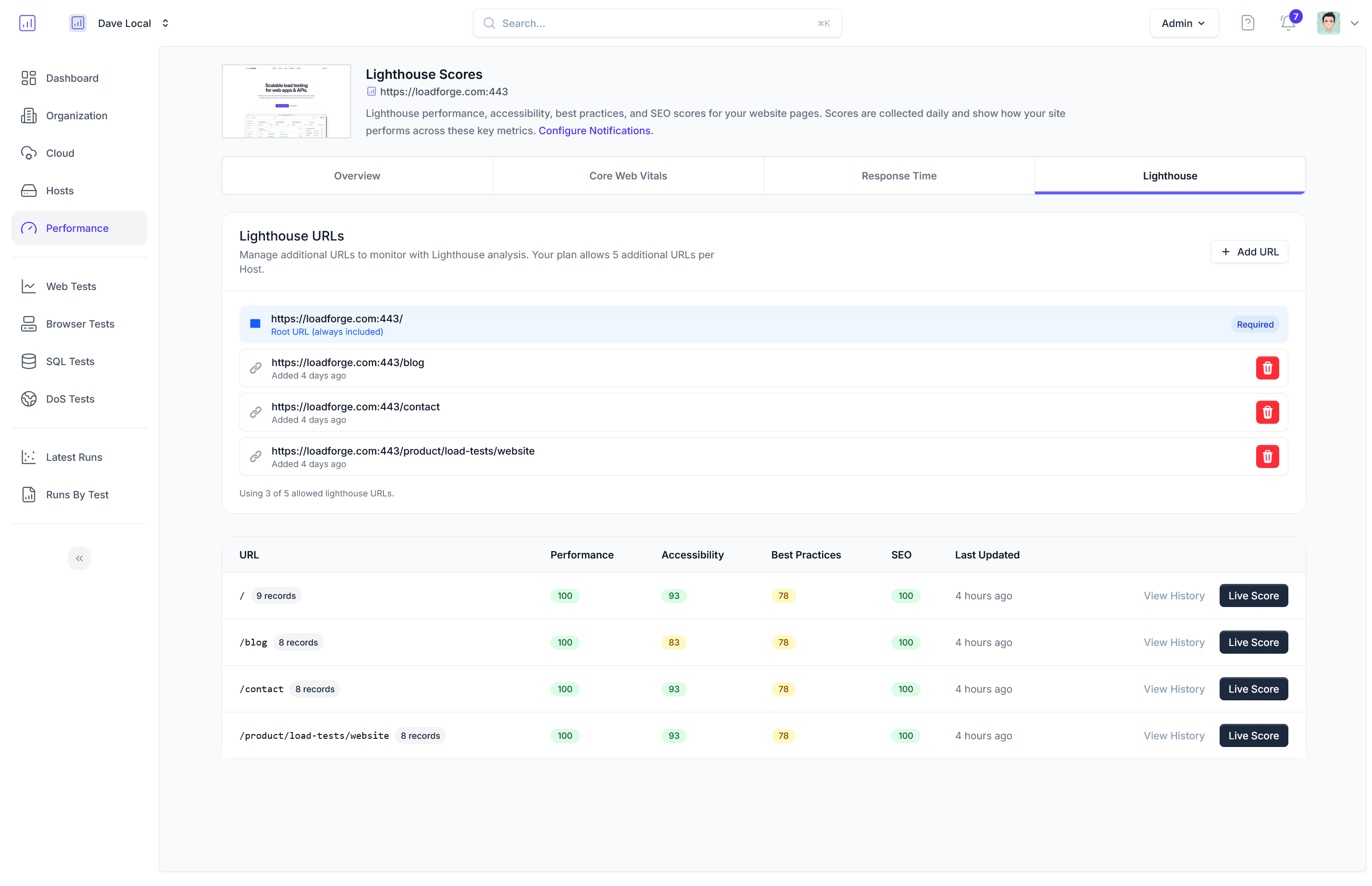

Analyzing Test Results

After conducting your load test on your Caddy server with LoadForge, the next crucial step is analyzing the results. This analysis will help you understand how your server behaves under stress, identify any critical bottlenecks, and measure the performance limits under various load conditions. Here, we'll guide you through the process of interpreting the load test data effectively.

Understanding the Metrics

LoadForge provides several metrics that are key indicators of your server's performance:

- Requests Per Second (RPS): This is the number of requests your server can handle each second. It's a direct indicator of the throughput of your server.

- Response Time: This includes the average, median, and 95th percentile response times. These metrics give insights into how long it takes for your server to respond to requests under different conditions.

- Error Rate: The percentage of failed requests. A high error rate may indicate problems with server configuration or insufficient resources.
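To make these definitions concrete, here is a small sketch that derives the same metrics from raw request samples. The input format (a list of response-time and success pairs) and the function names are assumptions for illustration, not a LoadForge export format, and the percentile uses the simple nearest-rank method.

```python
import statistics


def percentile(sorted_values, pct):
    """Nearest-rank percentile of a sorted, non-empty list (pct is a whole number)."""
    rank = (pct * len(sorted_values) + 99) // 100  # integer ceiling of pct% of n
    return sorted_values[max(rank, 1) - 1]


def summarize(samples, duration_s):
    """samples: list of (response_time_s, succeeded) pairs gathered over duration_s seconds."""
    if not samples or duration_s <= 0:
        raise ValueError("need at least one sample and a positive duration")
    times = sorted(t for t, _ in samples)
    failures = sum(1 for _, ok in samples if not ok)
    return {
        "rps": len(samples) / duration_s,
        "avg_s": statistics.mean(times),
        "median_s": statistics.median(times),
        "p95_s": percentile(times, 95),
        "error_rate": failures / len(samples),
    }


# Hypothetical run: 18 fast requests, one slow request, one slow failure, over 10 seconds.
samples = [(0.1, True)] * 18 + [(0.5, True), (2.0, False)]
print(summarize(samples, duration_s=10))
```

In this made-up sample the median stays at 0.1 seconds while the average is pulled up by the two slow requests, which is why percentiles are usually more informative than averages when reading load test results.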

Identifying Bottlenecks

To identify potential bottlenecks, pay close attention to these areas:

- Spikes in Response Time: Look for sudden increases in response times at certain user loads. This indicates that your server might be starting to struggle.

- Increased Error Rates: An increase in error rates along with increased load is a clear indicator that the server is failing to cope with the number of requests.

- CPU and Memory Usage: Monitor the server’s CPU and memory usage. High utilization might suggest the server is resource-constrained.

Analyzing the Load Distribution

Examine the distribution of load across different endpoints. This can be seen from the number of requests per second each endpoint is handling:

Endpoint       | Requests Per Second
-------------- | -------------------
/api/data      | 500
/api/login     | 200
/static/images | 450

This table helps understand which parts of your system are under the most stress, and where optimizations may need to concentrate.

Performance Limits

To determine the performance limits of your Caddy server, observe the point at which the increase in load does not result in a proportional increase in handled requests (saturation point) or where the system metrics such as response time and error rate start to degrade significantly.
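One way to locate the saturation point from a stepped test is to compare the throughput gained per added user with the per-user throughput seen at light load. The sketch below assumes you have recorded (users, requests per second) pairs at increasing load levels; the function name, the 50% cutoff, and the sample figures are all assumptions.

```python
def find_saturation(steps, min_efficiency=0.5):
    """Find where added users stop producing a proportional gain in throughput.

    steps: list of (users, rps) pairs in increasing user order.
    Returns the user count of the first step whose marginal throughput per
    added user falls below min_efficiency times the per-user throughput of
    the first step, or None if no step does. Saturation lies somewhere
    between the returned step and the one before it.
    """
    if len(steps) < 2:
        return None
    first_users, first_rps = steps[0]
    baseline = first_rps / first_users  # requests per second per user at light load
    for (prev_users, prev_rps), (users, rps) in zip(steps, steps[1:]):
        marginal = (rps - prev_rps) / (users - prev_users)
        if marginal < min_efficiency * baseline:
            return users
    return None


# Hypothetical stepped test: throughput flattens between 400 and 800 users.
steps = [(100, 50.0), (200, 100.0), (400, 195.0), (800, 230.0)]
print(find_saturation(steps))  # prints 800
```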

Visualizing the Data

Using the LoadForge dashboard, visualize the test data through graphs for a better understanding. Patterns and trends are often easier to spot in a visual representation:

- Response time graph: Helps in observing how response times vary with increased load.

- Throughput graph: Shows how requests per second are sustained or vary as the load increases.

Conclusion

By thoroughly analyzing your load test results, you can gain valuable insights into how your Caddy server performs under various conditions. Identifying the bottlenecks and understanding the limits of your current configuration are critical steps in optimizing your server's performance and ensuring a smooth experience for your users. Remember, the goal is not just to handle peak load, but to do so efficiently while maintaining a good user experience.

Optimizing Your Caddy Configuration

Once you have completed a load test on your Caddy server using LoadForge, the next crucial step is to analyze the data obtained and optimize your Caddy configuration. Effective optimization will help ensure that your server can handle increased traffic while maintaining good performance and delivering optimal user experiences. Here, we provide practical tips and strategies based on typical insights gained from load test results.

1. Adjust Resource Allocation

The load test might show that your Caddy server is running out of CPU or memory during peak loads. Consider increasing the resources available to your server, especially if it's running in a virtualized environment:

- CPU: Allocate more CPU cores if you notice high CPU usage.

- Memory: Increase memory allocation if your server is frequently hitting memory limits.

2. Optimize Caddy's Configuration File

Your Caddyfile controls how the server behaves. Based on the test results, you might want to adjust settings such as timeouts or rate limits.

3. Implement Caching Strategies

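Caching can significantly improve response times and reduce load. In Caddy, caching is typically added through a plugin or by setting Cache-Control response headers so that browsers and CDNs can reuse responses. Consider it for static content and even some dynamic content: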

- Static File Caching: Enable caching for static assets like images, CSS, and JavaScript files.

- Dynamic Caching: Consider caching dynamic content that does not change frequently.

4. Fine-Tune Load Balancing

If you're running multiple instances of Caddy as part of a load-balanced setup, tuning your load balancing strategy based on the test results can distribute traffic more efficiently.

5. SSL/TLS Optimization

SSL/TLS configuration can be tweaked to enhance performance:

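- Session Resumption: TLS session resumption reduces the handshake time for returning visitors. Caddy 2 enables TLS session tickets by default, so this normally requires no extra configuration; note that the Caddyfile tls directive has no session_tickets option, and ticket behavior is adjusted through the session_tickets settings of the tls app in Caddy's JSON configuration.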

- Ciphers: Optimize cipher suites to balance security and performance.

6. Regularly Update Caddy

Keep your Caddy server updated to benefit from the latest features, performance improvements, and security patches. Regular updates can often resolve underlying issues identified during load testing.

7. Monitor and Continuously Improve

Post-optimization, it's essential to continue monitoring your server’s performance and conduct regular load tests to ensure the optimizations have the desired effect and to detect new areas for improvement.

By implementing these tips and continuously monitoring your Caddy server's performance, you can significantly enhance its ability to handle high traffic loads effectively. Regular load testing and optimization are key to maintaining an efficient and robust server infrastructure.

Conclusion

In this guide, we have explored the critical role that load testing plays in ensuring that your Caddy server can efficiently handle increasing volumes of traffic and maintain optimal performance under stressful conditions. Through the practical examples and methodologies discussed, you've learned not only how to set up and execute a comprehensive load test using LoadForge, but also how to analyze the results to pinpoint potential bottlenecks and areas for improvement.

Load testing is not a one-time task but rather an integral part of a continuous improvement process for maintaining server health and performance. Regular load testing allows you to:

- Detect Regression and Performance Drift: Regularly scheduled load tests can help detect any performance degradations that may occur due to code changes, updates, or new features. This proactive approach helps ensure that your server maintains its performance standards over time.

- Plan For Scalability: As user demand grows and traffic patterns evolve, ongoing load testing is critical to scaling your infrastructure effectively. It helps you to understand the maximum capabilities of your current setup and plan resource allocation more efficiently.

- Enhance User Experience: By continuously monitoring and enhancing the performance of your Caddy server, you can directly contribute to a smoother and faster user experience. This is crucial for retaining users and improving engagement rates.

- Reduce Risk of Downtime: Regular load testing can significantly minimize the risk of unexpected server failures by identifying critical stress points that could lead to downtime.
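Detecting regression and drift comes down to comparing each run against a stored baseline. The following is a minimal sketch of that comparison, assuming every tracked metric is "lower is better" (response times, error rates); the metric names, the 10% tolerance, and the sample numbers are hypothetical.

```python
def find_regressions(baseline, current, tolerance=0.10):
    """Return metrics that are worse than the baseline by more than tolerance.

    baseline, current: dicts mapping metric name to value, lower is better.
    tolerance: allowed relative increase (0.10 means 10%).
    """
    regressions = {}
    for name, base in baseline.items():
        if name not in current:
            continue  # metric not measured in this run
        now = current[name]
        if now > base * (1 + tolerance):
            regressions[name] = (base, now)
    return regressions


# Hypothetical numbers from last month's run and today's run.
baseline = {"p95_response_s": 0.8, "error_rate": 0.01}
current = {"p95_response_s": 1.0, "error_rate": 0.01}
print(find_regressions(baseline, current))
```

A check like this can run at the end of each scheduled test so that a slow drift is flagged as soon as it crosses the tolerance, not only when users notice it.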

Here's a simple periodic review plan you might consider implementing:

- Monthly Load Tests: Conduct standard load tests monthly to ensure consistent performance.

- After Major Updates: Always perform a load test after significant updates to your software or infrastructure.

- Annual Capacity Test: Conduct an extensive capacity test annually or biannually to determine if your current infrastructure can handle projected future loads.

    # Example: Scheduling Load Tests with Cron Jobs
    # Monthly test: runs at midnight on the 1st of each month
    0 0 1 * * /path/to/loadforge_test_script.sh

By integrating these practices into your development cycle, you can keep your Caddy server not just functioning, but excelling under varied loads. Nurturing a routine of regular testing and monitoring fosters a culture of performance awareness and responsiveness that can significantly differentiate your services in today's competitive landscape. Remember, a well-maintained server is the backbone of any successful digital service, and ongoing load testing is a cornerstone of good server maintenance.