Introduction

Efficient database querying is fundamental to the performance and scalability of any web application built with Phoenix. Phoenix is known for its high throughput and low latency, thanks in part to the robust Elixir ecosystem and the power of Ecto, its database wrapper and query generator. However, even the most well-designed Phoenix application can suffer from performance degradation if database interactions are not optimized.

Why Efficient Database Querying Matters

Database queries often constitute a significant portion of the latency in web applications. Inefficient queries can cause slow response times, increased server load, and a poor user experience. By optimizing your database queries, you can achieve:

- Lower Latency: Fast query execution translates to quicker response times for end-users.

- Higher Throughput: Efficient queries allow your server to handle more requests per second.

- Reduced Resource Consumption: Optimized queries consume fewer CPU and memory resources, which can lead to cost savings, especially in cloud environments.

- Improved Scalability: Efficient queries are essential for scaling your application to meet increasing traffic demands, ensuring a smooth user experience even under heavy load.

The Impact of Optimized Queries

Optimized database queries affect every layer of your application. From rendering web pages more quickly to ensuring data integrity and consistency, efficient queries are crucial for maintaining high performance. Here's how optimized queries can transform your application:

- Enhanced User Experience: Users expect fast and responsive applications. Quick query responses significantly contribute to a seamless user experience.

- Better Resource Utilization: Efficient queries reduce the load on your database server, free up resources, and ensure that your infrastructure can cope with higher traffic without requiring a proportional increase in hardware.

- Cost Efficiency: Lower resource usage translates directly to cost savings, especially if you are running your infrastructure in the cloud.

- Scalability and Reliability: As your application grows, optimized queries help maintain performance, making your application more scalable and reliable.

Goals of This Guide

In the sections that follow, we will explore various aspects of database querying within a Phoenix application. You'll learn about:

- The role of Ecto and Repo in database interactions.

- Selecting the right data types for efficient storage and retrieval.

- Implementing effective indexing strategies.

- Writing efficient SQL queries using Ecto.

- Addressing the N+1 query problem with Ecto associations and preloads.

- Leveraging caching mechanisms to reduce database load and improve response times.

- Managing database connection pools effectively.

- Monitoring database performance in real-time.

- Conducting load testing using LoadForge to identify and address performance bottlenecks.

By the end of this guide, you will have a deep understanding of how to write efficient database queries in Phoenix, ensuring that your application remains performant and scalable as it grows.

Let's get started!

## Understanding Ecto and Repo

Efficient database querying in Phoenix requires a solid understanding of its underlying mechanisms for interacting with the database. At the core of Phoenix's database capabilities lies **Ecto**, an Elixir library for working with databases, and **Repo**, the module responsible for executing database queries.

### What is Ecto?

Ecto is a domain-specific language (DSL) for interacting with databases in Elixir. It provides a comprehensive suite of tools for defining schemas, writing queries, and managing multi-level data transformations. Unlike raw SQL, Ecto offers a more Elixir-styled approach to database interactions, which allows for cleaner and more maintainable code.

**Key Features of Ecto:**

- **Schema Definitions:** Ecto allows you to define schemas that map to database tables, complete with field types and constraints.

- **Query DSL:** Ecto provides a powerful and expressive query DSL that can compile complex queries.

- **Changesets:** It includes a system for generating and validating changesets, which help ensure data integrity.

- **Multi-Database Support:** Ecto can connect and interact with different types of databases using various adapters.

### The Role of Repo

The **Ecto.Repo** module acts as the interface between your application and the database. It's responsible for executing queries, managing transactions, and handling connections.

**Repo Functions:**

- `insert/2`, `update/2`, `delete/2`: Perform insertions, updates, and deletions.

- `get/3`, `get_by/3`: Retrieve records by primary key or custom conditions.

- `all/2`: Fetch all records matching a given query.

- `one/2`: Fetch a single result from a query (or `nil` if no match is found).

### Basic Usage Examples

Here are some basic examples to illustrate how to define a schema, insert records, and query the database using Ecto and Repo.

#### Defining a Schema

First, let's define a schema for a `users` table:

<pre><code>

defmodule MyApp.User do

use Ecto.Schema

schema "users" do

field :name, :string

field :email, :string

field :age, :integer

timestamps()

end

end

</code></pre>

#### Inserting a Record

To insert a new user into the database, you use the `Repo.insert/2` function along with a changeset:

<pre><code>

defmodule MyApp.User do

use Ecto.Schema

import Ecto.Changeset

schema "users" do

field :name, :string

field :email, :string

field :age, :integer

timestamps()

end

def changeset(user, params) do

user

|> cast(params, [:name, :email, :age])

|> validate_required([:name, :email])

end

end

# Usage

user_params = %{name: "Jane Doe", email: "jane@example.com", age: 30}

changeset = MyApp.User.changeset(%MyApp.User{}, user_params)

case MyApp.Repo.insert(changeset) do

{:ok, user} -> IO.puts("User inserted successfully: #{user.name}")

{:error, changeset} -> IO.puts("Error inserting user")

end

</code></pre>

#### Querying the Database

To retrieve data, you can use functions like `Repo.get/3`, `Repo.all/2`, or the powerful query DSL:

<pre><code>

import Ecto.Query

alias MyApp.Repo

alias MyApp.User

# Retrieve all users

users = Repo.all(User)

# Retrieve a user by ID

user = Repo.get(User, 1)

# Complex querying using Ecto.Query

query = from u in User,

where: u.age > 18,

select: u.name

adult_names = Repo.all(query)

</code></pre>

### Conclusion

Understanding the role of **Ecto** and **Repo** is fundamental to efficient database querying in Phoenix. Ecto provides the schema definitions and query DSL, while Repo executes the queries and manages transactions. Mastering these tools will enable you to write more efficient, maintainable, and expressive database interactions in your Phoenix applications.

## Choosing the Right Data Types

When designing your database schema in Phoenix with Ecto, selecting the right data types is crucial for efficient storage and retrieval of data. Proper data types can significantly improve query performance and ensure that your application scales effectively. Here's a comprehensive guide to help you make informed decisions on data types:

### Understanding Data Types

Data types dictate how the data is stored, how much space it consumes, and how quickly it can be retrieved. Here are the commonly used data types in Ecto and PostgreSQL, which is the default database for Phoenix applications:

- **Integer**: Used for small to large integer values.

- **Float**: For floating-point numbers.

- **Decimal**: For precise fixed-point numbers.

- **String**: For text data.

- **Binary**: For binary data, including files.

- **Boolean**: For true/false values.

- **Date/Time**: For dates and times.

- **UUID**: For universally unique identifiers.

### Guidelines for Selecting Data Types

1. **Use the Smallest Appropriate Data Type**

- Choose the most space-efficient type that can accommodate your data. For instance, if you only need to store numbers between 1 and 100, use `:smallint` instead of `:integer`.

```elixir

field :age, :smallint

```

2. **Prefer Fixed-Size Types for Performance**

- Fixed-size types (e.g., `:integer`, `:float`) are generally faster to process than variable-size types (e.g., `:string`, `:text`). Use fixed-size types when possible.

3. **Use `String` for Short Text and `Text` for Long Text**

- Use `:string` for short, fixed-length text fields, and `:text` for long, variable-length text.

```elixir

field :username, :string

field :bio, :text

```

4. **Leverage `UUID` for Unique Identifiers**

- Using `:uuid` can be advantageous for unique identifiers, ensuring global uniqueness without sequential limitations.

```elixir

field :id, :uuid, primary_key: true

```

5. **Store Binary Data with `Binary`**

- Use `:binary` for data such as files or binary blobs. Ensure you have strategies for handling binary data efficiently.

```elixir

field :avatar, :binary

```

6. **Use `Decimal` for Monetary Values**

- For financial applications, use `:decimal` to handle precise monetary values and avoid floating-point precision issues.

```elixir

field :price, :decimal

```

7. **Optimize Dates and Times**

- For timestamps, prefer `:utc_datetime` or `:utc_datetime_usec` for precision. Use `:date` or `:time` for storing only dates or times.

```elixir

field :inserted_at, :utc_datetime

field :birthday, :date

```

### Common Pitfalls and Solutions

- **Overestimating Data Size**: Avoid using unnecessarily large data types (e.g., `:bigint` for small numbers). It wastes storage space and can slow down queries.

- **Using `String` for Everything**: While `:string` is versatile, misuse can lead to inefficient storage and queries. Choose more specific types whenever possible.

- **Ignoring Index Impact**: The choice of data type can impact index size and performance. Smaller and fixed-size data types lead to faster index operations.

### Practical Example

Let's consider a typical Ecto schema for a blog post. Notice how appropriate data types are chosen for efficiency:

<pre><code>defmodule MyApp.Blog.Post do

use Ecto.Schema

schema "posts" do

field :id, :uuid, primary_key: true

field :title, :string

field :body, :text

field :views, :integer

field :published, :boolean

field :published_at, :utc_datetime_usec

end

end

</code></pre>

### Conclusion

Selecting the right data types for your schema is foundational for achieving efficient database operations. By adhering to these guidelines, you can optimize storage, enhance query performance, and build a scalable Phoenix application. Always consider the nature of your data and choose the most appropriate types to ensure your application runs smoothly.

## Indexing Strategies

Indexes are a crucial aspect of database performance optimization that can significantly improve the speed of data retrieval in your Phoenix application. While they can enhance query performance, improper indexing can lead to increased storage usage and slower write operations. This section will delve into best practices for indexing tables to boost query performance, with detailed examples of when and how to create indexes effectively.

### Why Indexing Matters

Indexes function like the table of contents in a book, allowing the database to quickly locate rows without scanning the entire table. By efficiently narrowing down the search space, indexes can drastically reduce query execution time, especially for large datasets.

### When to Create Indexes

Creating too many indexes or unnecessary indexes can be counterproductive. Here are key scenarios where indexes are most beneficial:

- **Primary Keys and Unique Constraints**: These are indexed by default. Ensure that primary keys are used for their performance and data integrity benefits.

- **Foreign Keys**: Index foreign keys to expedite joins and improve query performance involving related tables.

- **Columns frequently used in WHERE clauses**: Index columns often queried with filters to accelerate data retrieval.

- **Columns frequently used in ORDER BY or GROUP BY clauses**: Indexing these columns can make sorting and grouping operations more efficient.

- **Composite Indexes**: Use these when queries frequently filter by multiple columns.

### Creating Indexes with Ecto

In Phoenix projects, you manage migrations using Ecto. Below are examples illustrating how to create indexes in your Ecto migrations.

For a single column index:

<pre><code>

defmodule MyApp.Repo.Migrations.AddIndexToUsersEmail do

use Ecto.Migration

def change do

create index(:users, [:email])

end

end

</code></pre>

For a composite index:

<pre><code>

defmodule MyApp.Repo.Migrations.AddIndexToPosts do

use Ecto.Migration

def change do

create index(:posts, [:user_id, :inserted_at])

end

end

</code></pre>

### Best Practices for Indexing

- **Avoid Over-Indexing**: Indexes speed up read operations but slow down writes (insert, update, delete) since the index itself needs to be updated. Aim for a balanced approach.

- **Consider Index Selectivity**: High cardinality (unique values) columns benefit more from indexing. Low cardinality columns may not provide significant performance gains.

- **Monitor Index Usage**: Regularly review query performance and index usage to ensure indexes are benefiting query performance. Remove unused or redundant indexes.

- **Use Covering Indexes**: When practical, create indexes that include all columns retrieved by a query to eliminate the need to access the table data itself.

### Example: Improving Query Performance with Indexes

Suppose you need to optimize the following query frequently executed on a `posts` table:

<pre><code>

SELECT * FROM posts WHERE user_id = 1 AND inserted_at > '2023-01-01';

</code></pre>

Creating a composite index on `user_id` and `inserted_at` can significantly improve this query's performance:

<pre><code>

defmodule MyApp.Repo.Migrations.AddCompositeIndexToPosts do

use Ecto.Migration

def change do

create index(:posts, [:user_id, :inserted_at])

end

end

</code></pre>

### Conclusion

Effective indexing requires a thoughtful approach tailored to your application's specific needs and query patterns. Remember, the goal is to enhance read performance without excessively compromising write efficiency. By carefully planning and regularly reviewing your indexing strategies, you can maintain an optimal balance, ensuring your Phoenix application remains performant and scalable.

## Optimizing Query Performance

Efficient querying is a cornerstone of high-performance applications built with Phoenix and Elixir. By optimizing SQL queries through Ecto, you can significantly affect your app's speed and scalability. Below are essential tips and techniques to ensure your queries are as efficient as possible.

### Understand Your Query Plans

Before you can optimize a query, you need to understand how it operates:

1. **Inspect Query Plans:** Utilize the `EXPLAIN` statement to understand your queries better. This helps you see how the database executes your queries, identifying potential bottlenecks.

```elixir

Repo.query("EXPLAIN ANALYZE SELECT * FROM your_table WHERE column = value")

```

2. **Analyze the Execution Time:** Pay attention to the execution time and the cost. If an operation is slow, it usually means there's room for optimization through indexing or query restructuring.

### Write Efficient Joins

Joins can be both a blessing and a curse; effective use of them is critical for performance:

1. **Use the Correct Join Type:** Use `inner_join` for cases where all rows must have matching rows in the joined table. Use `left_join` sparingly, as it can be more expensive.

```elixir

from(p in Post,

join: c in Comment,

on: p.id == c.post_id,

where: c.approved == true

)

```

2. **Avoid Unnecessary Columns:** Be selective about the columns you include in your query. Fetching fewer columns reduces the amount of data transferred and processed.

```elixir

from(p in Post,

select: %{id: p.id, title: p.title}

)

```

### Minimize Database Calls

The fewer the database calls, the better your application will perform:

1. **Batch Inserts and Updates:** Combine multiple inserts or updates into a single query when possible to reduce the overhead of multiple database calls.

```elixir

Repo.insert_all(Post,

[%{title: "Post 1", body: "Content 1"},

%{title: "Post 2", body: "Content 2"}]

)

```

2. **Use Transactions Wisely:** Group multiple operations that make sense under a single transaction. This not only ensures data integrity but can also improve performance.

```elixir

Repo.transaction(fn ->

Repo.insert!(%Post{title: "Title 1"})

Repo.insert!(%Post{title: "Title 2"})

end)

```

### Efficient Query Patterns

Certain patterns in query writing can yield better performance:

1. **Filter Early:** Apply filters early in the query to minimize the dataset as soon as possible. This reduces the amount of data processed in subsequent operations.

```elixir

from(p in Post,

where: p.published == true,

select: %{id: p.id, title: p.title}

)

```

2. **Leverage Preloads:** Use `preload` for associated data to avoid N+1 query problems and to fetch related records in a single query.

```elixir

Repo.all(

from p in Post,

preload: [:comments]

)

```

### Optimize Aggregations

Aggregation functions can heavily impact query performance if not written efficiently:

1. **Index Aggregated Columns:** When performing frequent aggregations, consider indexing the columns involved. This can significantly speed up operations like `SUM`, `COUNT`, and `AVERAGE`.

```elixir

from(p in Post,

select: count(p.id)

)

```

2. **Use Efficient Grouping:** When using `group_by`, ensure you group only by necessary columns and that those columns are indexed if possible.

```elixir

from(c in Comment,

group_by: c.post_id,

select: {c.post_id, count(c.id)}

)

```

By applying these techniques, you can make your Phoenix application's database interactions more efficient, resulting in faster response times and the ability to handle larger loads. Always monitor and profile your queries as part of your regular development cycle to catch and address performance issues early.

## Avoiding N+1 Query Problem

The N+1 query problem is a common issue in database querying, where the initial query (the “1”) is followed by N additional queries to fetch related data. This can lead to significant performance degradation, especially in applications with large datasets or high concurrency. In Phoenix, utilizing Ecto associations and preloads effectively can help mitigate the N+1 query problem by batching data retrieval into fewer, more efficient queries.

### Understanding the N+1 Query Problem

The N+1 query problem typically occurs when we fetch a collection of records and then individually fetch associated records for each item in the collection. Imagine we have a `Post` model and each post has many `Comments`. Fetching all posts and their comments with improper data retrieval mechanisms can result in the N+1 query problem.

Example scenario:

1. Fetch all posts with a single query.

2. For each post, fetch its comments with a separate query.

Consider this code snippet:

<pre><code>

posts = Repo.all(Post)

for post <- posts do

Repo.all(from c in Comment, where: c.post_id == ^post.id)

end

</code></pre>

Here, if we have 10 posts, the above code will result in 1 query to fetch all posts and 10 additional queries (one for each post) to fetch comments, making it 11 queries in total. This is the N+1 query problem.

### Solving N+1 with Ecto Preloads

Ecto provides a powerful way to preload associations, allowing us to fetch related data efficiently in a single query. Using the `preload` function, we can instruct Ecto to retrieve associated data together with the primary data.

#### Example of Preloading Associations

Using the same `Post` and `Comment` example, we can utilize the `preload` function to fetch all posts and their comments in two queries, dramatically reducing the number of database queries.

<pre><code>

posts = Repo.all(from p in Post, preload: [:comments])

</code></pre>

With this approach, Ecto will perform just two queries:

1. A query to fetch all posts.

2. A query to fetch all comments for those posts, which Ecto will automatically associate with the corresponding posts.

#### Preloading Nested Associations

Ecto also supports nested associations, allowing you to preload multiple levels of associations efficiently. Suppose each `Comment` has an associated `User` who wrote the comment. You can preload these nested associations as follows:

<pre><code>

posts = Repo.all(

from p in Post,

preload: [comments: :user]

)

</code></pre>

This query ensures that the posts are loaded with their comments, and each comment is loaded with its associated user in a minimal number of queries.

### Using Ecto's `join` and `select_merge`

While preloading is a straightforward way to tackle the N+1 query problem, sometimes leveraging `join` and `select_merge` in Ecto can offer more control over the queries and data being fetched.

Example using `join`:

<pre><code>

query = from p in Post,

join: c in Comment, on: c.post_id == p.id,

preload: [comments: c],

select_merge: %{comments_count: count(c.id)}

posts = Repo.all(query)

</code></pre>

In this example, we join the `Comment` table with the `Post` table, preload the comments and also count the number of comments per post, all within a single query.

### Summary

Avoiding the N+1 query problem is crucial for maintaining optimal performance and scalability in your Phoenix applications. By leveraging Ecto's `preload`, `join`, and `select_merge` functionalities, you can reduce the number of database queries and batch data retrieval efficiently. Always evaluate the data access patterns in your application and use profiling tools to identify and address potential N+1 query issues.

In the next sections, we'll explore more strategies for optimizing database query performance, including caching techniques, connection pool management, and using LoadForge for effective load testing.

## Using Caching Wisely

One of the most effective ways to reduce database load and improve response times in Phoenix applications is through intelligent caching. Caching can dramatically accelerate your application by storing expensive queries and frequent access data in memory, thus reducing the need to hit the database repeatedly. In this section, we'll explore strategies for implementing caching in Phoenix, along with examples of cache stores and cache invalidation techniques.

### Why Caching?

Caching offers numerous benefits including:

1. **Reduced Latency**: By retrieving data from a faster cache store instead of the database.

2. **Lower Database Load**: By decreasing the number of direct database queries.

3. **Improved Scalability**: By allowing the application to handle more requests efficiently.

### Selecting a Cache Store

Phoenix supports a variety of caching solutions. Two popular options are:

1. **ETS (Erlang Term Storage)**: Built into the Erlang VM, offering in-memory storage and fast access.

2. **Redis**: An in-memory data structure store used as a database, cache, and message broker, known for its high performance.

#### Example: Setting Up ETS

To use ETS for caching in Phoenix, we can create an ETS table and use it to store and retrieve query results.

```elixir

defmodule MyApp.Cache do

@table :my_app_cache

# Create an ETS table

def init_cache do

:ets.new(@table, [:set, :public, :named_table])

end

# Store data in the cache

def put(key, value) do

:ets.insert(@table, {key, value})

end

# Retrieve data from the cache

def get(key) do

case :ets.lookup(@table, key) do

[{^key, value}] -> {:ok, value}

[] -> :error

end

end

end

Implementing Caching Logic

Integrate caching logic within your context or controller functions. Here’s an example of how you could cache expensive database queries:

defmodule MyApp.UserContext do

alias MyApp.Repo

alias MyApp.Cache

alias MyApp.User

# Ensure the cache is initialized

Cache.init_cache()

def get_user(id) do

case Cache.get({:user, id}) do

{:ok, user} -> user

:error ->

# Query the database only if the cache miss occurs

user = Repo.get(User, id)

Cache.put({:user, id}, user)

user

end

end

end

Cache Invalidation

Effective cache invalidation strategies are crucial for ensuring data consistency. Here are common methods:

- Time-Based Expiration (TTL): Automatically expire cache entries after a specific duration.

- Event-Based Invalidation: Invalidate cache entries based on certain events, like data modifications.

Example: TTL with ETS

ETS does not support TTL natively, but you can implement it by managing timestamps.

defmodule MyApp.Cache do

@table :my_app_cache

@ttl 300_000 # Cache TTL in milliseconds (5 minutes)

def init_cache do

:ets.new(@table, [:set, :public, :named_table])

end

def put(key, value) do

:ets.insert(@table, {key, value, :erlang.system_time(:milli_seconds)})

end

def get(key) do

case :ets.lookup(@table, key) do

[{^key, value, timestamp}] when :erlang.system_time(:milli_seconds) - timestamp < @ttl ->

{:ok, value}

_ ->

:ets.delete(@table, key)

:error

end

end

end

Conclusion

Properly implemented caching can significantly enhance the performance of your Phoenix application by reducing database load and improving response times. Always remember to tailor your caching strategy to your specific use case, considering both the type of data and how often it changes. Regularly review and refine your caching logic to maintain a balance between cache hit ratio and data consistency.

## Connection Pool Management

Effective management of the database connection pool is crucial for maintaining optimal resource utilization and achieving high performance in a Phoenix application. The connection pool acts as a cache for database connections, allowing your Phoenix application to reuse connections rather than creating new ones for each request. This section provides guidance on configuring and managing the database connection pool in Phoenix to ensure your application runs efficiently under different loads.

### Understanding Connection Pools

A connection pool maintains a pool of open database connections to be reused across requests. This reduces the overhead associated with establishing new connections and helps manage the database's capacity to handle concurrent requests. In Phoenix, connection pooling is managed by `DBConnection.Poolboy` by default, providing a robust solution for most use cases.

### Configuring the Connection Pool

To configure the connection pool in your Phoenix application, you will update the `config/config.exs` file with specific parameters that determine the behavior of the pool. Key configuration options include:

- `:pool_size`: The maximum number of database connections maintained in the pool.

- `:timeout`: The duration (in milliseconds) to wait for an available connection before timing out.

- `:queue_target` and `:queue_interval`: Parameters influencing how connections queue up and how long they're held.

Here's an example configuration:

<pre><code>

config :my_app, MyApp.Repo,

username: "postgres",

password: "postgres",

database: "my_app_db",

hostname: "localhost",

pool_size: 10, # Maximum number of connections in the pool

timeout: 5000, # Time to wait for a connection before timeout (5 seconds)

queue_target: 50, # Number of microseconds to accept queuing before considering connections saturated

queue_interval: 1000 # Number of microseconds to wait for a connection in the queue

</code></pre>

### Setting the Optimal Pool Size

The optimal `:pool_size` depends on several factors:

1. **Database Server Resources**: Allocate connections based on the CPU and memory resources of your database server.

2. **Application Load**: Higher loads generally require more connections to prevent queuing and timeouts.

3. **Latency Sensitivity**: Applications requiring low latency will benefit from a higher pool size to minimize wait times for acquiring connections.

A good starting point is usually 10-20 connections, but you should perform load testing to identify the optimal number for your specific application.

### Monitoring Pool Performance

Monitoring the performance of your connection pool is essential for identifying bottlenecks and optimizing settings. Phoenix provides several tools and libraries for this purpose:

- **Telemetry**: Instrumentation library for collecting real-time performance metrics.

- **AppSignal** or **New Relic**: External monitoring services that provide comprehensive insights into application performance, including database connections.

Here's an example of setting up basic telemetry for Ecto:

<pre><code>

defmodule MyApp.Repo do

use Ecto.Repo,

otp_app: :my_app,

adapter: Ecto.Adapters.Postgres

# Attach telemetry events

@impl true

def start_link(opts) do

:telemetry.attach(

"repo-query-handler",

[:my_app, :repo, :query],

&MyApp.Telemetry.handle_event/4,

nil

)

super(opts)

end

def handle_event(_event_name, measurements, metadata, _config) do

# Log or handle telemetry data here

IO.inspect({measurements, metadata})

end

end

</code></pre>

### Troubleshooting Common Issues

- **Timeouts**: If you encounter frequent timeouts, consider increasing the `:timeout` value or scaling up your `:pool_size`.

- **High Wait Times**: Long wait times for connections indicate an undersized pool. Monitor queue times and adjust `:pool_size` and `:queue_target` accordingly.

- **Resource Exhaustion**: Too many connections can exhaust your database or server resources. Balance the pool size with available resources and consider horizontally scaling your database to handle more connections.

Proper connection pool management ensures that your Phoenix application maintains consistent performance and scalability, particularly under high load conditions. Regular monitoring and adjustment of pool parameters, guided by insights from load testing and real-time performance metrics, will help keep your application running smoothly.

## Database Performance Monitoring

Monitoring your database performance is crucial to maintaining the efficiency and scalability of your Phoenix application. Early detection of potential bottlenecks can help you take corrective actions before they impact your users. This section will cover various tools and techniques to monitor your database performance in real-time, identify bottlenecks, and apply the necessary optimizations.

### Tools for Real-time Monitoring

Several tools can help you monitor the performance of your database in real-time:

1. **PostgreSQL Built-in Tools**:

- **pg_stat_statements**: This extension tracks execution statistics of all SQL queries executed by a server. It can provide comprehensive insights into query performance.

<pre><code>

-- Enable the pg_stat_statements extension

CREATE EXTENSION pg_stat_statements;

-- Query the pg_stat_statements view

SELECT

query,

calls,

total_time,

rows,

100.0 * total_time / sum(total_time) OVER() AS percentage

FROM

pg_stat_statements

ORDER BY

total_time DESC;

</code></pre>

2. **Telemetry with Phoenix**:

- Phoenix provides a telemetry system that can be integrated with tools like Prometheus and Grafana for real-time monitoring.

<pre><code>

# Add dependencies in mix.exs

defp deps do

[

{:telemetry, "~> 1.0"},

{:prometheus_ex, "~> 3.0"},

{:prometheus_phoenix, "~> 1.3"},

{:prometheus_ecto, "~> 1.4"}

]

end

# In your endpoint.ex

plug Prometheus.PhoenixInstrumenter

# In your repo.ex

use Prometheus.EctoInstrumenter

</code></pre>

- Use Grafana dashboards to visualize metrics collected by Prometheus.

3. **Third-party Tools**:

- **New Relic**: An application performance monitoring tool that supports Elixir and provides comprehensive insights into database performance.

- **AppSignal**: Provides performance monitoring and error tracking for Elixir applications, including detailed database query performance analysis.

### Identifying Bottlenecks

To effectively identify and analyze performance bottlenecks, follow these methodologies:

- **Slow Queries**:

- Use tools like `pg_stat_statements` to identify slow queries and understand their impact on performance.

- Implement query logs with detailed timing in Phoenix to track slow operations.

- **Lock Contention**:

- Monitor lock statistics to detect queries that are causing contention. PostgreSQL’s `pg_locks` view can be helpful here.

<pre><code>

SELECT

pid,

locktype,

relation::regclass,

mode,

granted

FROM

pg_locks;

</code></pre>

- **Connection Pooling**:

- Ensure your connection pool size is optimal. Monitor pool usage to avoid overloading or under-utilizing the database connections.

- Use tools like Ecto’s built-in Telemetry events to track connection pool metrics.

### Taking Corrective Actions

Once bottlenecks are identified, you can take several corrective actions:

- **Query Optimization**:

- Refactor slow queries by analyzing their execution plans using `EXPLAIN ANALYZE`.

<pre><code>

EXPLAIN ANALYZE

SELECT * FROM users WHERE email = 'user@example.com';

</code></pre>

- **Indexing Strategies**:

- Add or adjust indexes based on the slow queries identified and analyzed.

- Review and remove unused or redundant indexes to optimize write performance.

- **Caching**:

- Implement caching strategies to reduce the load on your database and improve response times. Utilize tools like Redis or Memcached where appropriate.

By actively monitoring your database performance and identifying bottlenecks, you can ensure your Phoenix application remains responsive, efficient, and scalable.

In the next section, we will explore how to load test your database queries using LoadForge to determine their performance under peak load conditions.

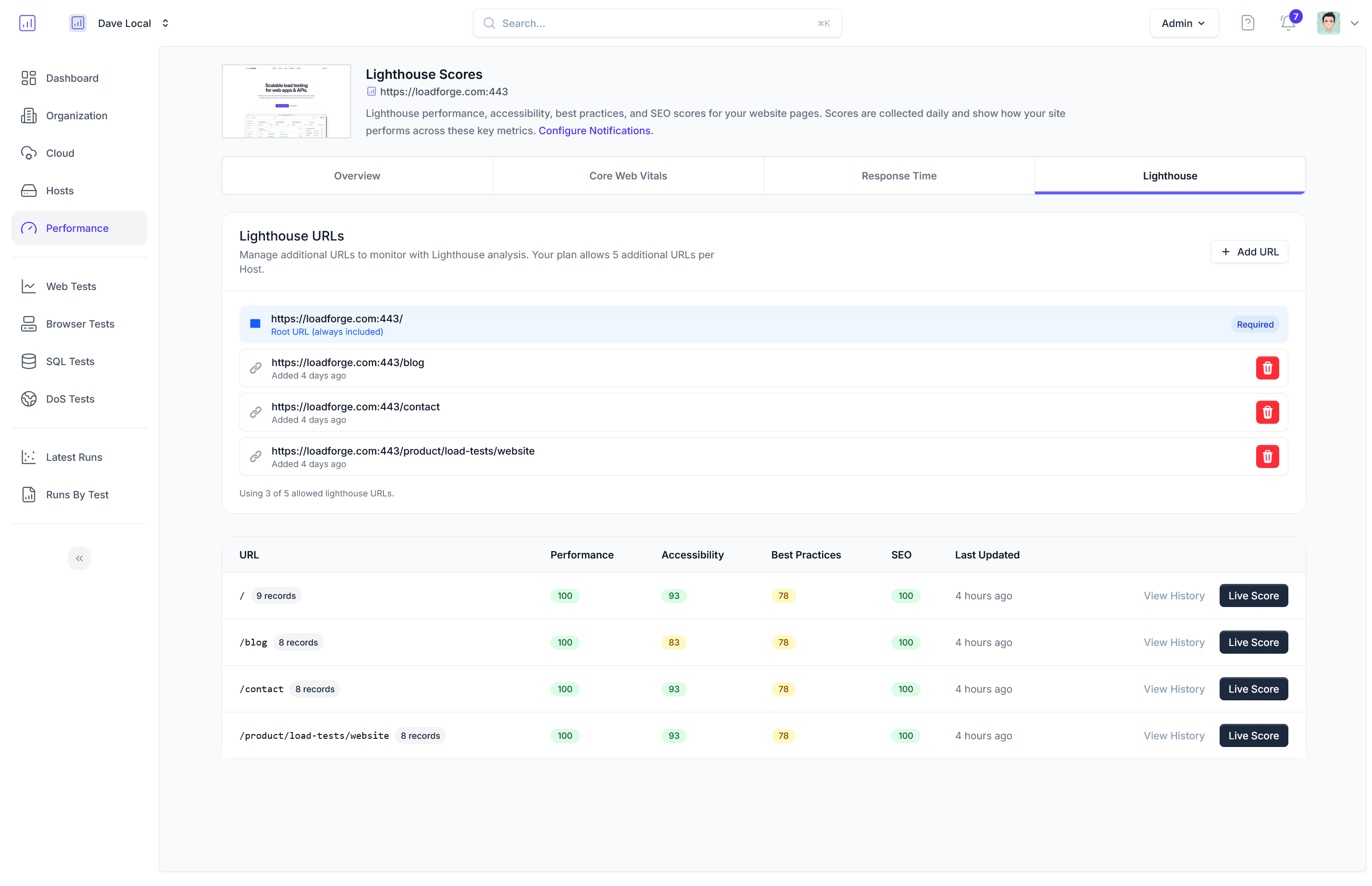

Load Testing Your Database

Load testing is essential for determining how your Phoenix application handles high traffic and database query loads. Ensuring that your database queries perform efficiently under peak conditions will prevent your application from slowing down or becoming unresponsive. In this section, we will discuss best practices for load testing your database queries using LoadForge, helping you identify and rectify performance issues.

Steps to Load Test Your Database Using LoadForge

-

Identify Critical Transactions:

Identify the most critical database transactions and queries in your application. These may include user login, data retrieval, and data modification operations. Focus your load testing on these critical areas.

-

Create Test Scenarios:

Develop realistic test scenarios that simulate typical and peak user behaviors. LoadForge allows you to create a variety of test scenarios that can mimic real-world usage patterns.

-

Setup LoadForge:

Configure LoadForge to target your Phoenix application. This setup should include details such as the target URLs, request payloads, and the expected user behavior.

defmodule MyAppWeb.PageControllerTest do

use MyAppWeb.ConnCase

test "loads homepage", %{conn: conn} do

conn = get(conn, "/")

assert html_response(conn, 200) =~ "Welcome to Phoenix!"

end

end

Best Practices for Load Testing

-

Start Small and Scale:

Begin with small loads and gradually increase the number of concurrent users to understand how your application and database behave under stress.

-

Focus on Database-Intensive Operations:

Load test operations that are known to be database-intensive. For example, complex queries or operations involving large datasets should be prioritized.

-

Measure and Monitor:

Use LoadForge's built-in monitoring tools to measure key performance metrics such as response time, throughput, and error rates. Monitoring these metrics will help identify bottlenecks and performance degradation.

-

Analyze Query Performance:

Pay close attention to how your database queries perform under load. Use the EXPLAIN ANALYZE SQL command to understand the execution plan of your queries and identify slow operations.

query =

from(u in User, where: u.email == ^email)

|> Repo.one!()

IO.inspect(query)

-

Simulate Network Latency:

LoadForge can simulate network latency to give a more accurate picture of how your database queries perform under actual network conditions.

-

Evaluate Connection Pooling:

Analyze how your database connection pool behaves under load. Ensure that the pool is neither too large nor too small, as both can lead to performance issues.

Example Load Test Configuration

Here's an example of how to set up a basic load test using LoadForge:

config :loadforge,

target: "http://yourphoenixapp.com",

scenarios: [

%{

"name": "Home Page Load Test",

"method": "GET",

"url": "/",

"load_pattern": [concurrent_users: 10, duration: "5m"]

},

%{

"name": "User Login Load Test",

"method": "POST",

"url": "/login",

"payload": %{"email": "test@example.com", "password": "password123"},

"load_pattern": [concurrent_users: 5, duration: "10m"]

}

]

Interpreting Load Test Results

-

Response Time: Monitor how long it takes for your queries to execute under different loads. High response times may indicate issues with query efficiency or database performance.

-

Throughput: Check the number of requests processed by the database per second. A sudden drop in throughput could be a sign of a bottleneck.

-

Errors and Timeouts: Identify any errors or timeouts that occur during the load test. These issues can point to potential weak spots in your querying logic or database configuration.

Taking Corrective Actions

Based on the results of your load testing, make necessary optimizations to your queries, indexes, and database configuration. Regularly revisiting load testing with LoadForge will ensure your Phoenix application remains performant and scalable.

In conclusion, efficient load testing using LoadForge can help you identify and address performance issues before they impact your users. By following best practices and continuously optimizing your database queries, you can ensure your Phoenix application performs efficiently under all load conditions.

Conclusion

Efficient database querying in Phoenix is pivotal for achieving high performance and scalability in your web applications. Throughout this guide, we have explored several essential techniques and best practices that can significantly impact your application's capacity to handle substantial traffic and complex data operations.

Key Takeaways

-

Understanding Ecto and Repo: We started with a foundational understanding of Ecto and Repo, the core components for database interactions in Phoenix. Ecto's ability to generate SQL queries and manage data lifecycles with ease directly influences query performance.

-

Choosing the Right Data Types: Selecting appropriate data types for your schema is crucial for database efficiency. Proper data types not only save space but also speed up data retrieval.

-

Indexing Strategies: Indexing is one of the most effective ways to enhance query performance. By creating the right indexes, developers can dramatically reduce query execution times. Remember to index columns that are frequently searched, filtered, or joined.

-

Optimizing Query Performance: Writing efficient SQL queries using Ecto involves employing techniques such as query planning and minimizing the number of database calls. Utilizing joins appropriately and understanding Ecto’s query capabilities can prevent performance bottlenecks.

-

Avoiding N+1 Query Problem: The N+1 query problem can be a significant performance drain. Using Ecto associations and preloads helps batch data retrieval, effectively reducing the number of database hits and optimizing loading times.

-

Using Caching Wisely: Caching can substantially reduce database load and improve response times. Implementing cache strategically—and ensuring cache invalidation mechanisms are robust—can lead to notable performance gains.

-

Connection Pool Management: Proper configuration and management of the database connection pool ensure optimal resource utilization. Adjusting pool sizes based on your application’s needs can prevent connection exhaustion and maintain high performance.

-

Database Performance Monitoring: Continuous monitoring allows for real-time insights into database performance. Using the right tools to identify and address bottlenecks promptly is crucial for maintaining a healthy database system.

-

Load Testing Your Database: Before deploying to production, performing load tests with LoadForge can reveal potential issues under peak load conditions. This proactive approach helps in tuning the performance and ensuring robustness in real-world scenarios.

Continuous Performance Optimization

As application demands evolve, it's imperative to engage in ongoing performance optimization. Regularly revisiting and refining your database queries, indexes, and configuration settings ensures sustained efficiency and scalability. Here are some final actionable steps:

- Continuously monitor query performance and adapt your strategies based on collected data.

- Stay abreast of updates and new features in Phoenix, Ecto, and PostgreSQL (or your chosen database system) that could offer performance improvements.

- Engage in routine load testing with LoadForge to anticipate and mitigate performance issues before they impact your users.

By adhering to these guidelines and maintaining a proactive approach, you will be well-positioned to leverage the full potential of Phoenix, delivering fast, reliable, and scalable applications.