Explorer reports addition

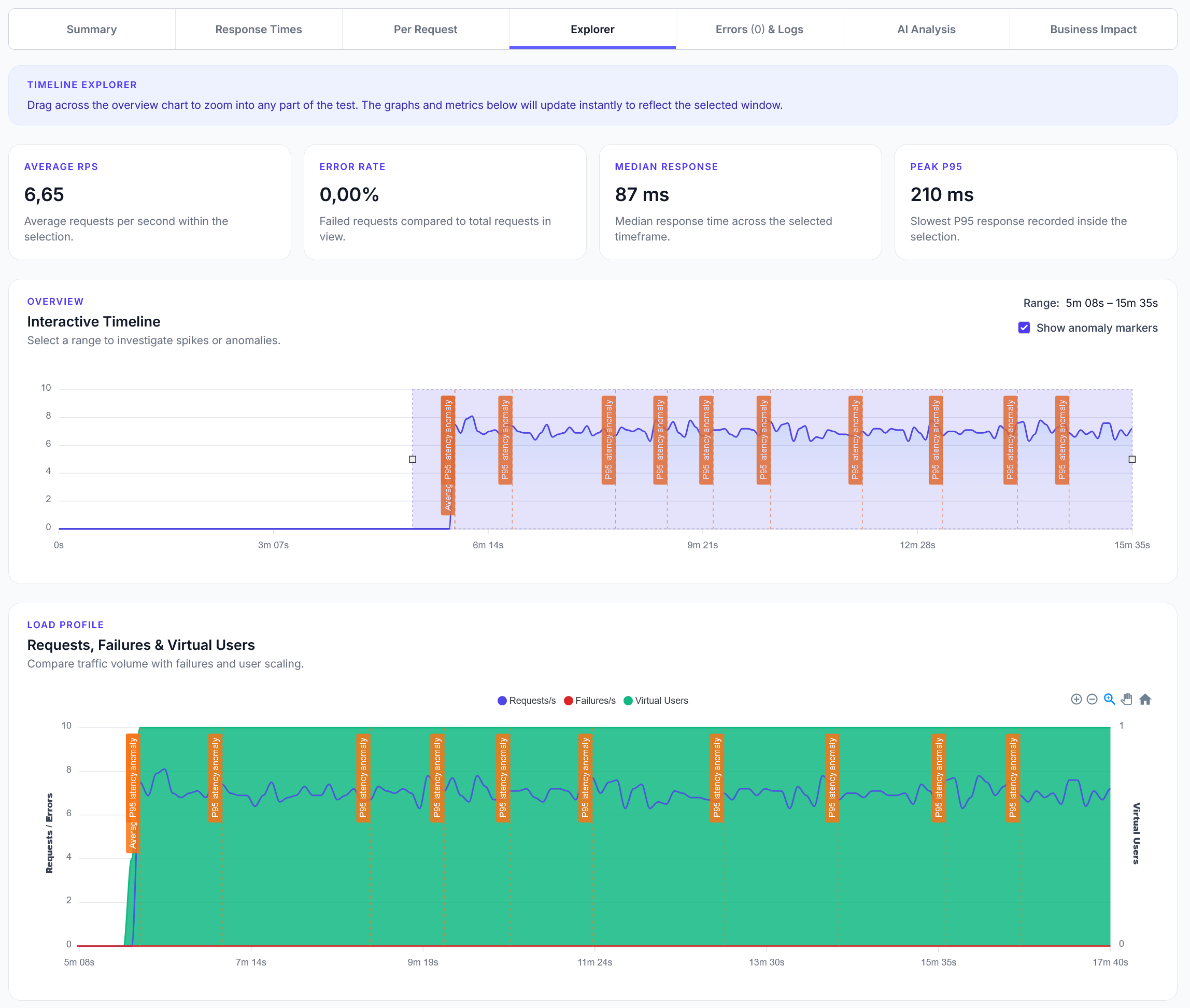

We have added a new Explorer feature to reports, with a timeline scrubber and easy anomaly detection.

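Test your application's performance across different geographic regions to ensure a consistent user experience worldwide. This locustfile simulates users from several global regions: each simulated user picks a region profile, sends region-specific headers (language, currency, timezone), and checks API response times against that region's latency baseline.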

```python
from locust import HttpUser, task, between
import random
import time
from datetime import datetime
from zoneinfo import ZoneInfo  # Python 3.9+; needs the OS tz database or the tzdata package


class MultiRegionUser(HttpUser):
    """Simulate users from different geographic regions"""

    wait_time = between(1, 3)

    def on_start(self):
        """Initialize region-specific configuration"""
        # Simulate different regional characteristics
        self.region_profiles = {
            'us_east': {
                'timezone': 'America/New_York',
                'language': 'en-US',
                'currency': 'USD',
                'expected_latency': 50,  # ms baseline
                'peak_hours': [9, 10, 11, 14, 15, 16]  # hours in the region's local time
            },
            'us_west': {
                'timezone': 'America/Los_Angeles',
                'language': 'en-US',
                'currency': 'USD',
                'expected_latency': 80,
                'peak_hours': [9, 10, 11, 14, 15, 16]
            },
            'europe': {
                'timezone': 'Europe/London',
                'language': 'en-GB',
                'currency': 'EUR',
                'expected_latency': 120,
                'peak_hours': [8, 9, 10, 13, 14, 15]
            },
            'asia_pacific': {
                'timezone': 'Asia/Singapore',
                'language': 'en-SG',
                'currency': 'SGD',
                'expected_latency': 200,
                'peak_hours': [9, 10, 11, 14, 15, 16]
            }
        }

        # Select a random region profile for this simulated user
        self.current_region = random.choice(list(self.region_profiles))
        self.profile = self.region_profiles[self.current_region]

        # Send region-specific headers with every request from this user
        self.client.headers.update({
            'Accept-Language': self.profile['language'],
            'X-User-Region': self.current_region,
            'X-Currency': self.profile['currency'],
            'X-Timezone': self.profile['timezone']
        })

        # Latency samples (ms) for the on_stop summary
        self.latency_measurements = []
        print(f"User initialized for region: {self.current_region}")

    @task(3)
    def test_api_latency(self):
        """Test API response times and validate against regional expectations"""
        start_time = time.perf_counter()
        with self.client.get(
            "/api/v1/data",
            name=f"API Latency - {self.current_region}",
            catch_response=True
        ) as response:
            latency = (time.perf_counter() - start_time) * 1000  # Convert to ms
            self.latency_measurements.append(latency)

            if response.status_code == 200:
                # Validate latency against regional expectations
                expected_max = self.profile['expected_latency'] * 2  # Allow 2x baseline
                if latency <= expected_max:
                    response.success()
                    print(f"Good latency from {self.current_region}: {latency:.1f}ms")
                else:
                    response.failure(f"High latency from {self.current_region}: {latency:.1f}ms (expected <= {expected_max}ms)")
            else:
                response.failure(f"API request failed: {response.status_code}")

    @task(2)
    def test_cdn_performance(self):
        """Test CDN content delivery performance"""
        # Test different types of content
        content_types = [
            {'path': '/static/images/hero.jpg', 'type': 'image'},
            {'path': '/static/css/main.css', 'type': 'css'},
            {'path': '/static/js/app.js', 'type': 'javascript'},
            {'path': '/api/v1/config.json', 'type': 'json'}
        ]
        content = random.choice(content_types)

        start_time = time.perf_counter()
        with self.client.get(
            content['path'],
            name=f"CDN {content['type']} - {self.current_region}",
            catch_response=True
        ) as response:
            download_time = (time.perf_counter() - start_time) * 1000

            if response.status_code == 200:
                # Any of these headers suggests a CDN is in the request path
                cdn_headers = ['X-Cache', 'CF-Cache-Status', 'X-Served-By', 'X-CDN']
                via_cdn = any(header in response.headers for header in cdn_headers)
                # Presence alone is not a hit: cache-status values such as "HIT" or "MISS" decide
                cache_status = ' '.join(
                    response.headers.get(h, '') for h in ('X-Cache', 'CF-Cache-Status')
                ).upper()

                if 'HIT' in cache_status:
                    print(f"CDN hit for {content['type']} from {self.current_region}: {download_time:.1f}ms")
                elif via_cdn:
                    print(f"CDN miss for {content['type']} from {self.current_region}: {download_time:.1f}ms")
                else:
                    print(f"No CDN headers for {content['type']} from {self.current_region}: {download_time:.1f}ms")
                response.success()  # Still successful; the cache status is only logged
            else:
                response.failure(f"CDN content failed: {response.status_code}")

    @task(2)
    def test_regional_content(self):
        """Test region-specific content delivery"""
        regional_endpoints = [
            f"/api/v1/content/regional?region={self.current_region}",
            f"/api/v1/pricing?currency={self.profile['currency']}",
            f"/api/v1/localization?lang={self.profile['language']}"
        ]
        endpoint = random.choice(regional_endpoints)

        with self.client.get(
            endpoint,
            name=f"Regional Content - {self.current_region}",
            catch_response=True
        ) as response:
            if response.status_code == 200:
                try:
                    data = response.json()
                except ValueError:  # requests' JSON decode errors subclass ValueError
                    data = None  # Content might not be JSON
                # Validate region-specific fields when the body is a JSON object
                if isinstance(data, dict):
                    if data.get('currency') == self.profile['currency']:
                        print(f"Correct currency for {self.current_region}: {data['currency']}")
                    if data.get('language') == self.profile['language']:
                        print(f"Correct language for {self.current_region}: {data['language']}")
                response.success()
            else:
                response.failure(f"Regional content failed: {response.status_code}")

    @task(1)
    def test_regional_failover(self):
        """Test regional failover mechanisms"""
        # Try the region-specific health endpoint first, then the shared backup
        endpoints = [
            f"/api/v1/health/region/{self.current_region}",
            "/api/v1/status/regional"
        ]

        for endpoint in endpoints:
            with self.client.get(
                endpoint,
                name=f"Regional Failover - {self.current_region}",
                catch_response=True
            ) as response:
                if response.status_code != 200:
                    response.failure(f"Regional endpoint failed: {response.status_code}")
                    continue  # Try the next endpoint

                try:
                    health_data = response.json()
                except ValueError:
                    response.success()  # Health check might return plain text
                    break

                if isinstance(health_data, dict) and health_data.get('status') == 'healthy':
                    print(f"Regional health check passed for {self.current_region}")
                    response.success()
                    break  # This endpoint is healthy; no need for the backup

                # Record degraded health as a failure, then try the backup endpoint
                response.failure(f"Regional health degraded for {self.current_region}")

    def on_stop(self):
        """Report latency statistics for this region"""
        if self.latency_measurements:
            avg_latency = sum(self.latency_measurements) / len(self.latency_measurements)
            min_latency = min(self.latency_measurements)
            max_latency = max(self.latency_measurements)

            print(f"Region {self.current_region} latency stats:")
            print(f"  Average: {avg_latency:.1f}ms")
            print(f"  Min: {min_latency:.1f}ms")
            print(f"  Max: {max_latency:.1f}ms")
            print(f"  Expected baseline: {self.profile['expected_latency']}ms")


class RegionalLoadPatternUser(MultiRegionUser):
    """Simulate realistic regional load patterns based on business hours"""

    # Pause range in seconds between tasks; on_start adjusts it per region
    wait_range = (2, 5)

    def wait_time(self):
        # Locust calls self.wait_time() with no arguments. A between(...) result
        # assigned to self.wait_time is not bound to the user and raises
        # TypeError, so the dynamic pacing is implemented as a method instead.
        return random.uniform(*self.wait_range)

    def _is_peak_hour(self):
        """True if the current hour in the region's own timezone is a peak hour"""
        local_now = datetime.now(ZoneInfo(self.profile['timezone']))
        return local_now.hour in self.profile['peak_hours']

    def on_start(self):
        super().on_start()
        # Adjust request frequency based on the region's local business hours
        if self._is_peak_hour():
            self.wait_range = (0.5, 1.5)  # Higher frequency during peak
            print(f"Peak hours for {self.current_region} - increased load")
        else:
            self.wait_range = (2, 5)  # Lower frequency during off-peak
            print(f"Off-peak hours for {self.current_region} - reduced load")

    @task(4)
    def simulate_business_hours_activity(self):
        """Simulate typical business hours activity patterns"""
        if self._is_peak_hour():
            # Peak hours - more intensive operations
            operations = [
                '/api/v1/dashboard',
                '/api/v1/reports/generate',
                '/api/v1/transactions',
                '/api/v1/analytics'
            ]
        else:
            # Off-peak - lighter operations
            operations = [
                '/api/v1/status',
                '/api/v1/notifications',
                '/api/v1/settings'
            ]

        operation = random.choice(operations)
        with self.client.get(
            operation,
            name=f"Business Hours Activity - {self.current_region}",
            catch_response=True
        ) as response:
            if response.status_code == 200:
                response.success()
            else:
                response.failure(f"Business hours operation failed: {response.status_code}")
```
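`RegionalLoadPatternUser` switches between peak and off-peak pacing based on the hour of day, and peak hours only make sense in each region's own local time. Pulling that check into a small helper that takes an explicit, timezone-aware timestamp makes it easy to test without waiting for the clock. A minimal sketch; `is_peak_hour` is a hypothetical helper, not part of Locust:

```python
from datetime import datetime, timezone, tzinfo
from typing import Iterable, Optional


def is_peak_hour(peak_hours: Iterable[int], region_tz: tzinfo,
                 now: Optional[datetime] = None) -> bool:
    """Return True if the hour in region_tz at `now` is one of peak_hours.

    `now` must be timezone-aware and defaults to the current UTC time, so the
    answer does not depend on the load generator's own clock settings.
    """
    if now is None:
        now = datetime.now(timezone.utc)
    if now.tzinfo is None:
        raise ValueError("now must be timezone-aware")
    # Convert to the region's local time before comparing hours
    return now.astimezone(region_tz).hour in set(peak_hours)
```

Inside the user class you might call `is_peak_hour(self.profile['peak_hours'], ZoneInfo(self.profile['timezone']))`, with `ZoneInfo` from the standard `zoneinfo` module (Python 3.9+, which needs the operating system's timezone database or the `tzdata` package).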

Update these settings for your multi-region testing. They mirror the values in `region_profiles` inside `on_start`, so keep the two in sync:

```python
# Regional Configuration
REGIONS = {
    'us_east': {'timezone': 'America/New_York', 'language': 'en-US', 'currency': 'USD'},
    'us_west': {'timezone': 'America/Los_Angeles', 'language': 'en-US', 'currency': 'USD'},
    'europe': {'timezone': 'Europe/London', 'language': 'en-GB', 'currency': 'EUR'},
    'asia_pacific': {'timezone': 'Asia/Singapore', 'language': 'en-SG', 'currency': 'SGD'}
}

# Latency Expectations (milliseconds)
EXPECTED_LATENCY = {
    'us_east': 50,
    'us_west': 80,
    'europe': 120,
    'asia_pacific': 200
}
```
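As written, the locustfile never reads `REGIONS` or `EXPECTED_LATENCY`; its profiles live inline in `on_start`. One way to make these settings the single source of truth is to merge them into the profile shape `on_start` uses and fail fast if the two dictionaries disagree on region names. A minimal sketch; `build_region_profiles` and the default peak hours are assumptions, not part of the original script:

```python
# Assumed default local peak hours, taken from most profiles in the locustfile
DEFAULT_PEAK_HOURS = [9, 10, 11, 14, 15, 16]


def build_region_profiles(regions, expected_latency, peak_hours=None):
    """Merge per-region settings into the profile dicts used by on_start."""
    mismatched = set(regions) ^ set(expected_latency)
    if mismatched:
        raise ValueError(f"settings disagree on regions: {sorted(mismatched)}")

    peak_hours = peak_hours or {}
    profiles = {}
    for name, settings in regions.items():
        profiles[name] = {
            **settings,  # timezone, language, currency
            'expected_latency': expected_latency[name],
            # Copy so profiles never share (and accidentally mutate) one list
            'peak_hours': list(peak_hours.get(name, DEFAULT_PEAK_HOURS)),
        }
    return profiles
```

In `on_start` you could then write `self.region_profiles = build_region_profiles(REGIONS, EXPECTED_LATENCY, {'europe': [8, 9, 10, 13, 14, 15]})`, which keeps the European peak hours from the original profile.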

LoadForge allows you to select specific DigitalOcean regions for your load generators.
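Choosing a generator region does not by itself tell the script where it runs: `on_start` still picks a profile at random, so a user whose profile says `asia_pacific` may be sending requests from a US generator. If you run one test per generator region, a sketch like the following can pin the profile instead. How the region name reaches the script is an assumption; the usage comment reads a hypothetical `SIMULATED_REGION` environment variable, which is not a documented LoadForge setting.

```python
import random


def choose_region(profiles, requested=None, rng=random):
    """Return the region key a simulated user should use.

    If `requested` is given it must name a known profile, which keeps the
    simulated region aligned with the generator's real location. Otherwise a
    region is picked at random, as the original on_start does.
    """
    if requested:
        if requested not in profiles:
            raise ValueError(
                f"unknown region {requested!r}; expected one of {sorted(profiles)}"
            )
        return requested
    return rng.choice(list(profiles))


# Usage inside on_start (SIMULATED_REGION is a hypothetical variable name):
# self.current_region = choose_region(self.region_profiles,
#                                     os.environ.get("SIMULATED_REGION"))
```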

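Combined with LoadForge's DigitalOcean region selection, this locustfile lets you simulate global user patterns and check that performance stays consistent across geographies. Keep in mind that each user's region profile only changes request headers and latency expectations; the network latency you measure depends on where the load generator actually runs.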
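When checking that consistency per region, the average, minimum and maximum printed in `on_stop` can hide tail latency. A percentile summary is often more telling. This sketch uses only the standard library; `summarize_latency` is a hypothetical helper, and the 2x tolerance mirrors the `expected_max` rule in `test_api_latency`:

```python
import statistics


def summarize_latency(samples_ms, baseline_ms, tolerance=2.0):
    """Summarize latency samples (ms) and compare p95 with a regional budget.

    The budget is baseline_ms * tolerance. At least two samples are required
    to compute percentiles.
    """
    if len(samples_ms) < 2:
        raise ValueError("need at least two samples to compute percentiles")
    # n=100 yields the 99 cut points for percentiles 1..99 (index i -> p(i+1))
    cuts = statistics.quantiles(samples_ms, n=100, method="inclusive")
    budget = baseline_ms * tolerance
    p95 = cuts[94]
    return {
        "avg": statistics.fmean(samples_ms),
        "p50": cuts[49],
        "p95": p95,
        "max": max(samples_ms),
        "budget": budget,
        "within_budget": p95 <= budget,
    }
```

For example, `on_stop` could print `summarize_latency(self.latency_measurements, self.profile['expected_latency'])` once at least two samples exist.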