Introduction

In the rapidly evolving landscape of containerized applications, Docker has emerged as a pivotal technology that streamlines development and deployment processes. By encapsulating applications and their dependencies into lightweight, portable containers, Docker revolutionizes the efficiency and scalability of modern software solutions. However, an often underappreciated aspect of Docker container performance is the network configuration. Proper network configuration is a cornerstone in ensuring high-performance, reliable, and scalable containerized applications.

Achieving optimal performance in Docker containers involves more than just resource allocation for CPU and memory. The network configuration plays a critical role in how containers communicate with each other, external services, and users. Misconfigured or suboptimal network settings can lead to significant performance bottlenecks, latency issues, and even application failures. Therefore, understanding and implementing advanced network configurations are essential for unlocking the full potential of your Dockerized applications.

In this comprehensive guide, we delve into the importance of various network configuration techniques and how they can vastly improve the performance of Docker containers. From foundational knowledge about Docker's networking model to advanced optimization methods, this guide provides you with actionable insights and practical tips to elevate your container network performance.

To lay the groundwork for advanced techniques, we'll start by exploring Docker's networking model and the various network drivers available. Whether you're managing a small microservice or a complex multi-host setup, selecting the right network driver can significantly impact performance. Bridging, host networking, overlay networks, and macvlan each have unique characteristics, benefits, and use cases.

In the subsequent sections, we will cover a range of practical network optimization strategies. Understanding how to adjust network settings like Maximum Transmission Unit (MTU), manage DNS settings, and leverage network namespaces can deliver noticeable performance improvements. Additionally, creating user-defined networks in Docker allows for better control over IP addressing and isolation, which in turn enhances performance and security.

Furthermore, we will highlight how Docker Compose can be utilized for optimizing network configurations across multi-container applications. More advanced techniques, such as configuring multi-host networking with Docker Swarm, service discovery, and load balancing, will also be discussed to provide a holistic view of optimizing network performance.

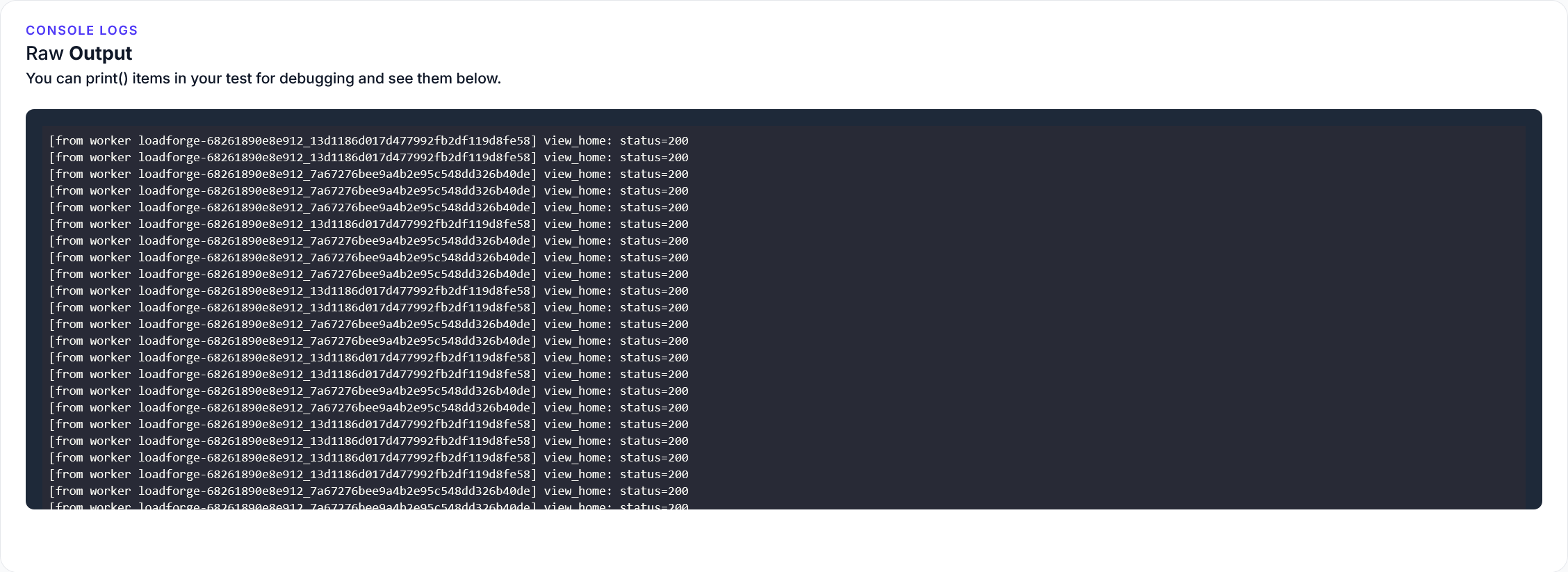

We will conclude by discussing monitoring and troubleshooting strategies to ensure continuous network performance in Docker environments. Tools and commands for performance monitoring and diagnostics are crucial for identifying and resolving network-related issues. Finally, we will explore how to leverage LoadForge for load testing Docker containers, providing you with a robust methodology for stress-testing and performance validation.

By the end of this guide, you will have a comprehensive understanding of the significance of network configuration in Docker and a suite of techniques and best practices to enhance the performance of your containerized applications. Remember, a well-configured network is not just about speed; it’s about reliability, scalability, and efficient resource utilization. Dive in to transform your Docker deployments into high-performance powerhouses.

Feel free to proceed to the next section to delve deeper into the intricacies of Docker's networking model, laying the foundation for advanced network configuration techniques.

Understanding Docker Networking

To optimize the performance of your Docker containers, a solid understanding of Docker's networking model and its various network drivers is essential. This foundational knowledge will help you make informed decisions when applying advanced network configuration techniques. In this section, we'll break down Docker's networking architecture and explore the different network drivers available: bridge, host, overlay, and macvlan, along with their specific use cases.

The Docker Networking Model

Docker provides several networking options to connect containers to each other and to the outside world. Here's an overview of Docker's built-in networking modes:

- Bridge Mode (default)

- Host Mode

- Overlay Mode

- Macvlan Mode

- None Mode

Each network mode serves different purposes, offering varying levels of performance, isolation, and complexity.

Bridge Network

The bridge network is Docker's default networking driver. When you create a new container, it is connected to the default bridge network unless specified otherwise.

Host Network

In host networking mode, a container shares the host’s network stack. This means there is no network isolation between the container and the host, resulting in improved network performance at the cost of isolation.

Overlay Network

Overlay networking enables containers running on different Docker hosts to communicate, forming a virtual network that spans multiple physical hosts.

Macvlan Network

Macvlan networks assign a MAC address to each container, making them appear as physical devices on the network. This mode provides the advantage of bypassing the Docker bridge network, which reduces overhead and can lead to improved network performance.

Comparison of Network Drivers

Here is a quick comparison of the primary network drivers discussed:

| Driver | Performance | Isolation | Complexity | Use Case |
| --- | --- | --- | --- | --- |
| Bridge | Moderate | High | Low | Single-host setups, default configurations |
| Host | High | None | Low | High-performance, single-host applications |
| Overlay | Moderate | High (multi-host) | High | Multi-host deployments, Docker Swarm |
| Macvlan | High | Moderate | Moderate | Direct network integration, high throughput |

Understanding the characteristics and appropriate use cases for each of these network drivers ensures you select the optimal configuration for your Docker containers. This foundational knowledge sets the stage for deeper exploration and implementation of advanced networking techniques, detailed in subsequent sections of this guide.

Choosing the Right Network Driver

Selecting the appropriate network driver for your Docker containers is crucial for achieving optimal performance tailored to your specific use case. Docker offers several network drivers, each designed to address different networking scenarios. In this section, we will compare the bridge, host, overlay, and macvlan drivers in terms of speed, flexibility, and complexity, providing guidance on when to use each driver.

Overview of Docker Network Drivers

Docker supports multiple network drivers, each with distinct characteristics:

- Bridge

- Host

- Overlay

- Macvlan

Bridge Network Driver

The bridge network driver is the default setting when you create a container. It is suitable for container-to-container communications on the same host.

Use Case:

- Small to medium-sized deployments

- Simple network setups within a single host

Performance Considerations:

- Moderate performance

- Suitable for most development and testing environments

Example:

docker network create --driver bridge my-bridge-network

Host Network Driver

The host network driver provides the highest performance by removing network isolation between the Docker container and the Docker host. The container shares the network namespace of the host.

Use Case:

- Applications requiring high network throughput and low latency

- Scenarios requiring direct access to the host network

Performance Considerations:

- Highest performance due to no network isolation

- Limited scalability on multi-host setups

Example:

docker run --network host my-container

Overlay Network Driver

The overlay network driver allows you to connect containers across multiple Docker hosts, forming a cluster of Docker nodes.

Use Case:

- Scalable, multi-host and cloud native applications

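- When using Docker Swarm (Kubernetes relies on its own CNI plugins rather than Docker's overlay driver)

Performance Considerations:

- Somewhat higher latency than bridge or host networking, because traffic between hosts is VXLAN-encapsulated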

- Suitable for distributed microservices architectures

Example:

docker network create --driver overlay my-overlay-network

Macvlan Network Driver

The macvlan network driver assigns a MAC address to each container, making it appear as a physical device on the network. This allows the container to access the network directly.

Use Case:

- High-performance applications requiring direct network access

- Legacy applications expecting direct network interface access

Performance Considerations:

- High performance, low latency

- Complex setup and maintenance

Example:

docker network create -d macvlan \
  --subnet=192.168.1.0/24 \
  --gateway=192.168.1.1 \
  -o parent=eth0 my-macvlan-network

Performance Comparison Table

| Network Driver | Speed | Flexibility | Complexity |
| --- | --- | --- | --- |
| Bridge | Moderate | High | Low |
| Host | High | Low | Low |
| Overlay | Moderate-High | Very High | Medium-High |
| Macvlan | High | Moderate | High |

Selecting the Right Driver

When choosing a network driver, consider the following:

- Deployment Scale: Use bridge or host networks for single-host deployments, and overlay networks for multi-host or cloud-native applications.

- Performance Needs: Host and macvlan drivers offer superior performance with low latency, ideal for high-demand applications.

- Complexity of Setup: Bridge networks are straightforward to set up, while macvlan networks require more configuration and maintenance.

- Flexibility Requirements: Overlay networks provide the flexibility required for distributed applications and microservices deployment.

By understanding the characteristics and use cases of each network driver, you can make a well-informed decision that aligns with your application's performance requirements and architectural needs. This decision is fundamental for achieving high performance and efficient resource utilization in your Docker environment.
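The checklist above can be condensed into a small helper. This is a rough sketch, not an official rule set: the function name suggest_driver and its three yes/no arguments are invented for illustration, and real decisions usually weigh more factors than these.

```shell
# suggest_driver MULTI_HOST LAN_IDENTITY MAX_THROUGHPUT
# Each argument is "yes" or "no". Prints overlay, macvlan, host, or bridge.
# Hypothetical helper that mirrors the checklist in this section.
suggest_driver() {
    multi_host=$1
    lan_identity=$2
    max_throughput=$3

    if [ "$multi_host" = "yes" ]; then
        echo overlay    # containers must talk across Docker hosts
    elif [ "$lan_identity" = "yes" ]; then
        echo macvlan    # container needs its own MAC address on the LAN
    elif [ "$max_throughput" = "yes" ]; then
        echo host       # trade isolation for the lowest overhead
    else
        echo bridge     # simple, isolated single-host default
    fi
}
```

For example, `suggest_driver no no yes` prints `host`, matching the guidance that host networking suits single-host, throughput-sensitive workloads.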

Optimizing Network Settings

Optimizing network settings within Docker containers is essential for ensuring high performance, particularly in environments with high network traffic or complex networking requirements. Below, we provide practical tips for tuning Docker's built-in network settings, including adjusting MTU sizes, managing DNS settings, and effectively leveraging network namespaces.

Adjusting MTU Sizes

The Maximum Transmission Unit (MTU) size determines the maximum packet size for communication over a network. Ensuring your Docker containers have an optimal MTU size can mitigate fragmentation and enhance network performance.

- Identifying the Optimal MTU Size:

To identify the appropriate MTU size for your environment, you can use the ping command with the -M do (don't fragment) and -s (payload size) options:

ping -M do -s 1472 google.com

Adjust the payload size until you find the largest one that gets through without fragmentation. The -s value counts only the ICMP payload, so add 28 bytes (20 for the IPv4 header, 8 for the ICMP header) to get the MTU: a payload of 1472 corresponds to an MTU of 1500.

- Configuring the MTU Size in Docker:

You can set the MTU for Docker's default bridge network in the daemon configuration file (/etc/docker/daemon.json). For example, if the largest working payload was 1422, set the MTU to 1450:

{
  "mtu": 1450
}

After modifying this file, restart the Docker daemon:

sudo systemctl restart docker
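The manual trial-and-error search for the largest working ping size can be automated. The sketch below assumes the Linux iputils version of ping (which accepts -M do, -c and -W) and a target that answers ICMP; the function names payload_to_mtu and probe_payload are hypothetical.

```shell
# payload_to_mtu PAYLOAD
# ping -s sets the ICMP payload only; add the 20-byte IPv4 header and
# the 8-byte ICMP header to get the MTU.
payload_to_mtu() {
    echo $(( $1 + 28 ))
}

# probe_payload HOST [LOW] [HIGH]
# Binary-searches for the largest payload that passes with the
# don't-fragment bit set. LOW is assumed to pass.
probe_payload() {
    host=$1
    low=${2:-500}
    high=${3:-1472}     # largest payload on a standard 1500-byte link
    while [ "$low" -lt "$high" ]; do
        mid=$(( (low + high + 1) / 2 ))
        if ping -M do -c 1 -W 2 -s "$mid" "$host" >/dev/null 2>&1; then
            low=$mid            # mid fits, search upward
        else
            high=$(( mid - 1 )) # mid is too large, search downward
        fi
    done
    echo "$low"
}
```

Running `payload_to_mtu "$(probe_payload 192.168.1.1)"` against a gateway (the address here is only a placeholder) prints a candidate value for the "mtu" key. Hosts that drop ICMP will make every probe fail, so treat the result as a hint rather than a measurement.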

Managing DNS Settings

Efficient DNS management is crucial for ensuring that your Docker containers resolve domain names promptly to avoid network bottlenecks.

- Using a Reliable DNS Server:

By default, Docker uses the DNS server configured on the host. However, you can specify custom DNS servers in the Docker daemon configuration:

{
  "dns": ["8.8.8.8", "8.8.4.4"]
}

- Per-Container DNS Configuration:

If you need specific containers to use different DNS settings, use the --dns option when starting the container:

docker run --dns=8.8.8.8 --dns=8.8.4.4 mycontainer
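To confirm which resolvers a container actually receives, you can read its /etc/resolv.conf. The following is a minimal sketch; the helper name container_resolvers is made up, and it assumes the busybox image can be pulled.

```shell
# container_resolvers [DOCKER_RUN_OPTIONS...]
# Starts a throwaway busybox container with the given options and prints
# the nameservers it was handed, one per line.
container_resolvers() {
    docker run --rm "$@" busybox cat /etc/resolv.conf |
        awk '$1 == "nameserver" { print $2 }'
}
```

For instance, `container_resolvers --dns=8.8.8.8 --dns=8.8.4.4` should list the two servers on the default bridge. On a user-defined network the output is normally 127.0.0.11, Docker's embedded DNS server, which forwards external lookups to the configured resolvers.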

Leveraging Network Namespaces

Network namespaces provide isolation and flexibility for configuring custom network stacks within Docker containers. This can lead to performance enhancements by isolating network resources.

- Creating a Network Namespace:

Create a network namespace on the host using the ip command:

sudo ip netns add mynamespace

- Sharing a Network Namespace Between Containers:

Docker creates a network namespace for every container automatically. The --network container:<name> form does not accept an ip netns name; it makes a new container join the network namespace of an existing container:

docker run --network container:mycontainer myimage

To move an interface into a namespace that was created externally with ip netns, use:

sudo ip link set veth1 netns mynamespace
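To look inside the namespace Docker created for a container, you can enter it from the host with nsenter. This sketch assumes root access and the nsenter tool from util-linux; the helper names container_pid and in_container_netns are hypothetical.

```shell
# container_pid NAME: PID of the container's main process on the host.
container_pid() {
    docker inspect --format '{{.State.Pid}}' "$1"
}

# in_container_netns NAME COMMAND...
# Runs a host binary (ip, ss, tcpdump, ...) inside the container's
# network namespace, so the image itself does not need the tool.
in_container_netns() {
    name=$1
    shift
    sudo nsenter --target "$(container_pid "$name")" --net "$@"
}
```

For example, `in_container_netns mycontainer ip addr show` lists the interfaces the container sees, using the host's copy of ip.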

Practical Example

Here is a practical example that combines these optimizations:

# Set MTU and DNS in daemon.json (this overwrites any existing file)
echo '{
  "mtu": 1400,
  "dns": ["8.8.8.8", "8.8.4.4"]
}' | sudo tee /etc/docker/daemon.json

sudo systemctl restart docker

# Run a container using specific DNS servers
docker run -d --name my_app --dns=8.8.8.8 --dns=8.8.4.4 my_app_image

# Run a second container inside the first container's network namespace
# (--network container: expects a container name, not an ip netns name)
docker run -d --network container:my_app another_app_image

By fine-tuning these settings, you can significantly enhance the network performance of your Docker containers, making them more efficient and responsive under various workloads. Up next, we will delve into the benefits of creating user-defined custom networks in Docker and their associated performance improvements.

Utilizing User-Defined Networks

Creating user-defined custom networks in Docker is a powerful technique that allows you to gain better control over IP addressing, enhance network isolation, and ultimately improve the performance of your Docker containers. Unlike default bridge networks, user-defined networks offer greater configurability and enable advanced networking features that are essential for high-performance applications.

Benefits of User-Defined Networks

- Better Control Over IP Addressing:

  - In user-defined networks, you can assign static IP addresses to your containers. Static allocation simplifies network management and integration with other networking components.

  - With custom subnets and gateways, user-defined networks integrate more easily with existing infrastructure and help avoid IP conflicts.

- Enhanced Isolation and Security:

  - User-defined networks provide better isolation compared to default networks. Containers in different user-defined networks cannot communicate with each other unless explicitly configured to do so.

  - This isolation helps in creating secure multi-tenant environments where different services or applications run in their own network spaces.

- Automatic DNS Resolution:

  - Docker’s embedded DNS server works with user-defined networks, providing automatic name resolution. Containers can resolve each other by name, which removes the need for hard-coded IP addresses.

- Simplified Network Policies:

  - Docker has no separate policy object for this, but network membership acts as a simple policy: only containers attached to the same network can reach each other, which improves security and cuts unnecessary traffic.

Creating User-Defined Networks

Creating a user-defined network in Docker is straightforward. Below is a basic example where we create a user-defined bridge network and connect containers to it.

# Create a user-defined bridge network with an explicit subnet
# (pick a range that is unused on your host)
docker network create --driver bridge --subnet 172.18.0.0/16 my_custom_network

You can then run containers within this network:

# Run a container while attaching it to the user-defined network
docker run -dit --name my_container --network my_custom_network nginx

You can also assign specific IP addresses to containers, provided the network was created with an explicit subnet as above:

# Run a container with a specific IP address
docker run -dit --name my_container2 --network my_custom_network --ip 172.18.0.22 nginx

Enhanced Network Isolation

Here is a practical demonstration of enhanced isolation achieved through user-defined networks:

# Create two separate user-defined networks

docker network create --driver bridge network_one

docker network create --driver bridge network_two

# Run containers in different networks

docker run -dit --name container_one --network network_one nginx

docker run -dit --name container_two --network network_two nginx

# Try to ping from container_one to container_two (this will fail due to isolation)

docker exec -it container_one ping container_two
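The isolation check, and the explicit step that lifts it, can be scripted as below. This is a sketch under two assumptions: the containers run an image that ships ping (busybox and alpine do), and the helper names can_reach and join_network are invented for the example.

```shell
# can_reach FROM TO: succeeds if container FROM can ping TO by name.
can_reach() {
    docker exec "$1" ping -c 1 -W 2 "$2" >/dev/null 2>&1
}

# join_network CONTAINER NETWORK: attach a running container to an
# additional network so it can reach the containers on it.
join_network() {
    docker network connect "$2" "$1"
}
```

With two containers on separate networks, `can_reach container_one container_two` is expected to fail. After `join_network container_one network_two`, the same check should succeed, because container_one now has an interface on both networks.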

Performance Improvements

The performance enhancements through user-defined networks are multi-faceted:

- Reduced Latency: Containers on the same user-defined network talk to each other directly over that network instead of going through ports published on the host.

- Efficient Resource Usage: Isolated custom networks help segregate traffic efficiently, minimizing unnecessary data flows and reducing bottlenecks.

- Built-in DNS: Docker's embedded DNS server resolves container names on user-defined networks, so services can find each other without hard-coded IP addresses.

By leveraging user-defined networks, you can significantly enhance the manageability, security, and performance of your Docker containers, paving the way for a more stable and efficient deployment environment.

Using Docker Compose for Network Optimization

Docker Compose is a powerful tool for defining and running multi-container Docker applications, and it provides significant flexibility when it comes to network configuration. By properly optimizing your Docker Compose files, you can ensure that your application’s containers communicate efficiently and perform robustly. In this section, we will cover detailed instructions on how to configure Docker Compose files to specify and optimize networks. This will help you achieve seamless multi-container application performance.

Defining Custom Networks in Docker Compose

When you create a Docker Compose file (docker-compose.yml), you can define custom networks to gain better control over your container's networking environment. Here’s a basic example of how to define and use custom networks in your Docker Compose file:

version: '3.8'

services:
  app:
    image: myapp:latest
    networks:
      - app-network

  db:
    image: mysql:latest
    networks:
      - db-network

networks:
  app-network:
    driver: bridge
  db-network:
    driver: bridge

In this example:

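- We define two custom networks: app-network and db-network.

- Each service (container), app and db, is attached to its respective custom network. Because the two services share no network, app cannot reach db until both are attached to a common one.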

Network Driver Selection

Docker Compose allows you to specify different network drivers to better suit your use case. The most common drivers for Compose-defined networks are bridge, overlay, and macvlan. Host networking is not declared as a network; it is selected per service with network_mode. Here’s how you can specify both:

services:
  fast-service:
    image: myapp:latest
    network_mode: host

networks:
  isolated-net:
    driver: overlay

- network_mode: host removes network isolation between the container and the host, providing the best performance for certain scenarios.

- The overlay driver enables multi-host communication, crucial for Docker Swarm setups where containers need to communicate across different nodes.

Configuring Network Options

You can also configure network options to optimize performance further. For example, you can adjust the Maximum Transmission Unit (MTU), which can have a significant impact on network performance, especially in environments with varying network conditions. Here’s an example of setting the MTU in your Docker Compose file:

networks:
  custom-net:
    driver: bridge
    driver_opts:
      com.docker.network.driver.mtu: 1500
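After bringing the project up, it is worth checking that the MTU took effect. The helpers below are a sketch with hypothetical names; they assume the container's interface is called eth0 unless told otherwise.

```shell
# network_mtu NETWORK: MTU option stored on a Docker network
# (typically an empty line when the option was never set).
network_mtu() {
    docker network inspect \
        --format '{{index .Options "com.docker.network.driver.mtu"}}' "$1"
}

# container_mtu CONTAINER [IFACE]: MTU the kernel actually applies
# inside the container, read from sysfs.
container_mtu() {
    docker exec "$1" cat "/sys/class/net/${2:-eth0}/mtu"
}
```

Compose prefixes network names with the project name, so the network is usually called something like myproject_custom-net; `docker network ls` shows the exact name to pass in.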

Using Aliases

Aliases are useful for creating extra names for the containers within a network. This can simplify service discovery and enhance readability within your Compose file. Here’s how to add aliases:

services:
  frontend:
    image: frontend:latest
    networks:
      custom-net:
        aliases:
          - web

networks:
  custom-net:
    driver: bridge

Example: Full Multi-Container Setup with Network Optimization

Let’s look at a more comprehensive example that showcases a multi-container setup with network optimization:

version: '3.8'

services:
  frontend:
    image: frontend:latest
    depends_on:
      - backend
    networks:
      - frontend-backend-net

  backend:
    image: backend:latest
    depends_on:
      - db
    networks:
      - frontend-backend-net
      - db-net

  db:
    image: mysql:latest
    networks:
      - db-net

networks:
  frontend-backend-net:
    driver: overlay
    driver_opts:
      encrypted: "true"
  db-net:
    driver: bridge
    ipam:
      config:
        - subnet: 172.16.238.0/24

Key points in this example:

- Containers frontend and backend share the frontend-backend-net network for optimized communication.

- The db service has its own network, db-net, ensuring isolation yet allowing backend access.

- The overlay driver with encryption enabled is used for secure, multi-host networking. Overlay networks require the Docker engine to be running in swarm mode.

- Custom IPAM configuration gives better control over IP addressing.

With these configurations, you can optimize the network performance of your multi-container applications, leading to smoother and more efficient container interactions.
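Before deploying a Compose file it helps to validate it, since a service that references a network name missing from the top-level networks section is rejected. A minimal sketch, assuming the Docker Compose V2 plugin is installed; validate_compose is a hypothetical wrapper name.

```shell
# validate_compose [FILE]: parse and validate a Compose file without
# starting anything. Returns non-zero when the file is invalid.
validate_compose() {
    docker compose -f "${1:-docker-compose.yml}" config --quiet
}
```

A typical use is `validate_compose docker-compose.yml && docker compose up -d`, so that a mistyped network name stops the deployment before any container starts.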

Conclusion

In this section, we discussed how to leverage Docker Compose for network optimization by defining custom networks, selecting appropriate network drivers, configuring network options, and using aliases. Properly configuring these elements in your docker-compose.yml file can significantly enhance the performance and reliability of your Dockerized applications, ensuring they run smoothly and efficiently in a multi-container environment.

Advanced Network Configuration Techniques

In this section, we take a deeper dive into advanced network configuration techniques to elevate the performance and flexibility of your Docker containers. From multi-host networking with Docker Swarm to integrating third-party network solutions, these techniques will enable you to craft a robust, scalable Docker environment.

Configuring Multi-Host Networking with Docker Swarm

Docker Swarm is a native clustering and orchestration tool for Docker containers. It transforms a pool of Docker hosts into a single, virtual Docker host. Swarm mode is crucial for deployments that span multiple hosts, as it provides seamless multi-host networking.

Steps to Enable Docker Swarm:

- Initiate a Swarm:

Initialize a new swarm on the manager node.

docker swarm init --advertise-addr <MANAGER-IP>

- Join Worker Nodes:

On each worker node, run the following command to join the swarm:

docker swarm join --token <SWARM-TOKEN> <MANAGER-IP>:2377

- Create an Overlay Network:

Overlay networks enable services to communicate across multiple Docker daemon hosts.

docker network create -d overlay my-overlay-network

- Deploy Services:

Deploy services using the overlay network.

docker service create --name my-service --network my-overlay-network my-image
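Two of these steps are easy to script on a manager node: retrieving the worker token and changing the replica count. The function names below are hypothetical, and the sketch assumes the default swarm port 2377 used above.

```shell
# worker_join_command MANAGER_IP
# Run on a manager: prints the command a worker must run to join.
worker_join_command() {
    token=$(docker swarm join-token --quiet worker) || return 1
    echo "docker swarm join --token $token $1:2377"
}

# scale_service NAME REPLICAS: change the replica count of a service.
scale_service() {
    docker service scale "$1=$2"
}
```

For example, `worker_join_command 10.0.0.5` prints a ready-to-paste join command (the address is a placeholder), and `scale_service my-service 3` asks Swarm to run three replicas of the service created in the last step.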

Using Service Discovery and Load Balancing

One of the powerful features of Docker Swarm is its built-in service discovery and load balancing. Docker automatically assigns DNS names to services, enabling seamless service discovery. Additionally, Docker Swarm provides internal load balancing by distributing the requests equally across the available service replicas.

version: '3.7'

services:
  web:
    image: web-app
    deploy:
      replicas: 3
      restart_policy:
        condition: on-failure
    networks:
      - webnet

  redis:
    image: redis:alpine
    deploy:
      replicas: 2
    networks:
      - webnet

networks:
  webnet:
    driver: overlay
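Service discovery can be observed directly. The service name resolves to a single virtual IP that Swarm load-balances, while the special name tasks.SERVICE resolves to the individual replicas. The helpers below are a sketch with made-up names; they assume a manager node and a container image that provides nslookup.

```shell
# service_vips SERVICE: virtual IPs Swarm assigned to the service,
# one per attached network (run on a manager node).
service_vips() {
    docker service inspect \
        --format '{{range .Endpoint.VirtualIPs}}{{.Addr}}{{"\n"}}{{end}}' "$1"
}

# task_ips CONTAINER SERVICE: from inside a container on the same
# overlay network, list the addresses of the service's individual tasks.
task_ips() {
    docker exec "$1" nslookup "tasks.$2"
}
```

When the file is deployed as a stack, the service name carries the stack name as a prefix (for example mystack_web), so pass that full name to both helpers.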

Integrating Third-Party Network Solutions

For advanced networking requirements, Docker can integrate with third-party network solutions like Calico, Weave, and Cilium. These tools provide enhanced network security, monitoring, and scalability features.

Integration Example with Calico:

- Install Calico on Each Node:

Follow the Calico installation guide specific to your operating system and orchestration tool. Calico's Docker network plugin was built for older Calico releases, so confirm that the version you install still supports Docker networking.

- Configure Docker to Use Calico:

Edit the Docker daemon configuration to utilize Calico for networking.

{
  "bridge": "none",
  "ip-forward": true,
  "iptables": false,
  "ipv6": false
}

- Create a Calico IP Pool:

Use the calicoctl command to create the IP pool that Calico allocates container addresses from.

calicoctl create -f - <<EOF
apiVersion: projectcalico.org/v3
kind: IPPool
metadata:
  name: calico-net
spec:
  cidr: 192.168.0.0/16
EOF

- Deploy Containers on the Calico Network:

Ensure your containers are deployed on the Calico-configured network.

docker network create --driver calico --ipam-driver calico-ipam calico-net

docker run --net=calico-net my-container

Conclusion

Advanced network configuration techniques such as configuring multi-host networking with Docker Swarm, leveraging built-in service discovery and load balancing, and integrating with third-party network solutions offer powerful ways to enhance the performance and scalability of your Docker containers. By carefully implementing these techniques, you can ensure that your Dockerized applications run efficiently in complex, multi-host environments.

Monitoring and Troubleshooting Network Performance

Ensuring high-performance network operations within your Docker environment necessitates effective monitoring and timely troubleshooting. This section outlines the essential tools and techniques to help you identify bottlenecks and resolve common network issues. By following these guidelines, you can maintain optimal performance for your Docker containers.

Tools for Monitoring Network Performance

To monitor network performance effectively, you can leverage several built-in Docker commands and third-party tools:

- Docker Stats:

  - The docker stats command provides real-time metrics for all running containers, including CPU, memory, and network I/O usage.

  - Usage example:

    docker stats

  - This command helps you quickly spot containers that are consuming excessive network resources.

- Netstat:

  - The netstat command is invaluable for examining network statistics and identifying active connections and listening ports.

  - Usage example to check active connections:

    netstat -plant

- Ifstat:

  - The ifstat command allows for the monitoring of network interface statistics.

  - Usage example to display continuous updates:

    ifstat -t

- Tcpdump:

  - For in-depth packet analysis, tcpdump is a powerful tool.

  - Usage example to capture packets on a specified network interface:

    tcpdump -i eth0

- Wireshark:

  - Wireshark provides a graphical interface for comprehensive network protocol analysis and debugging.

  - It can be used alongside Docker to capture and analyze packet data in real-time.
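For scripted monitoring, a one-shot snapshot is often easier to work with than the live docker stats display. The sketch below pairs that with a simple rate calculation from the kernel's interface counters; all three function names are hypothetical and the sysfs path assumes Linux.

```shell
# net_io_snapshot: one-shot table of container names and cumulative
# network I/O (received / sent), without the live-updating display.
net_io_snapshot() {
    docker stats --no-stream --format 'table {{.Name}}\t{{.NetIO}}'
}

# bytes_per_second BEFORE AFTER SECONDS: average rate between two
# byte-counter readings (integer division).
bytes_per_second() {
    echo $(( ($2 - $1) / $3 ))
}

# iface_rx_rate IFACE [SECONDS]: sample the received-bytes counter
# twice and print the average receive rate in bytes per second.
iface_rx_rate() {
    counter="/sys/class/net/$1/statistics/rx_bytes"
    secs=${2:-5}
    before=$(cat "$counter")
    sleep "$secs"
    after=$(cat "$counter")
    bytes_per_second "$before" "$after" "$secs"
}
```

Running `iface_rx_rate docker0 10` on the host gives a rough figure for traffic crossing the default bridge; docker0 is the usual name of that interface, though your setup may differ.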

Identifying Network Bottlenecks

Here's a step-by-step approach to identifying and resolving common network bottlenecks:

- Monitor Network Interfaces:

  - Use ifstat to monitor traffic on relevant interfaces. High traffic on a specific interface may indicate a bottleneck.

  - Example:

    ifstat eth0

- Examine Container Metrics:

- Analyze Network Latency:

  - Use ping or traceroute to measure latency within your network. High latency could indicate congestion or suboptimal routing.

  - Examples:

    ping google.com

    traceroute google.com

- Inspect Packet Loss:
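Latency and loss figures are easier to track when extracted from ping output automatically. The two filters below are a sketch that assumes the summary format printed by Linux iputils ping (a line starting with "rtt min/avg/max/mdev"); other ping implementations format the summary differently. The function names are invented.

```shell
# ping_avg_ms: read ping output on stdin and print the average
# round-trip time in milliseconds. Expects the iputils summary line:
#   rtt min/avg/max/mdev = 0.045/0.061/0.089/0.016 ms
ping_avg_ms() {
    awk -F'/' '/^rtt / { print $5 }'
}

# ping_loss_pct: read ping output on stdin and print the packet-loss
# percentage without the percent sign.
ping_loss_pct() {
    sed -n 's/.* \([0-9.]*\)% packet loss.*/\1/p'
}
```

A typical use is `ping -c 5 google.com | ping_avg_ms`, or the same pipeline behind `docker exec` to measure latency between two containers.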

Troubleshooting Common Network Issues

When troubleshooting, focus on common network issues and apply targeted solutions:

- Container Networking Issues:

- Network Driver Mismatches:

  - Ensure you're using the appropriate network driver for your use case. Incorrect drivers can lead to suboptimal performance.

  - Validate with:

    docker network ls

- DNS Resolution Problems:

  - Resolve DNS issues by reviewing and configuring Docker’s DNS settings correctly.

  - Example of DNS configuration in Docker Compose:

    services:
      my_service:
        dns:
          - 8.8.8.8
          - 8.8.4.4

- Overloaded Network Resources:

  - If a network is overloaded with too many containers, consider redistributing the load across multiple networks or hosts.

  - Use docker stats to identify and redistribute containers causing high network traffic.
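To see which networks carry the most containers, you can count attachments per network. This is a rough sketch with hypothetical function names; for swarm overlay networks the count covers only the containers on the local node.

```shell
# containers_on NETWORK: names of the containers attached to a network.
containers_on() {
    docker network inspect \
        --format '{{range .Containers}}{{.Name}}{{"\n"}}{{end}}' "$1"
}

# network_load: print "COUNT NETWORK" for every network, busiest first.
network_load() {
    docker network ls --format '{{.Name}}' | while read -r net; do
        # grep -c exits non-zero on a zero count, hence the "|| true"
        count=$(containers_on "$net" | grep -c . || true)
        echo "$count $net"
    done | sort -rn
}
```

Networks at the top of the `network_load` output are the first candidates for splitting across additional networks or hosts.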

Best Practices for Network Performance Maintenance

- Regular Monitoring:

  - Continuously monitor network performance using the above-mentioned tools to detect and address issues proactively.

- Capacity Planning:

  - Implement capacity planning to anticipate and manage network load efficiently, avoiding unexpected performance hits.

- Performance Testing:

  - Regularly perform load tests using tools like LoadForge to validate network performance under different workloads and make necessary adjustments before issues arise.

By adhering to these guidelines, you can maintain high-performance network operations within your Docker environment, ensuring smooth and efficient container management.

Load Testing with LoadForge

In order to ensure your Docker containers perform optimally under high load, effective load testing is crucial. LoadForge provides a comprehensive platform to simulate realistic traffic patterns, identify bottlenecks, and make necessary adjustments for improved performance. This section will guide you through the process of leveraging LoadForge for performing load tests on your Docker containers.

Setting Up Load Tests with LoadForge

- Create a LoadForge Account: Begin by signing up for a LoadForge account if you haven't already. This will give you access to the dashboard where you can manage your tests.

- Define Your Load Test Plan: Determine the parameters for your load test, such as the number of users, test duration, and endpoints to be tested. This will help in creating a test plan that mimics your expected traffic.

- Prepare Your Docker Environment: Ensure your Docker containers are running and accessible. Below is an example Docker setup for a basic web application:

  version: '3'

  services:
    web:
      image: nginx:alpine
      ports:
        - "80:80"
      networks:
        - webnet

  networks:
    webnet:

- Create a New Test in LoadForge:

  - Log in to LoadForge and navigate to the 'Create New Test' section.

  - Provide a name for your test and select the appropriate HTTP method (GET, POST, etc.).

  - Enter the URL endpoints corresponding to your Docker services.

  - Configure the number of virtual users and the duration for the test.

  For example:

  - Number of Users: 1000

  - Duration: 10 minutes

  - URL: http://your-docker-ip-or-domain/

- Run the Test: Start the load test by clicking on the 'Run Test' button. LoadForge will simulate the defined user load and provide real-time analytics on performance metrics such as response time, error rates, and throughput.
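Before starting a long run, a quick pre-flight request confirms that the endpoint is reachable at all. The sketch below uses curl rather than any LoadForge feature; the function name preflight and the 10-second timeout are arbitrary choices for illustration.

```shell
# preflight URL: print "HTTP_STATUS TOTAL_SECONDS" for one request and
# fail unless the status is 200.
preflight() {
    result=$(curl --silent --output /dev/null --max-time 10 \
        --write-out '%{http_code} %{time_total}' "$1") || return 1
    echo "$result"
    [ "${result%% *}" = "200" ]
}
```

For example, `preflight http://your-docker-ip-or-domain/ || echo "fix the endpoint before load testing"` avoids spending a full test run on a service that is down or misrouted.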

Interpreting Load Test Results

Once your test has completed, review the detailed results provided by LoadForge:

- Response Time: Monitor the average, minimum, and maximum response times to understand how quickly your service responds under load.

- Error Rates: Identify any error responses during the test, which can indicate issues such as server overload or network timeouts.

- Throughput: Analyze the number of requests handled per second to gauge the capacity of your Docker setup.
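If you export raw totals from a test run, the two headline ratios are simple to compute yourself. The helpers below are an illustrative sketch; the function names and the figures in the usage note are invented and are not LoadForge output.

```shell
# error_rate_pct ERRORS TOTAL: error responses as a percentage of all
# requests, to two decimal places.
error_rate_pct() {
    awk -v e="$1" -v t="$2" \
        'BEGIN { printf "%.2f\n", (t > 0) ? 100 * e / t : 0 }'
}

# throughput_rps TOTAL SECONDS: average requests handled per second.
throughput_rps() {
    awk -v t="$1" -v s="$2" \
        'BEGIN { printf "%.1f\n", (s > 0) ? t / s : 0 }'
}
```

With 60000 requests over a 10-minute (600-second) test and 150 errors, `throughput_rps 60000 600` prints 100.0 and `error_rate_pct 150 60000` prints 0.25.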

Making Necessary Adjustments

Based on the insights gained from LoadForge, consider the following adjustments to enhance your Docker container performance:

- Scaling Services: If your containers are underperforming under load, consider scaling out your services. Modify your docker-compose.yml to add more replicas. With a fixed host port such as "80:80", run this under swarm mode (docker stack deploy), where the routing mesh spreads port 80 across the replicas; on a single host without swarm mode, only one replica can bind host port 80.

  version: '3'

  services:
    web:
      image: nginx:alpine
      ports:
        - "80:80"
      networks:
        - webnet
      deploy:
        replicas: 3

  networks:
    webnet:

- Optimizing Configuration: Refine the configurations of your services for better performance. For instance, adjust the worker_processes and worker_connections settings in Nginx to handle more concurrent connections.

- Network Improvements: As previously discussed in this guide, fine-tune your Docker network settings based on the observed bottlenecks. This might include tweaking MTU sizes, leveraging faster network drivers, or using user-defined networks for better isolation.

Continuous Monitoring and Re-testing

Load testing is not a one-time task. Continuously monitor your Docker container performance and regularly re-test with LoadForge to ensure optimal performance under varying load conditions. This proactive approach helps in maintaining a high-performance environment and promptly addressing any emerging issues.
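One way to make re-testing actionable is to compare each new run against a saved baseline. The Python sketch below assumes metrics are stored as plain dictionaries of "lower is better" values such as avg_ms or error_rate. The metric names and the 10% tolerance are hypothetical choices for illustration, not LoadForge features.

```python
def find_regressions(baseline, current, tolerance=0.10):
    """Return metrics that got worse than the baseline by more than tolerance.

    baseline, current: dicts mapping metric name to a numeric value where
    lower is better (for example avg_ms, p95_ms, error_rate).
    tolerance: allowed relative increase, e.g. 0.10 for 10%.
    """
    regressions = {}
    for name, base_value in baseline.items():
        if name not in current:
            continue  # metric was not measured in the new run
        new_value = current[name]
        if new_value > base_value * (1 + tolerance):
            regressions[name] = (base_value, new_value)
    return regressions


if __name__ == "__main__":
    baseline = {"avg_ms": 100, "p95_ms": 200, "error_rate": 0.01}
    current = {"avg_ms": 105, "p95_ms": 260, "error_rate": 0.01}
    print(find_regressions(baseline, current))
```

Running a check like this after each configuration change makes it easier to tie a regression to the specific network or service setting that caused it.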

By leveraging LoadForge for load testing, you can systematically profile your Docker containers, identify performance bottlenecks, and make data-driven decisions to optimize your services. These steps will ensure that your Docker environment remains robust, scalable, and capable of handling high traffic with ease.

Case Studies and Practical Examples

In this section, we will explore real-world examples and case studies that illustrate the successful application of advanced network configurations in Docker containers. These examples will highlight specific challenges, the implemented solutions, and the tangible performance improvements achieved.

Case Study 1: Improving Microservices Communication Performance

Problem: A SaaS company faced latency issues in communication between microservices running across different Docker containers. These latencies were particularly noticeable under high-load conditions, causing slow response times and user dissatisfaction.

Solution: The company switched from the default bridge network to the macvlan network driver. With macvlan, each container gets its own MAC address and appears as a physical device on the network, so traffic bypasses the Linux bridge and NAT used by the default bridge network. This significantly reduced the communication overhead between containers.

Implementation:

docker network create -d macvlan \
  --subnet=192.168.1.0/24 \
  --gateway=192.168.1.1 \
  -o parent=eth0 macvlan_net

docker run -d --network macvlan_net --name service1 my_microservice_image

docker run -d --network macvlan_net --name service2 my_microservice_image
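Because macvlan containers take addresses from the same subnet as the physical LAN, it helps to sanity-check the address plan before creating the network. The Python sketch below uses the standard ipaddress module to do so. The 192.168.1.192/27 container range is a hypothetical value you might pass to docker network create via --ip-range; it does not come from the case study.

```python
import ipaddress


def check_macvlan_plan(subnet, gateway, ip_range):
    """Return a list of problems found in a macvlan address plan.

    subnet, ip_range: CIDR strings such as "192.168.1.0/24".
    gateway: IP address string such as "192.168.1.1".
    An empty list means no problems were detected by these checks.
    """
    net = ipaddress.ip_network(subnet)
    gw = ipaddress.ip_address(gateway)
    rng = ipaddress.ip_network(ip_range)

    problems = []
    if gw not in net:
        problems.append("gateway is outside the subnet")
    if not rng.subnet_of(net):
        problems.append("ip range is not inside the subnet")
    if gw in rng:
        problems.append("ip range includes the gateway address")
    return problems


if __name__ == "__main__":
    # Subnet and gateway match the commands above; the range is an assumption.
    print(check_macvlan_plan("192.168.1.0/24", "192.168.1.1", "192.168.1.192/27"))
```

These checks do not detect collisions with addresses handed out by a DHCP server on the same LAN, so the chosen range should also be excluded from the DHCP pool.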

Results:

- Latency Reduction: Reduced inter-service communication latency by 50%.

- Performance Improvement: Overall response time improved by 35%, resulting in a more responsive application.

Case Study 2: High Traffic E-Commerce Platform

Problem: An e-commerce platform experienced degraded performance during peak shopping seasons due to the high traffic hitting its Docker container-based infrastructure. The primary issue was network congestion and suboptimal load balancing across multiple containers.

Solution: The engineering team implemented Docker Swarm to create an overlay network that could span multiple Docker hosts. They also integrated built-in Swarm load balancing and DNS-based service discovery to efficiently manage container traffic.

Implementation:

docker network create -d overlay --attachable ecomm_overlay_net

docker service create --replicas 5 --network ecomm_overlay_net --name web_server my_ecommerce_image

Results:

- Network Congestion: Reduced by distributing traffic across multiple nodes.

- Scalability: The platform could seamlessly scale up during peak loads without performance bottlenecks.

- Improved User Experience: Enhanced load balancing led to a 40% improvement in page load times.
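Overlay networks such as the one in this case study carry container traffic inside VXLAN, which adds header bytes to every packet. The Python sketch below shows the arithmetic behind choosing an overlay MTU. It assumes an IPv4 underlay without encryption, where the encapsulation overhead is 50 bytes. The function names are illustrative.

```python
# Outer IPv4 (20) + UDP (8) + VXLAN (8) + inner Ethernet (14) headers.
VXLAN_OVERHEAD_BYTES = 50


def overlay_mtu(underlay_mtu, overhead=VXLAN_OVERHEAD_BYTES):
    """Largest MTU containers can use without exceeding the underlay MTU."""
    if underlay_mtu <= overhead:
        raise ValueError("underlay MTU must be larger than the overhead")
    return underlay_mtu - overhead


def fits_without_fragmentation(packet_size, underlay_mtu):
    """True if a container packet of packet_size bytes fits after encapsulation."""
    return packet_size <= overlay_mtu(underlay_mtu)


if __name__ == "__main__":
    for mtu in (1500, 9000):
        print(mtu, overlay_mtu(mtu))
```

If the hosts sit behind a link with a smaller MTU, such as a VPN or some cloud networks, the same subtraction applies to that smaller value, which is why the MTU tuning discussed earlier in this guide matters most on multi-host setups.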

Case Study 3: Optimizing DNS Resolution in a Multi-Container Setup

Problem: A financial services application running in Docker containers encountered delays due to slow DNS resolution, impacting critical data-fetching operations between containers.

Solution: The team optimized DNS settings by deploying a local DNS server within their Docker network. They also relied on Docker's embedded DNS server, which answers at 127.0.0.11 for containers on user-defined networks, for faster resolution of container names, and implemented user-defined networks to isolate DNS traffic.

Implementation:

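# Create the user-defined network that both containers attach to
docker network create my_custom_net

docker run -d --network my_custom_net --name dns_server my_dns_image

docker run -d --network my_custom_net --dns 127.0.0.11 --name app_container my_app_image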

Results:

- DNS Resolution: DNS lookup times were reduced by 60%.

- Data Fetching Speed: Data retrieval operations sped up by 30%, leading to more efficient processing.

- Isolation and Security: User-defined networks provided better isolation, enhancing overall network security.
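To check whether a DNS change has the intended effect in your own environment, you can time lookups from inside a container before and after the change. The Python sketch below uses only the standard library. The time_lookups helper and its resolver parameter are illustrative assumptions, and the hostname in the usage example is the container name from the commands above.

```python
import socket
import time
from statistics import mean


def time_lookups(hostname, attempts=5, resolver=socket.getaddrinfo):
    """Time repeated DNS lookups and return min/avg/max in milliseconds.

    resolver is injectable so the function can be exercised without a
    network; by default it calls socket.getaddrinfo(hostname, None).
    """
    if attempts < 1:
        raise ValueError("attempts must be at least 1")

    durations_ms = []
    for _ in range(attempts):
        start = time.perf_counter()
        resolver(hostname, None)
        durations_ms.append((time.perf_counter() - start) * 1000)

    return {
        "min_ms": min(durations_ms),
        "avg_ms": mean(durations_ms),
        "max_ms": max(durations_ms),
    }


if __name__ == "__main__":
    try:
        print(time_lookups("dns_server"))
    except socket.gaierror as exc:
        print(f"lookup failed: {exc}")
```

Repeated lookups of the same name may be answered from a cache, so the first measurement is often the most representative of a cold lookup.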

Conclusion

These case studies show that deliberate network configuration can yield substantial performance gains in Dockerized environments. Choosing a different network driver, using Docker Swarm for load balancing, and optimizing DNS settings are each viable ways to improve network performance. With continued monitoring and adjustment, as in the examples above, Docker containers can reach the performance that production workloads require.

The metrics reported in each case underscore how directly network configuration affects the efficiency and responsiveness of containerized applications.

Conclusion

Proper network configuration is pivotal to achieving high-performance Docker containers. Throughout this guide, we have discussed various strategies and techniques that can significantly enhance the networking performance of your Docker environment. By understanding and implementing these methods, you can ensure that your applications are running efficiently and are capable of handling increased loads seamlessly.

Key Points Covered:

- Importance of Network Configuration:

  - Network configuration is not just about connectivity; it directly impacts the performance and reliability of your containerized applications.

  - Misconfigured networks can lead to bottlenecks, increased latency, and overall degraded performance.

- Docker Networking Model:

  - Docker provides multiple network drivers such as bridge, host, overlay, and macvlan, each with its use cases and performance implications.

  - Understanding how these drivers work and when to use them sets the foundation for advanced network configuration.

- Choosing the Right Network Driver:

  - The selection of the appropriate network driver depends on the specific requirements of your application.

  - We compared the network drivers in terms of speed, flexibility, and complexity to help you make informed decisions.

- Optimizing Network Settings:

  - Fine-tuning settings like MTU sizes, DNS configurations, and leveraging network namespaces can lead to substantial performance improvements.

  - Practical tips were provided to adjust these settings effectively.

- Utilizing User-Defined Networks:

  - Creating custom networks offers better control over IP addressing and enhances isolation.

  - The performance benefits of user-defined networks were explored in detail.

- Docker Compose for Network Optimization:

  - Docker Compose simplifies multi-container deployments and allows for precise network configurations.

  - Detailed instructions on specifying and optimizing networks within Docker Compose files were provided.

- Advanced Network Configuration Techniques:

  - Techniques such as configuring multi-host networking with Docker Swarm, service discovery, load balancing, and integration with third-party solutions were discussed for advanced users.

- Monitoring and Troubleshooting:

  - Monitoring network performance and identifying bottlenecks are crucial for maintaining high performance.

  - Tools and commands to assist in monitoring and troubleshooting Docker network performance were outlined.

- Load Testing with LoadForge:

  - Load testing is essential to validate the performance of your Docker containers under stress.

  - Step-by-step instructions on leveraging LoadForge to perform load tests, interpret results, and make necessary adjustments were covered to ensure robust application performance.

- Case Studies and Practical Examples:

  - Real-world examples and metrics were provided to showcase successful applications of the discussed techniques, reinforcing the effectiveness of proper network configuration.

Continuous Monitoring and Optimization:

Network performance tuning is not a one-time setup but an ongoing process. Frequent monitoring, coupled with real-time analysis of network traffic and performance metrics, helps you identify and resolve issues promptly. Tools like LoadForge help validate performance under different load scenarios, so you can confirm that your Docker containers remain tuned for peak performance.

Investing time in understanding and configuring your Docker network settings appropriately can yield significant performance gains. Whether you are managing a small set of containers or a complex, multi-host Docker Swarm deployment, the principles and techniques shared in this guide will serve as a valuable resource in your journey toward high-performance containerized applications.

By continually optimizing and monitoring your Docker networks, you can maintain a robust, efficient, and scalable infrastructure tailored to meet your application's demands.