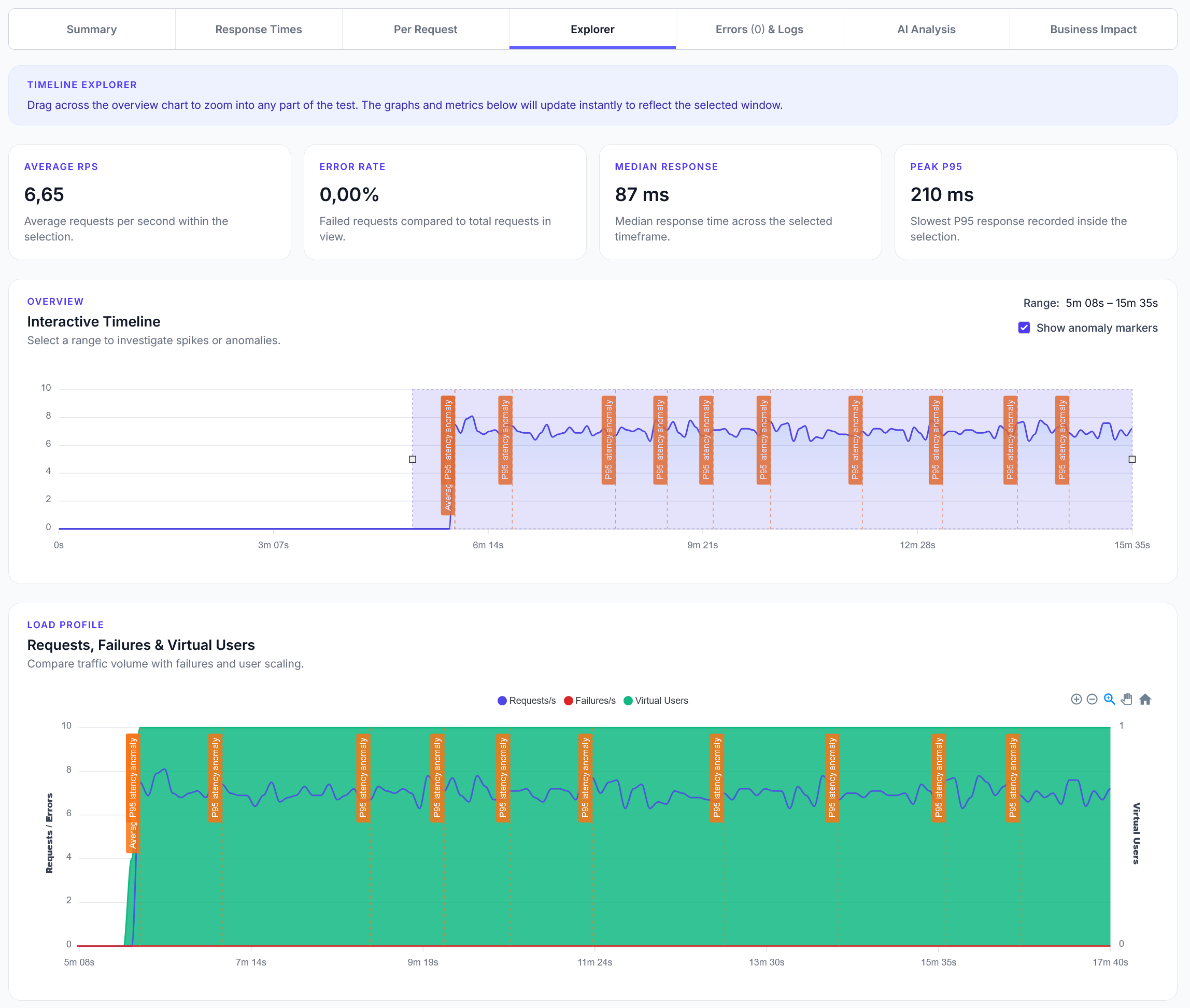

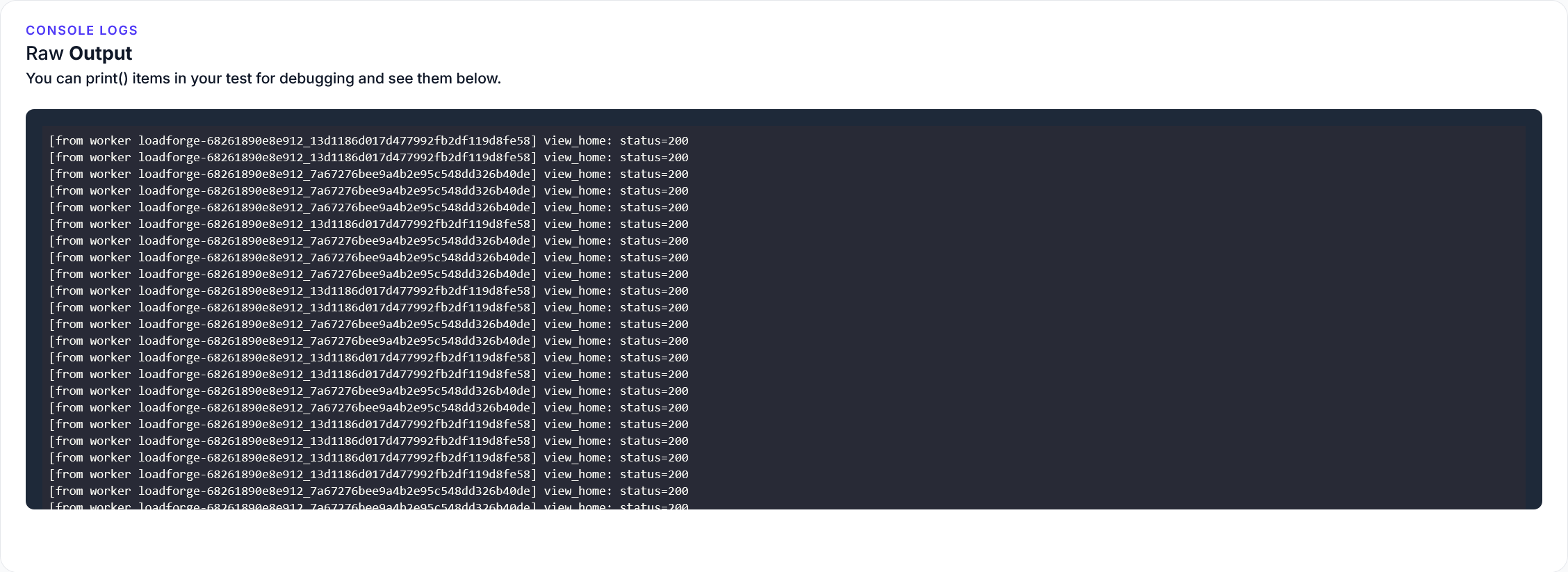

TLDR: LoadForge now offers comprehensive debug logging for test runs, allowing users to use Python print() statements in Locustfiles to log and view detailed insights on test behaviors, application performance, and potential issues directly in test results.

We're excited to announce a powerful new feature for LoadForge users: comprehensive debug logging for test runs. This highly requested capability makes it easier than ever to troubleshoot, validate, and understand what's happening during your load tests.

With this update, you can now use simple Python print() statements in your Locustfiles to log debug information that will be automatically collected and displayed in your test results. This provides valuable insights into the behavior of your test scripts and the application under test.

Using the debug logging feature is straightforward:

Add print() statements to your Locustfile wherever you need to log information.

Here's a simple example:

```python
from locust import HttpUser, task, between

class QuickstartUser(HttpUser):
    wait_time = between(1, 5)

    @task
    def view_home(self):
        r = self.client.get("/")
        print(f"view_home: status={r.status_code}")
```

This will log the status code of each request to your home page, making it easy to verify that requests are succeeding.

Log detailed information about API responses to verify data integrity:

```python
import json  # needed for json.JSONDecodeError below

# Inside a task method of your HttpUser class:
with self.client.get("/api/products", catch_response=True) as response:
    try:
        data = response.json()
        print(f"Products API: status={response.status_code}, count={len(data['products'])}")

        if len(data['products']) == 0:
            print("WARNING: Products API returned empty list")
            response.failure("Empty product list")
    except json.JSONDecodeError:
        print(f"ERROR: Invalid JSON response: {response.text[:100]}...")
        response.failure("Invalid JSON")
```
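The check above raises a KeyError if the response is valid JSON but has no `products` key. One way to guard against that is to move the validation into a small helper that returns a verdict and a log line. This is a minimal sketch: `check_products_payload` is a hypothetical helper name, not part of Locust or LoadForge, and the payload shape (`{"products": [...]}`) is assumed from the example above.

```python
def check_products_payload(data):
    """Return (ok, message) for a decoded /api/products response body."""
    # Guard against payloads that are valid JSON but not the expected shape.
    if not isinstance(data, dict) or "products" not in data:
        return False, "ERROR: Products API response has no 'products' key"
    products = data["products"]
    if not isinstance(products, list):
        return False, "ERROR: 'products' is not a list"
    if len(products) == 0:
        return False, "WARNING: Products API returned empty list"
    return True, f"Products API: count={len(products)}"


# Assumed usage inside a task, after data = response.json():
#     ok, message = check_products_payload(data)
#     print(message)
#     if not ok:
#         response.failure(message)
```

Keeping the check separate from the request also means it can be exercised without running a load test.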

Selectively log information about slow requests:

```python
r = self.client.get(f"/products?category={category_id}")

# Only log slow responses
if r.elapsed.total_seconds() > 1.0:
    print(f"SLOW REQUEST: /products?category={category_id} took {r.elapsed.total_seconds():.2f}s")
```
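If several tasks need the same check, the threshold comparison can live in one place. The following is a rough sketch rather than a LoadForge API: `log_if_slow` and `SLOW_THRESHOLD_SECONDS` are hypothetical names, and the 1.0 second default simply mirrors the example above.

```python
SLOW_THRESHOLD_SECONDS = 1.0  # assumed default, matching the example above


def log_if_slow(name, elapsed_seconds, threshold=SLOW_THRESHOLD_SECONDS):
    """Print a SLOW REQUEST line when elapsed_seconds exceeds threshold.

    Returns True if a line was printed, False otherwise.
    """
    if elapsed_seconds > threshold:
        print(f"SLOW REQUEST: {name} took {elapsed_seconds:.2f}s")
        return True
    return False


# Assumed usage inside a task:
#     r = self.client.get(f"/products?category={category_id}")
#     log_if_slow("/products", r.elapsed.total_seconds())
```

Passing a per-endpoint `threshold` lets you hold fast endpoints to a tighter limit than slow ones without duplicating the print logic.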

Create structured logs to track complex user journeys:

```python
def log(self, message):
    """Custom logging with session context"""
    print(f"[{self.session_id}] {message}")

@task
def multi_step_process(self):
    self.log("Starting multi-step process")
    # ... perform steps ...
    self.log("Process completed")
```
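The `log` helper reads `self.session_id`, which the snippet above does not define. One possible way to supply it is a small mixin that assigns an ID when the simulated user starts. This is a sketch under assumptions: `SessionLogMixin` and `format_line` are hypothetical names, and the 8-character random ID is an arbitrary choice. `on_start` is the standard Locust hook that runs once per simulated user.

```python
import uuid


class SessionLogMixin:
    """Gives each simulated user a short random session_id and a log() helper."""

    def on_start(self):
        # Locust calls on_start once per simulated user before its tasks run.
        self.session_id = uuid.uuid4().hex[:8]

    def format_line(self, message):
        # Prefix every message with the session ID so lines can be grouped per user.
        return f"[{self.session_id}] {message}"

    def log(self, message):
        print(self.format_line(message))


# Assumed usage in a Locustfile:
#     class JourneyUser(SessionLogMixin, HttpUser):
#         ...
```

If your user class defines its own `on_start`, call `super().on_start()` from it so the session ID is still assigned.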

To get the most out of debug logging, we recommend:

For more detailed information on using debug logging in your LoadForge tests, check out our new documentation page.

We're continuously working to improve LoadForge based on your feedback. Debug logging is just one of many enhancements we have planned to make your load testing experience more productive and insightful.

Have questions or feedback about this new feature? We'd love to hear from you! Reach out to us at hello@loadforge.com or use the live chat in the LoadForge dashboard.

Happy testing!

The LoadForge Team