Explorer reports addition

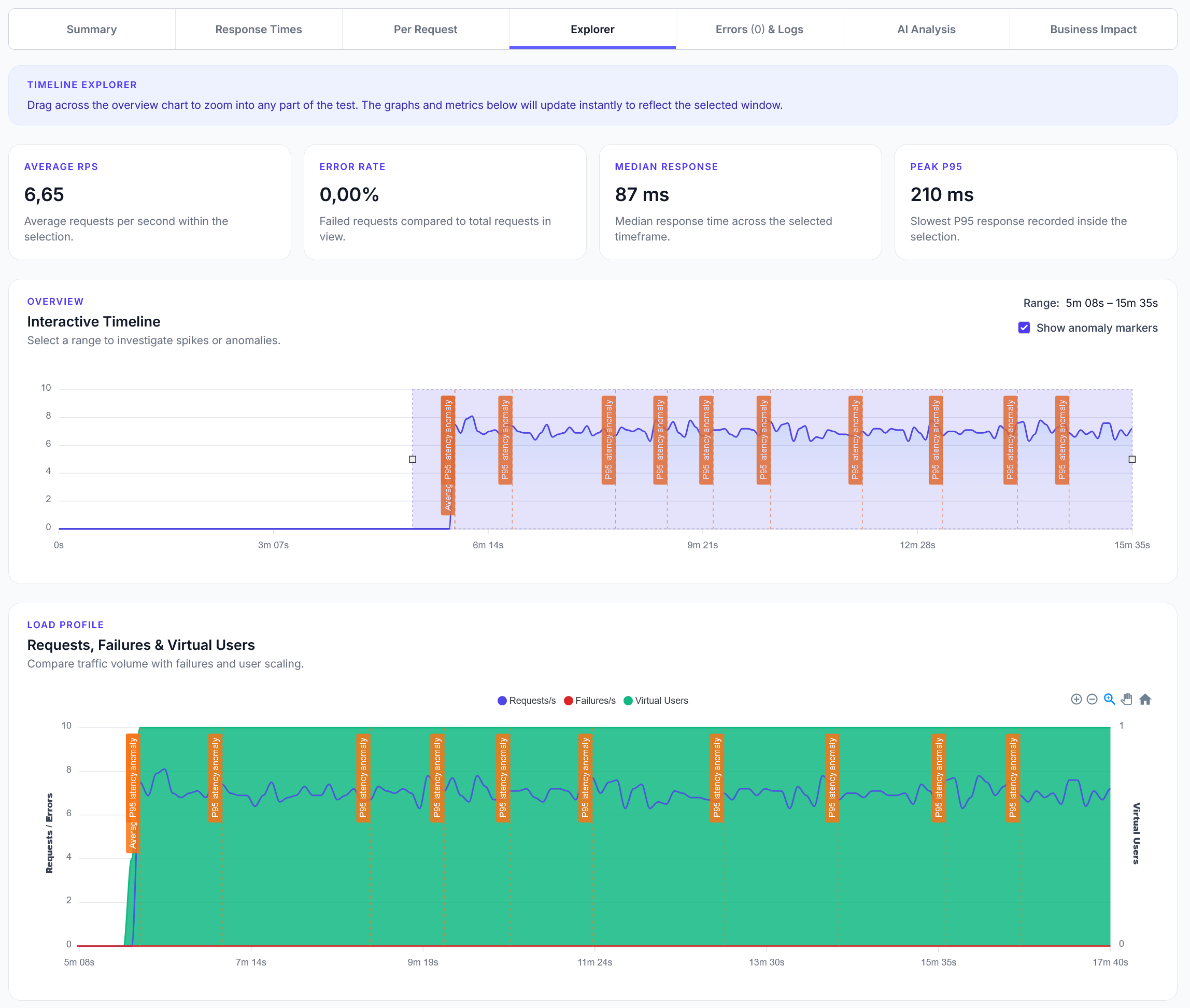

We have added a new Explorer feature to reports, with a timeline scrubber and easy anomaly detection.

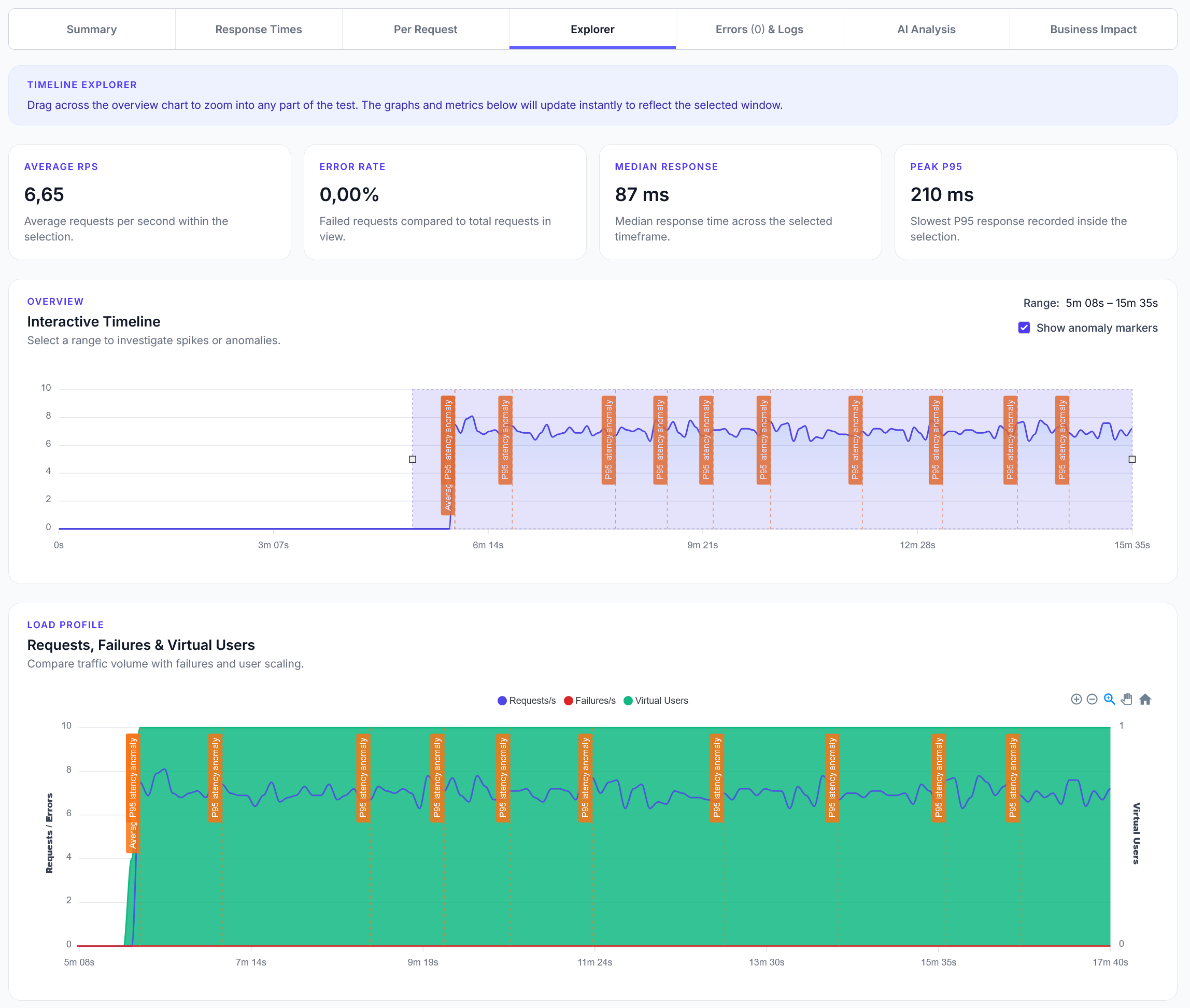

We have introduced a new AI executive summary on the Summary page of Run reports for all our clients.

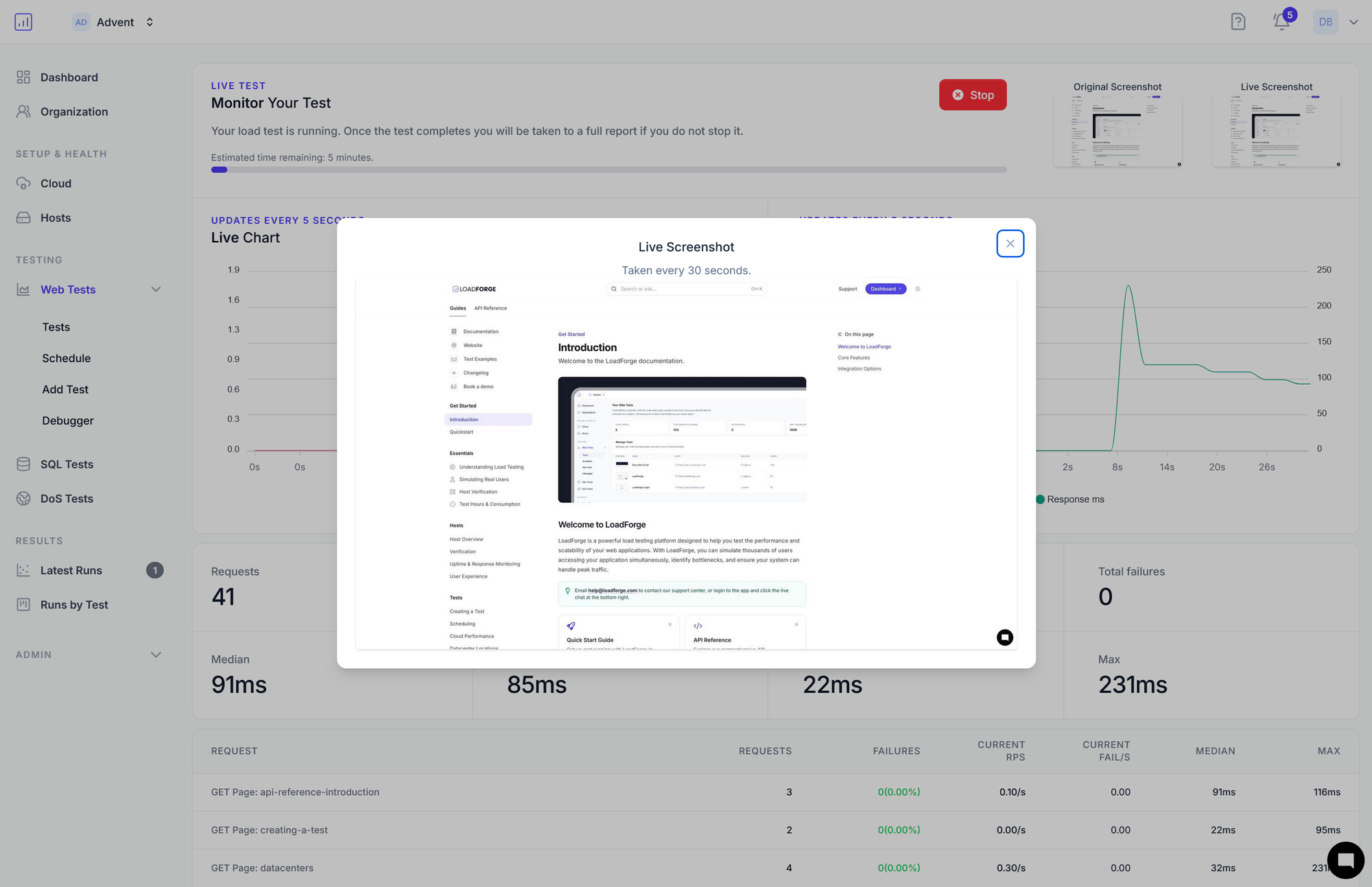

TLDR: We've introduced full-size clickable screenshots, faster test launches in 20-30 seconds, improved crawler speed and depth, and significantly quicker AI analysis by switching to o3-mini.

Sometimes it's the small things that make the load testing experience better!

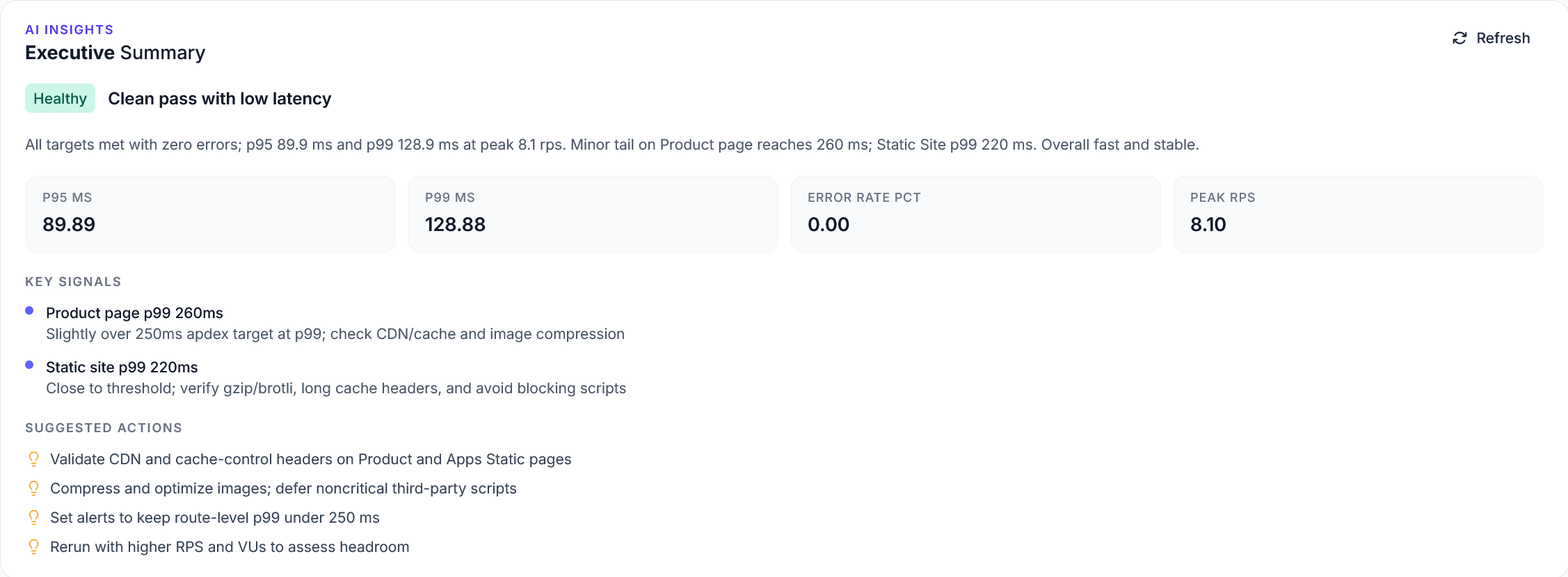

We've rolled out an update that lets you click screenshots in the live monitor and on the report page to view them at full size. To our surprise, this was requested quite often!

We've also made tests launch faster on our cloud (where no installation is required). Load runners now start in 20-30 seconds, so your test can be fully running in about half a minute.

We've also made the crawler faster and increased its depth, so it finds more pages in less time. This primarily improves the Wizard when creating tests.

And finally, AI analysis and AI improvements to test scripts should be around 2.5-3x faster, as we've switched from GPT-4o to o3-mini.

LoadForge Team