

In the world of web applications, performance is a critical factor that can significantly influence user experience and overall success. One of the key strategies to enhance performance is caching, a technique that stores frequently accessed data in a way that reduces the need for repeated computation or database queries. In the context of Phoenix, a high-performance web framework built with Elixir, caching can be a game-changer, ensuring that your applications are both fast and scalable.

Caching offers several benefits that make it an indispensable part of any high-performing web application:

Despite these advantages, implementing an effective caching strategy requires careful consideration and fine-tuning. Misconfigured caches can lead to stale data, inconsistent application states, and even unexpected failures.

Phoenix provides several built-in caching mechanisms and supports integration with various external caching systems. To configure caching effectively within a Phoenix application, developers must understand several key concepts and techniques:

As we delve into this guide, we will explore these concepts in greater detail, providing practical examples and best practices for each. By the end of this guide, you will have a comprehensive understanding of the various caching strategies available in Phoenix and how to implement them effectively to optimize the performance of your applications.

Remember, the ultimate goal is not just to cache, but to cache smartly. Properly implemented caching strategies can make your Phoenix application not only perform better under normal conditions but also remain resilient and responsive under heavy load. To that end, we will also cover how to use LoadForge for load testing your caching strategies, ensuring they hold up under real-world stress.

Let’s embark on this journey to master caching in Phoenix, diving into each aspect with the depth and clarity it deserves.

Caching is a crucial concept in web development that can dramatically improve the performance and scalability of your applications. At its core, caching involves storing copies of data in a temporary storage location, so future requests for that data can be served faster. Instead of hitting the database or performing expensive calculations each time, a cached response can be delivered quickly, enhancing the overall speed and efficiency of the application.

Caching is the process of storing copies of files or data in a temporary storage medium, so they can be accessed more quickly than fetching them from their original source. This temporary storage is often referred to as a "cache." By reducing the number of times resources need to be fetched or recalculated, caching can save time and reduce the load on servers and databases.

Understanding different types of caching is essential for implementing effective caching strategies. Here are some of the most common forms of caching:

HTTP Caching: This involves using HTTP headers to cache the responses of web requests. Common HTTP headers for caching include Cache-Control and ETag.

In-Memory Caching: This method stores data in RAM for very fast access. The data can live inside the application's own memory, as with Erlang Term Storage (ETS) in Elixir/Phoenix applications, or in a dedicated in-memory data store such as Redis running as a separate service.

Fragment Caching: This involves caching parts or fragments of a page rather than the entire page. This is useful for personalizing content while still benefiting from caching.

Static Asset Caching: Caching static files like images, CSS, and JavaScript can significantly reduce load times for end users.

Database Query Caching: By storing the results of database queries, you can avoid hitting your database server for the same data repeatedly.

Implementing caching in your Phoenix (Elixir) application yields several advantages:

Improved Performance: Cached data can be retrieved much faster than fetching the original data. This minimizes latency and enhances the user experience.

Reduced Load: By caching frequently accessed data, you can significantly reduce the number of requests to your database or API, lowering the load on your servers.

Cost Efficiency: Caching helps in cutting down on resource consumption, which can translate into cost savings, particularly in cloud environments where you're billed based on usage.

Scalability: A well-implemented caching strategy enables your application to handle a higher number of concurrent users without degrading performance.

To illustrate a simple caching example, let's consider a Phoenix controller that caches the result of an expensive calculation using the :ets in-memory store.

```elixir
defmodule MyAppWeb.PageController do
  use MyAppWeb, :controller

  require Logger

  # Assumes a public, named ETS table :my_cache was created at application
  # startup (for example by a GenServer, as shown later in this guide).
  def index(conn, _params) do
    case :ets.lookup(:my_cache, :expensive_calculation) do
      [{:expensive_calculation, result}] ->
        Logger.info("Serving from cache")
        send_resp(conn, 200, result)

      [] ->
        result = perform_expensive_calculation()
        :ets.insert(:my_cache, {:expensive_calculation, result})
        Logger.info("Serving from computation")
        send_resp(conn, 200, result)
    end
  end

  defp perform_expensive_calculation do
    # Expensive calculation logic here
    "Expensive Data"
  end
end
```

In this example, the result of perform_expensive_calculation/0 is stored in an ETS table. Subsequent requests check the cache first and only perform the calculation if the data is not already cached.

By grasping these caching basics, you can start to see how different types of caching mechanisms can be applied to optimize your Phoenix (Elixir) application. In the following sections, we will delve deeper into specific caching solutions and strategies tailored for Phoenix applications.

Caching is an integral part of optimizing web application performance, and Phoenix offers several built-in caching solutions that you can use effectively. These caching mechanisms help reduce response times, lessen database load, and enhance the overall user experience. In this section, we’ll explore the caching solutions provided natively within the Phoenix framework, showcasing how and when to utilize them.

Plug.Conn for HTTP Caching

Phoenix provides native support for setting HTTP caching headers via Plug.Conn. These HTTP headers (such as Cache-Control, ETag, and Last-Modified) enable browsers and proxies to cache responses, reducing the need for repeated requests to the server.

For example, you can set a simple Cache-Control header in your Phoenix controller as follows:

```elixir
defmodule MyAppWeb.PageController do
  use MyAppWeb, :controller

  def index(conn, _params) do
    conn
    |> put_resp_header("cache-control", "public, max-age=3600")
    |> render("index.html")
  end
end
```

This will instruct the client to cache the response for one hour (3600 seconds).

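One common approach in HTTP caching is the use of ETag and Last-Modified headers to enable conditional GET requests. The client stores these validators with its cached copy and sends them back on the next request (in If-None-Match and If-Modified-Since). If the content has not changed, the server answers 304 Not Modified with an empty body instead of retransmitting it.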

Here's an example of setting an ETag:

```elixir
defmodule MyAppWeb.PageController do
  use MyAppWeb, :controller

  def show(conn, %{"id" => id}) do
    page = MyApp.Pages.get_page!(id)
    # ETag values are quoted strings in HTTP
    etag = ~s("#{:crypto.hash(:sha256, page.content) |> Base.encode16(case: :lower)}")

    conn
    |> put_resp_header("etag", etag)
    |> conditional_render(page, etag)
  end

  # The ETag is passed in explicitly: the pinned ^etag below needs it in scope.
  defp conditional_render(conn, page, etag) do
    case get_req_header(conn, "if-none-match") do
      [^etag] -> send_resp(conn, 304, "")
      _ -> render(conn, "show.html", page: page)
    end
  end
end
```

This way, if the content hasn’t changed (i.e., the ETag matches), the server responds with a 304 Not Modified, resulting in less bandwidth usage.
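The example above only handles a single, exactly matching ETag. A real If-None-Match header may list several ETags, use `*`, or carry weak `W/` validators, and HTTP specifies weak comparison for this header. Below is a minimal sketch in plain Elixir; the `ConditionalGet` module and its function names are hypothetical (not part of Plug or Phoenix), and the comma split assumes your ETags contain no commas, which holds for hex-encoded hashes.

```elixir
defmodule ConditionalGet do
  @moduledoc """
  Hypothetical helper: ETag generation and If-None-Match evaluation
  for GET/HEAD requests. Not part of Plug or Phoenix.
  """

  # Builds a strong, quoted ETag from a response body (binary or iodata).
  def strong_etag(body) do
    hash = :crypto.hash(:sha256, body) |> Base.encode16(case: :lower)
    ~s("#{hash}")
  end

  # Returns true when the client's cached copy is still valid, i.e. the
  # server may answer 304 Not Modified. If-None-Match uses weak comparison,
  # so a W/ prefix on either side is ignored.
  def not_modified?(nil, _etag), do: false

  def not_modified?(if_none_match, etag) do
    case String.trim(if_none_match) do
      "*" ->
        true

      header ->
        # Simplification: assumes no ETag contains a comma.
        header
        |> String.split(",")
        |> Enum.map(&String.trim/1)
        |> Enum.any?(&(opaque(&1) == opaque(etag)))
    end
  end

  # Strips the weak indicator so W/"x" compares equal to "x".
  defp opaque("W/" <> tag), do: tag
  defp opaque(tag), do: tag
end
```

In a controller, you could pass `Enum.join(get_req_header(conn, "if-none-match"), ",")` as the first argument and respond with `send_resp(conn, 304, "")` when the result is true.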

Phoenix compiles templates into functions of your view (or HTML) modules at build time, so rendering never re-reads or re-parses template files at runtime; in development, the code reloader recompiles a template only when it changes. A closely related production setting is the static manifest produced by mix phx.digest, which lets Phoenix map asset paths to their digested, long-cacheable filenames:

```elixir
# config/prod.exs
config :my_app, MyAppWeb.Endpoint,
  # Manifest written by mix phx.digest; used to resolve digested asset paths
  cache_static_manifest: "priv/static/cache_manifest.json",
  # Unrelated to caching: restricts which origins may open WebSocket connections
  check_origin: ["//myapp.com"]
```

Because templates are precompiled, template rendering needs no separate cache configuration to stay fast under load.

Although it is not part of Phoenix itself, Erlang Term Storage (ETS), which ships with Erlang/OTP, is a powerful in-memory storage solution that is easy to use from Elixir/Phoenix. It allows fast, transient storage of application-wide data; the data is lost when the table's owning process or the node stops.

Here's a brief setup for an ETS-based cache:

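```elixir
defmodule MyApp.Cache do
  use GenServer

  def start_link(_) do
    GenServer.start_link(__MODULE__, %{}, name: __MODULE__)
  end

  # The GenServer owns the table; the table disappears if this process exits.
  def init(_) do
    # :public lets callers write directly. With the default :protected
    # access, put/2 would raise when called from any process but the owner.
    :ets.new(:cache_table, [:set, :public, :named_table, read_concurrency: true])
    {:ok, %{}}
  end

  def put(key, value) do
    :ets.insert(:cache_table, {key, value})
  end

  def get(key) do
    case :ets.lookup(:cache_table, key) do
      [{_, value}] -> {:ok, value}
      [] -> :error
    end
  end
end
```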

This example demonstrates how to create a simple ETS-backed cache. You can extend it further to manage eviction policies, expiration times, and more.
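As a sketch of one such extension, assuming a per-entry time-to-live is what you need: the variant below stores an absolute expiry time next to each value and treats expired entries as misses. The `TtlCache` module and table name are illustrative. Monotonic time is used so that wall-clock adjustments cannot expire or revive entries.

```elixir
defmodule TtlCache do
  @moduledoc "Illustrative ETS-backed cache with per-entry expiration."
  use GenServer

  @table :ttl_cache_table

  def start_link(_opts \\ []), do: GenServer.start_link(__MODULE__, :ok, name: __MODULE__)

  @impl true
  def init(:ok) do
    # :public so callers read and write directly, without a GenServer round trip
    :ets.new(@table, [:named_table, :set, :public, read_concurrency: true])
    {:ok, nil}
  end

  # Stores the value with an absolute expiry time in monotonic milliseconds.
  def put(key, value, ttl_ms) do
    expires_at = System.monotonic_time(:millisecond) + ttl_ms
    :ets.insert(@table, {key, value, expires_at})
    :ok
  end

  # Expired entries count as misses and are deleted lazily on read.
  def get(key) do
    now = System.monotonic_time(:millisecond)

    case :ets.lookup(@table, key) do
      [{^key, value, expires_at}] when expires_at > now ->
        {:ok, value}

      [{^key, value, expires_at}] ->
        # delete_object only removes this exact row, so a fresh value
        # written concurrently by another process is left alone
        :ets.delete_object(@table, {key, value, expires_at})
        :error

      [] ->
        :error
    end
  end
end
```

Expired entries that are never read again stay in the table, so pair lazy expiry with a periodic sweep if memory matters.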

HTTP caching headers (Cache-Control, ETag, Last-Modified): when you need to control client-side caching behavior, especially for static resources or API endpoints.

By taking advantage of these built-in caching mechanisms, you set a solid foundation for enhancing the performance of your Phoenix applications. In the upcoming sections, we'll delve deeper into advanced caching strategies, leveraging external caching stores, and more.

HTTP caching is a powerful mechanism to improve the performance and scalability of your Phoenix application by reducing the need to repeatedly fetch unchanged resources. By leveraging HTTP caching, you can not only decrease server load but also offer quicker response times to your users. In Phoenix, this can be efficiently implemented using the Plug library.

HTTP headers like ETag and Cache-Control play a significant role in managing caching policies. These headers inform browsers and intermediate caches (like CDNs) about how and when to cache the content.

ETag (Entity Tag) is a unique identifier for a specific version of a resource. When a resource changes, its ETag changes. This helps clients and servers validate if a cached version of a resource is still usable.

To set an ETag header in a Phoenix application, you can use a custom Plug.

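```elixir
defmodule MyAppWeb.Plugs.SetETag do
  import Plug.Conn

  def init(options), do: options

  # The response body does not exist yet when a plug runs before the action,
  # so the ETag is computed in a callback that runs just before sending.
  def call(conn, _opts) do
    register_before_send(conn, &add_etag/1)
  end

  defp add_etag(%Plug.Conn{method: method, status: 200, resp_body: body} = conn)
       when method in ["GET", "HEAD"] and not is_nil(body) do
    new_etag = calculate_etag(body)

    conn
    |> put_resp_header("etag", new_etag)
    |> handle_if_none_match(new_etag)
  end

  defp add_etag(conn), do: conn

  # ETags are quoted strings; :crypto.hash/2 accepts iodata bodies.
  defp calculate_etag(body) do
    ~s("#{:crypto.hash(:md5, body) |> Base.encode64()}")
  end

  # A before_send callback cannot halt, but it can swap in a 304 with no body.
  defp handle_if_none_match(conn, etag) do
    case get_req_header(conn, "if-none-match") do
      [^etag] -> resp(conn, 304, "")
      _ -> conn
    end
  end
end
```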

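Now add the plug to a controller. The `when action in [...]` guard only works inside controllers; in a router pipeline or the endpoint, plug the module without the guard:

```elixir
plug MyAppWeb.Plugs.SetETag when action in [:show, :index]
```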

The Cache-Control header specifies directives for caching mechanisms in both requests and responses. Here’s how you can set the Cache-Control header:

```elixir
defmodule MyAppWeb.Plugs.SetCacheControl do
  import Plug.Conn

  def init(options), do: options

  def call(conn, _opts) do
    conn
    |> put_resp_header("cache-control", "public, max-age=3600")
  end
end
```

Include this plug where necessary:

```elixir
plug MyAppWeb.Plugs.SetCacheControl when action in [:show, :index]
```
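If several plugs set Cache-Control, it can help to build the header from a keyword list instead of hand-writing strings. The `CacheControl` helper below is a hypothetical sketch, not part of Plug, and covers only a handful of common response directives.

```elixir
defmodule CacheControl do
  @moduledoc "Hypothetical helper that builds a Cache-Control header value."

  # Directives emitted as bare tokens when set to true.
  @flags [:public, :private, :no_cache, :no_store, :must_revalidate, :immutable]
  # Directives that take a number of seconds.
  @seconds [:max_age, :s_maxage, :stale_while_revalidate]

  def build(opts) do
    opts
    |> Enum.flat_map(&directive/1)
    |> Enum.join(", ")
  end

  defp directive({name, true}) when name in @flags, do: [token(name)]
  defp directive({name, false}) when name in @flags, do: []

  defp directive({name, secs}) when name in @seconds and is_integer(secs) and secs >= 0,
    do: ["#{token(name)}=#{secs}"]

  defp directive(other),
    do: raise(ArgumentError, "unsupported directive: #{inspect(other)}")

  # :max_age -> "max-age", :s_maxage -> "s-maxage"
  defp token(name), do: name |> Atom.to_string() |> String.replace("_", "-")
end
```

Inside a plug you would then write `put_resp_header(conn, "cache-control", CacheControl.build(public: true, max_age: 3600))`.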

For comprehensive caching, you may want to use both ETag and Cache-Control:

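```elixir
defmodule MyAppWeb.Plugs.CacheHeaders do
  import Plug.Conn

  def init(options), do: options

  def call(conn, _opts) do
    conn
    |> put_resp_header("cache-control", "public, max-age=3600")
    # The body is only known after the action renders it, so the ETag is
    # computed in a callback that runs just before the response is sent.
    |> register_before_send(&add_etag/1)
  end

  defp add_etag(%Plug.Conn{method: method, status: 200, resp_body: body} = conn)
       when method in ["GET", "HEAD"] and not is_nil(body) do
    etag = calculate_etag(body)

    conn
    |> put_resp_header("etag", etag)
    |> handle_if_none_match(etag)
  end

  defp add_etag(conn), do: conn

  # ETags are quoted strings; :crypto.hash/2 accepts iodata bodies.
  defp calculate_etag(body) do
    ~s("#{:crypto.hash(:md5, body) |> Base.encode64()}")
  end

  # A before_send callback cannot halt, but it can swap in a 304 with no body.
  defp handle_if_none_match(conn, etag) do
    case get_req_header(conn, "if-none-match") do
      [^etag] -> resp(conn, 304, "")
      _ -> conn
    end
  end
end
```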

Add this combined plug to your controllers:

```elixir
plug MyAppWeb.Plugs.CacheHeaders when action in [:show, :index]
```

Using Plug for configuring HTTP caching in Phoenix allows you to handle caching headers efficiently and flexibly. By setting up ETag and Cache-Control headers, you can significantly improve the client-side performance and reduce server load. These strategies, when used appropriately, ensure that your Phoenix application remains responsive and scalable. In the next sections, we will explore additional caching approaches including in-memory caching, external caching stores, and load testing your caching strategy with LoadForge.

In-memory caching can significantly enhance the performance of your Phoenix (Elixir) application by reducing the need to fetch data from external sources repeatedly. One robust solution for in-memory caching in Elixir is Erlang Term Storage (ETS). ETS can hold large amounts of data in memory and, for the default `:set` table type, provides constant-time lookups and inserts (`:ordered_set` tables are O(log n)).

Here's how you can implement in-memory caching with ETS in your Phoenix application.

First, let's set up an ETS table. This can be done during the application's startup. Typically, this is done in your application’s supervision tree. You can create a GenServer to manage your ETS table lifecycle:

```elixir
defmodule MyApp.Cache do
  use GenServer

  # Starting the GenServer and initializing the ETS table
  def start_link(_) do
    GenServer.start_link(__MODULE__, :ok, name: __MODULE__)
  end

  def init(:ok) do
    :ets.new(:my_cache, [:named_table, :public, read_concurrency: true])
    {:ok, %{}}
  end

  # Public API for setting a cache entry
  def put(key, value) do
    :ets.insert(:my_cache, {key, value})
  end

  # Public API for fetching a cache entry
  def get(key) do
    case :ets.lookup(:my_cache, key) do
      [{_key, value}] -> {:ok, value}
      [] -> :error
    end
  end

  # Public API for deleting a cache entry
  def delete(key) do
    :ets.delete(:my_cache, key)
  end
end
```

To store data into your ETS cache, add a convenient function in your Phoenix context or controller:

```elixir
defmodule MyApp.SomeContext do
  alias MyApp.Cache

  def store_to_cache(key, value) do
    Cache.put(key, value)
  end
end
```

You can then call this function whenever you want to cache specific data:

```elixir
MyApp.SomeContext.store_to_cache("user_123", %{"name" => "John", "age" => 30})
```

To retrieve the cached data, implement a function that checks the ETS table first before hitting the main data source:

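```elixir
defmodule MyApp.SomeContext do
  # Repo and User are assumed to be your app's Ecto repo and schema modules
  alias MyApp.{Cache, Repo, User}

  def get_from_cache(key) do
    case Cache.get(key) do
      {:ok, value} -> value
      :error -> fetch_from_db(key)
    end
  end

  defp fetch_from_db(key) do
    case Repo.get(User, key) do
      # Don't cache misses, so a user created later is still found
      nil ->
        nil

      user ->
        Cache.put(key, user)
        user
    end
  end
end
```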

Here's how you can integrate ETS caching within a Phoenix controller:

```elixir
defmodule MyAppWeb.UserController do
  use MyAppWeb, :controller

  alias MyApp.SomeContext

  def show(conn, %{"id" => id}) do
    user = SomeContext.get_from_cache(id)
    render(conn, "show.html", user: user)
  end
end
```

For cases where data is updated or deleted, make sure to reflect those changes in the cache:

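```elixir
defmodule MyApp.SomeContext do
  # Repo and User are assumed to be your app's Ecto repo and schema modules
  alias MyApp.{Cache, Repo, User}

  def update_user(id, attrs) do
    # Load the current record so the changeset diffs against real data
    result =
      User
      |> Repo.get!(id)
      |> User.changeset(attrs)
      |> Repo.update()

    # Invalidate after the write; the next read repopulates the cache
    Cache.delete(id)
    result
  end

  def delete_user(id) do
    # Repo.delete/1 takes a struct or changeset, not a schema and an ID
    result = User |> Repo.get!(id) |> Repo.delete()
    Cache.delete(id)
    result
  end
end
```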

ETS provides a highly efficient way to implement in-memory caching in Phoenix applications. This section showed you how to set up an ETS table, insert and retrieve data, and manage cache consistency. Properly leveraging ETS can significantly reduce data access times and improve overall application performance.

In the next sections, we will explore how to integrate external caching systems like Redis and delve into more advanced caching strategies for various parts of your Phoenix application. Feel free to continue reading to expand your caching toolkit further.

In scenarios where you need distributed caching or find that built-in solutions do not meet your scaling requirements, integrating an external caching store will be essential. Redis is one of the most popular choices due to its high performance, versatility, and ease of integration with Phoenix (Elixir).

Redis offers various advantages over other caching solutions:

Before integrating Redis with Phoenix, you need to have a Redis server up and running. You can run Redis locally with Docker using:

```bash
docker run --name phoenix-redis -d -p 6379:6379 redis
```

To start using Redis in your Phoenix application, add the redix library (the Redis client) to your dependencies in mix.exs. The list below also includes cachex, an in-memory caching library that you can use as a fast local cache alongside Redis:

```elixir
defp deps do
  [
    {:redix, "~> 1.1"},
    {:cachex, "~> 3.3"}
  ]
end
```

Run `mix deps.get` to install the dependencies.

Add the following configuration to your config/config.exs:

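```elixir
# App-specific setting: your cache module must read it itself
# (e.g. with Application.get_env/3). :timer.minutes/1 returns milliseconds.
config :my_app, MyApp.Cache,
  default_ttl: :timer.minutes(5)
```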

Initialize the Redix connection in your application supervision tree:

```elixir
defmodule MyApp.Application do
  use Application

  def start(_type, _args) do
    children = [
      {Redix, name: :redix}
    ]

    Supervisor.start_link(children, strategy: :one_for_one, name: MyApp.Supervisor)
  end
end
```

Cachex itself is an in-memory cache built on ETS: it does not store entries in Redis and has no Redix backend. To keep cache entries in Redis, so that every node of your application shares them, a small module can call Redix directly. A minimal version that serializes values with Erlang's external term format:

```elixir
defmodule MyApp.Cache do
  @conn :redix

  @spec get(String.t()) :: {:ok, term()} | :miss | {:error, term()}
  def get(key) do
    case Redix.command(@conn, ["GET", key]) do
      {:ok, nil} -> :miss
      # :safe refuses to create new atoms from the stored bytes
      {:ok, binary} -> {:ok, :erlang.binary_to_term(binary, [:safe])}
      {:error, _} = error -> error
    end
  end

  # ttl is in seconds; Redis deletes the key once it expires (SET ... EX)
  @spec put(String.t(), term(), pos_integer()) :: :ok | {:error, term()}
  def put(key, value, ttl \\ 300) do
    command = ["SET", key, :erlang.term_to_binary(value), "EX", Integer.to_string(ttl)]

    case Redix.command(@conn, command) do
      {:ok, "OK"} -> :ok
      {:error, _} = error -> error
    end
  end
end
```

Integrate caching into your context by using the functions defined in your cache module:

```elixir
defmodule MyApp.SomeContext do
  alias MyApp.Cache

  def get_data(id) do
    case Cache.get("data:#{id}") do
      {:ok, data} ->
        data

      # Treat misses and Redis errors alike: fall back to the database
      _miss_or_error ->
        data = fetch_data_from_db(id)
        Cache.put("data:#{id}", data)
        data
    end
  end

  defp fetch_data_from_db(_id) do
    # Your database fetching logic here
  end
end
```

Integrating an external caching system like Redis with your Phoenix application can vastly enhance performance, especially in a distributed environment. Because every application instance reads from and writes to the same Redis server, cached entries are shared across nodes instead of being duplicated (and drifting apart) in each node's memory, and Redis adds features such as per-key expiration that go beyond a basic in-memory table.

By setting up Redis with Redix (optionally keeping Cachex as a fast local cache in front of it), you can significantly reduce latency and load on your backend systems, ultimately creating a more efficient and responsive application.

Efficiently caching static assets is crucial for reducing server load and improving the load times of your Phoenix (Elixir) application. Static assets include images, CSS files, JavaScript files, and other resources that do not change frequently. By caching these assets, you ensure that repeat visitors experience a faster loading website without repeatedly fetching the same content from the server.

Caching static assets provides multiple benefits:

Effective caching starts with setting proper HTTP cache headers for your static assets. This instructs the browser and intermediary caches on how to cache the files.

The Cache-Control header is the primary mechanism for controlling caching behavior. You can set this header to specify the maximum age for which the asset should be cached.

Example:

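```elixir
plug Plug.Static,
  at: "/static",
  from: :my_app,
  gzip: false,
  # Sent with responses to requests that have no "vsn" query parameter.
  # One year (31536000 seconds) is only safe for files whose URL changes
  # whenever their content changes.
  cache_control_for_etags: "public, max-age=31536000"
```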

The ETag (Entity Tag) header helps browsers determine if a cached version of an asset is still valid. Plug.Static generates ETags for static files automatically and answers matching If-None-Match requests with 304 Not Modified, so no extra option is needed. There is no `etag:` option; `:etag_generation` lets you plug in a custom generator if you need one.

```elixir
plug Plug.Static,
  at: "/static",
  from: :my_app,
  gzip: false
```

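Serving compressed static assets reduces their size, leading to faster transmission over the network. With `gzip: true`, Plug.Static serves FILE.gz in place of FILE when that file exists and the client's Accept-Encoding allows gzip. It does not compress files on the fly, so the .gz files must be generated ahead of time (mix phx.digest does this for you):

```elixir
plug Plug.Static,
  at: "/static",
  from: :my_app,
  gzip: true,
  cache_control_for_etags: "public, max-age=31536000"
```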

Plug.Static has no separate option for precompressed files: serving them is exactly what `gzip: true` does, and `brotli: true` does the same for .br files. If you precompress during your own build process instead of with mix phx.digest (many build tools, such as Webpack, can generate gzipped versions of your assets), make sure the build outputs the compressed files alongside the originals:

```elixir
plug Plug.Static,
  at: "/static",
  from: :my_app,
  gzip: true,
  # Serve FILE.br when present and the client accepts Brotli
  brotli: true,
  cache_control_for_etags: "public, max-age=31536000"
```

Although caching assets is beneficial, it necessitates cache busting to ensure users get the latest versions of resources when they change. Cache busting updates the file URL when the content changes, typically by appending a hash or version number to the filename.
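The idea behind content-hash cache busting fits in a few lines: hash the file's bytes and embed the hash in the filename, so any change yields a new URL while unchanged files keep theirs. This is a conceptual sketch with a hypothetical `DigestName` module; it is not the exact naming scheme mix phx.digest uses.

```elixir
defmodule DigestName do
  @moduledoc "Illustrates content-hash cache busting; not mix phx.digest itself."

  # "css/app.css" + contents -> "css/app-<32 hex chars>.css"
  def digested_path(path, contents) do
    hash = :crypto.hash(:md5, contents) |> Base.encode16(case: :lower)
    Path.rootname(path) <> "-" <> hash <> Path.extname(path)
  end
end
```

Because the URL changes whenever the bytes change, the digested file can be cached for a very long time without risking stale content.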

mix phx.digest

Phoenix includes built-in support for cache busting with the mix phx.digest task, which generates hashed filenames for your static assets:

```bash
mix phx.digest
```

This will create files like:

```
app.css -> app-<hash>.css
app.js -> app-<hash>.js
```

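Make sure to reference assets through static_path in your templates rather than hard-coding their paths. When cache_static_manifest is configured (typically in production), each call resolves to the digested file, for example /css/app-<hash>.css:

```eex
<%= static_path(@conn, "/css/app.css") %>
<%= static_path(@conn, "/js/app.js") %>
```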

Combining all these strategies, a typical configuration for serving static assets in your Phoenix endpoint.ex file might look like:

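```elixir
plug Plug.Static,
  at: "/",
  from: :my_app,
  # Serve precompressed .gz files (generated by mix phx.digest) when accepted
  gzip: true,
  # Only serve your top-level static folders and files; adjust to your app
  only: ~w(assets fonts images favicon.ico robots.txt),
  # Requests without a "vsn" parameter: cache briefly, then revalidate via ETag
  cache_control_for_etags: "public, max-age=86400",
  # Digested URLs (with ?vsn=d) never change content, so cache them for a year
  cache_control_for_vsn_requests: "public, max-age=31536000, immutable"
```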

By implementing these caching strategies for static assets, you can significantly reduce the load on your Phoenix server and deliver a faster, more responsive user experience. Remember to test these settings under various conditions to ensure they work as expected, and combine them with other caching strategies discussed throughout this guide for the best performance outcomes.

Fragment caching is a powerful technique to optimize the performance of your Phoenix application by caching portions of rendered views that do not change frequently. By avoiding the need to re-render static or infrequently changing parts of your templates, you can significantly reduce server load and improve response times.

In web applications, certain parts of the UI remain constant across multiple requests. Common examples include navigation menus, footers, or sections of a dashboard that get updated infrequently. Rather than rendering these fragments repeatedly, you can store them in a cache and serve the cached version until it expires or gets invalidated.
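One common way to make invalidation automatic is to put something that changes with the data (a version number or an updated_at timestamp) into the cache key, so an update simply produces a miss. A minimal ETS sketch of that idea, using a hypothetical `FragmentStore` module; note that old versions stay in the table until something else evicts them, such as a TTL.

```elixir
defmodule FragmentStore do
  @moduledoc "Illustrative fragment cache keyed by {name, version}."

  @table :fragment_store

  def new, do: :ets.new(@table, [:named_table, :set, :public, read_concurrency: true])

  # Returns the cached fragment for {name, version}, rendering it on a miss.
  def fetch(name, version, render_fun) do
    key = {name, version}

    case :ets.lookup(@table, key) do
      [{^key, html}] ->
        html

      [] ->
        html = render_fun.()
        :ets.insert(@table, {key, html})
        html
    end
  end
end
```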

Phoenix does not have a built-in fragment caching mechanism. However, you can leverage other components like ConCache and custom helpers to achieve effective fragment caching. Here's a step-by-step guide on how you can implement fragment caching in your Phoenix templates.

Add Dependencies

First, add con_cache to your mix.exs file:

```elixir
defp deps do
  [
    {:con_cache, "~> 1.0"}
  ]
end
```

Then, fetch the dependencies:

```bash
mix deps.get
```

Configure ConCache

Set up ConCache in your application supervisor:

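```elixir
defmodule MyApp.Application do
  use Application

  def start(_type, _args) do
    children = [
      # ttl_check_interval: how often expired entries are purged;
      # global_ttl: default lifetime of each entry
      {ConCache,
       [name: :fragment_cache, ttl_check_interval: :timer.seconds(60), global_ttl: :timer.minutes(30)]}
      # ...your other children
    ]

    opts = [strategy: :one_for_one, name: MyApp.Supervisor]
    Supervisor.start_link(children, opts)
  end
end
```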

Create a Caching Helper

Define a module to handle fragment caching:

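```elixir
defmodule MyAppWeb.FragmentCache do
  # ConCache.get_or_store/3 returns the cached value for key or, on a miss,
  # runs fun, stores its result, and returns it. It locks the key while
  # doing so, so a fragment is rendered once even under concurrent requests.
  def get_or_store(key, fun) do
    ConCache.get_or_store(:fragment_cache, key, fun)
  end
end
```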

Use the Helper in Your Templates

Update your Phoenix templates to use the caching helper. For example, to cache a navigation menu:

```elixir
defmodule MyAppWeb.LayoutView do
  use MyAppWeb, :view

  alias MyAppWeb.FragmentCache

  def render_nav do
    FragmentCache.get_or_store("navigation_menu", fn ->
      render_to_string(MyAppWeb.SharedView, "_nav.html", %{})
    end)
  end
end
```

And then in your layout template, which can call LayoutView functions directly:

```eex
<%= raw render_nav() %>
```

Here's an example of how to cache a complex dashboard widget that does not change frequently. First, create the widget rendering function in the view:

```elixir
defmodule MyAppWeb.DashboardView do
  use MyAppWeb, :view

  alias MyAppWeb.FragmentCache

  def render_stats_widget do
    FragmentCache.get_or_store("dashboard_stats_widget", fn ->
      render_to_string(MyAppWeb.SharedView, "_stats_widget.html", %{})
    end)
  end
end
```

Then, use this cached widget in your dashboard template:

```eex
<div class="dashboard">
  <!-- Other parts of the dashboard -->
  <%= raw render_stats_widget() %>
</div>
```

Implementing fragment caching in Phoenix templates can greatly enhance the performance and scalability of your application. By focusing on parts of the UI that are static or change infrequently, you can ensure efficient use of resources while maintaining a highly responsive user interface. In the following sections, we will explore additional caching strategies to further optimize your Phoenix application.

Database query caching is a crucial aspect of optimizing Phoenix (Elixir) applications, as it can significantly minimize database load and enhance overall performance. Caching query results instead of repeatedly executing the same database queries helps reduce latency and resource consumption. In this section, we will explore various techniques for implementing database query caching in Phoenix applications.

Before diving into the implementation details, it's essential to understand why caching database queries is beneficial:

ETS is an in-memory storage mechanism provided by the Erlang runtime, making it a powerful tool for caching data in Phoenix applications. Here’s how you can implement query caching with ETS:

```elixir
defmodule MyApp.Cache do
  use GenServer

  def start_link(_) do
    GenServer.start_link(__MODULE__, %{}, name: __MODULE__)
  end

  def init(state) do
    :ets.new(:query_cache, [:named_table, :set, :public])
    {:ok, state}
  end

  def get(key) do
    case :ets.lookup(:query_cache, key) do
      [{_key, value}] -> {:ok, value}
      [] -> :miss
    end
  end

  def put(key, value) do
    :ets.insert(:query_cache, {key, value})
  end
end
```

When querying the database, first check the cache for existing results:

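```elixir
# Hypothetical context module. Don't put this in MyApp.Repo: that module is
# your Ecto repository, and redefining it would remove Repo.get/2.
defmodule MyApp.Accounts do
  # User is assumed to be your app's Ecto schema module
  alias MyApp.{Cache, Repo, User}

  def get_user_by_id(id) do
    # Namespace the key so user IDs don't collide with other cached queries
    key = {:user, id}

    case Cache.get(key) do
      {:ok, user} ->
        user

      :miss ->
        user = Repo.get(User, id)
        Cache.put(key, user)
        user
    end
  end
end
```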

Redis is a widely-used in-memory data structure store that provides persistent and distributed caching. Integrating Redis with Phoenix can be done using the Redix library:

```elixir
defp deps do
  [
    {:redix, "~> 1.1"}
  ]
end
```

Configure and start the Redis connection in your application's supervision tree:

```elixir
defmodule MyApp.Application do
  use Application

  def start(_type, _args) do
    children = [
      {Redix, name: :redix}
    ]

    opts = [strategy: :one_for_one, name: MyApp.Supervisor]
    Supervisor.start_link(children, opts)
  end
end
```

Implement cache retrieval and storage in Redis:

```elixir
defmodule MyApp.RedisCache do
  @namespace "cache:queries"

  def get(key) do
    case Redix.command!(:redix, ["GET", cache_key(key)]) do
      nil -> :miss
      # :safe refuses to create new atoms from the stored bytes
      value -> {:ok, :erlang.binary_to_term(value, [:safe])}
    end
  end

  def put(key, value) do
    Redix.command!(:redix, ["SET", cache_key(key), :erlang.term_to_binary(value)])
  end

  defp cache_key(key), do: "#{@namespace}:#{key}"
end

# Hypothetical context module: MyApp.Repo is your Ecto repository and
# must not be redefined. User is assumed to be your Ecto schema module.
defmodule MyApp.Accounts do
  alias MyApp.{RedisCache, Repo, User}

  def get_user_by_id(id) do
    case RedisCache.get("user:#{id}") do
      {:ok, user} ->
        user

      :miss ->
        user = Repo.get(User, id)
        RedisCache.put("user:#{id}", user)
        user
    end
  end
end
```

Implementing database query caching is essential for improving the performance and scalability of Phoenix applications. By using in-memory storage solutions like ETS and external caching systems like Redis, you can effectively reduce the load on your database, decrease latency, and enhance user experiences. In the next sections, we'll continue to explore various caching strategies and their implementation in Phoenix applications.

Ensuring that your caching strategies are working correctly is critical for maintaining the performance and reliability of your Phoenix application. This section will explore various strategies and approaches to test cached content effectively.

One of the first steps in validating your caching strategy is to incorporate unit tests for your caching logic. This ensures that the cache behaves as expected during typical operations. ExUnit, Elixir's built-in test framework (which Phoenix projects use), can be used for this purpose.

Here's a basic example of how you might write a unit test for an in-memory cache using ETS:

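```elixir
defmodule MyApp.CacheTest do
  # Not async: the cache uses a globally named ETS table
  use ExUnit.Case, async: false

  # Tests the ETS-backed MyApp.Cache (put/2, get/1) shown earlier
  alias MyApp.Cache

  setup do
    # Starts the cache under the test supervisor; it is stopped after each test
    start_supervised!(Cache)
    :ok
  end

  test "stores and retrieves a value" do
    Cache.put("key", "value")
    assert Cache.get("key") == {:ok, "value"}
  end

  test "cache miss" do
    assert Cache.get("nonexistent_key") == :error
  end
end
```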

Beyond unit tests, integration tests can be used to see how well your cache integrates with other parts of your Phoenix application. Tools like bypass can help to mock external requests and responses, ensuring that your cache is correctly storing and retrieving data as expected.

To ensure end-to-end functionality, you can write tests for your Phoenix controllers that validate whether cached responses are being returned. For instance:

```elixir
defmodule MyAppWeb.PageControllerTest do
  use MyAppWeb.ConnCase

  # Assumes the index action sets an ETag, e.g. via one of the plugs above
  test "caches index page response", %{conn: conn} do
    # First request: capture the ETag from the full response
    conn = get(conn, "/")
    assert conn.status == 200
    [etag] = get_resp_header(conn, "etag")

    # Second request with If-None-Match header
    conn = build_conn() |> put_req_header("if-none-match", etag) |> get("/")
    assert conn.status == 304
  end
end
```

Using browser developer tools like Chrome DevTools, you can inspect HTTP headers and verify that caching headers like ETag and Cache-Control are correctly set. This is a quick way to manually confirm the presence and behavior of cache-related headers.

Tools like Selenium or Cypress can be used to automate browser-based tests, simulating real user interactions and verifying cached content. Automated acceptance tests can be part of your Continuous Integration (CI) pipeline to ensure that cache policies are consistently working correctly.

Real User Monitoring tools like New Relic or Datadog can help you gather data from actual users, seeing how often cached content is being served and measuring performance metrics. This provides valuable insights into the real-world effectiveness of your caching strategies.

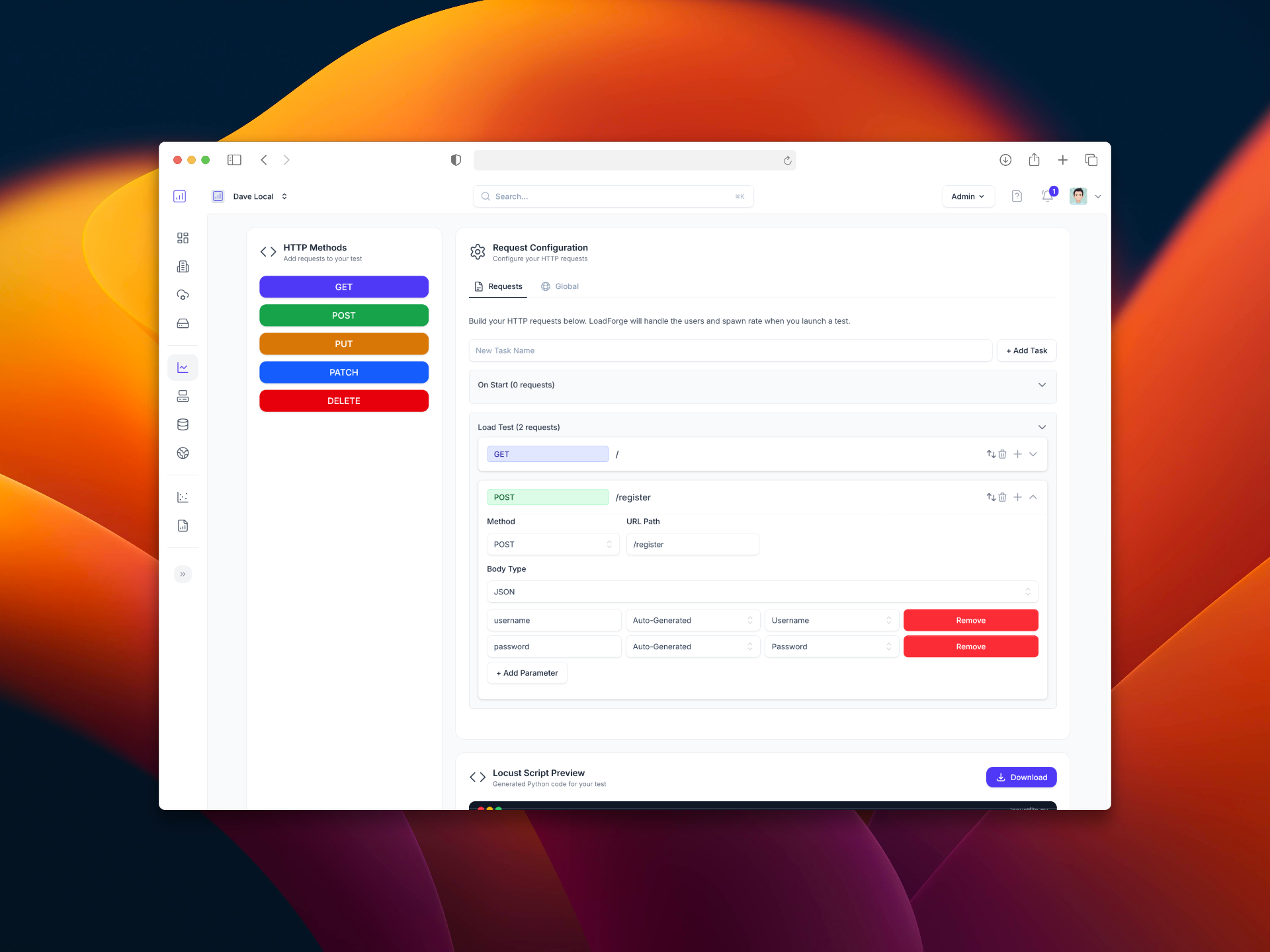

Finally, using a tool like LoadForge, you can simulate high traffic to your cached endpoints to ensure that your caching strategies hold up under load. By defining specific scenarios in LoadForge, you can benchmark your application's performance and tweak your caching setup accordingly.

To load test a cached endpoint using LoadForge:

These approaches collectively help ensure that your caching strategies are not just theoretically sound but also practically effective. By incorporating these testing methodologies, you can confidently optimize the performance of your Phoenix application.

Effectively managing cache expiration is vital to ensure that your Phoenix application serves fresh content while maintaining optimal performance. In this section, we will discuss tips and tools for monitoring cache performance and establishing suitable cache expiration policies.

Monitoring your cache performance involves tracking hit ratios, response times, and memory usage. These metrics can help you understand how your caching layer is performing and identify areas for improvement.

Telemetry: Phoenix is instrumented with the :telemetry library, and you can use the same library for your cache. By emitting events from your cache layer with :telemetry.execute/3 and attaching a handler to them, you can collect data on cache hit rates, misses, and other relevant metrics.

Example:

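```elixir
defmodule MyAppWeb.CacheTelemetry do
  # Attach once at startup, e.g. from MyApp.Application.start/2
  def attach do
    :telemetry.attach(
      "cache-metrics",
      [:my_app, :cache, :request],
      &__MODULE__.handle_event/4,
      nil
    )
  end

  # Invoked for every event your cache layer emits, for example
  # (the measurement and metadata keys here are illustrative):
  # :telemetry.execute([:my_app, :cache, :request], %{duration: d}, %{result: :hit})
  def handle_event([:my_app, :cache, :request], measurements, metadata, _config) do
    IO.inspect(measurements, label: "cache measurements")
    IO.inspect(metadata, label: "cache metadata")
  end
end
```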

Prometheus: For more advanced monitoring and visualization, integrate Prometheus. The prometheus_ex and prometheus_plugs libraries let you expose your metrics over HTTP in a format that Prometheus can scrape and analyze.
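Whichever exporter you choose, hit-ratio monitoring comes down to two counters. The following dependency-free sketch tracks hits and misses with OTP's :counters module, computes the hit ratio, and renders both values by hand in Prometheus's plain-text exposition format (normally an exporter library produces this for you). The module name CacheStats and the metric names are hypothetical.

```elixir
defmodule CacheStats do
  # Index 1 holds hits, index 2 holds misses (atomic, lock-free counters).
  @hits 1
  @misses 2

  def new, do: :counters.new(2, [:write_concurrency])

  def record(ref, :hit), do: :counters.add(ref, @hits, 1)
  def record(ref, :miss), do: :counters.add(ref, @misses, 1)

  # Fraction of lookups served from the cache; 0.0 before any lookups.
  def hit_ratio(ref) do
    hits = :counters.get(ref, @hits)
    total = hits + :counters.get(ref, @misses)
    if total == 0, do: 0.0, else: hits / total
  end

  # Render both counters in the Prometheus text exposition format.
  def to_prometheus(ref) do
    """
    # TYPE my_app_cache_hits_total counter
    my_app_cache_hits_total #{:counters.get(ref, @hits)}
    # TYPE my_app_cache_misses_total counter
    my_app_cache_misses_total #{:counters.get(ref, @misses)}
    """
  end
end
```

A telemetry handler could call CacheStats.record/2 for each cache event, assuming your cache layer includes a :hit or :miss value in the event metadata.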

Managing cache expiration policies involves setting the appropriate duration for stored items, ensuring that stale data is evicted in a timely manner.

Time-based Expiration: Use a time-based expiration policy to define how long an item should remain in the cache. This is typically done using TTL (Time-To-Live) values.

Example with Redis:

```elixir
# EX sets the TTL in seconds; Redix returns {:ok, "OK"} when SET succeeds
{:ok, "OK"} = Redix.command(:redis_conn, ["SET", "key", "value", "EX", "3600"])
```

Example with ETS:

```elixir
# ETS has no built-in TTL, so store the expiry with the value: {key, value, expires_at}
:ets.insert(:my_ets_table, {key, value, System.system_time(:second) + 3600})
```
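ETS never evicts anything on its own: a stored expiry timestamp only matters if reads check it. A minimal sketch of a TTL cache over ETS that treats expired rows as misses and deletes them on first read. The module name TtlCache and the {key, value, expires_at} row layout are assumptions; monotonic time is used so that wall-clock adjustments cannot extend or cut short an entry's lifetime.

```elixir
defmodule TtlCache do
  # Rows are {key, value, expires_at}, with expires_at in monotonic milliseconds.
  def new(name) do
    :ets.new(name, [:set, :public, :named_table, read_concurrency: true])
  end

  def put(table, key, value, ttl_ms) do
    expires_at = System.monotonic_time(:millisecond) + ttl_ms
    :ets.insert(table, {key, value, expires_at})
  end

  # Returns {:ok, value} for a live entry, :miss for an absent or expired one.
  def get(table, key) do
    now = System.monotonic_time(:millisecond)

    case :ets.lookup(table, key) do
      [{^key, value, expires_at}] when expires_at > now ->
        {:ok, value}

      [{^key, _value, _expired_at}] ->
        # Lazy eviction: remove the stale row the first time it is read.
        :ets.delete(table, key)
        :miss

      [] ->
        :miss
    end
  end
end
```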

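LRU (Least Recently Used) Strategy: Evict the entries that have gone unused the longest once the cache reaches a size limit. Libraries like Cachex can enforce a size limit for you; note that Cachex's built-in limit policy (Cachex.Policy.LRW) evicts the least recently written entries, which approximates LRU.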

Configuration with Cachex:

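```elixir
defmodule MyApp.Cache do
  # Record helpers such as limit/1 and fallback/1 (targets the Cachex 3.x API)
  import Cachex.Spec

  # Define our cache: at most 100 entries, misses loaded via MyApp.Fallback.fetch/1
  def start_link do
    Cachex.start_link(:my_cache,
      limit: limit(size: 100, policy: Cachex.Policy.LRW, reclaim: 0.1),
      fallback: fallback(default: &MyApp.Fallback.fetch/1)
    )
  end

  # Read-through lookup: returns the cached value, or computes and stores it
  # via the fallback ({:commit, value}) on a miss
  def fetch(key) do
    case Cachex.fetch(:my_cache, key) do
      {:error, _reason} -> nil
      {_status, value} -> value
    end
  end
end
```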
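Because Cachex's built-in policy evicts by write time, you may want true least-recently-used semantics instead. The bookkeeping is small enough to sketch in pure Elixir: an immutable structure (the hypothetical LRUCache) that stamps each access with a logical clock and keeps a :gb_trees index from stamp to key, so the oldest stamp is always the eviction candidate. This is an illustration only; a cache shared across processes would typically wrap it in a GenServer or use a library.

```elixir
defmodule LRUCache do
  # entries: key => {value, tick}; order: tick => key.
  # The smallest tick in `order` always belongs to the least recently used key.
  defstruct capacity: 1, tick: 0, entries: %{}, order: :gb_trees.empty()

  def new(capacity) when is_integer(capacity) and capacity > 0 do
    %__MODULE__{capacity: capacity}
  end

  # Returns {value, cache} on a hit (marking the key most recently used),
  # or {nil, cache} on a miss.
  def get(%__MODULE__{} = cache, key) do
    case Map.fetch(cache.entries, key) do
      {:ok, {value, tick}} -> {value, touch(cache, key, value, tick)}
      :error -> {nil, cache}
    end
  end

  # Inserts or updates a key; evicts the least recently used entry when full.
  def put(%__MODULE__{} = cache, key, value) do
    case Map.fetch(cache.entries, key) do
      {:ok, {_old_value, tick}} ->
        touch(cache, key, value, tick)

      :error ->
        cache = if map_size(cache.entries) >= cache.capacity, do: evict(cache), else: cache
        record(cache, key, value)
    end
  end

  # Forget the key's old position, then record it again with a fresh tick.
  defp touch(cache, key, value, old_tick) do
    record(%{cache | order: :gb_trees.delete(old_tick, cache.order)}, key, value)
  end

  defp record(cache, key, value) do
    tick = cache.tick + 1

    %{
      cache
      | tick: tick,
        entries: Map.put(cache.entries, key, {value, tick}),
        order: :gb_trees.insert(tick, key, cache.order)
    }
  end

  # Drop the entry with the smallest tick, i.e. the least recently used key.
  defp evict(cache) do
    {_tick, key, order} = :gb_trees.take_smallest(cache.order)
    %{cache | order: order, entries: Map.delete(cache.entries, key)}
  end
end
```

For example, with a capacity of 2, putting :a and :b, reading :a, and then putting :c evicts :b, because :a was used more recently.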

Custom Expiration Logic: Implement your own custom expiration logic if you have specific requirements that are not covered by standard strategies.

Example:

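```elixir
# Returns :delete when the entry's remaining TTL (ms) has dropped below
# min_remaining_ms, so the caller can evict it early; otherwise :ok.
# Entries without a TTL report {:ok, nil} and fall through to :ok.
defp check_expiration(cache, key, min_remaining_ms) do
  case Cachex.ttl(cache, key) do
    {:ok, remaining} when is_integer(remaining) and remaining < min_remaining_ms -> :delete
    _ -> :ok
  end
end
```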
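Expiry that is checked only on read leaves stale rows that are never read again sitting in memory. A common custom complement is a periodic sweep. The sketch below assumes ETS rows shaped {key, value, expires_at} with monotonic-millisecond timestamps; the module name EtsSweeper and the one-minute default interval are hypothetical choices.

```elixir
defmodule EtsSweeper do
  use GenServer

  # Deletes every row whose expires_at is earlier than `now`; returns the count removed.
  # The table must be :public (or owned by the caller) for the delete to be allowed.
  def sweep(table, now \\ System.monotonic_time(:millisecond)) do
    match_spec = [{{:_, :_, :"$1"}, [{:<, :"$1", now}], [true]}]
    :ets.select_delete(table, match_spec)
  end

  def start_link(opts), do: GenServer.start_link(__MODULE__, opts)

  @impl true
  def init(opts) do
    state = %{table: Keyword.fetch!(opts, :table), interval: Keyword.get(opts, :interval, 60_000)}
    schedule(state.interval)
    {:ok, state}
  end

  @impl true
  def handle_info(:sweep, state) do
    sweep(state.table)
    schedule(state.interval)
    {:noreply, state}
  end

  defp schedule(interval), do: Process.send_after(self(), :sweep, interval)
end
```

Using :ets.select_delete/2 keeps the scan inside ETS, so expired rows are removed without copying the table into the sweeper process.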

By carefully monitoring your cache and implementing suitable expiration policies, you can ensure that your Phoenix application delivers fresh content efficiently. Remember to periodically review and adjust your strategies based on the evolving needs of your application.

Continue reading to learn about load testing your caching strategy with LoadForge in the next section.

In this guide, we have delved deep into various caching strategies to enhance the performance of Phoenix (Elixir) applications. Caching is a critical aspect of web application optimization, and by efficiently implementing different caching techniques, you can significantly reduce server load, improve response times, and provide a smoother user experience.

Importance of Caching:

Caching Basics:

Built-in Caching Solutions in Phoenix:

HTTP Caching with Plug:

Use ETag and Cache-Control headers to manage how browsers cache your content:

```elixir
# Inside a controller action (Plug.Conn is imported there)
conn = put_resp_header(conn, "cache-control", "max-age=3600, public")
```

In-Memory Caching with ETS:

```elixir
:ets.new(:my_cache, [:named_table, read_concurrency: true])
```

Integrating External Caching Stores:

Caching Static Assets:

Fragment Caching in Templates:

```elixir
# cache/2 is a hypothetical helper; Phoenix has no built-in fragment cache
cache(@conn, "fragment_key") do
  render(@conn, "some_template.html")
end
```

Database Query Caching:

Testing Cached Content:

Monitoring and Cache Expiration:

Load Testing with LoadForge:

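```python
# LoadForge tests are Locust scripts; the user count (e.g. 1000), duration
# (e.g. 300 s) and host (e.g. https://myphoenixapp.test) are set in the test settings.
from locust import HttpUser, task, between


class CachedPageUser(HttpUser):
    wait_time = between(1, 3)

    @task
    def index(self):
        # Repeatedly request a cached endpoint
        self.client.get("/")
```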

Optimizing your caching strategy in Phoenix requires a comprehensive understanding of the different caching mechanisms available and how to apply them effectively. By employing the techniques detailed in this guide, you can ensure that your application remains responsive and efficient, even under substantial load. Remember to continuously monitor, test, and tweak your caching settings as your application evolves to maintain optimal performance. Leveraging robust load testing tools like LoadForge can be instrumental in achieving a highly scalable and reliable caching strategy.

Adopting these caching strategies will empower your Phoenix application to deliver superior performance and a seamless user experience, ultimately contributing to the success and scalability of your web application.