Introduction to FastAPI

FastAPI is a modern, high-performance web framework for building APIs with Python, based on standard Python type hints. It aims to be easy to use while remaining powerful enough for robust, production-grade applications that can be built quickly. Its rich feature set and fast development workflow have made it one of the most popular Python web frameworks.

Key Features of FastAPI

- Fast to Code: FastAPI enables rapid development by reducing the amount of boilerplate code needed. Developers can build APIs quickly and get interactive documentation automatically.

- Fast Execution: As its name suggests, FastAPI is very fast. It is built on top of Starlette for the web parts and Pydantic for the data parts, and it takes advantage of asynchronous Python (async/await).

- Based on Standards: FastAPI is built around two open standards: OpenAPI for describing the API (paths, parameters, request bodies, security, and documentation) and JSON Schema for data models.

- Type Hints and Data Validation: FastAPI uses Python's type hints to validate request data automatically, leading to fewer errors and more reliable code.

- Interactive Documentation: FastAPI generates an OpenAPI schema (with JSON Schema for your models) and serves it through automatically generated, interactive API docs (Swagger UI and ReDoc).

- Dependency Injection: FastAPI provides a powerful yet simple dependency injection system, which makes testing and modular design straightforward.

Why FastAPI is a Popular Choice

- High Performance: For I/O-bound workloads, FastAPI's asynchronous request handling lets a single process serve many concurrent requests, and benchmarks generally show higher throughput than traditional synchronous deployments of Flask or Django. This matters for applications that require high throughput.

- Ease of Use: With built-in support for data validation, serialization, and comprehensive documentation, FastAPI reduces the complexity of building modern APIs.

- Excellent Community and Ecosystem: FastAPI has a thriving community and growing ecosystem, with numerous third-party extensions, tools, and libraries readily available.

- Type Safety: The extensive use of type hints helps catch bugs early in development, leading to more reliable and maintainable code.

Here's a simple example of a FastAPI application:

from typing import Optional

from fastapi import FastAPI

app = FastAPI()

@app.get("/")
def read_root():
    return {"Hello": "World"}

@app.get("/items/{item_id}")
def read_item(item_id: int, q: Optional[str] = None):
    return {"item_id": item_id, "q": q}

Once the application is served (for example with `uvicorn main:app`), FastAPI automatically provides interactive API documentation at /docs (Swagger UI) and /redoc (ReDoc).

The Importance of Load Testing

A fast framework does not make an application immune to overload: real capacity depends on your endpoints, database, and infrastructure, so it is important to find your application's limits before your users do. High traffic volumes, if not prepared for, can lead to unexpected downtime and performance degradation. Load testing verifies that your FastAPI application can sustain peak loads and reveals potential bottlenecks and scalability issues.

In this guide, we will delve into specific strategies and tools, particularly LoadForge, that can be leveraged to comprehensively load test your FastAPI applications. By doing so, you can ensure your application not only meets but exceeds performance expectations under various load conditions.

What is Load Testing?

Load testing is a critical process in software development that involves simulating a large number of users accessing your application simultaneously to assess its performance under high traffic conditions. This simulation helps to determine how well your application can handle high volumes of traffic and identifies potential performance bottlenecks.
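At its core, a load generator is many concurrent clients timing their requests. The standard-library sketch below illustrates the idea only (it is not how LoadForge works internally): it starts a throwaway local HTTP server as a stand-in for your FastAPI app, runs 10 virtual users that each send 5 requests back to back, and records the latency and status code of every request.

```python
import threading
import time
import urllib.request
from concurrent.futures import ThreadPoolExecutor
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer

# Talk to the target directly, ignoring any proxy settings in the environment
_OPENER = urllib.request.build_opener(urllib.request.ProxyHandler({}))


class PongHandler(BaseHTTPRequestHandler):
    """Stand-in target that always answers 200 with a small JSON body."""

    def do_GET(self):
        body = b'{"message": "pong"}'
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, fmt, *args):
        pass  # silence the default per-request logging


class TargetServer(ThreadingHTTPServer):
    request_queue_size = 64  # allow a burst of simultaneous connections


def one_request(url):
    """Send one GET and return (latency_seconds, status_code)."""
    start = time.perf_counter()
    with _OPENER.open(url, timeout=5) as resp:
        resp.read()
        status = resp.status
    return time.perf_counter() - start, status


def simulate_users(url, users, requests_per_user):
    """Run `users` concurrent virtual users, each sending requests back to back."""

    def user_session(_):
        return [one_request(url) for _ in range(requests_per_user)]

    with ThreadPoolExecutor(max_workers=users) as pool:
        sessions = list(pool.map(user_session, range(users)))
    return [sample for session in sessions for sample in session]


server = TargetServer(("127.0.0.1", 0), PongHandler)  # port 0: let the OS pick a free port
threading.Thread(target=server.serve_forever, daemon=True).start()
url = f"http://127.0.0.1:{server.server_address[1]}/ping"

samples = simulate_users(url, users=10, requests_per_user=5)
server.shutdown()
server.server_close()

latencies_ms = [latency * 1000 for latency, _ in samples]
print(f"{len(samples)} requests, slowest took {max(latencies_ms):.1f} ms")
```

Dedicated tools add what this sketch lacks: pacing and think time, ramp-up control, distributed load generation, and live reporting.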

Importance of Load Testing for FastAPI

FastAPI, known for its speed and efficiency in building APIs using Python, can greatly benefit from load testing. Despite its capabilities, a production-level application built with FastAPI must be able to handle surges in user activity without degrading in performance. Load testing ensures that your FastAPI application maintains reliability, responsiveness, and stability under various load conditions.

By load testing your FastAPI application, you can:

- Identify Performance Bottlenecks: Discover which parts of your application struggle under load, such as slow database queries, inefficient code, or insufficient server resources.

- Optimize Resource Utilization: Understand the resource requirements of your application during peak usage to better allocate hardware and software resources.

- Enhance User Experience: Ensure that your users experience minimal latency and high availability, even during traffic spikes.

- Prevent Downtime: Anticipate potential crashes or failures that could occur under stress, allowing you to proactively address issues before they impact end-users.

Key Metrics in Load Testing

During load testing, several key metrics are measured to provide insights into how your FastAPI application performs under stress. Understanding these metrics is crucial for analyzing the test results and driving performance improvements:

- Response Time: The amount of time it takes for the application to respond to a request. This metric is crucial as it directly affects the user experience. Response time is usually measured in milliseconds (ms) or seconds (s).

- Throughput: The number of requests processed by the application per unit of time, typically measured in requests per second (RPS). Higher throughput indicates better performance and the ability to handle more simultaneous users.

- Error Rates: The percentage of requests that result in errors, such as HTTP status codes 4xx (client errors) and 5xx (server errors). High error rates can indicate issues within the application logic or server capacity problems.

- Concurrency: The number of simultaneous users or requests that the application can handle. This helps in understanding the application's capacity and limits.

- Latency: The delay between the time a request is sent and the time the response is received. While similar to response time, latency specifically refers to the network delay component.

Visual Representation of Key Metrics

Here's a simple table summarizing the key metrics you should focus on during load testing:

| Metric | Description | Importance |
|---|---|---|
| Response Time | Time taken to respond to a request | Direct impact on user experience |
| Throughput | Number of requests processed per second | Indicates scalability and performance under heavy load |
| Error Rates | Percentage of failed requests | Reveals stability and reliability of the application |
| Concurrency | Number of simultaneous users or requests | Helps determine the application's capacity |
| Latency | Network delay in request-response | Critical for understanding delays due to network issues |
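The metrics in the table can all be derived from raw per-request samples. A minimal sketch, assuming each sample records its latency and HTTP status code (the `Sample` type is hypothetical, and status 0 stands for a connection failure). Average concurrency is estimated with Little's Law: concurrency ≈ throughput × average response time.

```python
from dataclasses import dataclass


@dataclass
class Sample:
    latency_ms: float  # time from sending the request to receiving the full response
    status: int        # HTTP status code; 0 denotes a connection failure (assumption)


def summarize(samples, test_duration_s):
    """Compute headline load-test metrics from raw per-request samples."""
    total = len(samples)
    errors = sum(1 for s in samples if s.status == 0 or s.status >= 400)
    avg_ms = sum(s.latency_ms for s in samples) / total
    rps = total / test_duration_s
    return {
        "requests": total,
        "throughput_rps": rps,
        "error_rate_pct": 100.0 * errors / total,
        "avg_response_ms": avg_ms,
        "max_response_ms": max(s.latency_ms for s in samples),
        # Little's Law: average requests in flight = arrival rate x time in system
        "avg_concurrency": rps * avg_ms / 1000,
    }


# Hypothetical samples collected over a 2-second window
samples = [Sample(120, 200), Sample(80, 200), Sample(200, 500), Sample(100, 200)]
metrics = summarize(samples, test_duration_s=2)
print(metrics)
```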

Example: Measuring Response Time in FastAPI

To give a practical perspective, here’s an example of measuring response time using a basic FastAPI application:

import time

from fastapi import FastAPI

app = FastAPI()

@app.get("/ping")
async def ping():
    # perf_counter() is a monotonic, high-resolution clock suited to measuring durations
    start_time = time.perf_counter()
    result = {"message": "pong"}
    result["response_time"] = time.perf_counter() - start_time
    return result

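This simple /ping endpoint returns a "pong" message along with the time spent inside the handler. Note that this covers only the handler body, not routing, validation, serialization, or network transfer, so the value will be close to zero; end-to-end response times must be measured by the load-testing client. The same technique can be extended to time expensive operations inside real endpoints during load testing.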

By understanding and analyzing these metrics, you can make informed decisions on optimizing your FastAPI application to handle higher loads efficiently and effectively.

Why Choose LoadForge for Load Testing?

When it comes to ensuring that your FastAPI application can handle the demands of real-world traffic, choosing the right load testing tool is crucial. LoadForge stands out as a premier choice for several compelling reasons:

Ease of Use

One of the primary advantages of LoadForge is its user-friendly interface and ease of use. Setting up and initiating load tests is straightforward, even for those who are relatively new to load testing. The intuitive dashboard allows you to quickly configure test scenarios, monitor performance metrics in real-time, and analyze results after the tests are completed.

Here's an example of how simple it is to create a basic load test scenario:

{
  "test_name": "Basic FastAPI Load Test",
  "requests": [
    {
      "method": "GET",
      "url": "/api/v1/resource",
      "headers": {
        "Content-Type": "application/json"
      }
    }
  ],
  "load_profile": {
    "initial_load": 50,
    "peak_load": 500,
    "duration": "10m"
  }
}

Scalability

LoadForge is designed to scale with your needs, whether you're testing a small API or a complex system with thousands of concurrent users. The platform can simulate a wide range of traffic scenarios, helping you understand how your FastAPI application responds under different load conditions. This scalability ensures that you're ready to handle sudden traffic spikes or sustained high traffic volumes.

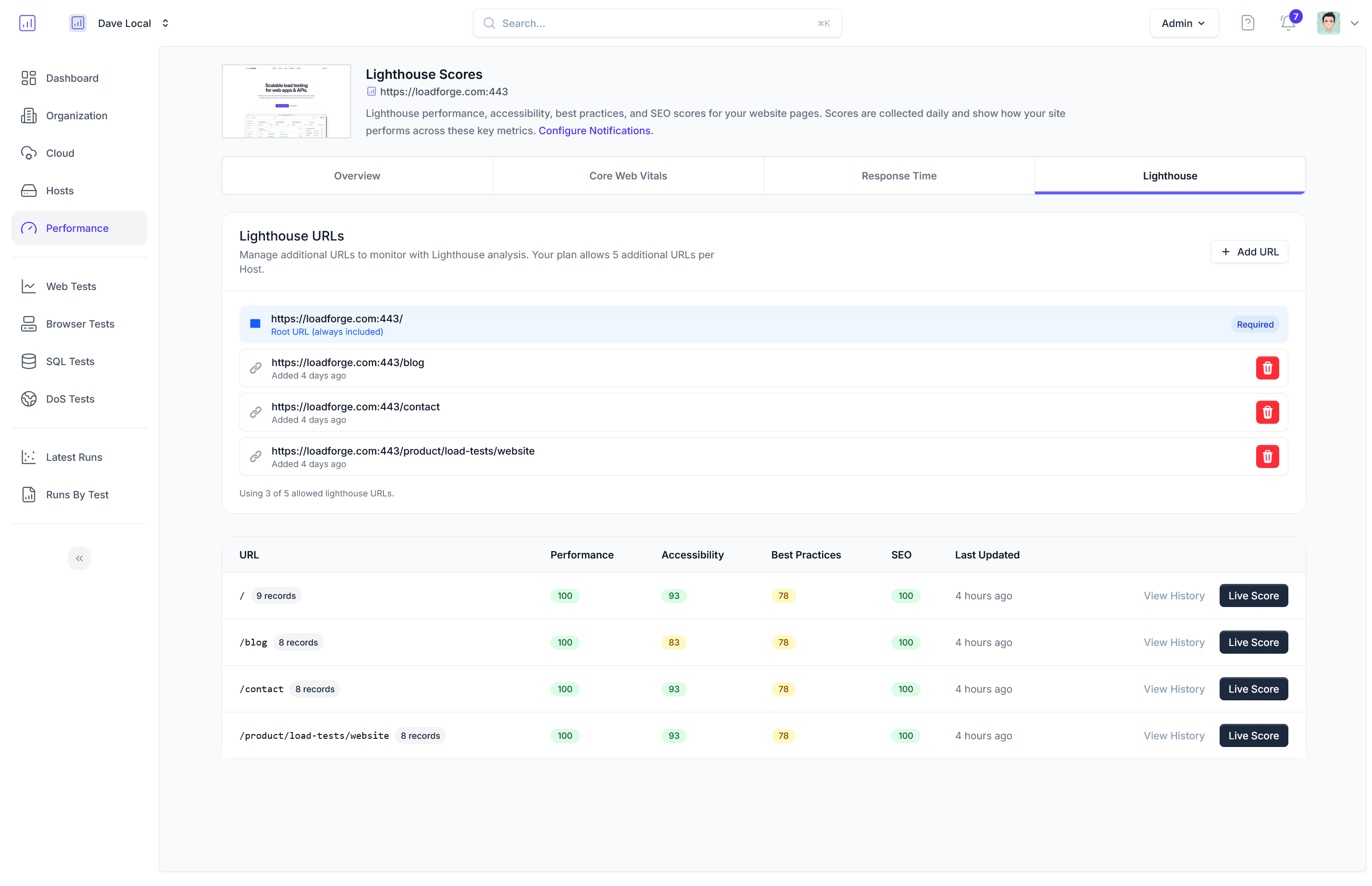

Detailed Analytics

Detailed analytics and reporting are key features of LoadForge that set it apart. After running a load test, you get comprehensive insights into various performance metrics such as response times, error rates, and throughput. The analytics dashboard lets you drill down into these metrics to identify performance bottlenecks and areas needing improvement.

LoadForge provides visualizations such as:

- Response Time Distribution: Graphs showing the spread of response times, helping you understand the impact of load on performance.

- Error Rate Tracking: Charts that help you identify specific error responses and their frequency.

- Throughput Analysis: Metrics on the number of requests handled per second, which is crucial for understanding your API's capacity.

Integrations with Development Tools

LoadForge seamlessly integrates with various tools commonly used in the development lifecycle. This includes CI/CD platforms like Jenkins, GitHub Actions, and GitLab CI, as well as monitoring tools like Prometheus and Grafana. These integrations allow you to incorporate load testing within your continuous delivery pipeline, ensuring that performance testing becomes an integral part of your development and deployment processes.

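For example, integrating LoadForge with a CI/CD pipeline might look like this in a Jenkinsfile, using the httpRequest step from the Jenkins HTTP Request plugin (the endpoint and payload are illustrative; check LoadForge's API documentation for the exact format):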

pipeline {
    agent any
    stages {
        stage('Load Test') {
            steps {
                script {
                    def response = httpRequest(
                        url: 'https://api.loadforge.io/test',
                        httpMode: 'POST',
                        contentType: 'APPLICATION_JSON',
                        customHeaders: [
                            // maskValue keeps the API key out of the build log
                            [name: 'Authorization', value: "Bearer ${env.LOADFORGE_API_KEY}", maskValue: true]
                        ],
                        requestBody: '''
                            {
                              "test_name": "CI/CD Pipeline FastAPI Load Test",
                              "load_profile": { "initial_load": 50, "peak_load": 300, "duration": "5m" }
                            }
                        '''
                    )
                    echo "LoadForge test response: ${response.content}"
                }
            }
        }
    }
}

Summary

LoadForge offers a powerful combination of ease of use, scalability, detailed analytics, and seamless integration with your existing tools. These features make it an optimal choice for load testing your FastAPI applications, helping you ensure that your APIs are robust and performant, even under the most demanding conditions.

By choosing LoadForge, you leverage a tool that simplifies load testing while providing deep insights and extensive support for your development workflow.

Setting Up FastAPI for Load Testing

Before diving into load testing your FastAPI application using LoadForge, it is crucial to ensure your environment is properly set up for accurate and efficient tests. This section provides a step-by-step guide to preparing your FastAPI application, setting up necessary dependencies, and configuring your project.

Step 1: Install FastAPI and Uvicorn

First, you'll need to install FastAPI along with Uvicorn, an ASGI server for running your application. Use the following pip command to install them:

pip install fastapi uvicorn

Step 2: Create a Simple FastAPI Application

Create a basic FastAPI application to serve as your load testing target. This minimal example includes a single endpoint for demonstration purposes:

from fastapi import FastAPI

app = FastAPI()

@app.get("/")
async def read_root():
    return {"message": "Hello World"}

if __name__ == "__main__":
    import uvicorn
    uvicorn.run(app, host="0.0.0.0", port=8000)

Save this code in a file named main.py.

Step 3: Set Up the Environment

Ensure your development environment is prepared for testing. This includes setting up a virtual environment, making sure the network configuration is stable, and similar preparations:

- Create a Virtual Environment (ideally before installing the packages from Step 1, so that they are installed into it):

python -m venv env
source env/bin/activate # On Windows use `env\Scripts\activate`

- Record Requirements:

Save your dependencies to a requirements.txt file for easy setup on different machines:

pip freeze > requirements.txt

To install the same dependencies later, use:

pip install -r requirements.txt

- Network Configuration:

- Ensure that your application is accessible from where LoadForge will run its tests. LoadForge generates load from its own infrastructure, so an application reachable only on your local machine cannot be tested.

- If the application is hosted on a machine you control, confirm that firewalls or other security software allow traffic on the testing port (Uvicorn's default is 8000).

Step 4: Configure the FastAPI Application for Testing

Before running the load tests, make sure your application is configured to mimic production as closely as possible:

- Environment Variables:

Use environment variables to differentiate between production and testing settings:

import os

from fastapi import FastAPI

app = FastAPI()
environment = os.getenv("ENVIRONMENT", "development")

@app.get("/")
async def read_root():
    return {"environment": environment, "message": "Hello World"}

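- Database Mocking/Stubbing (if applicable):

If your API interacts with a database, point it at a dedicated test database, ideally seeded with production-like data, so that the test never touches production data. Replacing the database with a stub removes it from the measurement entirely, so use stubs only when you deliberately want to isolate the application layer:

from unittest.mock import Mock

db = Mock()
# Give the stub a JSON-serializable return value; a bare Mock object does not encode to meaningful JSON
db.get_item.return_value = {"name": "Test item"}

@app.get("/items/{item_id}")
async def read_item(item_id: int):
    item = db.get_item(item_id)
    return {"item_id": item_id, "item": item}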

- Logging Configuration:

Adjust logging levels to capture performance-related logs without overwhelming the output:

import logging

logging.basicConfig(level=logging.INFO)
logger = logging.getLogger("fastapi")

@app.get("/log")
async def test_logging():
    logger.info("Log endpoint accessed")
    return {"message": "Logging is configured"}

Step 5: Running the Application

Ensure your application is running and accessible for LoadForge to start load testing:

uvicorn main:app --host 0.0.0.0 --port 8000
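When a load test is started from a script or CI job, it helps to wait until the server actually answers before generating load. A standard-library sketch; the URL, timeout, and polling interval are assumptions to adjust for your setup.

```python
import http.client
import time
import urllib.request


def wait_until_ready(url, timeout_s=30.0, interval_s=0.5):
    """Poll `url` until it answers with a 2xx status or `timeout_s` elapses."""
    deadline = time.monotonic() + timeout_s
    while time.monotonic() < deadline:
        try:
            with urllib.request.urlopen(url, timeout=interval_s) as resp:
                if 200 <= resp.status < 300:
                    return True
        except (OSError, http.client.HTTPException):
            # Covers refused connections, timeouts, and HTTP errors (URLError is an OSError)
            pass
        time.sleep(interval_s)
    return False
```

For example, call `wait_until_ready("http://127.0.0.1:8000/")` right after launching Uvicorn and abort the run if it returns False.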

With your FastAPI application set up and running, you are now ready to proceed with creating load testing scenarios in LoadForge.

By following these steps, you ensure that your FastAPI application is adequately prepared for load testing, providing a reliable environment to gain actionable insights into your application's performance. The next section will guide you through creating specific load test scenarios using LoadForge, simulating various user behaviors and request types to thoroughly evaluate your FastAPI application's robustness.

Creating LoadForge Test Scenarios for FastAPI

Creating effective load testing scenarios in LoadForge involves simulating realistic user behaviors and configuring different types of requests that your FastAPI application will handle. This section will guide you through setting up these scenarios step-by-step.

Step 1: Understand Your Endpoints

Before diving into LoadForge, it's crucial to understand the endpoints and user interactions within your FastAPI application. List the routes (e.g., /users, /items/{item_id}, /auth/login) and the HTTP methods (GET, POST, PUT, DELETE, etc.) that each endpoint supports.

Step 2: Setting Up LoadForge

If you haven't already, sign up for a LoadForge account and set up your testing environment. Ensure your FastAPI application is deployed and accessible from the internet.

Step 3: Creating a New Test Scenario

- Log in to LoadForge:

Navigate to your LoadForge dashboard and log in with your credentials.

- Create a New Scenario:

Select the option to create a new test scenario. You will be prompted to configure various parameters.

- Define User Behavior:

Specify the sequence of requests that a virtual user will perform.

Example - Defining Requests

Consider an example where a user visits the homepage, logs in, and fetches their profile information.

{
  "requests": [
    {
      "method": "GET",
      "url": "https://your-fastapi-app.com/",
      "headers": {}
    },
    {
      "method": "POST",
      "url": "https://your-fastapi-app.com/auth/login",
      "headers": {
        "Content-Type": "application/json"
      },
      "body": {
        "username": "testuser",
        "password": "testpassword"
      }
    },
    {
      "method": "GET",
      "url": "https://your-fastapi-app.com/users/profile",
      "headers": {
        "Authorization": "Bearer <token>"
      }
    }
  ]
}
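The `Bearer <token>` value above is a placeholder: in a real run, each virtual user must read the token from its own login response and send it on later requests. The sketch below expresses that journey in Python against a pluggable `send` function so it can be exercised without a server. The `send` signature, the `access_token` field name, and the `fake_send` stand-in are all assumptions to adapt to your API and load-testing tool.

```python
from typing import Callable, Dict, Optional, Tuple

# Hypothetical transport: (method, url, headers, json_body) -> (status_code, json_response).
# In practice this would wrap requests, httpx, or your load-testing tool's HTTP client.
Send = Callable[[str, str, Dict[str, str], Optional[dict]], Tuple[int, dict]]

BASE_URL = "https://your-fastapi-app.com"


def _require(status: int, step: str) -> None:
    if status != 200:
        raise RuntimeError(f"{step} returned HTTP {status}")


def user_journey(send: Send, username: str, password: str) -> dict:
    """Homepage, then login, then profile, reusing the token issued at login."""
    status, _ = send("GET", f"{BASE_URL}/", {}, None)
    _require(status, "homepage")

    status, body = send(
        "POST",
        f"{BASE_URL}/auth/login",
        {"Content-Type": "application/json"},
        {"username": username, "password": password},
    )
    _require(status, "login")
    token = body["access_token"]  # assumed field name in the login response

    status, profile = send(
        "GET", f"{BASE_URL}/users/profile", {"Authorization": f"Bearer {token}"}, None
    )
    _require(status, "profile")
    return profile


def fake_send(method, url, headers, body):
    """In-memory stand-in for the API, used only to exercise the journey offline."""
    if url.endswith("/auth/login"):
        return 200, {"access_token": "token-123"}
    if url.endswith("/users/profile"):
        authorized = headers.get("Authorization") == "Bearer token-123"
        return (200, {"username": "testuser"}) if authorized else (401, {})
    return 200, {}


print(user_journey(fake_send, "testuser", "testpassword"))  # {'username': 'testuser'}
```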

Step 4: Setting Up Different Types of Requests

Within LoadForge, you can set up various types of requests to mimic real-world usage patterns:

- GET Requests:

Fetch data from the server.

{
  "method": "GET",
  "url": "https://your-fastapi-app.com/items"
}

- POST Requests:

Send data to the server to create a new resource.

{
  "method": "POST",
  "url": "https://your-fastapi-app.com/items",
  "body": {
    "name": "New Item",
    "description": "This is a new item."
  }
}

- PUT Requests:

Update an existing resource.

{
  "method": "PUT",
  "url": "https://your-fastapi-app.com/items/1",
  "body": {
    "name": "Updated Item",
    "description": "This item has been updated."
  }
}

- DELETE Requests:

Remove a resource.

{
  "method": "DELETE",
  "url": "https://your-fastapi-app.com/items/1"
}

Step 5: Configuring Load Profiles

Determine the load profile for your test scenario:

- Users: Specify the number of concurrent virtual users.

- Ramp-Up Period: Define how quickly users ramp up to the specified number.

- Test Duration: Set the length of time for which the test will run.

Step 6: Adding Assertions

Add assertions to verify the response status codes and body content. This ensures the application behaves as expected under load.

{
  "assertions": [
    {
      "type": "status_code",
      "expected": 200
    },
    {
      "type": "json_path",
      "expression": "$.id",
      "expected": 1
    }
  ]
}
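To make the semantics of these two rule types concrete, here is a local evaluator. It is a sketch, not LoadForge's implementation: it assumes `json_path` uses only the simple `$.field.subfield` form and that every rule is an equality check. The same logic is handy for verifying a response by hand before running the full test.

```python
def resolve_json_path(document, expression):
    """Resolve a simple dotted JSONPath such as '$.id' or '$.user.name'.

    Only the '$.a.b' subset is supported; wildcards, filters and array
    indexes from full JSONPath are deliberately left out of this sketch.
    """
    if not expression.startswith("$"):
        raise ValueError(f"unsupported expression: {expression}")
    value = document
    for key in filter(None, expression[1:].split(".")):
        value = value[key]
    return value


def check_assertions(status_code, body, assertions):
    """Return a list of human-readable failures (an empty list means all passed)."""
    failures = []
    for rule in assertions:
        if rule["type"] == "status_code":
            actual = status_code
        elif rule["type"] == "json_path":
            try:
                actual = resolve_json_path(body, rule["expression"])
            except (KeyError, TypeError):
                actual = None  # path missing from the response
        else:
            failures.append(f"unknown assertion type {rule['type']!r}")
            continue
        if actual != rule["expected"]:
            failures.append(f"{rule['type']}: expected {rule['expected']!r}, got {actual!r}")
    return failures


assertions = [
    {"type": "status_code", "expected": 200},
    {"type": "json_path", "expression": "$.id", "expected": 1},
]
print(check_assertions(200, {"id": 1}, assertions))  # []
print(check_assertions(500, {"id": 2}, assertions))
```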

Example of a Complete Load Test Scenario

Here's an example JSON to upload or configure in LoadForge:

{
  "name": "Sample FastAPI Load Test",
  "requests": [
    {
      "method": "GET",
      "url": "https://your-fastapi-app.com/"
    },
    {
      "method": "POST",
      "url": "https://your-fastapi-app.com/auth/login",
      "headers": {
        "Content-Type": "application/json"
      },
      "body": { "username": "testuser", "password": "testpassword" }
    },
    {
      "method": "GET",
      "url": "https://your-fastapi-app.com/users/profile",
      "headers": { "Authorization": "Bearer <token>" }
    }
  ],
  "load_profile": {
    "users": 100,
    "ramp_up": 10,
    "duration": 3600
  },
  "assertions": [
    {
      "type": "status_code",
      "expected": 200
    }
  ]
}

By following these steps, you'll create a robust test scenario in LoadForge tailored to your FastAPI application. The next sections will cover executing these tests and analyzing the results to continuously optimize your application performance.

Running Your First Load Test with LoadForge

Once your FastAPI application is ready and you have set up LoadForge, it’s time to run your first load test. This section provides a step-by-step guide on how to execute load tests using LoadForge, monitor the tests, and interpret the preliminary results.

Step 1: Prerequisites

Before running your first load test, ensure you have completed the following:

- FastAPI Application: A running FastAPI application that you wish to test.

- LoadForge Account: A valid LoadForge account.

- Environment Prepared: Any dependencies and configurations set up on LoadForge and on your FastAPI application.

Step 2: Creating a LoadForge Test Scenario

To begin load testing, you need to create a load testing scenario in LoadForge:

- Log in to LoadForge: Sign in to your LoadForge account and navigate to the dashboard.

- Create New Test: Click on the "Create New Test" button.

- Name Your Test: Provide a descriptive name for your test (e.g., "FastAPI Load Test").

- Define Test Parameters:

  - Endpoint: Enter the endpoint of your FastAPI application you wish to test (e.g., https://your-fastapi-app/api/v1/resource).

  - Load Profile: Configure how many virtual users (VUs) you want to simulate (e.g., 100 VUs).

  - Duration: Specify the duration for which the test should run (e.g., 10 minutes).

- Add Requests:

  - GET Request: If you want to test a GET endpoint, add it in the "Requests" section.

  - POST Request: Add a POST endpoint and specify the payload you want to send, for example:

{
  "username": "testuser",
  "email": "testuser@example.com"
}

Step 3: Configuring Load Profiles

Configure how LoadForge will generate the load during the test:

- Ramp-Up Time: Set up a ramp-up time to gradually increase the load, giving your system time to adapt.

- Steady State: Define a steady state during which the maximum load is maintained.

- Ramp-Down Time: Gradually reduce the load at the end of the test to prevent abrupt disconnections.
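The three phases combine into a trapezoid-shaped load profile. A sketch that computes the target number of virtual users at a given second, assuming linear ramps; the function and parameter names are illustrative, not LoadForge settings.

```python
def users_at(t, peak_users, ramp_up_s, steady_s, ramp_down_s):
    """Target number of virtual users at second `t` of a ramp-up/steady/ramp-down test."""
    if t < 0:
        return 0
    if t < ramp_up_s:  # linear climb from 0 to the peak
        return round(peak_users * t / ramp_up_s)
    t -= ramp_up_s
    if t < steady_s:  # hold the peak
        return peak_users
    t -= steady_s
    if t < ramp_down_s:  # linear descent back to 0
        return round(peak_users * (1 - t / ramp_down_s))
    return 0


# 100 users, 60 s ramp-up, 5 min steady state, 30 s ramp-down
for second in (0, 30, 60, 200, 375, 390):
    print(second, users_at(second, 100, 60, 300, 30))
```

With these numbers, users are added at 100 / 60 ≈ 1.7 per second during ramp-up; a gentler slope gives caches, connection pools, and autoscalers time to warm up before the steady state is measured.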

Step 4: Running the Load Test

Follow these steps to execute the load test:

- Start the Test: Review your test settings and click on the "Start Test" button.

- Monitor the Test: Once the test begins, you can monitor live metrics including response times, throughput, and error rates via the LoadForge dashboard.

- Interact with the Test: Depending on the live metrics, you can make adjustments or even stop the test if necessary.

Step 5: Stopping the Test

At the end of your test duration, LoadForge will automatically stop the test. However, you can manually stop it if needed by clicking on the "Stop Test" button on the dashboard.

Best Practices for Meaningful Results

To ensure that your load test provides meaningful and actionable insights, keep the following best practices in mind:

- Isolate Environment: Run tests in a controlled and isolated environment to minimize external noise affecting your results.

- Repeat Tests: Conduct multiple test runs to ensure consistency in your results.

- Incremental Load Testing: Start with a smaller load and gradually increase it to identify the breaking points of your application.

- Monitor System Resources: Keep an eye on your system's CPU, memory, and network usage during tests to identify hardware bottlenecks.

By following these steps and best practices, you will execute your first load test with LoadForge effectively, allowing you to gather crucial performance data for your FastAPI application. Next, we'll delve into analyzing these results to identify performance bottlenecks and optimize your application.

Analyzing Load Test Results

Once you have executed your load tests using LoadForge, the next crucial step is to analyze the load test results. Proper interpretation of these results is fundamental to understanding the performance dynamics of your FastAPI application. This section will guide you through reading the load test reports generated by LoadForge, covering vital metrics such as response times, error rates, throughput, and identifying performance bottlenecks.

Key Metrics to Observe

LoadForge provides a comprehensive suite of metrics that offer insights into your application's performance. Below are the primary metrics you should focus on:

1. Response Times:

- Average Response Time: The mean time taken to process a request.

- 90th Percentile Response Time: The response time below which 90% of the requests fall.

- Maximum Response Time: The longest time taken to process a request.

2. Error Rates:

- HTTP Error Codes: Number of errors per HTTP status code (e.g., 404, 500).

- Failures: Total number of failed requests.

3. Throughput:

- Requests Per Second (RPS): Number of requests served per second.

- Data Transferred: Amount of data sent and received during the test.

Analyzing Response Times

Response times are crucial for understanding how quickly your FastAPI application can process requests. Here's how to interpret these metrics:

- Average Response Time offers a general sense of performance but can be skewed by outliers.

- 90th Percentile Response Time is more useful for identifying the upper limit of typical response times, helping you understand the experience of most users.

- Maximum Response Time identifies worst-case scenarios and can highlight significant performance issues that need addressing.

Example:

Average Response Time: 300ms

90th Percentile Response Time: 450ms

Maximum Response Time: 1200ms

If your 90th percentile response time is exceedingly high, it could indicate that a significant portion of your users is experiencing slow responses, necessitating deeper investigation into potential causes such as:

- Inefficient code.

- Database latency.

- Network issues.
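The gap between the average and the percentiles is easy to see with a skewed distribution. A sketch using the nearest-rank percentile definition (load-testing tools may interpolate slightly differently) on hypothetical latencies:

```python
import math


def percentile(values, pct):
    """Nearest-rank percentile: smallest value with at least pct% of samples at or below it."""
    ordered = sorted(values)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


# Hypothetical response times in ms: mostly fast, with a few slow outliers
latencies = [100] * 85 + [400] * 10 + [3000] * 5

print("average:", sum(latencies) / len(latencies))  # average: 275.0
print("median:", percentile(latencies, 50))         # median: 100
print("p90:", percentile(latencies, 90))            # p90: 400
print("p99:", percentile(latencies, 99))            # p99: 3000
```

Here the 275 ms average describes almost nobody: 85% of requests took 100 ms, while the slowest 5% took 3 seconds.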

Reviewing Error Rates

Error rates help you gauge the reliability of your FastAPI application. High error rates suggest that many requests are failing, which could be due to:

- Application bugs.

- Database connection issues.

- Server resource constraints.

Example:

HTTP 500 Errors: 150

HTTP 404 Errors: 50

Failures: 200

Analyze the distribution of error types to pinpoint the root causes. For instance, numerous HTTP 500 errors could indicate server-side issues, while HTTP 404 errors might hint at misconfigured routes or missing resources.
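Grouping failures by status class (4xx vs 5xx) and by exact code is the first step in that analysis. A sketch over a hypothetical list of status codes that reproduces the example counts above; the 800 successful requests are assumed.

```python
from collections import Counter


def error_breakdown(status_codes):
    """Count failed requests (status >= 400) by status class and by exact code."""
    failed = [code for code in status_codes if code >= 400]
    by_class = Counter(f"{code // 100}xx" for code in failed)
    by_code = Counter(failed)
    return by_class, by_code


# Hypothetical run: 800 successes plus the failures from the example above
codes = [200] * 800 + [500] * 150 + [404] * 50
by_class, by_code = error_breakdown(codes)
error_rate = 100 * sum(by_class.values()) / len(codes)

print(dict(by_class))                    # {'5xx': 150, '4xx': 50}
print(by_code.most_common(1))            # [(500, 150)]
print(f"error rate: {error_rate:.1f}%")  # error rate: 20.0%
```

Requests that fail without any HTTP response (timeouts, refused connections) have no status code and should be counted separately.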

Understanding Throughput

Throughput measures how many requests your application can handle within a given timeframe. This metric is vital for assessing whether your FastAPI application can sustain the desired load.

- Requests Per Second (RPS): Higher values indicate better performance under load.

- Data Transferred: Helps you understand the network load and can be used to plan for bandwidth requirements.

Example:

Requests Per Second: 2500 RPS

Data Sent: 500 MB

Data Received: 400 MB
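Converting transferred volume into bandwidth makes it comparable with your network capacity. A sketch assuming the example above came from a 10-minute (600-second) test, since the duration is not stated, and using decimal units (1 MB = 1,000,000 bytes = 8 Mbit):

```python
def megabits_per_second(megabytes, duration_s):
    """Average bandwidth in Mbit/s for `megabytes` moved over `duration_s` seconds."""
    return megabytes * 8 / duration_s


def avg_bytes_per_request(megabytes, rps, duration_s):
    """Average payload per request, assuming a steady request rate."""
    return megabytes * 1_000_000 / (rps * duration_s)


duration_s = 600  # assumed test length
print(f"received: {megabits_per_second(400, duration_s):.2f} Mbit/s")  # received: 5.33 Mbit/s
print(f"sent: {megabits_per_second(500, duration_s):.2f} Mbit/s")      # sent: 6.67 Mbit/s
print(f"~{avg_bytes_per_request(400, 2500, duration_s):.0f} bytes received per request")
```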

Identifying Bottlenecks

Identifying performance bottlenecks is the ultimate goal of load testing. Here are some common indicators of bottlenecks:

- High Response Times: Could indicate inefficient code or heavy database operations.

- High Error Rates: May signal issues with application stability or resource limitations.

- Low Throughput: Suggests that your application cannot handle the expected load, necessitating optimization or scaling.
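Low throughput is easiest to diagnose by running the test at several load levels (the incremental approach recommended earlier) and finding where extra users stop producing extra throughput. A sketch with hypothetical results; the 5% growth threshold is an arbitrary assumption.

```python
def find_saturation(steps, min_gain=0.05):
    """Return the first load step where adding users stops raising throughput.

    `steps` is a list of (users, throughput_rps, p90_ms) tuples from runs at
    increasing load. A step is flagged when throughput grows by less than
    `min_gain` (5% by default) over the previous step.
    """
    for (_, prev_rps, _), (users, rps, p90) in zip(steps, steps[1:]):
        if rps < prev_rps * (1 + min_gain):
            return users, rps, p90
    return None


# Hypothetical results from four incremental runs
runs = [(50, 900, 80), (100, 1750, 95), (200, 2400, 180), (400, 2450, 900)]
print(find_saturation(runs))  # (400, 2450, 900)
```

Doubling from 200 to 400 users added only about 2% throughput while p90 latency rose fivefold: the typical signature of a saturated resource such as CPU, database connections, or a downstream service.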

LoadForge Reports

LoadForge provides detailed analytics and visual reports to make this data easily understandable. Utilize graphs and charts to observe trends over time, compare different loads, and visualize performance under varying conditions.

Example LoadForge Report Insights:

- Response Time Trends: See how response times change with increased load.

- Error Rate Distribution: Visualize how errors correlate with specific load conditions.

- Throughput Graphs: Observe how RPS varies during the test.

By systematically analyzing these metrics and utilizing LoadForge's reporting tools, you can gain a comprehensive understanding of your FastAPI application's performance, pinpoint bottlenecks, and make informed decisions for optimization.

Next Steps

After identifying the areas that require improvement, refer to the following sections for strategies on optimizing FastAPI's performance. Continuous load testing using LoadForge, integrated with your CI/CD pipeline, ensures your application maintains its performance standards over time.

This foundation will equip you with the insights needed to enhance your FastAPI application's ability to handle high traffic volumes efficiently and reliably.

Optimizing FastAPI for Better Performance

In this section, we will delve into the strategies and best practices for optimizing your FastAPI application based on the insights gained from LoadForge load testing. Your goal after running load tests is to identify bottlenecks and performance issues, then take concrete steps to enhance the efficiency, responsiveness, and scalability of your application. Key areas of optimization include code refinement, database query tuning, and infrastructure scaling.

Code Optimization

- Asynchronous Programming: Leverage FastAPI's support for async programming to handle high numbers of concurrent requests more efficiently. Inside an async def endpoint, await non-blocking I/O rather than calling blocking functions.

import asyncio

from fastapi import FastAPI

app = FastAPI()

@app.get("/items")
async def read_items():
    # Simulate a non-blocking I/O call (e.g. a database or HTTP request)
    await asyncio.sleep(0.1)
    return {"items": "List of items"}

- Efficient Data Serialization: Use FastAPI's Pydantic models for validation and serialization to ensure quick and efficient data handling.

from pydantic import BaseModel

class Item(BaseModel):
    name: str
    description: str

@app.post("/items/")
async def create_item(item: Item):
    return item

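- Caching: Implement caching strategies to reduce redundant database queries and external API requests. Redis is a common choice; the standalone aioredis package has been merged into redis-py (version 4.2 and later) as redis.asyncio:

import json

import redis.asyncio as redis

cache = redis.from_url("redis://localhost", decode_responses=True)

@app.get("/items/{item_id}")
async def read_item(item_id: int):
    key = f"item_{item_id}"
    cached_item = await cache.get(key)
    if cached_item is not None:
        return json.loads(cached_item)
    item = await fetch_item_from_db(item_id)  # hypothetical database helper
    await cache.set(key, json.dumps(item), ex=60)  # cache for 60 seconds
    return item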
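The asynchronous programming advice above has one important catch: a blocking call inside an `async def` endpoint stalls the event loop and serializes every request handled by that worker. The standard-library sketch below (not FastAPI-specific) runs ten concurrent "requests" that each wait 0.1 s, once with a non-blocking sleep and once with a blocking one.

```python
import asyncio
import time


async def non_blocking_io():
    await asyncio.sleep(0.1)  # yields to the event loop while waiting


async def blocking_io():
    time.sleep(0.1)  # blocks the whole event loop; nothing else can run meanwhile


async def run_concurrently(handler, n=10):
    """Start `n` copies of `handler` at once and return the elapsed wall time."""
    start = time.perf_counter()
    await asyncio.gather(*(handler() for _ in range(n)))
    return time.perf_counter() - start


fast = asyncio.run(run_concurrently(non_blocking_io))
slow = asyncio.run(run_concurrently(blocking_io))
print(f"non-blocking: {fast:.2f}s, blocking: {slow:.2f}s")  # roughly 0.10s vs 1.00s
```

If you must call a blocking library, declare the endpoint with plain `def` (FastAPI runs such endpoints in a thread pool) or wrap the call with `await asyncio.to_thread(...)` (Python 3.9+). Under load, this single mistake can cap throughput far below what the hardware allows.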

Database Query Tuning

- Indexing: Ensure that your database tables are properly indexed, particularly on columns that are frequently used in query filters and joins.

- Query Optimization: Optimize your SQL queries by avoiding SELECT * and retrieving only the columns you need. Use query optimization tools and techniques, such as your database's EXPLAIN command, to identify slow queries.

SELECT name, description FROM items WHERE id = 1;

- Connection Pooling: Utilize connection pooling to manage database connections efficiently, reducing the overhead of establishing a connection for every request.

from sqlalchemy.ext.asyncio import async_sessionmaker, create_async_engine

DB_URL = "postgresql+asyncpg://user:password@localhost/dbname"

# Keep up to 10 pooled connections, plus up to 10 extra during bursts
engine = create_async_engine(DB_URL, pool_size=10, max_overflow=10)
# async_sessionmaker is the SQLAlchemy 2.0 factory for AsyncSession objects
SessionLocal = async_sessionmaker(engine, autoflush=False, expire_on_commit=False)

async def get_db():
    async with SessionLocal() as session:
        yield session
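Pool settings multiply as you scale out, because every worker process keeps its own pool. A sketch of the worst-case arithmetic for a hypothetical deployment of 3 replicas with 4 Uvicorn workers each, using pool_size=10 and max_overflow=10 (SQLAlchemy's default overflow), compared with PostgreSQL's default max_connections of 100:

```python
def max_db_connections(replicas, workers_per_replica, pool_size, max_overflow):
    """Worst case: every worker process fills its own pool plus overflow."""
    return replicas * workers_per_replica * (pool_size + max_overflow)


needed = max_db_connections(replicas=3, workers_per_replica=4, pool_size=10, max_overflow=10)
print(needed)  # 240

POSTGRES_DEFAULT_MAX_CONNECTIONS = 100  # PostgreSQL's out-of-the-box max_connections
if needed > POSTGRES_DEFAULT_MAX_CONNECTIONS:
    print("Pools can exceed the database limit: shrink them or add a pooler such as PgBouncer")
```

A load test that ramps past this point typically surfaces as a sudden burst of connection errors rather than a gradual slowdown.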

Infrastructure Scaling

- Horizontal Scaling: Scale your application horizontally by adding more instances behind a load balancer. This helps distribute incoming traffic across multiple servers, preventing any single instance from becoming a bottleneck.

- Vertical Scaling: Improve your server's capacity by upgrading hardware resources such as CPU and memory, thus allowing each instance to handle more traffic individually.

- Containerization and Orchestration: Use Docker for containerization and Kubernetes for orchestration to efficiently manage and scale your application services.

# Example Kubernetes Deployment YAML
apiVersion: apps/v1
kind: Deployment
metadata:
  name: fastapi-app
spec:
  replicas: 3
  selector:
    matchLabels:
      app: fastapi-app
  template:
    metadata:
      labels:
        app: fastapi-app
    spec:
      containers:
        - name: fastapi
          image: your-docker-image
          ports:
            - containerPort: 80

- Content Delivery Network (CDN): Use a CDN to distribute static assets, reducing latency by serving content from servers geographically closer to the user.

Conclusion

By systematically addressing these areas — code optimization, database query tuning, and infrastructure scaling — you can significantly enhance the performance of your FastAPI application. Load testing with LoadForge provides invaluable insights into where your application can improve, allowing you to address issues proactively. Following these optimization strategies will ensure your FastAPI application remains performant and scalable under varying loads.

In the next section, we will explore how to integrate continuous load testing with LoadForge into your CI/CD pipeline for ongoing performance monitoring.

Running Continuous Load Testing with LoadForge and FastAPI

Incorporating load testing into your CI/CD pipeline helps ensure that your FastAPI application remains performant as it evolves. Continuous load testing catches performance regressions early, provides an ongoing benchmark for your application's performance, and flags code changes that degrade the user experience under load. In this section, we will explore the benefits of continuous load testing and how to integrate LoadForge with your CI/CD pipeline for ongoing performance monitoring.

Benefits of Continuous Load Testing

- Early Detection of Issues: Running tests automatically with each code change helps identify performance bottlenecks, memory leaks, and other issues early in the development cycle.

- Consistent Performance Benchmarks: Continuous testing provides consistent performance data, making it easier to track improvements or declines over time.

- Informed Decision Making: With regular performance data at hand, stakeholders can make better-informed decisions regarding resource allocation and application scaling.

- Increased Confidence: Knowing that your application can handle expected traffic provides confidence for planning feature rollouts and marketing campaigns.
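Consistent benchmarks depend on computing the same metrics the same way on every run. The sketch below reduces one run to average latency, 95th-percentile latency, throughput, and error rate. It assumes you have per-request records (latency in milliseconds plus a success flag) exported from a test run; the `RequestRecord` type and the nearest-rank percentile definition are illustrative choices, not LoadForge's own report format.

```python
import math
from dataclasses import dataclass


@dataclass
class RequestRecord:
    latency_ms: float  # time from sending the request to receiving the response
    ok: bool           # False for failed requests (e.g. 5xx responses or timeouts)


def percentile(values: list[float], pct: float) -> float:
    """Nearest-rank percentile: the smallest sample with at least pct% of samples at or below it."""
    if not values:
        raise ValueError("percentile of an empty list")
    ordered = sorted(values)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def summarize(records: list[RequestRecord], duration_s: float) -> dict[str, float]:
    """Reduce one test run to the metrics worth tracking from run to run."""
    if not records or duration_s <= 0:
        raise ValueError("need at least one record and a positive duration")
    latencies = [r.latency_ms for r in records]
    failures = sum(1 for r in records if not r.ok)
    return {
        "avg_ms": sum(latencies) / len(latencies),
        "p95_ms": percentile(latencies, 95),
        "throughput_rps": len(records) / duration_s,
        "error_rate": failures / len(records),
    }


# 20 hypothetical requests collected over a 10-second run
records = [RequestRecord(100.0, True)] * 18 + [
    RequestRecord(400.0, True),
    RequestRecord(900.0, False),
]
print(summarize(records, duration_s=10.0))
# {'avg_ms': 155.0, 'p95_ms': 400.0, 'throughput_rps': 2.0, 'error_rate': 0.05}
```

The percentile is reported alongside the average because averages hide tail latency. Whichever percentile definition you pick, using the same one on every run is what keeps the numbers comparable over time.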

Integrating LoadForge with Your CI/CD Pipeline

To integrate LoadForge with your existing CI/CD pipeline, you need to follow several key steps:

- Prepare Your Environment: Ensure that your CI/CD environment has access to the necessary credentials and configurations to run LoadForge tests.

- Create LoadForge Test Scripts: Develop the load testing scenarios that simulate the expected user behavior on your FastAPI application.

- Set Up CI/CD Pipeline Configuration: Integrate the LoadForge test execution into your CI/CD pipeline scripts.
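For the first step, a common pattern is to read the API key from an environment variable populated by the CI system's secret store and to fail fast with a clear message when it is missing. This matters on GitHub Actions in particular: repository secrets are not passed to workflows triggered by pull requests from forks, so the variable arrives empty. A minimal sketch; the function name is hypothetical, the variable name `LOADFORGE_API_KEY` simply matches the secret used in this section's GitHub Actions example, and the Bearer header mirrors that example's `curl` call rather than any documented LoadForge requirement.

```python
import os
from typing import Mapping, Optional


def loadforge_auth_headers(env: Optional[Mapping[str, str]] = None) -> dict[str, str]:
    """Build request headers from LOADFORGE_API_KEY, or fail with a clear error.

    `env` defaults to the real process environment; passing a dict makes
    the function easy to test.
    """
    env = os.environ if env is None else env
    api_key = env.get("LOADFORGE_API_KEY", "").strip()
    if not api_key:
        # Refuse to send an unauthenticated request that would fail later
        # with a less obvious error.
        raise RuntimeError(
            "LOADFORGE_API_KEY is not set; add it as a CI secret before running load tests"
        )
    return {
        "Authorization": f"Bearer {api_key}",
        "Content-Type": "application/json",
    }
```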

Here, we'll provide an example of how you might configure a pipeline using GitHub Actions.

Example: Integrating LoadForge with GitHub Actions

- Set Up Your GitHub Repository:

  - Ensure you have a FastAPI repository set up on GitHub.

  - Create a directory named .github/workflows in your repository if it doesn't already exist.

-

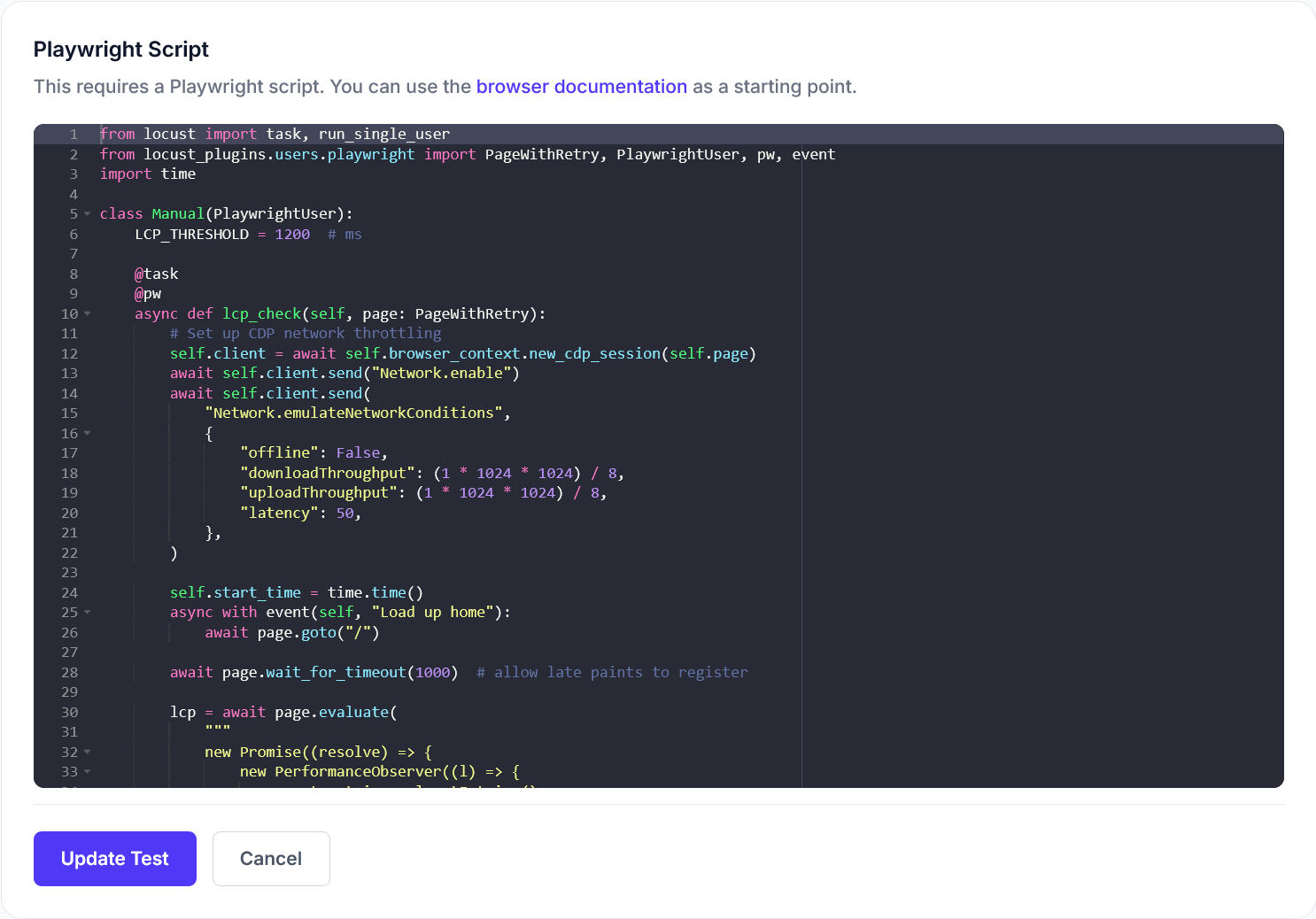

Create Your LoadForge Test Script:

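- Add a GitHub Actions Workflow:

  - Create a new file in the .github/workflows directory, for example loadforge.yml:

name: LoadForge Continuous Load Testing

on:
  push:
    branches:
      - main
  pull_request:
    branches:
      - main

jobs:
  load-testing:
    runs-on: ubuntu-latest
    steps:
      - name: Checkout code
        uses: actions/checkout@v4

      - name: Set up Python
        uses: actions/setup-python@v5
        with:
          python-version: '3.12'

      - name: Install Dependencies
        run: |
          python -m pip install --upgrade pip
          pip install fastapi uvicorn

      - name: Run FastAPI Server
        run: |
          uvicorn myapp:app --host 0.0.0.0 --port 8000 &
          sleep 5

      - name: Run LoadForge Test
        run: |
          curl -X POST "https://api.loadforge.com/v1/start_test" \
            -H "Authorization: Bearer ${{ secrets.LOADFORGE_API_KEY }}" \
            -H "Content-Type: application/json" \
            -d @tests/load_test.yaml

In the above script:

- on (push and pull_request): Triggers the load tests on every push to the main branch and on every pull request that targets main.

- actions/setup-python@v5: Sets up the necessary Python environment.

- pip install fastapi uvicorn: Installs FastAPI and Uvicorn, the ASGI server that runs the application.

- uvicorn myapp:app --host 0.0.0.0 --port 8000 &: Starts the FastAPI server in the background; replace myapp:app with your own module and application object. sleep 5 gives the server a few seconds to start.

- curl -X POST: Sends a POST request to the LoadForge API to start the load test using the configuration specified in tests/load_test.yaml, authenticating with the LOADFORGE_API_KEY repository secret.

Keep in mind that LoadForge generates load from its own cloud infrastructure, while a GitHub-hosted runner does not accept inbound connections from the internet. The host your test configuration targets therefore needs to be publicly reachable, such as a staging deployment, rather than the server started on the runner itself.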
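The workflow's `sleep 5` only guesses how long Uvicorn needs to start; on a slow runner the next step can begin before the server is listening. Polling until the server answers is sturdier. Below is a minimal sketch using only the standard library; the function name, timeouts, and polling interval are assumptions. It relies on FastAPI serving its OpenAPI schema at `/openapi.json` by default, which makes a convenient readiness URL.

```python
import time
import urllib.error
import urllib.request


def wait_for_server(url: str, timeout_s: float = 30.0, interval_s: float = 0.5) -> bool:
    """Poll `url` until the server answers with a status below 500, or give up after timeout_s."""
    deadline = time.monotonic() + timeout_s
    while time.monotonic() < deadline:
        try:
            with urllib.request.urlopen(url, timeout=2) as response:
                if response.status < 500:
                    return True
        except urllib.error.HTTPError as exc:
            # The server answered with an error status; a 4xx still means it is up.
            if exc.code < 500:
                return True
        except OSError:
            # Covers URLError (e.g. connection refused) and socket timeouts:
            # the server is not listening yet.
            pass
        time.sleep(interval_s)
    return False
```

In the workflow, you could replace `sleep 5` with a step that runs a small script calling `wait_for_server("http://127.0.0.1:8000/openapi.json")` and exits with a non-zero status when it returns False, so the job fails clearly instead of load testing a server that never came up.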

Maintaining Test Efficacy Over Time

- Regular Review of Test Scenarios: Regularly update your load testing scenarios to reflect any changes in user behavior or application functionality.

- Monitor Test Results: Continuously monitor the results and reports from LoadForge to ensure your application maintains its performance benchmarks.

- Iterate Based on Results: Use the insights gained from load testing to iteratively optimize your FastAPI application, focusing on identified bottlenecks and performance issues.
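To make "maintains its performance benchmarks" enforceable, a CI step can compare each run's metrics with a stored baseline (for example, a JSON file kept in the repository) and fail the build when a metric regresses beyond a tolerance. A minimal sketch; the metric names, the baseline numbers, and the 10% default tolerance are assumptions to replace with your own.

```python
# Metrics where a higher value is worse. Every other metric (e.g. throughput)
# is treated as higher-is-better, so a drop below the baseline is a regression.
HIGHER_IS_WORSE = {"avg_ms", "p95_ms", "error_rate"}


def find_regressions(
    baseline: dict[str, float],
    current: dict[str, float],
    tolerance: float = 0.10,
) -> list[str]:
    """Return one message per metric that is worse than baseline by more than `tolerance` (a fraction)."""
    problems = []
    for name, base in baseline.items():
        if name not in current:
            problems.append(f"{name}: missing from current run")
            continue
        value = current[name]
        if name in HIGHER_IS_WORSE:
            limit = base * (1 + tolerance)
            if value > limit:
                problems.append(f"{name}: {value:g} exceeds {limit:g} (baseline {base:g})")
        else:
            limit = base * (1 - tolerance)
            if value < limit:
                problems.append(f"{name}: {value:g} below {limit:g} (baseline {base:g})")
    return problems


baseline = {"p95_ms": 200.0, "error_rate": 0.01, "throughput_rps": 500.0}  # hypothetical stored numbers
current = {"p95_ms": 250.0, "error_rate": 0.008, "throughput_rps": 480.0}  # hypothetical new run
print(find_regressions(baseline, current))
# ['p95_ms: 250 exceeds 220 (baseline 200)']
```

In CI, a non-empty list would be printed and turned into a non-zero exit code so the job fails. Note that a baseline error rate of 0 makes any error a regression, which may or may not be the policy you want.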

Conclusion

Integrating LoadForge with your CI/CD pipeline enables continuous load testing for your FastAPI application. Doing so helps keep application performance consistent and verifies that it can handle traffic spikes, providing a reliable and efficient user experience. Remember, continuous load testing is not a one-time setup; it is an ongoing process that evolves with your application and enables proactive performance improvements.

Case Study: Success Story with LoadForge and FastAPI

Overview

In this case study, we'll explore how ACME Corp used LoadForge to successfully load test their FastAPI application, resulting in significant performance improvements and a robust, scalable API. ACME Corp is a medium-sized e-commerce company that recently transitioned from a monolithic architecture to microservices, choosing FastAPI for its blazing speed and asynchronous capabilities.

The Challenge

ACME Corp faced several challenges:

- Scalability: Their existing monolithic architecture couldn't handle increasing user demands during peak shopping seasons.

- Performance: Slow response times were affecting user experience, leading to cart abandonment and revenue loss.

- Error Rates: High error rates under load, particularly during flash sales.

They needed to ensure that their new FastAPI microservices were resilient and could handle high traffic volumes without performance dips or increased error rates.

The Solution

ACME Corp decided to use LoadForge for load testing their FastAPI application. Here's how they approached it:

- Preparation:

  - Set up a staging environment identical to their production setup.

  - Seeded the database with realistic data for accurate test results.

  - Configured the necessary environment variables and dependencies.

- Creating Load Scenarios:

  - Utilized LoadForge's user-friendly interface to create comprehensive test scenarios.

  - Simulated different types of user behavior, including browsing, placing orders, and account management.

  - Configured various requests, including GET and POST, across multiple endpoints.

{
  "scenarios": [
    {
      "name": "Browse Products",
      "requests": [
        {"method": "GET", "url": "/api/products"}
      ]
    },
    {
      "name": "Place Order",
      "requests": [
        {"method": "POST", "url": "/api/orders", "body": {"product_id": 123, "quantity": 2}}
      ]
    }
  ]
}

- Execution and Monitoring:

  - Executed the load tests during off-peak hours to avoid affecting ongoing development.

  - Monitored real-time performance metrics like response time, throughput, and error rates using LoadForge's dashboard.

loadforge start --test-config acme-load-test.json

- Analyzing Results:

  - Post-test, ACME Corp thoroughly analyzed LoadForge’s detailed reports.

  - Identified bottlenecks at multiple levels, including API endpoints and database queries.

  - Noticed that the /api/orders endpoint was significantly slower under load due to inefficient SQL queries.

- Optimization:

  - Optimized the inefficient SQL queries identified during load testing.

  - Implemented caching strategies for frequently accessed data.

  - Scaled microservices horizontally to distribute the load more evenly.

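# Example of caching implementation (fastapi-cache2 package)
from fastapi import FastAPI
from fastapi_cache import FastAPICache
from fastapi_cache.backends.inmemory import InMemoryBackend
from fastapi_cache.decorator import cache

app = FastAPI()

@app.on_event("startup")
async def startup():
    # Register a per-process in-memory cache backend once at startup
    FastAPICache.init(InMemoryBackend(), prefix="fastapi-cache")

@app.get("/api/products")
@cache(expire=60)  # serve cached responses for 60 seconds
async def list_products():
    return [{"id": 123, "name": "Example product"}]  # hypothetical placeholder data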

Positive Outcomes

After iterative testing and optimization, ACME Corp observed substantial improvements:

- Reduced Response Times: The average response time dropped from 500ms to 150ms.

- Increased Throughput: The system could handle 3x more requests per second compared to the initial setup.

- Lower Error Rates: Error rates decreased from 5% under load to less than 0.5%.

By leveraging LoadForge for comprehensive load testing, ACME Corp ensured their FastAPI application was not only high-performing but also resilient under heavy traffic. This directly translated to improved user experience, reduced cart abandonment, and increased revenue.

Conclusion

This case study underscores the importance of load testing with LoadForge to identify and rectify performance issues proactively. ACME Corp's successful implementation highlights how businesses can optimize their FastAPI applications to meet user demands effectively.

Conclusion and Next Steps

In this comprehensive guide, we have delved into the essentials of load testing FastAPI applications using LoadForge. Here is a summary of the key points we covered:

- Introduction to FastAPI: We explored what FastAPI is, highlighted its features, and discussed why it’s a popular choice for building APIs in Python. Understanding these basics set the stage for recognizing the importance of load testing.

- What is Load Testing?: We defined load testing and emphasized its significance in verifying that your FastAPI application can handle high traffic volumes. The key metrics we looked at include response time, throughput, and error rates.

- Why Choose LoadForge for Load Testing?: We outlined the benefits of using LoadForge, particularly its ease of use, scalable architecture, detailed analytics, and rich integrations with other tools in your development stack.

- Setting Up FastAPI for Load Testing: A step-by-step guide helped you prepare your FastAPI application for load testing, covering environment setup, necessary dependencies, and configuration.

- Creating LoadForge Test Scenarios for FastAPI: We walked through the process of creating load test scenarios tailored to FastAPI applications, including simulating user behaviors, setting up various request types, and configuring different load profiles.

- Running Your First Load Test with LoadForge: We gave detailed instructions for running load tests with LoadForge, from starting and monitoring tests to stopping them, and shared best practices for obtaining meaningful results.

- Analyzing Load Test Results: We explained how to interpret the reports generated by LoadForge, assess key metrics, and identify bottlenecks or performance issues in your FastAPI application.

- Optimizing FastAPI for Better Performance: We suggested practical strategies for optimizing FastAPI applications based on load testing insights, including code optimization, database query tuning, and infrastructure scaling.

- Running Continuous Load Testing with LoadForge and FastAPI: We explored the advantages of continuous load testing and discussed how to integrate LoadForge with your CI/CD pipeline for ongoing performance monitoring.

- Case Study: Success Story with LoadForge and FastAPI: A case study demonstrated the practical application of LoadForge for load testing a FastAPI application, showcasing the challenges faced, the solutions implemented, and the positive outcomes achieved.

Next Steps

To further enhance your knowledge and expertise in FastAPI and load testing with LoadForge, consider the following steps:

- Deepen Your FastAPI Knowledge:

- Master LoadForge:

  - Visit the LoadForge Documentation for detailed guides and advanced features.

  - Experiment with different load testing scenarios in LoadForge to see how your FastAPI application responds under various conditions.

- Continuous Improvement:

  - Regularly conduct load tests as part of your development cycle.

  - Integrate load testing into your CI/CD pipeline to catch performance issues early.

- Performance Optimization:

  - Refer to performance tuning techniques and continually refine your FastAPI application based on load testing insights.

  - Stay updated on best practices and advancements in backend optimization.

- Community Engagement:

  - Share your experiences and learn from others by participating in FastAPI and LoadForge communities.

  - Attend or view online webinars, tutorials, and workshops.

By following these steps, you'll be well on your way to building robust, high-performing APIs with FastAPI and ensuring their resilience under load with LoadForge. Happy testing!

For additional resources and further reading:

Embark on your journey to mastering FastAPI and load testing with LoadForge, and take your application’s performance to the next level!