Introduction

In today's digital landscape, ensuring that your web applications and services can handle high levels of traffic is crucial for maintaining performance and user satisfaction. HAProxy, a reliable and high-performance load balancer, is widely used to distribute traffic across backend servers. However, it's essential to validate that your HAProxy setup can efficiently handle the anticipated load. This is where distributed load testing comes into play.

In this guide, we will walk you through performing distributed load tests on HAProxy using LoadForge. LoadForge is a cloud-based load testing platform that leverages the power of locustfiles, enabling you to simulate extensive and diversified traffic on your HAProxy instance from various global locations. By following this guide, you will be able to:

- Validate Performance: Ensure your HAProxy instance can handle significant loads without performance degradation.

- Identify Bottlenecks: Detect potential issues in your HAProxy configuration, backend servers, or network setup.

- Optimize Configuration: Apply insights gained from load tests to refine and enhance your HAProxy setup.

Here’s a brief overview of what we’ll cover in this comprehensive guide:

- Prerequisites: Necessary tools and environments you need before starting.

- Setting Up Your HAProxy: Basic setup and configuration of your HAProxy instance.

- Creating Your Locustfile: Writing a locustfile to define your load test.

- Locustfile Code Example: A sample locustfile to test your HAProxy setup.

- Running the Test: Steps to execute your load test using LoadForge.

- Analyzing Test Results: Interpreting the test results to understand your HAProxy's performance.

- Optimizing Performance: Tips for enhancing your HAProxy configuration based on your test findings.

- Conclusion: Recap and next steps for continual performance monitoring and load testing.

By the end of this guide, you'll have a solid understanding of how to perform distributed load tests on your HAProxy setup using LoadForge and be equipped with the knowledge to ensure your system can scale effectively under load.

Let's get started!

Prerequisites

Before diving into the intricacies of load testing HAProxy with LoadForge, let's ensure you have everything you need to get started. A successful load test depends heavily on your initial setup, so it's crucial to check off all the essentials.

HAProxy Instance

- Running HAProxy Instance: Ensure you have an operational HAProxy instance. This means:
  - HAProxy is installed and configured.
  - Backend servers are set up.
  - HAProxy is running and can serve traffic efficiently.
- Network Accessibility: Verify that your HAProxy instance is accessible over the network from the LoadForge servers. This typically involves:
  - Configuring firewalls and security groups.
  - Ensuring DNS resolution (if applicable).
- Performance Checks: Confirm that your HAProxy setup can handle a substantial amount of traffic. It's advisable to perform lightweight tests initially to validate connectivity and basic performance.

LoadForge Account

To run your load tests on the LoadForge platform, you need:

- LoadForge Account:
  - Sign up for a LoadForge account at LoadForge Signup.
  - Verify your account through the provided email confirmation.
- API Access: Obtain API keys from your LoadForge account settings. These keys are essential for integrating your locustfiles with LoadForge's distributed testing infrastructure.

Basic Understanding

- Basic Locust Knowledge:
  - Familiarize yourself with Locust, an open-source load testing tool. Understanding Locust concepts such as User classes, tasks, and user behavior is crucial.
  - Locust Documentation is a great resource to get you started.
- Python Proficiency: Basic Python programming skills are required to write and customize your locustfile. This involves:
  - Writing and executing Python scripts.
  - Understanding basic Python concepts such as functions, classes, and modules.

Example Setup

To give you an idea of a minimal locustfile, here's a simple structure you might start with:

    from locust import HttpUser, task, between

    class MyUser(HttpUser):
        wait_time = between(1, 5)

        @task
        def index(self):
            self.client.get("/")

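This script defines a single user class whose simulated users make GET requests to the root endpoint of your server, waiting 1 to 5 seconds between requests. The number of simulated users is chosen when the test is run, not in the locustfile. Further customization will be done in later sections.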

Recap

Before we proceed, double-check that you have:

- An operational and network-accessible HAProxy instance.

- A LoadForge account with API access.

- A basic understanding of Locust and Python.

With these prerequisites in place, you're ready to set up your environment and create a custom locustfile tailored to your HAProxy load testing needs. Let's move forward to ensure your system can handle real-world traffic scenarios efficiently.

Setting Up Your HAProxy

In this section, we’ll ensure your HAProxy instance is properly configured and ready to handle significant load from distributed locations using LoadForge. Follow the steps below to set up and verify your HAProxy configuration.

Step 1: Configure Backend Servers

First, you need to set up your HAProxy configuration file with the details of your backend servers. Open your haproxy.cfg file and add your backend server configurations as shown below:

    frontend http_front
        bind *:80
        default_backend servers

    backend servers
        balance roundrobin
        server server1 192.168.1.1:80 check
        server server2 192.168.1.2:80 check

This configuration tells HAProxy to:

- Listen for HTTP traffic on port 80.

- Use a round-robin load balancing method to distribute requests to the backend servers (server1 and server2).
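To make the round-robin idea concrete, here is a minimal conceptual sketch in Python. It only illustrates the rotation order for the two server names from the backend section above. HAProxy's actual roundrobin algorithm also takes server weights and health-check state into account, which this sketch ignores.

```python
from itertools import cycle

# Server names taken from the example backend section.
servers = ["server1", "server2"]


def round_robin(names):
    """Return an iterator that yields server names in a fixed rotating order."""
    return cycle(names)


picker = round_robin(servers)
first_four = [next(picker) for _ in range(4)]
print(first_four)  # ['server1', 'server2', 'server1', 'server2']
```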

Step 2: Verify Network Accessibility

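Ensure that your HAProxy instance is accessible from the LoadForge servers. You can test this from a machine outside your network using basic tools like ping or curl, for example by pinging the instance's public IP address or requesting http://<haproxy_public_ip>/ with curl.

If you receive responses, your HAProxy instance is reachable.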
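If you prefer to script the reachability check, the following is a minimal sketch using only Python's standard library. The function name can_connect and the example address are illustrative assumptions. Note that it tests reachability from the machine where it runs, which is not necessarily the same network path the LoadForge servers will use.

```python
import socket


def can_connect(host: str, port: int = 80, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and DNS resolution failures.
        return False


# Example call (replace the placeholder with your HAProxy address):
#     can_connect("<haproxy_public_ip>", 80)
```

A True result only confirms that the port accepts TCP connections. It does not confirm that HAProxy is routing requests to healthy backends.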

Step 3: Performance Tuning

To ensure HAProxy can handle a significant load, you must fine-tune its configuration. Consider the following settings:

- Tune Maximum Connections: Increase the maximum number of concurrent connections HAProxy can handle.

      global
          maxconn 50000

- Timeouts: Set appropriate timeouts for client, server, and connection.

      defaults
          timeout connect 10s
          timeout client 30s
          timeout server 30s

Step 4: Testing HAProxy Configuration

Before running a LoadForge test against your instance, perform some basic load tests yourself using tools like ab (ApacheBench) or siege.

- Apache Benchmark Example:

      ab -n 1000 -c 100 http://<haproxy_public_ip>/

- Siege Example:

      siege -c 100 -t 1M http://<haproxy_public_ip>/

These tests will give you a preliminary understanding of how your HAProxy setup handles load.

Step 5: Confirm Logging Configuration

Ensure that HAProxy logging is enabled to capture detailed logs for analysis. Add the following snippet to your haproxy.cfg:

    global
        log /dev/log local0
        log /dev/log local1 notice

    defaults
        log global
        option httplog
        option dontlognull

With these steps, you have configured and verified that your HAProxy instance is ready to handle significant load. Moving forward, you can create your locustfile to simulate client requests from different geographical locations using LoadForge.

Creating Your Locustfile

In this section, we will create a locustfile, which is a Python script that defines the tasks and behavior of your load test. This locustfile will be responsible for simulating multiple clients accessing your HAProxy server from different locations.

Understanding the Components

A typical locustfile consists of the following components:

- Import Statements: Import the necessary names from the locust package, such as HttpUser, task, and between.
- User Class: Define a user class that inherits from HttpUser, encapsulating the behavior of a simulated user.
- Tasks: Within the user class, define tasks as methods.
- Test Settings: Set up attributes like wait_time to simulate user think time.

Creating the Locustfile

We will now create a locustfile named locustfile.py. This file will include an example of a basic load test script to simulate users making HTTP requests to your HAProxy server.

    from locust import HttpUser, TaskSet, task, between

    class UserBehavior(TaskSet):
        @task(1)
        def index(self):
            self.client.get("/")

        @task(2)
        def search(self):
            self.client.get("/search?q=load+testing")

    class WebsiteUser(HttpUser):
        tasks = [UserBehavior]
        wait_time = between(5, 15)

    # Optional: Add an on_start method if you have a login step or setup
    # class UserBehavior(TaskSet):
    #     def on_start(self):
    #         self.client.post("/login", {"username":"test_user", "password":"password"})

Explanation of the Code

- Import Statements: We import essential classes from the Locust library.

      from locust import HttpUser, TaskSet, task, between

- TaskSet Class: Defines user behavior. In this example, we have two tasks:
  - Access the homepage (/)
  - Perform a search query (/search?q=load+testing)

      class UserBehavior(TaskSet):
          @task(1)
          def index(self):
              self.client.get("/")

          @task(2)
          def search(self):
              self.client.get("/search?q=load+testing")

  The @task decorator specifies the weight of each task, so search will be performed about twice as often as index.

- HttpUser Class: Represents an individual user. We link the tasks attribute to the UserBehavior class and define the wait_time attribute, which simulates user think time between requests.

      class WebsiteUser(HttpUser):
          tasks = [UserBehavior]
          wait_time = between(5, 15)

Customizing Your Locustfile

You can customize the locustfile to reflect more complex scenarios such as:

- Using different HTTP methods (e.g., POST, PUT, DELETE).

- Simulating user sessions with login and logout.

- Testing endpoints with dynamic parameters.

For example, if your HAProxy setup includes an authentication mechanism, you might define an on_start method within your TaskSet class to log users in at the beginning of the test.

    class UserBehavior(TaskSet):
        def on_start(self):
            self.client.post("/login", {"username": "test_user", "password": "password"})

        @task(1)
        def index(self):
            self.client.get("/")

        @task(2)
        def search(self):
            self.client.get("/search?q=load+testing")

This offers greater realism in simulating user interactions with your HAProxy-proxied services.
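For the "dynamic parameters" case mentioned above, one possible approach is to build the request path from a pool of values. The sketch below uses only the standard library. The SEARCH_TERMS list and the random_search_path helper are hypothetical names introduced for illustration, and the /search endpoint is the one used in the earlier examples.

```python
import random
from urllib.parse import urlencode

# Hypothetical search terms; replace with queries that suit your application.
SEARCH_TERMS = ["load testing", "haproxy", "round robin"]


def random_search_path(rng=random):
    """Build a /search path with a randomly chosen, URL-encoded query term."""
    return "/search?" + urlencode({"q": rng.choice(SEARCH_TERMS)})


# Inside a Locust task this helper could be used as:
#     self.client.get(random_search_path(), name="/search?q=[term]")
# The name argument groups all query variants under one statistics entry.
```

Grouping by name keeps the results readable, since otherwise each distinct query string would appear as its own row in the request statistics.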

By understanding and following these steps, you'll be well on your way to creating effective load tests for your HAProxy setup using LoadForge. Next, we'll cover how to configure and run your tests on LoadForge's platform.

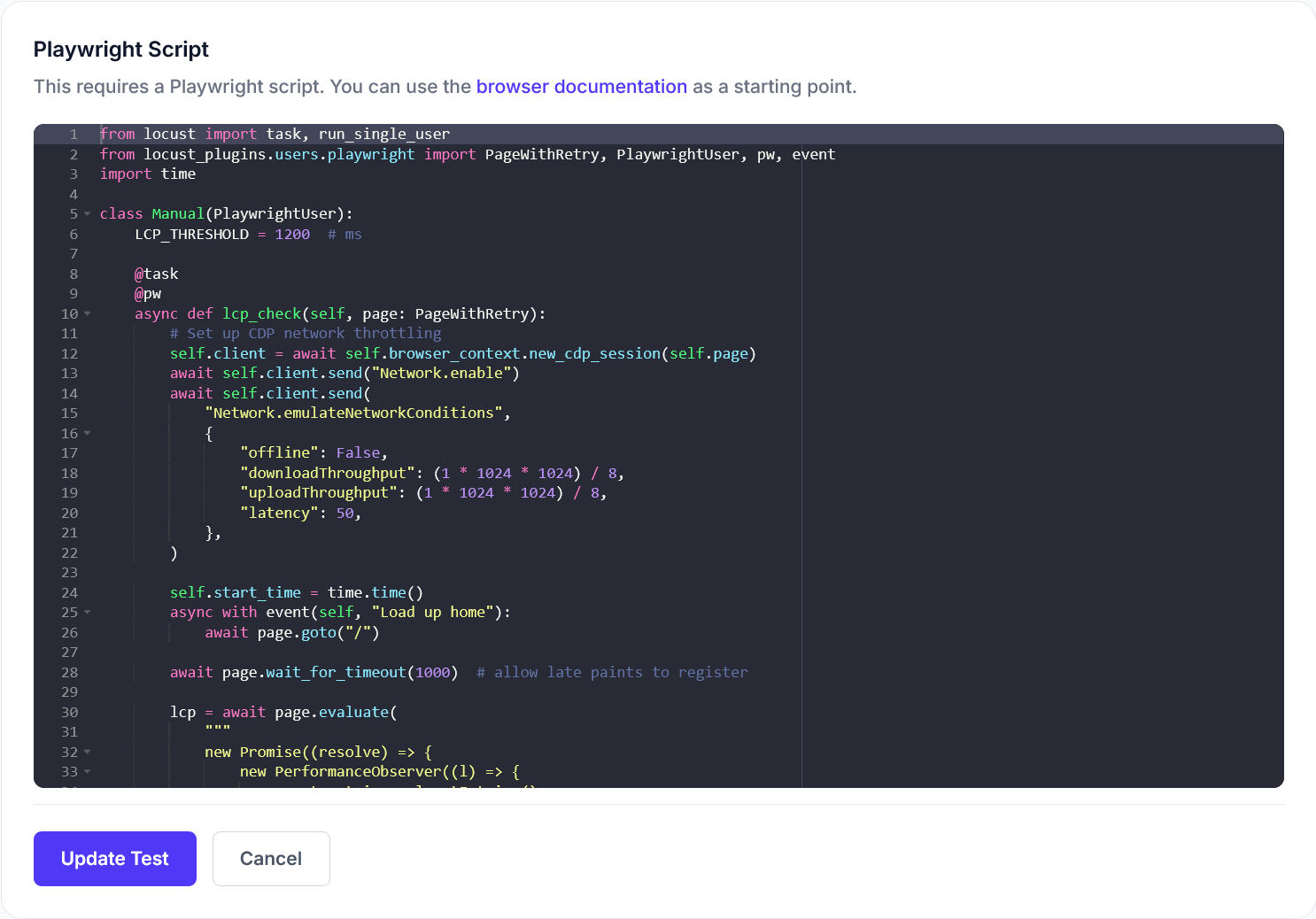

Locustfile Code Example

To load test your HAProxy setup, we need to create a locustfile. This locustfile is essentially a Python script that defines the tasks and behavior of your test, simulating multiple clients making HTTP requests to your HAProxy server. Below, we provide an example locustfile that you can use as a starting point.

Example Locustfile

Here is a simple locustfile that simulates users accessing a web application through HAProxy. This script will define a Locust user class with tasks that replicate typical user behavior:

    from locust import HttpUser, TaskSet, task, between

    class UserBehavior(TaskSet):
        @task(1)
        def index(self):
            self.client.get("/")

        @task(2)
        def about(self):
            self.client.get("/about")

        @task(3)
        def contact(self):
            self.client.get("/contact")

    class WebsiteUser(HttpUser):
        tasks = [UserBehavior]
        wait_time = between(1, 5)

Explanation

- Imports: We import the necessary names from Locust. HttpUser allows us to simulate HTTP user behavior, TaskSet helps group related tasks, and task is a decorator to mark methods as tasks. The between function introduces random waiting times between tasks.

- UserBehavior Class: This class encapsulates the behavior of the simulated users. In this example, we define three tasks representing HTTP GET requests to different endpoints (/, /about, /contact). Each task simulates a user visiting a specific page.
  - index task: Represents a GET request to the home page.
  - about task: Represents a GET request to the about page.
  - contact task: Represents a GET request to the contact page.

  The number within the task decorator (1, 2, or 3) indicates the relative weight of each task. In this case, the about page is requested about twice as often as the home page, and the contact page about three times as often.

- WebsiteUser Class: This class represents the user configuration. The tasks attribute indicates the TaskSet to use, and wait_time defines the random waiting time between executing tasks for each simulated user, mimicking human interaction delay.
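To see what the weights 1, 2, and 3 mean in practice, the following small sketch converts task weights into expected shares of all task executions. The task_shares helper is a hypothetical name. Because tasks are picked at random in proportion to their weights, these are long-run expectations rather than exact counts.

```python
def task_shares(weights):
    """Convert relative task weights into expected fractions of executions."""
    total = sum(weights.values())
    return {name: weight / total for name, weight in weights.items()}


shares = task_shares({"index": 1, "about": 2, "contact": 3})
for name, share in shares.items():
    print(f"{name}: {share:.1%}")
# index: 16.7%
# about: 33.3%
# contact: 50.0%
```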

Customizing the Locustfile

Depending on your HAProxy setup and the application it manages, you may want to:

- Add more tasks to simulate different user interactions.

- Include POST requests and simulate form submissions.

- Adjust the weight of each task to better reflect typical user behavior.

- Parameterize URLs and endpoints if your HAProxy setup involves multiple applications.

This locustfile serves as a basic template that you can expand and refine to better suit your testing requirements.

Conclusion

In this section, we created a basic locustfile to test your HAProxy setup. This script simulates users accessing different endpoints of your web application through HAProxy. In the next sections, we will guide you through running this locustfile on LoadForge and analyzing the results to ensure your HAProxy can handle the expected load efficiently.

Running the Test

After setting up your locustfile, we are ready to run the load test using LoadForge's platform. This section will guide you through uploading your locustfile to LoadForge, configuring the test parameters, and executing the distributed load test.

Step 1: Upload Your Locustfile to LoadForge

- Log In to LoadForge Account: Navigate to LoadForge and log in with your account credentials.
- Access the Load Test Dashboard: Once logged in, go to the Dashboard. From here, you can manage your load tests, view historical data, and initiate new tests.
- Upload Your Locustfile:
  - Click on the New Test button to start the process of setting up a new test.
  - You will be prompted to upload your locustfile. Click the Upload button and select the locustfile you created.

Step 2: Configure Test Parameters

- Test Configuration: After uploading your locustfile, you need to configure the test parameters. These parameters define how the test should be executed.
- Select Test Type: Choose the type of test you want to run. Options typically include stress tests, load tests, and scalability tests. For this guide, we'll focus on a basic load test.
- Set Number of Users: Define the number of users to simulate during the test. Start with a small number and gradually increase it to understand how your HAProxy setup handles different loads.
- Geographical Locations: LoadForge allows you to run tests from multiple locations worldwide. Select the regions from which you want to simulate traffic. This helps in assessing the global performance of your HAProxy.
- Test Duration: Specify the duration of the test. Typical load tests can run from a few minutes to several hours, depending on the objectives of your test.
- Ramp-up Time: Define the ramp-up time, which is the period over which the number of simulated users will gradually increase to the target number. This helps in observing how the system reacts to increasing traffic.
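As a rough illustration of how user count and ramp-up time interact, the sketch below computes the number of active users at a given moment, assuming a simple linear ramp. The linear shape, the users_at helper, and the example numbers are assumptions for illustration. The platform's actual ramp behavior may differ.

```python
def users_at(elapsed_s: float, target_users: int, ramp_up_s: float) -> int:
    """Approximate active users after elapsed_s seconds of a linear ramp-up."""
    if ramp_up_s <= 0 or elapsed_s >= ramp_up_s:
        return target_users
    if elapsed_s <= 0:
        return 0
    return int(target_users * elapsed_s / ramp_up_s)


# Hypothetical test: 500 users ramped up over 100 seconds.
for t in (0, 25, 50, 100, 200):
    print(t, users_at(t, 500, 100))
# 0 0
# 25 125
# 50 250
# 100 500
# 200 500
```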

Step 3: Execute the Distributed Load Test

- Review Settings: Before initiating the test, review all the parameters and settings to ensure everything is configured correctly.
- Start Test: Click the Start Test button to begin the load test. LoadForge will distribute the load across its infrastructure and start sending traffic to your HAProxy server according to the specified parameters.
- Monitor Test Progress: As the test runs, you can monitor its progress in real-time from the LoadForge dashboard. Metrics such as the number of requests per second, response times, and errors will be displayed.

Example Monitoring Dashboard

| Metric | Value |
| --- | --- |
| Requests per Second | 2000 |
| Average Response Time | 350ms |
| Error Rate | 0.5% |

By following these steps, you'll be able to effectively run a distributed load test on your HAProxy setup using LoadForge. This process will provide you with the necessary insights to ensure your HAProxy can handle high traffic levels efficiently.

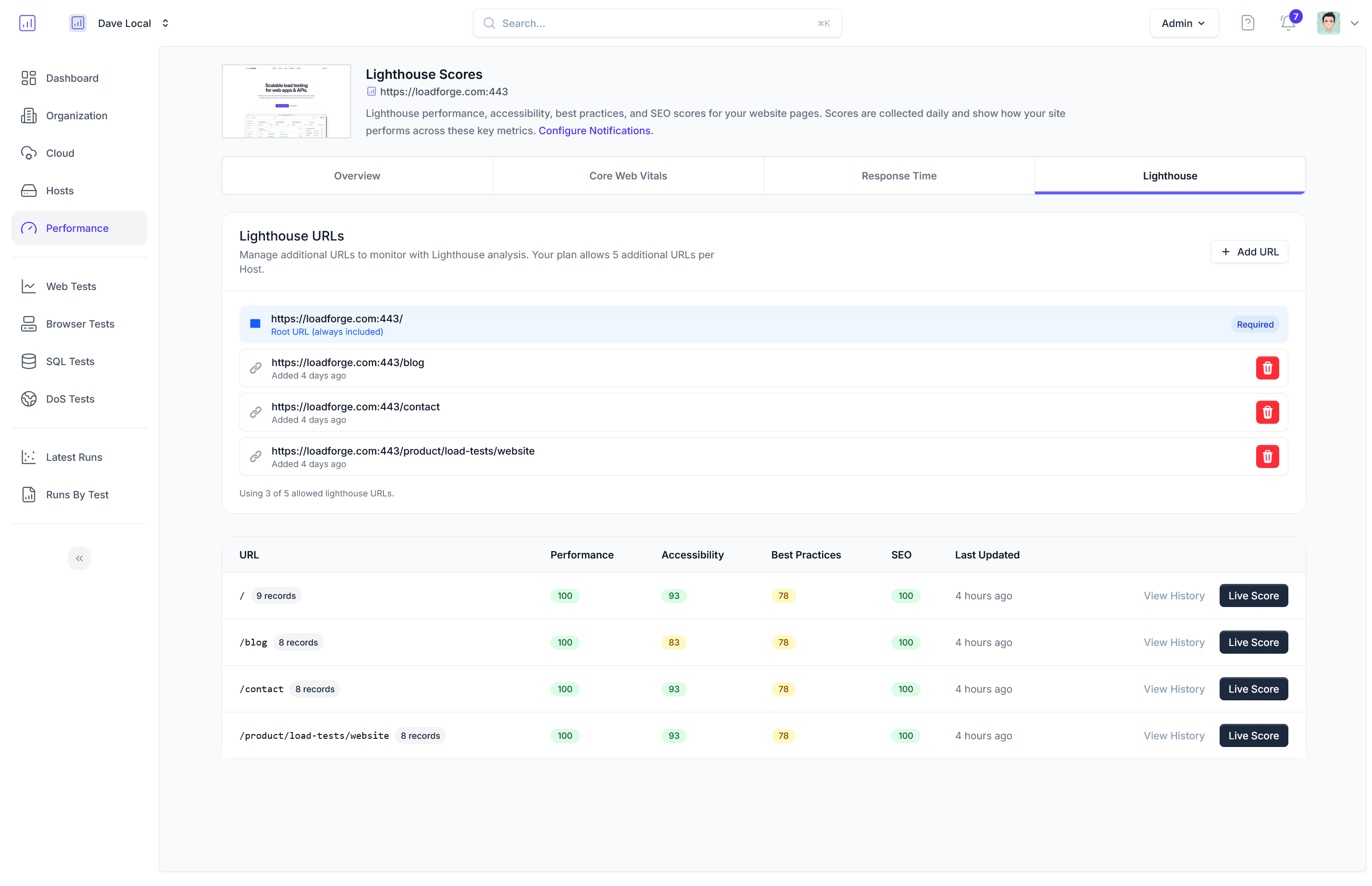

## Analyzing Test Results

Once your load test is complete, LoadForge provides comprehensive reports and analytics to help you understand the performance of your HAProxy setup under load. Analyzing these results is crucial to identify any bottlenecks and areas for optimization. In this section, we will review key performance metrics including response times, error rates, and throughput.

### Understanding the LoadForge Report

The LoadForge platform presents a detailed report once your load test has run to completion. Here's a breakdown of the key sections you'll need to focus on:

1. **Response Times**:

- **Minimum, Average, and Maximum Response Times**: These metrics show the range of latency experienced by users during the test.

- **Percentiles (e.g., 50th, 95th, 99th)**: Each percentile is the response time at or below which that percentage of requests completed. For instance, the 95th percentile is the time within which 95% of requests were completed.

2. **Throughput**:

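- **Requests per Second (RPS)**: This metric tells you how many requests your HAProxy handled per second. RPS depends on how much load the test generated, so read it together with response times and error rates: a higher sustained RPS at stable latency and a low error rate indicates better performance.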

- **Bandwidth Utilization**: This shows the amount of data transferred per unit time, measured in bytes per second (BPS). It helps you understand the network load on HAProxy.

3. **Error Rates**:

- **Total Errors**: The aggregate count of failed requests during the load test, including connection timeouts, client errors, and server errors.

- **Types of Errors**: A breakdown of different error types, such as connection timeouts, 4xx (client errors), and 5xx (server errors).
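To make the percentile definition concrete, here is a minimal sketch that computes percentiles from a list of response times using the nearest-rank method. The sample values are made up for illustration, and reporting tools may use a different interpolation method, so their figures can differ slightly from this calculation.

```python
import math


def percentile(samples, pct):
    """Nearest-rank percentile: smallest value with at least pct% of samples at or below it."""
    if not samples:
        raise ValueError("samples must not be empty")
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


# Hypothetical response times in milliseconds.
response_times_ms = [120, 95, 340, 210, 180, 990, 150, 400, 130, 160]
print(percentile(response_times_ms, 50))  # 160
print(percentile(response_times_ms, 95))  # 990
```

The single slow request (990ms) barely moves the median but dominates the 95th percentile, which is why the higher percentiles are useful for spotting outliers.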

### Example of Test Results

Below is a hypothetical output snippet from a LoadForge test result:

```json
{
  "response_times": {
    "min": 102,
    "average": 350,
    "max": 1050,
    "percentiles": {
      "50": 340,
      "95": 700,
      "99": 900
    }
  },
  "throughput": {
    "requests_per_second": 130,
    "bandwidth_utilization": 10500
  },
  "error_rates": {
    "total_errors": 3,
    "error_breakdown": {
      "connection_timeouts": 1,
      "client_errors": 2,
      "server_errors": 0
    }
  }
}
```

Key Insights

- Response Times: In the example above, the average response time of 350ms is reasonable, but the max response time of 1050ms suggests some requests experienced significant delays. Focus on reducing the higher percentiles (95th and 99th) to ensure a consistent user experience.
- Throughput: An RPS of 130 shows that your HAProxy setup sustained 130 requests per second under this test's load. On its own it does not show the maximum rate the setup can handle. Bandwidth utilization of 10500 BPS (roughly 10.5 KB per second) is small relative to typical network links, so the network is unlikely to be the limiting factor in this run.
- Error Rates: With a low total error count and no server errors, your HAProxy setup is mostly reliable. However, you should investigate the cause of the client errors and the connection timeout.

Advanced Reporting Metrics

LoadForge also provides advanced metrics and visualizations that can help you dig deeper:

- Time Series Graphs: Track performance metrics over the duration of the test.

- Heat Maps: Visualize response time or error rate concentrations.

- Geographical Distribution: Analyze performance based on the geographic location of the load sources.

Actionable Steps

- Identify Bottlenecks: Look for high latency or error concentration points.

- Compare Against SLAs: Ensure your performance metrics align with your Service Level Agreements (SLAs).

- Optimize Configurations: Based on the insights, tweak your HAProxy configurations, such as adjusting timeouts or load balancing algorithms.

- Re-Test: Run additional tests to verify if optimizations have improved the performance.
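The "Compare Against SLAs" step can be automated with a small check. The sketch below uses a trimmed copy of the hypothetical result structure shown earlier in this section. The SLA limits and the sla_violations helper are illustrative assumptions, not values or functions provided by LoadForge.

```python
# Trimmed copy of the hypothetical test result from the example above.
report = {
    "response_times": {"percentiles": {"50": 340, "95": 700, "99": 900}},
    "error_rates": {"total_errors": 3},
}

# Hypothetical SLA limits; replace with your own targets.
sla = {"p95_ms": 800, "p99_ms": 1000, "max_errors": 5}


def sla_violations(report, sla):
    """Return a list of human-readable SLA violations (empty if all limits are met)."""
    violations = []
    pcts = report["response_times"]["percentiles"]
    if pcts["95"] > sla["p95_ms"]:
        violations.append(f"p95 {pcts['95']}ms exceeds {sla['p95_ms']}ms")
    if pcts["99"] > sla["p99_ms"]:
        violations.append(f"p99 {pcts['99']}ms exceeds {sla['p99_ms']}ms")
    errors = report["error_rates"]["total_errors"]
    if errors > sla["max_errors"]:
        violations.append(f"{errors} errors exceed limit of {sla['max_errors']}")
    return violations


print(sla_violations(report, sla))  # []
```

Running the same check after each re-test gives a quick pass or fail signal for whether an optimization helped.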

Conclusion

By thoroughly analyzing your LoadForge test results, you can gain valuable insights into the performance and resilience of your HAProxy setup. This will help you make informed decisions to optimize your infrastructure for handling increased traffic loads efficiently.

Optimizing Performance

The test results show where your HAProxy setup may need improvement to handle higher traffic loads. Here are some tips and strategies for optimizing your HAProxy configuration:

1. Tuning HAProxy Configuration

Fine-Tuning Timeouts

Optimize HAProxy timeouts to ensure that idle connections are closed promptly, freeing up resources. Adjust the timeout client, timeout server, and timeout connect parameters:

    timeout connect 5000ms
    timeout client 50000ms
    timeout server 50000ms

Max Connections

Ensure that HAProxy is configured to handle a high number of concurrent connections by setting the maxconn parameter adequately.

    global
        maxconn 10000

2. Backend Server Optimization

Load Balancing Algorithms

Choose the appropriate load-balancing algorithm for your use case. For instance, use leastconn for services with long-lived connections or roundrobin for even distribution.

    backend my_backend
        balance roundrobin
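To illustrate why leastconn suits long-lived connections, here is a minimal conceptual sketch of its selection rule: send the next connection to the server that currently has the fewest active connections. The server names and connection counts are hypothetical, and HAProxy's real implementation also considers server weights and health, which this sketch leaves out.

```python
def pick_least_conn(active_connections):
    """Return the server name with the fewest active connections."""
    return min(active_connections, key=active_connections.get)


# Hypothetical snapshot of active connections per backend server.
active = {"srv1": 12, "srv2": 7}

chosen = pick_least_conn(active)
active[chosen] += 1  # the new connection is now counted against that server
print(chosen)  # srv2
```

With round-robin, srv1 would keep receiving every second connection even while it is busier. With least-connections, new work drifts toward the less loaded server until the counts even out.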

Server Health Checks

Enable health checks to ensure that traffic is only sent to healthy backend servers.

    backend my_backend
        server srv1 192.168.1.1:80 check
        server srv2 192.168.1.2:80 check

3. SSL/TLS Configuration

If your HAProxy instance terminates SSL/TLS, ensure that it is optimized for performance. Use modern ciphers and enable session reuse.

    frontend my_frontend
        bind *:443 ssl crt /path/to/cert.pem
        http-request set-header X-Forwarded-Proto https
        http-request add-header X-SSL-Cipher %[ssl_fc_cipher]

4. Caching Mechanisms

Consider using HAProxy's built-in caching to reduce load on backend servers. This is particularly useful for static content.

    backend static_cache
        http-request cache-use my_cache
        http-response cache-store my_cache

    cache my_cache
        total-max-size 100
        max-age 240

5. Resource Monitoring and Scaling

Resource Utilization

Monitor resource utilization (CPU, Memory, Network I/O) on both HAProxy and backend servers. Identify bottlenecks and scale up resources as needed.

Auto-Scaling

Leverage auto-scaling mechanisms to dynamically adjust the number of HAProxy instances based on load.

6. Log Analysis and Continuous Monitoring

Regularly analyze HAProxy logs to identify trends or issues. Utilize logging features such as HTTP response codes, timings, and request paths for detailed insights.

    global
        log /dev/log local0
        log /dev/log local1 notice

Enable continuous monitoring and alerting to respond proactively to performance issues. Consider integrating with monitoring platforms like Prometheus and Grafana for comprehensive dashboards.
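As a starting point for log analysis, the sketch below summarizes status codes and total request times from HAProxy HTTP log lines. It assumes the default format produced by option httplog, in which five slash-separated timers (the last being the total time in milliseconds) are followed by the status code. The sample lines are made up for illustration. If you use a custom log-format, the pattern will need adjusting.

```python
import re
from collections import Counter

# Five slash-separated timers followed by a three-digit status code,
# as found in the default "option httplog" format (an assumption here).
TIMERS_AND_STATUS = re.compile(
    r" (-?\d+)/(-?\d+)/(-?\d+)/(-?\d+)/(\+?-?\d+) (\d{3}) "
)


def summarize(lines):
    """Count responses per status class and collect total times in ms."""
    status_classes = Counter()
    total_times_ms = []
    for line in lines:
        match = TIMERS_AND_STATUS.search(line)
        if match is None:
            continue  # skip lines that do not look like HTTP log entries
        status_classes[match.group(6)[0] + "xx"] += 1
        total_times_ms.append(int(match.group(5)))
    return status_classes, total_times_ms


# Illustrative sample lines, not real log output.
sample_lines = [
    'haproxy[14389]: 10.0.1.2:33317 [06/Feb/2009:12:14:14.655] http_front '
    'servers/server1 10/0/30/69/109 200 2750 - - ---- 1/1/1/1/0 0/0 '
    '"GET / HTTP/1.1"',
    'haproxy[14389]: 10.0.1.2:33318 [06/Feb/2009:12:14:15.102] http_front '
    'servers/server2 12/0/28/450/490 503 212 - - ---- 2/2/1/1/0 0/0 '
    '"GET /search?q=load+testing HTTP/1.1"',
]

statuses, times = summarize(sample_lines)
print(dict(statuses), times)
```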

Conclusion

By carefully analyzing the results of your load tests and implementing the strategies outlined above, you can significantly enhance the performance of your HAProxy setup. Optimizing configurations, resource management, and continuous monitoring are key to ensuring that your HAProxy instance can efficiently handle high traffic loads.

Conclusion

In this guide, we've walked through the steps necessary to perform distributed load tests on your HAProxy setup using the LoadForge platform. Here's a quick summary of the key points we covered:

- Introduction to Load Testing on HAProxy: Understanding the importance of load testing for ensuring the reliability and scalability of your HAProxy setup.

- Prerequisites: Ensuring that you have an operational HAProxy instance, a LoadForge account, and a basic understanding of locust and Python.

- Setting Up Your HAProxy: Making sure your HAProxy is properly configured and capable of handling substantial loads.

- Creating Your Locustfile: Writing a locustfile to define the behavior and tasks of your load test, simulating multiple clients accessing your HAProxy server.

- Locustfile Code Example: Providing a practical example of a locustfile to be used in your load testing.

- Running the Test: Using LoadForge's platform to upload your locustfile, configure test parameters, and execute a distributed load test.

- Analyzing Test Results: Reviewing the detailed reports and analytics provided by LoadForge to assess the performance of your HAProxy setup under load.

- Optimizing Performance: Offering strategies and tips for improving your HAProxy configuration based on the test results to better handle high traffic loads.

Load testing is a critical step in validating the performance and robustness of your HAProxy deployment. By leveraging LoadForge's distributed testing capabilities, you can simulate real-world traffic conditions from multiple geographical locations, ensuring that your HAProxy setup can handle the expected load effortlessly. Moreover, continuous performance monitoring and iterative optimization based on testing results will help you maintain a scalable and reliable system.

In essence, integrating rigorous load testing and performance monitoring into your development lifecycle not only helps you identify and rectify potential bottlenecks but also provides assurance that your HAProxy setup can meet the demands of your users, even during peak traffic periods.

By following the steps detailed in this guide, you are well on your way to achieving a robust and scalable HAProxy infrastructure capable of delivering consistent performance under varying loads. Happy testing!